Showing results for tags 'machine vision'.

I am trying to migrate from LV2014 to LV2020. When I try to open an Allied Vision Manta_G 145B camera in NI-MAX, I get Error 0xBFF6901D "unable to load xml file". The IMAQdx version is 20.6 (RT + Dev). Basler cameras work fine. I have upgraded the camera firmware to the newest version, but it didn't help. I have also installed IMAQdx 21.0, without success. A Manta-032B works without problems. Any help or workaround is most welcome. Is there any way to load the XML file manually?

Hello everyone, we have started developing our own embedded MIPI camera module for the Raspberry Pi with an industrial image sensor. We are currently in the specification phase and are interested in your preferences, so that we develop a product that fits your requirements. If you have some time, please consider filling in this 4-question questionnaire.

Hello, I'm trying to implement object tracking in LabVIEW. I've written a VI (attached) to detect and locate the centroid of elliptical shapes (my circular subject can be rotated and then appears as an ellipse). However, the detection does not succeed on every frame. For this reason, I would like to use my object detection method as an ROI reference for an object tracker, with the detector running every 10-20 frames or so to make sure the tracker has not accumulated too much error. Any advice on how I can do this? Additionally, any criticism or advice on my object detection method would be appreciated. Thanks! (Attachment: Vision.vi)
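One common way to combine a slow but reliable detector with a frame-to-frame tracker is sketched below in Python, as a minimal sketch of the logic only (not LabVIEW code). It assumes the detector is a function returning a `(row, col)` centroid, or `None` when detection fails, standing in for the ellipse-detection VI, and it uses plain sum-of-squared-differences template matching in a small search window as the tracker. `track`, `match_template`, and `crop` are hypothetical names, not NI Vision functions.

```python
import numpy as np

def crop(frame, center, half):
    """Return the (2*half+1)-square patch around center (row, col), or None if it leaves the frame."""
    r, c = center
    if r - half < 0 or c - half < 0 or r + half >= frame.shape[0] or c + half >= frame.shape[1]:
        return None
    return frame[r - half:r + half + 1, c - half:c + half + 1].astype(float)

def match_template(frame, template, center, radius):
    """Exhaustive sum-of-squared-differences search within +/- radius of center."""
    half = template.shape[0] // 2
    best_ssd, best_pos = None, center
    for r in range(center[0] - radius, center[0] + radius + 1):
        for c in range(center[1] - radius, center[1] + radius + 1):
            patch = crop(frame, (r, c), half)
            if patch is None:
                continue  # candidate window falls outside the frame
            ssd = float(np.sum((patch - template) ** 2))
            if best_ssd is None or ssd < best_ssd:
                best_ssd, best_pos = ssd, (r, c)
    return best_pos

def track(frames, detect, redetect_every=15, half=5, radius=4):
    """Run `detect` every `redetect_every` frames (or whenever the track is lost)
    and follow the object with template matching in between."""
    pos, template, positions = None, None, []
    for i, frame in enumerate(frames):
        detected = detect(frame) if (i % redetect_every == 0 or pos is None) else None
        if detected is not None:
            pos = detected                      # detector result resets accumulated drift
        elif pos is not None and template is not None:
            pos = match_template(frame, template, pos, radius)
        if pos is not None:
            new_template = crop(frame, pos, half)
            if new_template is not None:
                template = new_template         # refresh the appearance model
        positions.append(pos)
    return positions
```

Because the template is refreshed every frame, small matching errors can accumulate; the periodic detector call is what resets that drift, and a failed detection simply falls back to the tracker. In NI Vision, a pattern-matching VI could plausibly take the role of `match_template`, but that mapping is an assumption.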

Hello, I have attached the VI in which I want to implement auto exposure and apply the resulting exposure value. It is a program for laser beam analysis on a live image, and I want to set the exposure time according to the laser beam intensity. If anyone has worked on this before, please help me with it; I am new to image processing. The other image I attached shows the part I want to implement in this program. Thanks, Basic_Camera_Live_Beam_Information.vi

Tagged with: machine vision, auto exposure (and 2 more)

Hello, for research purposes I am using an Imaging Source (DMK 33Ux249) camera to capture a laser beam. I am trying to write code for auto exposure according to the power level of the laser beam. I used Vision Acquisition to capture live video and tried to open the front panel of the Vision Acquisition VI, but I couldn't figure out how to set the exposure level automatically. The whole task is: 1. capture a live image; 2. set the exposure time according to the laser beam profile; 3. remember the exposure time and set it again according to the next frame or beam profile. If anybody has worked on this before or has an idea how to solve it, please let me know. Thanks
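Steps 2 and 3 can be sketched as a simple proportional controller, written here in Python for illustration. It assumes the sensor responds roughly linearly to exposure time below saturation, uses the frame maximum as the beam's peak value (a high percentile such as the 99.9th would be more robust to hot pixels), and aims for a peak at 80% of full scale. `next_exposure` and `simulated_peak` are hypothetical names; on a real camera the new value would be written to the camera's exposure attribute through IMAQdx, and the exact attribute name depends on the camera.

```python
def next_exposure(exposure_us, peak, full_scale=255, target=0.8,
                  min_us=10.0, max_us=1_000_000.0):
    """Scale the exposure so the brightest beam pixel lands near target*full_scale.

    Assumes pixel values grow roughly linearly with exposure time below saturation.
    """
    if peak >= full_scale:
        # Saturated: the true peak is unknown, so halve the exposure and re-measure.
        new_us = exposure_us / 2.0
    else:
        desired = target * full_scale
        # max() avoids division by zero on a completely dark frame.
        new_us = exposure_us * desired / max(peak, 1)
    return min(max(new_us, min_us), max_us)

def simulated_peak(exposure_us, counts_per_us=0.05, full_scale=255):
    """Hypothetical camera model: peak pixel value grows linearly, then clips."""
    return min(full_scale, counts_per_us * exposure_us)

if __name__ == "__main__":
    exposure = 100.0
    for frame_no in range(4):
        peak = simulated_peak(exposure)          # step 1: grab a frame, take its peak
        print(f"frame {frame_no}: exposure {exposure:.0f} us, peak {peak:.0f}")
        exposure = next_exposure(exposure, peak)  # steps 2-3: adjust and carry forward
```

Carrying `exposure` from one iteration to the next is what "remember the exposure time" amounts to; in practice a damping factor or a tolerance band around the target can keep the value from hunting on noisy frames.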

4 replies

Tagged with: machine vision, auto exposure (and 2 more)

Hello all, I am trying to remove the background and normalize the image data. I have attached an image. The end result I want is a normalized image with no background. Finally, I want to compare the beam profile before and after. Has anybody worked on this before? Any VI? Any help would be appreciated. Thanks in advance
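A minimal background-removal and normalization sketch in Python/NumPy, assuming the beam does not reach the image border, so that the median of a border strip is a fair estimate of a flat background. Pixels are offset by that estimate, clipped at zero, and divided by the peak so the result spans 0 to 1. The function name and the `border` width are illustrative choices, not taken from the attached image or any VI.

```python
import numpy as np

def remove_background_and_normalize(img, border=10):
    """Subtract a flat background estimated from the image border, then scale to [0, 1]."""
    img = np.asarray(img, dtype=float)
    edges = np.concatenate([
        img[:border].ravel(), img[-border:].ravel(),        # top and bottom strips
        img[:, :border].ravel(), img[:, -border:].ravel(),  # left and right strips
    ])
    background = np.median(edges)               # robust to a few bright pixels in the strip
    cleaned = np.clip(img - background, 0, None)  # negative residue becomes 0
    peak = cleaned.max()
    return cleaned / peak if peak > 0 else cleaned
```

Comparing the beam profile before and after could then be as simple as plotting the row through the peak of the original and of the normalized image. A non-uniform background would instead call for subtracting a separately captured background frame.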

10 replies

Tagged with: laser beam profile, machine vision (and 3 more)

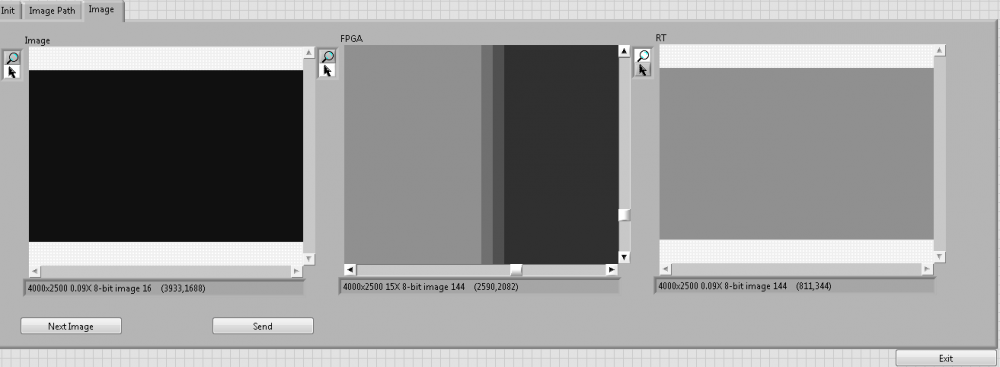

Hi, I am trying to use image convolution inside an FPGA. My image size is around 6k x 2k. The convolution is applied correctly up to about 2600 pixels in x; beyond that, the output appears to be missing data from the previous rows. In detail: because convolution is a neighborhood operation, previous image rows need to be stored for it. But it seems that, through some unintended error, only 2600 pixels per row are stored inside the FPGA, so the filtered output is calculated as if the remaining pixels were 0. I have tried different image sizes, different convolution kernels, and different targets (cRIO 9030 and IC 3173). All results are the same. I have attached a screenshot of the FPGA VI and an example image. The example input image is 4000x2500 with a constant pixel value of 16. The kernel is 3x3 with all values 1 and divider = 1. The RT image is processed with IMAQ Convolute on the RT controller and has the value 144 [(9*16)/1] for all pixels. But the FPGA-processed image (zoomed in) has 144 up to pixel 2597, then 112 at 2598 (7*16: one column of the two previous rows missing), 80 at 2599 (5*16: two columns of the two previous rows missing), and 48 after that (3*16: three columns of the two previous rows missing; the current row is always present). This shows that data from the previous rows is missing beyond roughly index 2600. Is there some mistake in the code, or is there a workaround?
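The 144 → 112 → 80 → 48 step pattern can be reproduced with a small model, sketched here in Python. It assumes, hypothetically, that the two previous-row line buffers hold only `capacity` pixels and read back as 0 beyond that, while the current row is always available. With a centred 3x3 all-ones kernel over a constant image of 16, each missing column of the two previous rows removes 2 × 16 = 32 from the sum of 144.

```python
def simulate_row(width, value, capacity):
    """Output of a 3x3 all-ones kernel (divider 1) for one interior row of a
    constant image, when the two previous-row line buffers only hold
    `capacity` pixels and read back 0 beyond that (hypothetical model)."""
    out = []
    for x in range(1, width - 1):           # centred 3x3 window; edge columns skipped
        total = 0
        for dx in (-1, 0, 1):
            col = x + dx
            total += value                   # current row: always available
            prev = value if col < capacity else 0
            total += 2 * prev                # two previous rows from the line buffers
        out.append(total)
    return out

if __name__ == "__main__":
    # Small stand-in for the 4000-pixel rows: buffers that hold only 6 pixels.
    print(simulate_row(10, 16, capacity=6))
```

If the modelled step pattern matches the real output, the natural place to look is whatever sets the depth of the previous-row storage (memory or FIFO size) in the FPGA VI; that is an inference from the model, not a confirmed diagnosis.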

1 reply

Tagged with: image convolute, fpga (and 1 more)

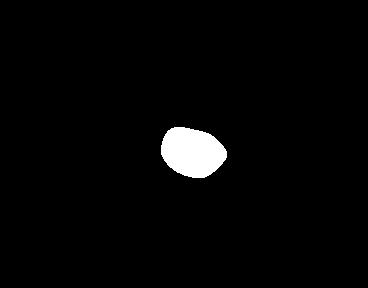

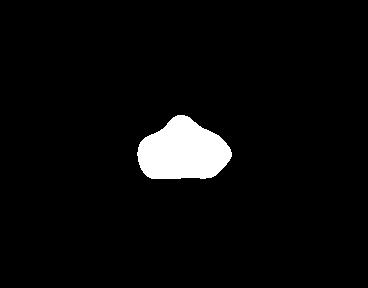

Hi! I have a video of a laser beam spot. The images contain noise. I need only the central bright portion of the image, as the other circular portions are noise. After eliminating the noise, I have to find the area of the spot by counting the bright pixels in the image. I have tried to do this, but the output image after processing looks wrong. Ideally it should be black and red, since I applied a threshold. Can you help me with this? I also need help counting the bright pixels to find the area. I tried converting the image into a 1D array and adding up the array elements, but again the result is wrong. The black-and-white images are the required output images. Inside Vision Assistant I see them as black and red (which is the same thing), but when they are displayed on the front panel I do not get the same image. Video_Final2.vi Video2.avi
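A minimal sketch of the area measurement in Python/NumPy: threshold the frame, keep only the connected bright region that contains the brightest pixel (assumed here to be the central spot, so the surrounding rings are discarded), and count its pixels. The 4-connectivity flood fill and the name `central_spot_area` are illustrative choices. On the counting problem: summing a thresholded image gives the pixel count only if foreground pixels have the value 1; if they are stored as 255, or the sum is taken over the original grayscale image, the total will be off. A value of 1 is also what typically shows as red under NI Vision's binary palette, which may explain the black-and-red view.

```python
from collections import deque

import numpy as np

def central_spot_area(img, threshold):
    """Count pixels in the thresholded region connected to the brightest pixel.

    Returns (area_in_pixels, boolean_mask_of_the_spot).
    """
    img = np.asarray(img)
    mask = img >= threshold
    seed = np.unravel_index(np.argmax(img), img.shape)   # brightest pixel = spot centre
    spot = np.zeros_like(mask)
    if not mask[seed]:
        return 0, spot
    spot[seed] = True
    queue = deque([seed])
    h, w = mask.shape
    while queue:                                          # 4-connected flood fill
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and mask[nr, nc] and not spot[nr, nc]:
                spot[nr, nc] = True
                queue.append((nr, nc))
    return int(spot.sum()), spot                          # count of True pixels
```

Multiplying the pixel count by the area of one pixel in the object plane converts it to physical units.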

Freelance/consultancy help needed at our Southern California location. I have a project where I need to take an existing LabVIEW solution and adapt it to make the application FDA 21 CFR Part 11 compliant. The application is a machine vision solution using the Vision Development Module. The vision part is working well and doesn't need to be replaced. The system uses machine vision to inspect pharmaceutical injection components. I'm looking for an engineer with experience in developing, qualifying and validating Part 11-compliant systems, including the addition of audit trail and data integrity functionality. I wrote all of the original LabVIEW code, so you would be working directly with me to implement the solution. I work at Amgen, a large biotechnology/pharmaceutical company based just north of Los Angeles. I prefer to work with engineers located within reasonable driving range of our facility; however, due to the niche nature of this project, we can probably be somewhat flexible with distance. If you are interested, please contact me and I will call you to discuss the project in more depth.

Tagged with: imaq, machine vision (and 3 more)

I would like to upgrade my current CCD (pretty close to 640x480) to a higher-resolution model (1080p). Here are my two requirements: it has to work with LabVIEW/Vision software (it could interface through GigE/IP or whatever), and it has to fit inside a tube about this size (marker for scale: http://i.imgur.com/9i8nPRE.jpg). The housing tube can be increased in size up to about 1.25" in diameter. The tube runs back 2' until it opens into an open space where we have a gigabit switch. The camera could have a telescoping lens, or be small enough to fit in a tube that size with the cables running out the back of the tube. I have been searching hobby sites and small electronics sites/stores, but nothing has caught my eye yet. I am hoping someone here may be using a CCD (maybe for security purposes?) that does close to what I need. Thanks for any help!

2 replies

Tagged with: imaq, machine vision (and 3 more)

In my job, I develop in-house machine vision and image processing solutions for my company. In industrial machine vision and inspection there are several main players (Cognex, Keyence, Matrox, Halcon, etc.). LabVIEW and IMAQ are not totally out of the picture, but they are far from common in manufacturing applications. I try to use LabVIEW whenever I can because I find it great for quickly prototyping proof-of-concept systems. I use the Vision Acquisition and Vision Development Module toolkits extensively. For the most part, the functions provided by NI meet my needs. Increasingly, though, I find myself pushing their limits and yearning for the functionality offered by some of the competition. In some cases I can leverage these external libraries, but that can be cumbersome. Aside from a couple of older, paid LabVIEW libraries from third-party companies, I can't find much out there to complement the IMAQ palettes. 1.) Are there quality VIs out there that I'm just not seeing? 2.) Is there an appetite for them? 3.) Would anyone be interested in working (with me or otherwise) on an open-source image processing toolkit?

Hello. I'm doing a project in which I want to create the pattern I attached. I need to know the coordinates of each of the nine points in the pattern. If anyone can give me an idea of how to do this in LabVIEW, I would appreciate it. Thank you, regards

Hi, if someone can provide me with code for color quantization, I would be very grateful. http://en.wikipedia.org/wiki/Color_quantization Thank you
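The linked Wikipedia article describes several methods; one of them, k-means clustering of pixel colors, is sketched below in Python/NumPy. The deterministic initialization (colors picked evenly along a brightness ordering) and the fixed iteration count are simplifying assumptions, and `kmeans_quantize` is a hypothetical name.

```python
import numpy as np

def kmeans_quantize(img, k, iters=20):
    """Reduce an RGB image (H x W x 3) to k colors with Lloyd's k-means."""
    img = np.asarray(img)
    pixels = img.reshape(-1, 3).astype(float)
    # Deterministic start: k pixels spread evenly along a brightness ordering.
    order = np.argsort(pixels.sum(axis=1))
    centers = pixels[order[np.linspace(0, len(order) - 1, k).astype(int)]].copy()

    def assign(centers):
        # Squared RGB distance from every pixel to every center -> nearest center index.
        d = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        return d.argmin(axis=1)

    for _ in range(iters):
        labels = assign(centers)
        for j in range(k):
            members = pixels[labels == j]
            if len(members):              # leave an empty cluster's center where it is
                centers[j] = members.mean(axis=0)
    labels = assign(centers)              # final assignment with the updated centers
    quantized = centers[labels].reshape(img.shape)
    return np.clip(np.round(quantized), 0, 255).astype(np.uint8), centers
```

For large images the pixel-to-center distance array becomes memory-hungry, so fitting the centers on a random subsample of pixels and then assigning every pixel would be a reasonable variation.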

Hi, after finding the dominant color in an image (image file attached), I have to use that dominant color from the original image to create a color mask image, following the technique in the PDF I attached. Can you please help me apply a color mask to an image using the dominant color? Thank you, Madhubalan. A fast MPEG-7 dominant color extraction with new similarity.pdf
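The attached paper's own similarity measure is not reproduced here. As a plainly simpler stand-in, the Python/NumPy sketch below marks every pixel whose RGB color lies within a Euclidean distance `max_dist` of the dominant color, then keeps those pixels and blacks out the rest. `dominant_color_mask`, `apply_mask`, and the default threshold are hypothetical choices.

```python
import numpy as np

def dominant_color_mask(img, dominant, max_dist=30.0):
    """Boolean H x W mask of pixels within max_dist (Euclidean, RGB) of the dominant color."""
    pixels = np.asarray(img, dtype=float)
    diff = pixels - np.asarray(dominant, dtype=float)   # broadcasts over the RGB axis
    return np.sqrt((diff ** 2).sum(axis=-1)) <= max_dist

def apply_mask(img, mask):
    """Keep the masked pixels and set everything else to black."""
    img = np.asarray(img)
    out = np.zeros_like(img)
    out[mask] = img[mask]
    return out
```

Swapping the Euclidean RGB distance for the paper's similarity measure (or for a distance in a perceptual color space) would only change the body of `dominant_color_mask`.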

Good afternoon, everybody. I'm new here and am working on a localization algorithm using LabVIEW. I am trying to extract wall contours from a simple floor plan to use in my algorithm later. At first I thought about doing it manually, but then I realized there are a lot of high-level VIs in LabVIEW for computer vision. For example, "Extract Contour" would give me the coordinates found in an image, so I wrote a simple piece of code to test it. But first, I need to select an ROI. I used "Rectangle to ROI" and set the inputs programmatically, but the Extract Contour VI doesn't accept the result as a valid ROI and returns an error. I have absolutely no idea what's going on, because I create the same cluster that the info suggests as a valid input. You can try my attached code with any image to see the problem yourself. Thanks in advance. RectangleContour.vi