All Activity

- Past hour

-

ensegre started following Serial Communication Question, Please

-

Serial Communication Question, Please

ensegre replied to jmltinc's topic in Remote Control, Monitoring and the Internet

- Today

-

jmltinc started following Serial Communication Question, Please

-

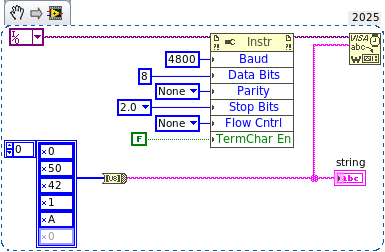

Decades too late, I am just learning RS-232 communication and the only resource that has a Com Port library is LabVIEW. I am having problems understanding how I should send the data and really could use some pointers...

I have a Ham radio which I would like to communicate with through the computer. It is as Plain Jane as you can get: 4800 baud, 1 start bit, 8 data bits, 2 stop bits, no parity, no flow control.

The radio expects 5 bytes: the first four are parameters, either in BCD or Hex, and the fifth is a Command code in Hex. Any of the four Parameter bytes not needed are padded so that the radio always receives the 5 bytes it expects. There are two commands I would like to send: one requires only the Hex command (and padded Parameter bytes); the other takes BCD Parameters. Walk before I run...

1) The easiest is transferring the VFO contents from VFO-A to VFO-B. The Command is 85 (Hex) with the four Parameter bytes padded (I tried zeros). I had a friend create a simple routine in C++ and QT which does this nicely, so the radio works. While I cannot see inside his exe, I presume the 5 bytes are 00 00 00 00 85.

2) The other command is to change VFO-A to the frequency 14.250.00 MHz. The parameters contain the frequency in BCD (Packed Decimal), and the Command 0A (Hex) completes the five bytes. The five bytes are formatted as such: Byte 1: 00, Byte 2: 50, Byte 3: 42, Byte 4: 01, Byte 5: 0A. Per the radio manufacturer, sending them in this order will change the frequency.

But no matter what I try, I cannot even perform the simple task of copying VFO-A to B. I have tried arrays written within a loop, sending a string, typecasting, flattening: all examples I found online. I don't know how LabVIEW handles data through VISA Write.

Worse, the radio manufacturer gives a BASIC example of command 2 (changing frequency) as: Print #2 CHR$(&H00);CHR$(&H50);CHR$(&H42);CHR$(&H01);CHR$(&HA) I recognize this BASIC syntax as converting Hex into String and sending it off to the Port, but I cannot convert this in LabVIEW.

Yes, I use VISA Configure Port matching what the radio expects. I think my problem is what I am feeding VISA Write; I am hopelessly lost here. I am not even sure 85H is 85 when sent as a string. Are the 5 bytes converted to an array and sent as one string, or sent in 5 iterations? If the latter, do I convert a single U8 from a byte array to a string? If there is a conversion, what is it?

Thanks for helping the dinosaur.

John
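To make the byte layout in the question concrete, here is a minimal Python sketch of the two five-byte frames. It is not LabVIEW code and not the manufacturer's reference. The function names are made up for illustration, the zero padding is the poster's own guess, and the 10 Hz resolution is inferred from the single example (14.250.00 MHz giving 00 50 42 01). The key point is that 85 (Hex) is one raw byte with the value 0x85, which is what BASIC's CHR$(&H85) produces, not the two text characters "8" and "5".

```python
PAD = 0x00                 # padding value: the poster's guess, not confirmed
CMD_COPY_A_TO_B = 0x85     # command code quoted in the post
CMD_SET_FREQ = 0x0A        # command code quoted in the post


def to_packed_bcd(value: int) -> int:
    """Pack a number 0..99 into one BCD byte, e.g. 42 -> 0x42."""
    if not 0 <= value <= 99:
        raise ValueError("a packed BCD byte holds exactly two decimal digits")
    return ((value // 10) << 4) | (value % 10)


def copy_vfo_a_to_b() -> bytes:
    """Four padding bytes followed by the command byte."""
    return bytes([PAD, PAD, PAD, PAD, CMD_COPY_A_TO_B])


def set_vfo_a_frequency(freq_hz: int) -> bytes:
    """Build the set-frequency frame.

    Assumes the four parameter bytes hold eight BCD digits in units of
    10 Hz, least significant digit pair first (inferred from the example).
    """
    digits = f"{freq_hz // 10:08d}"
    if len(digits) != 8:
        raise ValueError("frequency does not fit in eight BCD digits")
    pairs = [int(digits[i:i + 2]) for i in range(0, 8, 2)]   # most significant first
    params = [to_packed_bcd(p) for p in reversed(pairs)]     # least significant first
    return bytes(params + [CMD_SET_FREQ])


if __name__ == "__main__":
    print(copy_vfo_a_to_b().hex(" "))                # 00 00 00 00 85
    print(set_vfo_a_frequency(14_250_000).hex(" "))  # 00 50 42 01 0a
```

All five bytes go out as one write, in the order shown. In LabVIEW the likely equivalent, stated here as an assumption rather than a tested answer, is to build a five-element U8 array, pass it through Byte Array To String, and wire the result to VISA Write, since a LabVIEW string is just a sequence of bytes.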

-

Seeking Architectural Guidance: Implementing a Plugin-Based System

ShaunR replied to LEAF-1LEAF's topic in LabVIEW General

VI server. Simple and easy to implement with no framework dependencies. Define a distinction between Services and Plugins (plugins don't contain state, services do). Use a standardised uniform front panel interface between plugins. I use a single string control (see this post) and events for returns. An alternative is a 2D array of strings, which is more flexible. Each plugin is contained in its own LLB, which contains all of its specific VIs. Just list the LLBs in the directory for plugins to load. Replace the LLB to upgrade; delete to remove. Names starting with an underscore (either in the LLB name, directory name or file name) are ignored and never loaded. They are effectively "private". Have a scheme to prevent unknown plugins being loaded.
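The discovery rule described above (list the LLBs in a directory, skip anything whose name starts with an underscore) can be sketched outside LabVIEW as follows. This is only an illustration in Python, not ShaunR's implementation. The function names are hypothetical, and searching subdirectories recursively is an assumption. The underscore rule for VI names inside an LLB is not covered, because that needs LabVIEW's own LLB functions.

```python
from pathlib import Path


def is_private(path: Path, root: Path) -> bool:
    """True if any directory or file name below root starts with an underscore."""
    return any(part.startswith("_") for part in path.relative_to(root).parts)


def discover_plugins(root: Path) -> list[Path]:
    """Return every *.llb under root that is not marked private by an underscore."""
    if not root.is_dir():
        return []
    return sorted(p for p in root.rglob("*.llb") if not is_private(p, root))
```

With a hypothetical layout of `plugins/scope.llb`, `plugins/_draft.llb` and `plugins/_old/dmm.llb`, `discover_plugins(Path("plugins"))` would return only `scope.llb`. Upgrading or removing a plugin is then just replacing or deleting a file, which is the appeal of the scheme.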

Tifer joined the community

-

losting changed their profile photo

-

Seeking Architectural Guidance: Implementing a Plugin-Based System

losting replied to LEAF-1LEAF's topic in LabVIEW General

Hi there,

Great question. Building a modular LabVIEW application with dynamic plugin loading is definitely a rewarding (and sometimes tricky) path. I’ve worked on similar architectures in test systems for electronic components, where we needed to dynamically load test procedures (as VIs) based on the device type or protocol (SPI, I2C, UART, etc.). Here’s what worked well for us:

VI Server + Strict Type Definition: We defined a strict connector pane interface for all plugin VIs. This made it easy to load, call, and communicate with each plugin uniformly, whether it was a basic power test or a complex timing validation routine.

Plugin Metadata: We stored metadata (e.g. DUT type, test category, plugin path) in an external config file (JSON or INI), so the main application could dynamically discover and load plugins based on the connected device or selected test plan.

Encapsulation: To keep each plugin clean and focused, we implemented per-plugin state handling internally using FGVs or even mini actor-like modules.

Main challenges:

Version compatibility: When firmware or hardware revisions changed, some plugins required updated logic, which in turn required version tracking and good documentation.

Debugging dynamic calls: Debugging a broken plugin that fails to load at runtime can be painful without good error logging. We included detailed error tracing and visual logging in the framework early on, which was a huge time-saver.

Hardware abstraction: We wrapped hardware interaction (like GPIO toggles or I2C commands) in common interface VIs to decouple the plugin logic from the actual test instruments.

If your application also deals with automated test equipment or embedded devices, making your plugin architecture hardware-agnostic will greatly increase its flexibility and reusability.

Would be happy to chat more about plugin communication models or modular test design for electronics!

Best,

- Yesterday

-

X___ started following Did I dream this?

-

There are no typos or errors in your posts. Only pearls of wisdom and oracles of truth that we mortals can't understand yet...

-

Drat, and now my typos and errors are set in stone for eternity (well, at least until LavaG is eventually shut down when the last person on earth turns off the light) 😁

-

@Rolf Kalbermatter the admins removed that setting for you as everything you say should be written down and never deleted 🙂

-

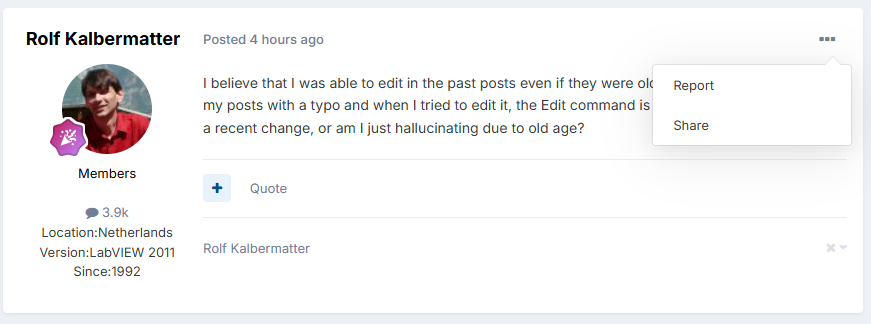

Unless I'm completely hallucinating, there used to be an Edit command in this pulldown menu. And it is there now for this post, but not for the previous one.

-

hooovahh started following Did I dream this?

-

I am unaware of a time limit on editing your own posts. You are welcome to use the report-to-moderator feature to request fixes. I realize small things are just easier to ignore than to report.

-

Rolf Kalbermatter started following Did I dream this?

-

I believe that in the past I was able to edit my posts even if they were older. I just came across one of my posts with a typo, and when I tried to edit it, the Edit command was not present anymore. Is that a recent change, or am I just hallucinating due to old age?

-

windjmj changed their profile photo

- Last week

-

ponty joined the community

-

list107 joined the community

-

Ivan_Daskalov joined the community

-

yoko joined the community

-

crewockeez joined the community

-

Darren started following list children classes?

-

If the child classes are statically linked in the code (via class constants, or whatever other mechanism you use), then this approach should always work, because the child classes will always be in memory.

-

Sylvain38_1973 joined the community

-

junandroid joined the community

-

Jonathan Lindsey started following list children classes?

-

Despite the documentation, I tried running this in the run-time environment and it worked. I did have to add class constants of each of the child classes to the block diagram (no, this does not defeat the purpose for me). Am I missing something, and could it stop working in a different environment?

-

NuclearKitty joined the community

-

BerryKing joined the community

- Earlier

-

Rolf Kalbermatter started following Labview Application crash Error 0xc0000005

-

As far as I can see on the NI website, the WT5000 instrument driver is not using any DLL or similar external code component. As such it seems rather unlikely that this driver is the actual culprit for your problem.

Exception 0xC0000005 is an Access Violation fault. This means the executing code tried to make the CPU access an address that the current process has no right to access. While it is not entirely impossible for LabVIEW to generate such an error itself, it would be highly unlikely. The usual suspects are:

- a corrupted installation of components such as the NI-VISA driver, though even LabVIEW itself is an option

But if your application uses any form of DLL or other external code library that is not part of the standard LabVIEW installation, that is almost certainly (typically with 99% chance) the main suspect. Does your application use any Call Library Node anywhere? Where did you get the corresponding VIs from? Who developed the DLL that is called?

-

Davide Bugiantella started following Labview Application crash Error 0xc0000005

-

Hi. Using LabVIEW 2018 32-bit, I created an application for Windows 10, but random crashes occur with error code 0xc0000005. My suspicions fall on the VIs used to communicate with the Yokogawa WT5000, but after reviewing the various lvlog files, I haven't been able to identify which specific VI is causing the crash. That said, I would kindly ask for your assistance in identifying the VI responsible for the issue. Thank you.

03dc9418-0115-4f24-ba73-07f98ebf6821.zip 3eb1d949-0f35-4147-809b-7f7dff13ad06.zip 6bcc6278-fb26-48c7-a715-ab2f35a336de.zip 6f80126d-b9e2-4a12-b7f2-e8c15fb9ea48.zip

-

Darren started following How to get all Tags of an Object

-

-

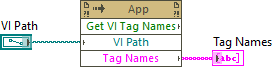

Hi Francois,

Thanks for creating the code inside Retrieve Tags 8.6.zip. The code inside the zip file works perfectly for me. Specifically, when running Get All Tags from VI.vi I am getting all tags stored inside the Tagged Test VI.vi.

Ideally I would like to use Get All Tags from VI.vi to inspect the tags inside VIs saved using the latest versions of LabVIEW (2024 Q3 and 2025 Q1). As far as I can tell, Get All Tags from VI.vi works only on VIs saved in LabVIEW 8.6 or LabVIEW 2009. This is because each version of LabVIEW uses a hardcoded six-byte string. VIs saved in 8.6 use "0860 8001 0000" while VIs saved in 2009 use "0900 8000 0000".

Do you know what the corresponding six-byte sequences are for the latest versions of LabVIEW? Is there a more generic way of finding the tag names, one that does not require a six-byte sequence for each LabVIEW version?

Many thanks,

Petru
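As a small aid for comparing files across versions, the sketch below searches raw VI file bytes for the two six-byte sequences quoted above and reports where they occur. It is a hypothetical Python helper, not part of Retrieve Tags 8.6.zip, and it does not know the sequences for newer LabVIEW versions. How the original VI actually uses the sequence is not shown in this thread, so treating it as a plain byte pattern to search for is an assumption.

```python
# Six-byte sequences quoted in the post, keyed by the LabVIEW version that saved the VI.
KNOWN_SIGNATURES = {
    "8.6": bytes.fromhex("0860 8001 0000"),
    "2009": bytes.fromhex("0900 8000 0000"),
}


def find_signatures(data: bytes) -> list[tuple[str, int]]:
    """Return (version, offset) for every occurrence of a known sequence in data."""
    hits = []
    for version, signature in KNOWN_SIGNATURES.items():
        offset = data.find(signature)
        while offset != -1:
            hits.append((version, offset))
            offset = data.find(signature, offset + 1)
    return hits
```

Reading a file with `Path("Tagged Test VI.vi").read_bytes()` and passing the result to `find_signatures` would show whether and where either known sequence appears. Looking at the bytes at the same offsets in a VI saved by a newer version might hint at the corresponding sequence, though that is untested.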

-

Still waiting🥲

-

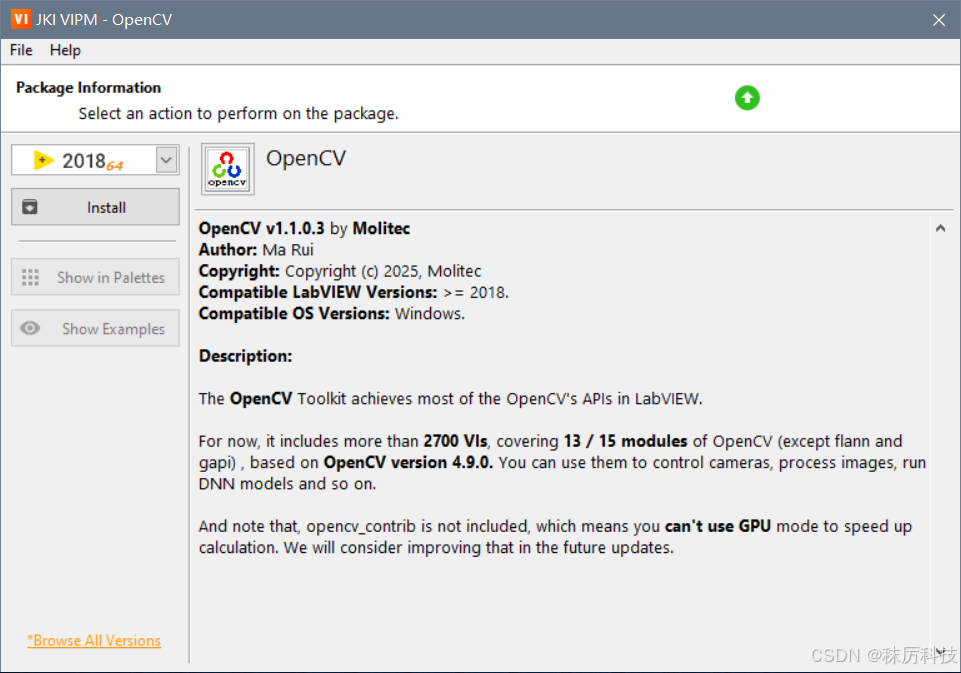

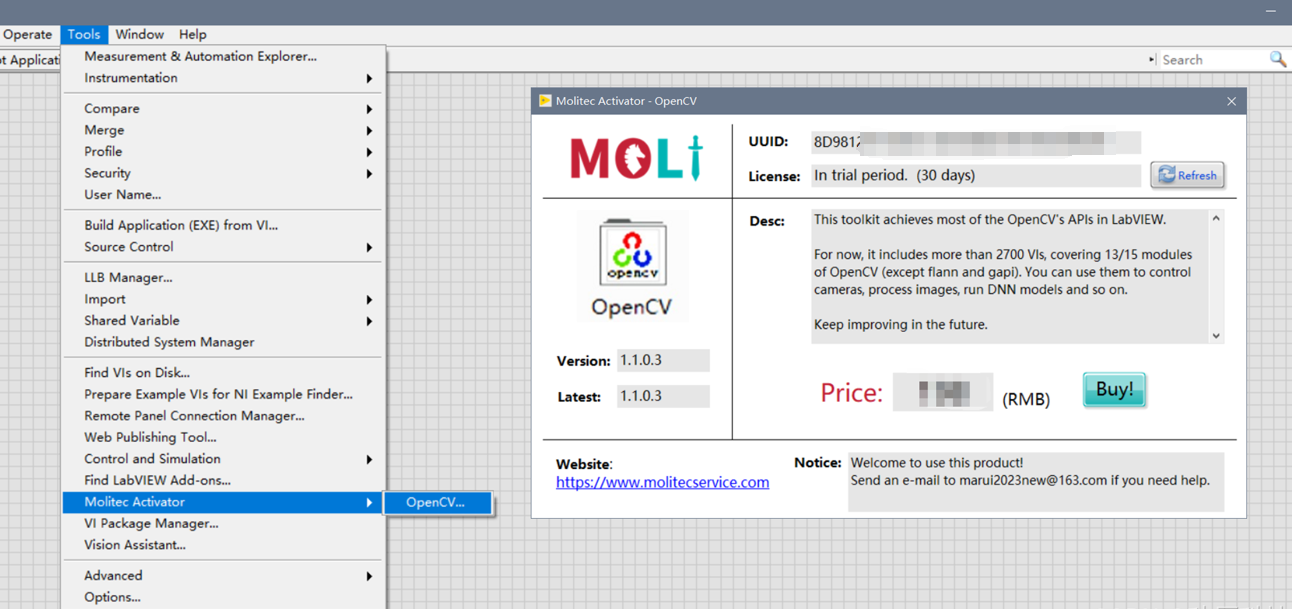

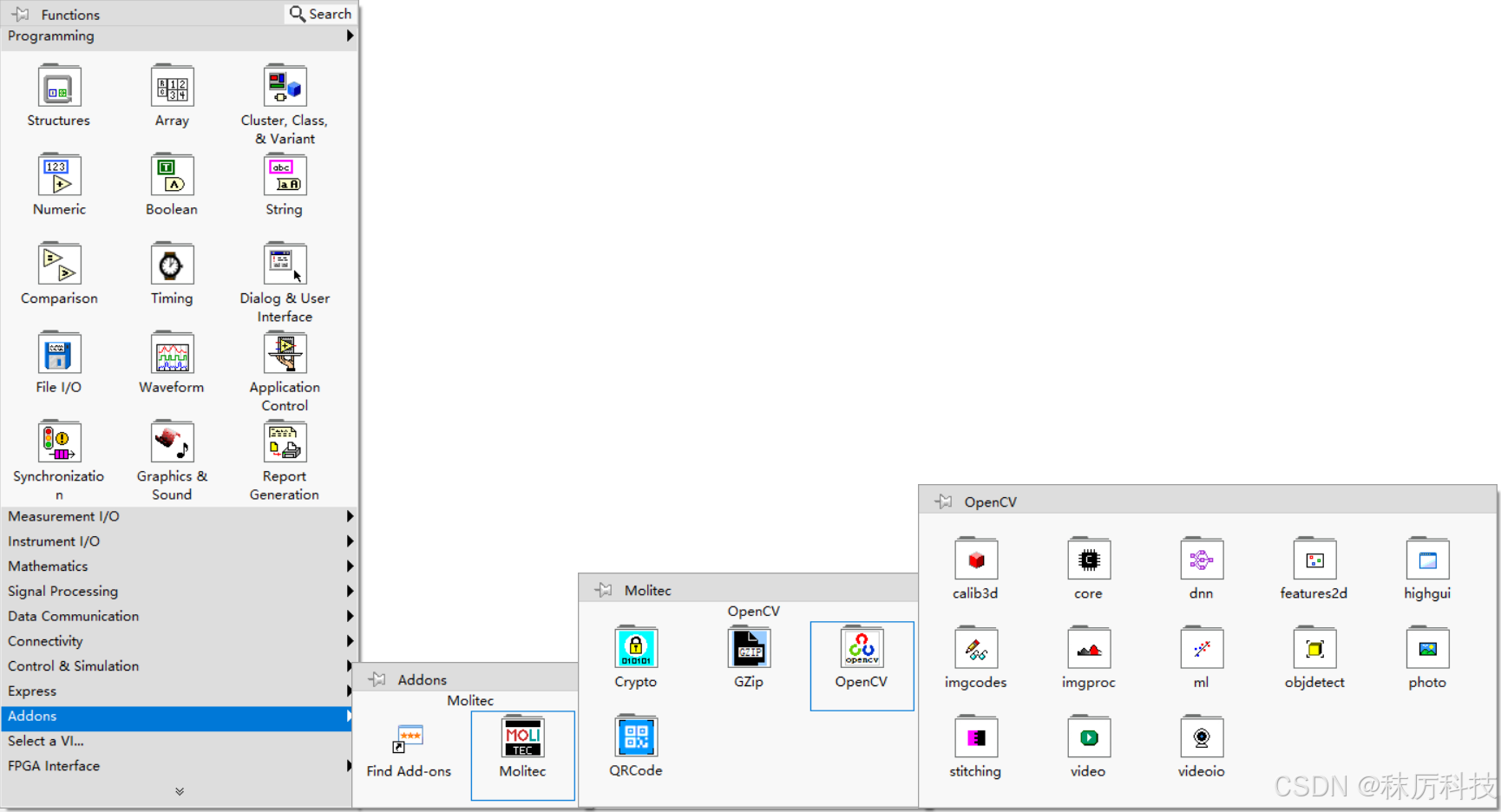

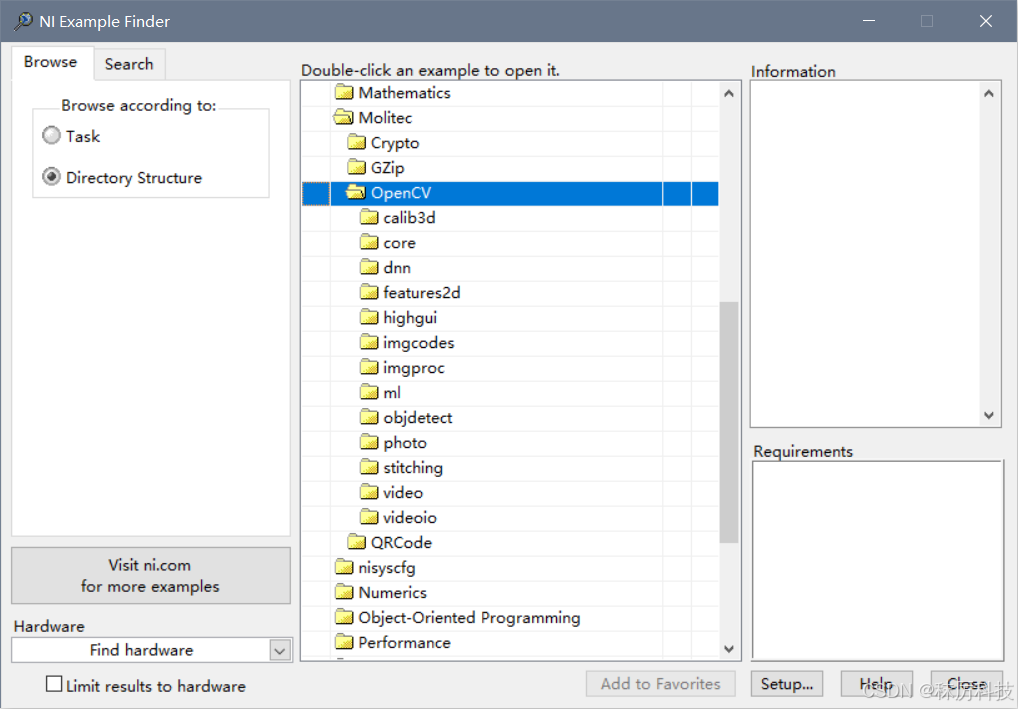

Hello everyone, I developed an add-on toolkit for LabVIEW that wraps most of OpenCV's APIs. It includes more than 2700 VIs, covering 13 of the 15 OpenCV modules (all except flann and gapi). You can use it to control cameras, process images, run DNN models and so on. You are welcome to download it from my CSDN blog and give it a try! (Paid, with a 30-day trial.) Requirements: Windows 10 or 11, LabVIEW 2018 or later, 32-bit or 64-bit.

-

MOLITEC changed their profile photo

-

Had the same issue. Removing a VI from an lvlib, using Pane Relief to set the splitter size, and moving it back to the lvlib worked for me too. Thanks!

-

About a minute for a change, that's what I've seen on a production machine. On my home computer it's as on yours though: a single edit takes 1-2 seconds. I'm still investigating it. I will try different options such as enabling those ini keys or disabling the auto-save feature. To me it looks like the whole recompilation is triggered sometimes. By the way, there are more keys to fine-tune XNodes: I haven't yet figured out the details.

-

Dadreamer is talking about minutes per change though. I still think the symptom is probably exacerbated by XNodes but probably not the fundamental problem.

-

The newest version of LabVIEW I have installed is 2022 Q3. I had 2024, but it slowed development on my main project so much that I rolled back. I think I have some circular library dependencies that need to get resolved. But still: same code, way slower. In 2022 Q3 I opened the example here and it locked up LabVIEW for about 60 seconds. Once it was open, creating a constant also took on the order of 1 or 2 seconds. Using Quick Drop to create controls on a node (Ctrl+D) takes about 8 seconds, and undoing that operation takes about 6. Basically any drop, wire, or delete operation takes 1 to 2 seconds. Very painful. If you gave this to NI they'd likely say you should refactor the VI so it has smaller chunks instead of one big VI. But the point is I've seen this type of behavior, to a lesser extent, on lots of code.

-

Do you see the unresponsiveness in dadreamer's example?

-

It has gotten worse in later versions of LabVIEW. I certainly think the code influences this laggy unresponsiveness, but the same code seems to get worse the later the version I use.

-

Editing a constant in your test VI only results in a pause for about 1.5 secs on my machine. It's the same in 2025 and 2009 (back-saved to 2009 is only 1.3MB, FWIW). I think you may be chasing something else. There was a time when on some machines the editing operations would result in long busy cursors of the order of 10-20 secs - especially after LabVIEW 2011. Not necessarily XNodes either (although XNodes were the suspect). I don't think anyone ever got to the bottom of it and I don't think NI could replicate it.
