
Posts: 5,001
Days Won: 311


Everything posted by ShaunR

-

NI abandons future LabVIEW NXG development

ShaunR replied to Michael Aivaliotis's topic in Announcements

Just welcoming an old friend to the forum.

-

Well. Aren't we a ray of sunshine nowadays?

-

AQ leaves, NXG gets shelved. Insert your rumour-mongering and conspiracy theories here.

-

You have obviously never done Agile development properly, then, since it is an iterative process which starts with the design step just after requirements acquisition. It's not a fear of failure; it is a fast-track route to failure, which usually ends up with the software growing like a furry mold. But anyway. It's your baby. You know best. Good luck :)

-

Uh-huh. Seat-of-your-pants design: the fastest way to project failure.

-

This is the standard way to do service discovery. My "Dispatcher" implementation had a "broker" that ran on the local machine to act as a gateway to services that registered with it on that machine. External (or local) clients would then contact it to discover services, and it would hand off comms to the service for direct communication. It behaved as a router rather than the usual broker, which meant it didn't become a bottleneck for high-speed transfers. Your framework would be a good match for the above implementation, since it already has all the publish, subscribe and routing features, IIRC.
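
As a rough illustration of the router-style broker described above, here is a minimal Python sketch. The class `ServiceDirectory`, its text commands (`REGISTER`, `LOOKUP`, `LIST`) and the reply format are hypothetical, not the actual Dispatcher API. The point is that the directory only answers "where is service X?"; the client then connects to the returned host and port itself, so bulk data never flows through the broker.

```python
from typing import Dict, Optional, Tuple

Address = Tuple[str, int]


class ServiceDirectory:
    """Hypothetical local directory mapping service names to direct endpoints.

    It acts as a router, not a relay: it hands back an address and takes
    no part in the data transfer that follows.
    """

    def __init__(self) -> None:
        self._services: Dict[str, Address] = {}

    def register(self, name: str, host: str, port: int) -> None:
        self._services[name] = (host, port)

    def unregister(self, name: str) -> None:
        self._services.pop(name, None)

    def lookup(self, name: str) -> Optional[Address]:
        return self._services.get(name)

    def handle(self, line: str) -> str:
        """Process one text command, e.g. 'REGISTER dmm 10.0.0.5 5025' or 'LOOKUP dmm'."""
        parts = line.split()
        if len(parts) == 4 and parts[0] == "REGISTER":
            try:
                port = int(parts[3])
            except ValueError:
                return "ERR bad port"
            self.register(parts[1], parts[2], port)
            return "OK"
        if len(parts) == 2 and parts[0] == "LOOKUP":
            addr = self.lookup(parts[1])
            # Hand-off: the client connects straight to this address.
            return f"AT {addr[0]} {addr[1]}" if addr else "NOTFOUND"
        if len(parts) == 1 and parts[0] == "LIST":
            return " ".join(sorted(self._services))
        return "ERR unknown command"
```

In a real deployment, `handle` would sit behind a well-known local TCP or UDP port, which is exactly the "known entry point" that most discovery schemes end up needing.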

-

That's fine. I just don't like to distribute modified software that I produce when the licensing isn't explicit, which is why I asked you for one (thinking you had produced it as the original author).

-

OK. I've deleted the backport since the licence is indeterminate. I thought it was a library you had created.

-

Backported to LV2009. What's the licensing for this? Edit: Removed the software due to indeterminate licensing.

-

The major use case I would have for UDP is CoAPS (which, incidentally, has service discovery rather than node discovery). Most node discovery methods require a known entry point (gateway, router, default port etc.), and it's hard to get away from that in a reliable way across numerous network architectures.

-

LabVIEW "live" USB

ShaunR replied to Neil Pate's topic in Application Builder, Installers and code distribution

Very hard to do, but it can be done; it's non-trivial. You will obviously have to talk to your client and find out exactly what they mean, and get one of the USB sticks they use. Maybe you'll be lucky and they just mean using the LabVIEW application as the Windows shell, which I do on production-line machines.

-

It's a compromise between convenience and security, and it partially solves the "trust" issue by having really, really trustworthy organisations. Other alternatives have been proposed, but the "trust" issue has never really been solved adequately to date. I trust myself, so my certificates are great (for me). The problem with that is distribution. SSH, which is arguably the progenitor of modern TLS, got a lot of things very right. We haven't really moved on from that model, except to create a whole new business sector for key management.
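
The SSH model referred to above is usually called "trust on first use" (TOFU): the client records a host's key fingerprint the first time it connects and complains if it ever changes, with no third-party authority involved. Below is a minimal Python sketch of that bookkeeping. The class name and the hex fingerprint format are illustrative assumptions; OpenSSH itself displays base64-encoded SHA-256 fingerprints and stores whole public keys in `known_hosts`.

```python
import hashlib
from typing import Dict


class TrustOnFirstUse:
    """Illustrative TOFU store: pin a host's key fingerprint on first contact."""

    def __init__(self) -> None:
        self._known: Dict[str, str] = {}  # host -> pinned fingerprint

    @staticmethod
    def fingerprint(public_key_bytes: bytes) -> str:
        return "SHA256:" + hashlib.sha256(public_key_bytes).hexdigest()

    def check(self, host: str, public_key_bytes: bytes) -> str:
        """Return 'new' (now pinned), 'match', or 'MISMATCH' (possible interception)."""
        fp = self.fingerprint(public_key_bytes)
        pinned = self._known.get(host)
        if pinned is None:
            self._known[host] = fp  # first use: trust and remember
            return "new"
        return "match" if pinned == fp else "MISMATCH"
```

The weak point is the first connection, which is the distribution problem again: the fingerprint has to be verified out-of-band once.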

-

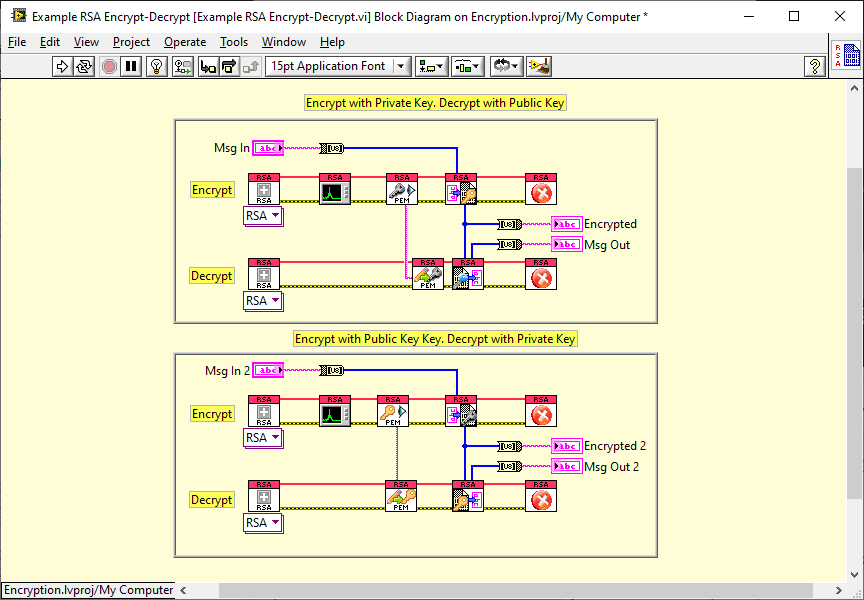

I don't think there is anything off-the-shelf, to my knowledge; Bluetooth has its own encryption scheme. I think you are looking at using some existing TLS client/server implementation and replacing the underlying socket connection with a Bluetooth connection. Edit: Thinking about it a little more, there may be another way, with the caveat that it would only work for RSA certs. There is an example of RSA encryption/decryption. You could load an X.509 cert, extract the keys and use the encryption functions to encrypt the Bluetooth data. This wouldn't handle the authentication, but it's some of the way there. It might be possible to check the authenticity separately from the encryption, but I'm not sure at the moment.
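
One practical wrinkle with the RSA-only approach is that a single RSA operation can only encrypt a message somewhat smaller than the key modulus. Per RFC 8017, for a modulus of k bytes, RSAES-OAEP allows at most k - 2*hLen - 2 bytes (hLen is the hash output length, 32 for SHA-256), and the older PKCS #1 v1.5 padding allows k - 11 bytes. The Python sketch below only does that arithmetic; it performs no encryption, and the function names are made up.

```python
def rsa_max_plaintext(modulus_bits: int, padding: str = "oaep", hash_len: int = 32) -> int:
    """Largest message, in bytes, that one RSA encryption can carry (RFC 8017)."""
    k = (modulus_bits + 7) // 8  # modulus length in bytes
    if padding == "oaep":
        limit = k - 2 * hash_len - 2
    elif padding == "pkcs1v15":
        limit = k - 11
    else:
        raise ValueError(f"unknown padding: {padding!r}")
    if limit <= 0:
        raise ValueError("key too small for this padding/hash combination")
    return limit


def rsa_blocks_needed(payload_len: int, modulus_bits: int,
                      padding: str = "oaep", hash_len: int = 32) -> int:
    """How many separate RSA encryptions are needed to cover payload_len bytes."""
    per_block = rsa_max_plaintext(modulus_bits, padding, hash_len)
    return -(-payload_len // per_block)  # ceiling division
```

With a 2048-bit key and OAEP/SHA-256, each block carries at most 190 bytes (and becomes 256 bytes of ciphertext), so a 1000-byte packet needs six RSA operations. That is why RSA is normally used to exchange a symmetric session key (hybrid encryption) rather than to encrypt a data stream directly.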

-

You can't outsource security. If you understand that all TLS communications are interceptable by governments because of CAs, then you might also be reticent when dealing with some governments.

-

I am taking a sabbatical from LabVIEW and NI R&D

ShaunR replied to Aristos Queue's topic in LAVA Lounge

Congratulations. It will go great, and it's a fantastic area to grow into. You won't regret it, but I very much doubt you will be back at NI; it'd be a step backwards.

-

I wouldn't. HTTPS is just one protocol. I personally use secure WebSockets, which are much better suited to this sort of thing IMO. Most of these use TLS, though, and more recently I've been playing with DTLS. If I don't use those sorts of protocols, then I use SSH, but that has nothing to do with what the OP is asking, as it doesn't use X.509 certs (which is, as you know, just a standardised certificate format).

I think that's just middle-management phrasing. I wouldn't be surprised if the device already supports this method of updating and they were told it uses X.509 certificates "for security". But we digress...

The main thing to ascertain is whether Certificate Authority certs are required or whether self-signed certificates can be used. Both allow "securely writing"; the difference is authenticity. This dictates whether things work out of the box (in the same way as your web browser works out of the box) or you have to add public-key certs to root bundles. Personally, I prefer self-signed certs for this sort of thing: they provide greater granularity over who can access the device, I don't trust Certificate Authorities, and when IT get a whiff of you requiring company CA certs, they usually try to get involved. It really depends on whether you have control over the server, though.
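
To make the CA versus self-signed distinction concrete on the client side, here is a sketch using Python's standard `ssl` module (not LabVIEW, and not necessarily how the device in question works). With no argument, the context trusts the platform's default root bundle, which is the "works out of the box" route. Given a path to the device's self-signed certificate (a hypothetical PEM file), it trusts only that certificate. Certificate and hostname verification stay on in both cases. Newer Python versions enable stricter X.509 checks by default, which may reject some minimally built self-signed certificates.

```python
import socket
import ssl
from typing import Optional


def client_context(pinned_cert_path: Optional[str] = None) -> ssl.SSLContext:
    """Build a verifying TLS client context.

    pinned_cert_path=None -> trust the system's default CA bundle.
    pinned_cert_path=path -> trust only the certificate(s) in that PEM file,
                             e.g. a device's self-signed certificate.
    """
    if pinned_cert_path is None:
        return ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Supplying cafile means the system roots are not loaded, so nothing
    # signed by a public CA is accepted unless it is in this file.
    return ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=pinned_cert_path)


def open_secure(host: str, port: int, ctx: ssl.SSLContext) -> ssl.SSLSocket:
    """Connect and perform the TLS handshake; raises ssl.SSLError if verification fails."""
    raw = socket.create_connection((host, port), timeout=10)
    try:
        return ctx.wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # don't leak the TCP socket on a failed handshake
        raise
```

Restricting trust to one pinned file is what gives the per-device granularity mentioned above: only clients holding that certificate in their trust store will accept the device.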

-

This is quite a common requirement nowadays, especially within the IoT sphere. Many embedded devices come with libraries for what is called OTA (Over The Air) updating. A multitude of devices are then monitored and configured (including software/firmware updates) via a web server. I wouldn't be surprised if NI SystemLink uses something similar (via either HTTPS or MQTT). TLS is quite burdensome for constrained devices, especially if you have to put a web server on the device to upload to, rather than using OTA libraries. To be honest, this isn't something I would use LabVIEW for. There are much better solutions available specifically for this purpose.

-

Design advice needed for data acquisition system

ShaunR replied to brownx's topic in Application Design & Architecture

You will have to re-architect that if you want to... By the way, the TCP/IP layer I described earlier is there to decouple things, meaning that the VIs can be controlled by anything, in any language, including TestStand. So if you want the "on demand" aspect, then that is probably the easiest way forward.

-

Another thing I did with the aforementioned (in a later version) was to add TCP/IP. It meant you could launch a VI and then communicate with it via strings sent from TestStand, even on remote machines. The wrapper was only slightly more complex than the pure launcher, but it meant you could configure the devices on the fly, abort or reset devices, and query state information with simple strings from TestStand (I used SCPI-like commands). Anyway, I digress. You've probably guessed by now that the choice you had was between an instance per device which you control and sequence from TS, which is what I was describing, or an application that does it and reports back to TS, which is what you are designing. The others have given you options for the latter; I was just giving you a method for the former.
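
The string-command wrapper described above could look something like the following Python sketch. The command set (`*IDN?`, `*RST`, `CONF:SETP`, `SETP?`, `ABOR`) and the replies are made up in a SCPI-like style for illustration; they are not the original implementation. Keeping the parser a pure function makes it easy to test, with the socket handler as a thin layer on top.

```python
import socketserver
from typing import Dict


def handle_command(line: str, state: Dict[str, object]) -> str:
    """Parse one SCPI-like command line and return a one-line reply."""
    parts = line.strip().split(maxsplit=1)
    if not parts:
        return "ERR:EMPTY"
    cmd = parts[0].upper()
    arg = parts[1] if len(parts) > 1 else None

    if cmd == "*IDN?":
        return "HYPOTHETICAL,DEVICE-WRAPPER,0,1.0"
    if cmd == "*RST":
        state.clear()
        return "OK"
    if cmd == "CONF:SETP":
        if arg is None:
            return "ERR:MISSING-ARG"
        try:
            state["setpoint"] = float(arg)
        except ValueError:
            return "ERR:BAD-ARG"
        return "OK"
    if cmd == "SETP?":
        return str(state["setpoint"]) if "setpoint" in state else "ERR:NOT-SET"
    if cmd == "ABOR":
        state["running"] = False  # a real wrapper would stop the device VI here
        return "OK"
    return "ERR:UNKNOWN"


class CommandHandler(socketserver.StreamRequestHandler):
    """Reads newline-terminated commands and writes one reply line per command."""

    def handle(self) -> None:
        state: Dict[str, object] = {}  # per-connection state, for simplicity
        for raw in self.rfile:
            reply = handle_command(raw.decode("ascii", errors="replace"), state)
            self.wfile.write((reply + "\n").encode("ascii"))
```

It could be served with `socketserver.ThreadingTCPServer(("0.0.0.0", 5025), CommandHandler).serve_forever()`. Port 5025 is a common choice for raw SCPI sockets on instruments, but any free port would do. A real wrapper would keep device state outside the connection so that reconnecting does not lose configuration.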

-

A very complicated reporting tool. Some things are better suited to scripting/sequencing than others. Take the step response of an oven, for example. You need to step-change the setpoint (SP), monitor the temperature as it changes until a certain value has been achieved, and note the time taken. To determine whether it has finished its step, you detect the plateau in the rate of change. In an application this is trivial, and the test time is the time it takes to get within a ±% band of SP for n seconds, or until dTemp/dt < x for n points (depending on the specification). It is usually also monitored to determine whether it overshot before it got there (which may be a fail). In TestStand it is orders of magnitude more complicated to achieve the same process, and you will probably try to wait x amount of time and hope that the temperature is achieved. However, you will miss the overshoot, and that's not the test anyway. So you will write some software to do the state monitoring and calculate the rate of change and, now, you are using TestStand just as a reporting tool. TestStand is good for "stateless" pass/fail testing and suffers the same problems as any other scripting/sequencing environment when state and/or continuous monitoring is introduced. It may be that it has served you faithfully for most of your testing, but it is not a test panacea, which, I think, is why you are asking the question.
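
For comparison, the two end-of-step criteria mentioned above (staying within a ±% band of SP for a hold time, or |dTemp/dt| staying below a threshold for n consecutive points), plus the overshoot check, take only a few lines in a general-purpose language. This Python sketch works on already-logged samples. The function names, units and thresholds are hypothetical, and a live system would apply the same logic incrementally as samples arrive.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class StepResult:
    settle_time: Optional[float]  # time the value entered the band for good (None if never)
    overshoot: float              # largest excursion past SP in the step direction (0 if none)


def analyse_step(times: Sequence[float], temps: Sequence[float], start: float,
                 setpoint: float, tol_pct: float, hold_s: float) -> StepResult:
    """Band criterion: settled once within +/-tol_pct of SP continuously for hold_s."""
    if not temps:
        return StepResult(None, 0.0)
    band = abs(setpoint) * tol_pct / 100.0
    direction = 1.0 if setpoint >= start else -1.0  # heating or cooling step
    overshoot = max(0.0, max((v - setpoint) * direction for v in temps))
    entered: Optional[float] = None
    for t, v in zip(times, temps):
        if abs(v - setpoint) <= band:
            if entered is None:
                entered = t
            if t - entered >= hold_s:
                return StepResult(entered, overshoot)
        else:
            entered = None  # left the band: restart the hold timer
    return StepResult(None, overshoot)


def plateau_index(times: Sequence[float], temps: Sequence[float],
                  max_rate: float, n_points: int) -> Optional[int]:
    """Rate criterion: sample index at which |dT/dt| < max_rate has held for n_points."""
    count = 0
    for i in range(1, len(temps)):
        rate = abs(temps[i] - temps[i - 1]) / (times[i] - times[i - 1])
        count = count + 1 if rate < max_rate else 0
        if count >= n_points:
            return i
    return None
```

For example, samples `[20, 60, 90, 105, 98, 100, 101, 100, 100]` at 1 s intervals, stepping from 20 to 100 with a 2% band and a 3 s hold, settle at t = 4 with an overshoot of 5, a result a fixed "wait x seconds" step would not report.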

-

The trivial answer is that the launcher can be run re-entrantly, so you can run it from multiple scripts with different configurations etc. But what you are trying to achieve, from your description, is a poor fit for TestStand, period. So I expect there will be other things that perhaps you haven't mentioned (like the background tasks) that will make it difficult to use from TestStand regardless of the solution. It sounds like you really need an entire application rather than a simple TestStand integration.

Calling arbitrary code straight from the diagram

ShaunR replied to dadreamer's topic in LabVIEW General

What about VIRefPrepNativeCall and VIRefFinishNativeCall? They sound interesting but may be a red herring.

-

Design advice needed for data acquisition system

ShaunR replied to brownx's topic in Application Design & Architecture

I wrote a simple "connector" for TestStand a few years ago. It was just a VI launcher which had a standard calling convention (from TestStand, for configuration of the called VI) and a standard output back to TestStand. It would call any VI with the appropriate front-panel (FP) connectors, dynamically, with parameters supplied from TestStand. You could call any VIs (DVM, frequency generators etc.), which were wrapped in a normalising VI that created a standard interface for the launcher to call and formatted data into the standard format to be returned. All configuration, reporting and execution was in TestStand. It took about 5 minutes to write the launcher and about 5 minutes to write a wrapper VI for each device. The caveat here is that there was already a device VI to wrap. This is a similar technique to the one VI Package Manager uses to run the pre- and post-install VIs.
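
The launcher idea (one generic entry point, plus a thin wrapper per device that normalises inputs and outputs) carries over directly to text-based languages. Below is a hypothetical Python analogue: wrappers register under a name, each takes a configuration dictionary and returns a result dictionary, and `launch` looks them up by name at run time, roughly as the LabVIEW version loaded VIs dynamically. The names, the `dmm_read` wrapper and the result fields are illustrative only.

```python
from typing import Any, Callable, Dict

# Standard calling convention: configuration dict in, result dict out.
Wrapper = Callable[[Dict[str, Any]], Dict[str, Any]]
_REGISTRY: Dict[str, Wrapper] = {}


def wrapper(name: str) -> Callable[[Wrapper], Wrapper]:
    """Decorator that registers a device wrapper under a launch name."""
    def register(fn: Wrapper) -> Wrapper:
        _REGISTRY[name] = fn
        return fn
    return register


@wrapper("dmm_read")
def dmm_read(config: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical wrapper: a real one would call the existing device driver
    # and normalise its output into this format.
    return {"value": float(config.get("simulated_value", 0.0)),
            "units": config.get("units", "V")}


def launch(name: str, config: Dict[str, Any]) -> Dict[str, Any]:
    """Call a wrapper by name and always return the same envelope to the sequencer."""
    fn = _REGISTRY.get(name)
    if fn is None:
        return {"status": "error", "message": f"no wrapper named {name!r}", "data": {}}
    try:
        data = fn(config)
    except Exception as exc:  # report failures in the standard format, don't crash
        return {"status": "error", "message": str(exc), "data": {}}
    return {"status": "ok", "message": "", "data": data}
```

Because every wrapper honours the same signature and `launch` always returns the same envelope, the sequencer only ever needs to know one calling convention, which is what kept each new device down to a few minutes of work.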

Calling arbitrary code straight from the diagram

ShaunR replied to dadreamer's topic in LabVIEW General

hmmmm.