Posts: 6,219

Days Won: 121

Everything posted by Michael Aivaliotis

-

OpenG Library Exported to Github

Michael Aivaliotis replied to Michael Aivaliotis's topic in OpenG Developers

Sort of. I think GitHub uses the email address, not the username. I've asked @Rolf Kalbermatter for his GitHub email, because it didn't work with his username. I decided to leave the repos under GCentral and use the OpenG prefix as suggested. -

OpenG Library Exported to Github

Michael Aivaliotis replied to Michael Aivaliotis's topic in OpenG Developers

I've started adding repos: https://github.com/Open-G -

OpenG Library Exported to Github

Michael Aivaliotis replied to Michael Aivaliotis's topic in OpenG Developers

@LogMAN, is it possible to remap a SourceForge username to a GitHub username? -

OpenG Library Exported to Github

Michael Aivaliotis replied to Michael Aivaliotis's topic in OpenG Developers

@Rolf Kalbermatter, I will be redoing the export today based on the new scripts, if they work. So if you committed code recently, it will get included. I think you are the only one working on any code at the moment. I will add you as a collaborator on the repo so you don't need to fork. Once the conversion is complete:

1. Make a branch on the Git repo.
2. Check out the branch.
3. Do a file diff between your local SVN and the Git branch.
4. Copy over the changed code from SVN to Git.
5. Commit the branch.

@LogMAN, unfortunately GitHub does not have a good way to group related repositories so that they visually appear to belong together. The only distinction is organizations. There's the Project feature, which I think just helps with development workflows. Having numerous OpenG repositories will make this a bit messy. But I guess there's no way around that. It seems GitLab and Bitbucket have a slightly better approach with this. -
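The file-diff step between a local SVN working copy and the Git branch could be sketched roughly like this in Python. This is only an illustration, not the procedure anyone in the thread actually used: `changed_files` and the directory arguments are made-up names, and it relies on the standard library's `filecmp.dircmp`, which by default treats files with identical size and modification time as equal.

```python
import filecmp
import os

IGNORED = [".svn", ".git"]  # version-control metadata, never compared or copied


def changed_files(svn_dir, git_dir):
    """Return relative paths that exist only in svn_dir or differ from git_dir.

    Hypothetical helper: entries found only in svn_dir may be files or
    whole directories.
    """
    changed = []

    def walk(node, prefix):
        # left_only: present only on the SVN side; diff_files: present on both sides but different
        for name in node.left_only + node.diff_files:
            changed.append(os.path.join(prefix, name))
        # Recurse into directories that exist on both sides
        for name, sub in node.subdirs.items():
            walk(sub, os.path.join(prefix, name))

    walk(filecmp.dircmp(svn_dir, git_dir, ignore=IGNORED), "")
    return sorted(changed)
```

The returned list is what you would copy from the SVN working copy into the checked-out Git branch before committing.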

OpenG Library Exported to Github

Michael Aivaliotis replied to Michael Aivaliotis's topic in OpenG Developers

Thanks @LogMAN. After I get paying work done, I will try this out. For anyone else following along: if you are currently an OpenG developer ( @Rolf Kalbermatter?) and want to be added to the OpenG team on GitHub, send me a PM. -

[CR] Hooovahh Array VIMs

Michael Aivaliotis replied to hooovahh's topic in Code Repository (Uncertified)

We can create a VIM array package for OpenG that is separate from the other array package. We could call it something else, so it could be distributed in LabVIEW 2017. Currently the entire OpenG sources are in a single repo, so you have to build everything in one LV version (2009). If we made each package its own repo, then it could have its own LV versioning roadmap separate from the whole. See discussion here. -

OpenG Library Exported to Github

Michael Aivaliotis replied to Michael Aivaliotis's topic in OpenG Developers

Well, I was hoping someone would continue the discussion, so great! We can redo the conversion. But is it really that critical to migrate the history intact? I question the need for that. If not, we can start fresh.

Authors - The conversion I went through had the ability to add email addresses to the author names. I just don't know what the email addresses are for the authors in SourceForge. I've attached the author list if anyone wants to help flesh out the email addresses. Then we can rerun the conversion.

Branches and tags - I looked at the SVN repo and there's only one branch. SVN does not do branches well, so I'm not surprised nobody used that feature. There are a few tags, and those are very old, circa 2007. Not sure if anybody cares about those. It seems the tagging procedure (if any) was dropped a long time ago. You should be tagging with every release, but that does not appear to have happened.

One repo - The original SVN was a single repo, which is why I kept it the same. The conversion is a lot simpler. Breaking up a single SVN repo into multiple Git repos and keeping the history intact seems complicated if you have commits that include files that cross library virtual boundaries. If you can think of a way to do this, that would help.

Commit messages - The commit messages are all there. It's the URLs inside the messages that are not pointing correctly, but I'm not sure what they should be pointing to or how to fix that during the conversion. Also, what if SourceForge changes the URL structure later? Again, is this important?

Well, this is a good starting point. The alternative is to start fresh and create multiple GitHub repos with the latest revision of the source. Then the SVN repo can be an archive if anyone wants to get at it. I welcome your help if you can create scripts to solve some of the above problems.

authors.txt -
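For anyone helping with the author list, here is a minimal Python sketch of how the mapping might be generated. It assumes the attached file follows the git-svn authors-file layout, one `username = Name <email>` line per committer. The function name, the usernames, and the `EMAIL-NEEDED` placeholder are all invented for illustration and are not taken from the real list.

```python
def authors_file_lines(authors):
    """Build authors-file lines from {svn_username: (display_name, email_or_None)}.

    Hypothetical helper: assumes the 'username = Name <email>' layout.
    """
    lines = []
    for username in sorted(authors):
        name, email = authors[username]
        # Unknown addresses stay as a visible placeholder to be filled in later
        lines.append(f"{username} = {name} <{email or 'EMAIL-NEEDED'}>")
    return lines


# Made-up entries, not real OpenG authors
example = {
    "jdoe": ("Jane Doe", None),
    "asmith": ("A Smith", "a@example.com"),
}
print("\n".join(authors_file_lines(example)))
```

Leaving a loud placeholder rather than guessing an address makes it easy to see which entries still need an email before the conversion is rerun.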

OpenG Library Exported to Github

Michael Aivaliotis replied to Michael Aivaliotis's topic in OpenG Developers

I followed these instructions: https://www.atlassian.com/git/tutorials/migrating-overview

I did that because my goal initially was to export to Bitbucket, which I did. Then I changed my mind and decided that GitHub would be better for a community project like this. Since it was already in Git format, it was simple to select "import" on the GitHub website and just point to the Bitbucket URL. Note: Those instructions work best if executed from a Linux machine. I quickly spun up an Ubuntu VM to do this. -

I've exported the OpenG sources from SourceForge SVN to GitHub. It's located here: https://github.com/Open-G

I'm hoping this will encourage collaboration and modernization of the OpenG project. Pull requests are a thing with Git, so contributions can be encouraged and actually used instead of dying on the vine.

-

[CR] Hooovahh Array VIMs

Michael Aivaliotis replied to hooovahh's topic in Code Repository (Uncertified)

Not having looked at your code, do you think this should go into OpenG? How can we improve OpenG? Where is the OpenG repo? -

Poll on Architecture and Frameworks

Michael Aivaliotis replied to drjdpowell's topic in Application Design & Architecture

I'm glad to see all these frameworks being bashed about. I like to read opinions from people who have tried the various frameworks and can compare based on real implementations, not example code. Just came back from the US CLA summit (videos being posted to LabVIEW Wiki soon). Apparently there's YAF (Yet Another Framework) being used by Composed Systems, and it was presented at the CLA summit: https://bitbucket.org/composedsystems/mva-framework/src/master/ It seems to be an actor-framework extension. Framework on top of framework? Jon argued that the complexity of a framework is secondary to the ability of a framework to allow certain programming concepts to be used during development, one being separation of concerns. If you look at slide 4, Jon vehemently disagrees with that statement. In other words, you should not look at a complex framework and be afraid of it. Don't focus only on how easy it is to learn or get going with it. Question to you: what is important in choosing a framework? Here's a link to the slides: https://labviewwiki.org/w/images/2/24/Design_for_Change.pdf -

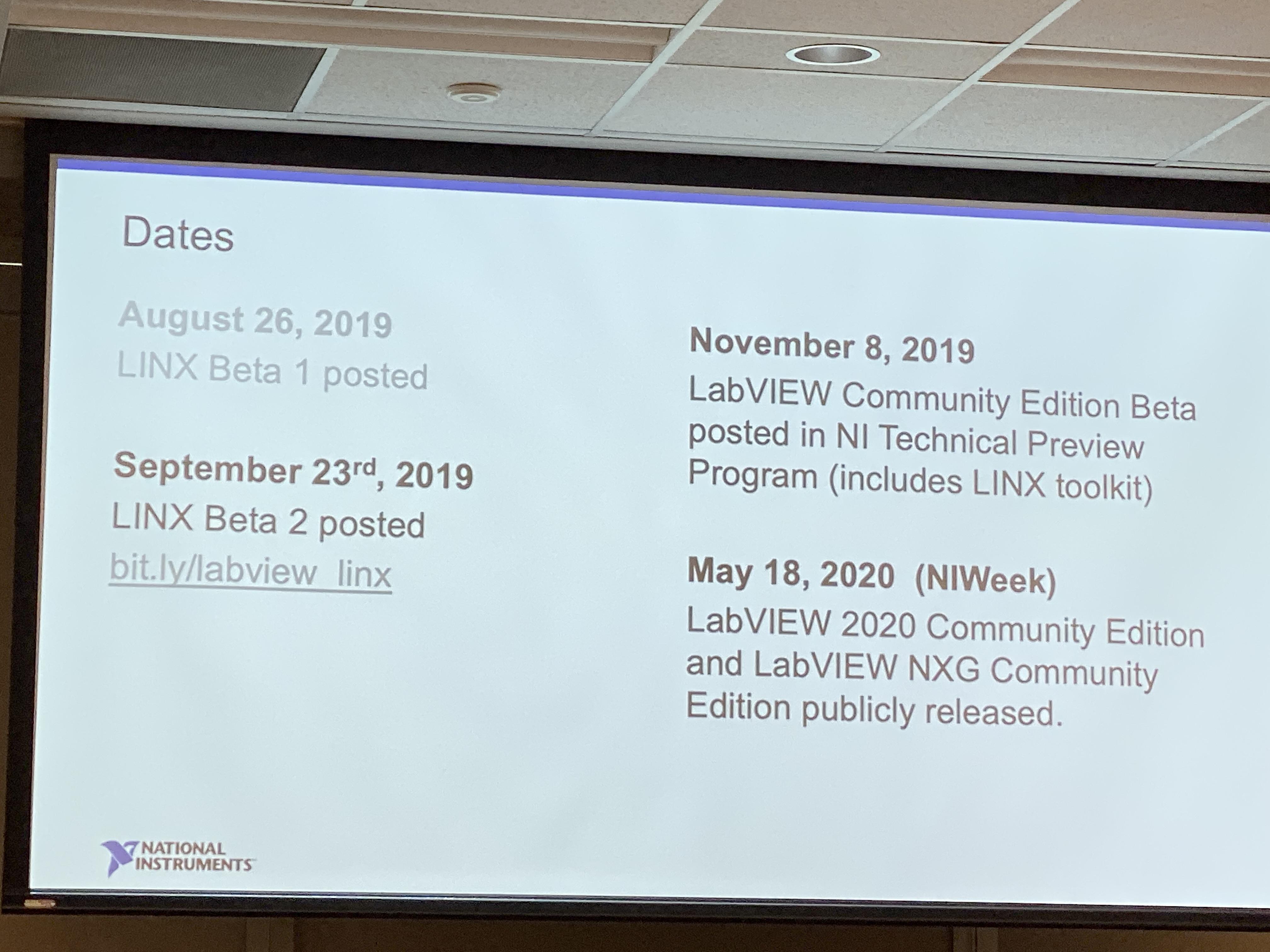

LabVIEW Community Edition Announced

Michael Aivaliotis replied to hooovahh's topic in LabVIEW Community Edition

-

How do you report or delete articles on the wiki?

Michael Aivaliotis replied to LogMAN's topic in Wiki Help

All of this spam was created a long time ago when the site was more vulnerable. The wiki has been locked down since then, and this should not happen again (but some get through). I deleted the first list of pages you mentioned. The uploaded images are tedious to delete because there is no simple tool I've found to do it on a mass scale. Since they're not linked anywhere, they don't do much harm except use up server space. It seems you have time, so I will connect with you directly offline to give you some rights. -

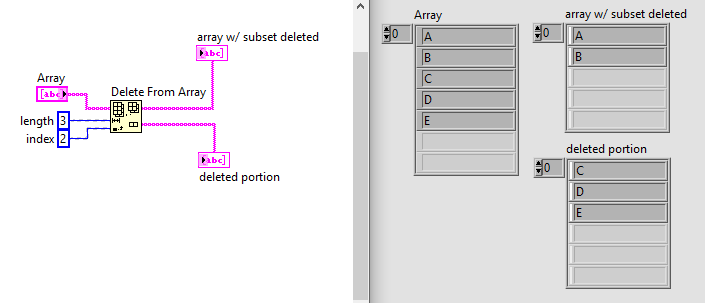

deleting a portion of a string not an array

Michael Aivaliotis replied to rscott9399's topic in LabVIEW General

The OP didn't clarify his requirements so we could all be wrong. But ya, all you guys say is true. - Thanks. My intent was to start the conversation, not to provide the best solution. I just wanted to show how the delete from array works and to provide some visuals. -

deleting a portion of a string not an array

Michael Aivaliotis replied to rscott9399's topic in LabVIEW General

I dunno. Your question is not clear, but let me take a shot. This example deletes the last 3 elements of an array of strings. Don't forget that array indexes start at 0, not 1. -
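Since the block diagram image doesn't show here, a rough text-based analogue in Python may help. This is only a sketch of the same idea, not LabVIEW code: `delete_from_array` is a made-up helper that mimics the index and length inputs, and it returns both the remaining array and the deleted portion.

```python
def delete_from_array(items, index, length=1):
    """Return (remaining, deleted) without modifying the input list.

    Hypothetical helper: index is zero-based, length is the number of
    elements to remove starting at index.
    """
    deleted = items[index:index + length]
    remaining = items[:index] + items[index + length:]
    return remaining, deleted


names = ["a", "b", "c", "d", "e"]
# To drop the last 3 elements, start at index (size - 3); with 5 elements that is index 2
remaining, deleted = delete_from_array(names, len(names) - 3, 3)
print(remaining)  # ['a', 'b']
print(deleted)    # ['c', 'd', 'e']
```

Because indexes start at 0, the third-from-last element of a 5-element array sits at index 2, not 3.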

Where are OpenG Product Pages for packages?

Michael Aivaliotis replied to wildcatherder's topic in OpenG General Discussions

@Tim_S, the OP makes a valid point. All you say is true, however, the link remains broken and the person packaging the OpenG tools should either fix the link and provide the documentation, or remove the link from the package build. -


-

Mark of course has the originals and always will. So that's not an issue. However, nobody cares about some LabVIEW videos. Yes, that's a great idea. It's a wiki so anyone can add them if needed. Feel free to contact them or edit the page. That's because the videos are unlisted. We are doing this so that in order to view the videos you have to go to the wiki page as the entry point. This way they are kept within the community. This was the compromise to balance accessibility. We can deal with that issue if we hit it, which I doubt we will. Considering the videos are unlisted and we have approval by the presenters.

-

Hey folks, this year we're trying something new. All videos for NIWeek 2019 can be found here: https://labviewwiki.org/wiki/NIWeek_2019 Feedback welcome. Thanks to @Mark Balla and other volunteers for recording the videos. Edit: We're starting to add the back catalog to YouTube. NIWeek 2018 videos are also up.

-

Where do I view old lavausergroup.org threads?

Michael Aivaliotis replied to Sparkette's topic in Site Feedback & Support

I don't have the time or desire to figure out how to automate this. But it's cool that trick works. -

Communication issues EtherCAT cRIO 9039

Michael Aivaliotis replied to EtherCAT_slave's topic in Real-Time

Is this error message coming from a VI that you are running on the RT target? Have you tried using the NI Distributed System Manager? I use that to debug EtherCAT issues. When you switch to Active mode using NI DSM, it works pretty consistently. Unfortunately the NI EtherCAT driver is a load of poop. A soft reboot of the controller usually fixes things up. -

Where do I view old lavausergroup.org threads?

Michael Aivaliotis replied to Sparkette's topic in Site Feedback & Support

No. -

Where do I view old lavausergroup.org threads?

Michael Aivaliotis replied to Sparkette's topic in Site Feedback & Support

I have no idea. That seems to be a URL in the old format, which died a long time ago. The post probably still exists but was converted to a new URL format, and there is no redirect. -

What happens when you create a page? Do you get an error or something?