Everything posted by Rolf Kalbermatter

-

Neat trick, but that's for .Net assemblies only and won't work for standard DLLs, will it? I use Dependency Checker for that. It's an old tool that hasn't been updated to deal well with some newer Windows features and gets somewhat confused about DLLs of a different bitness than itself, but it is usually enough to see the non-standard system DLLs and their dependencies. Anything like kernel32.dll etc. you shouldn't have to worry about anyhow.

-

I think it is a little far-fetched to request full VIPM feature completeness from any new package management system. But NIPM was more an NI attempt to do something along the lines of MSI than VIPM, despite their naming choice and marketing hype. As such it hasn't supported, and still doesn't really support, most things that VIPM (and OGPM before it) were specifically built for. It was just NI's way to ditch the MSI installer technology, which got very unwieldy and can't really handle NI software package installs well. (I find it intolerable that a software installation for the LabVIEW suite with moderate driver support takes anything from 4 to 6 hours on a fairly modern computer. That's some 6GB of software to install and shouldn't take that long ever!) Also, MSI can't ever be used to support realtime installations, as it is totally built on COM/ActiveX technology, which is a total no-go for anything but Windows platforms. Unfortunately NIPM had and still has many problems even in the area it was actually built for. It often doesn't work well when you try to install a new LabVIEW version alongside a previous one. That was something that worked pretty well at least with the old MSI installers. And yes, despite its name it doesn't really have any VIPM or GPM capabilities specifically targeted at LabVIEW library distributions, and the configuration part to build such packages is very lacking. I suppose they were eventually planning on making NIPM the underlying part of installing software through System Link and not giving it any user-facing interface at all, but had to do something in the short term. As it is, it doesn't look to have enough features for anything but NI distributing their own software installers.

-

ITreeView activex library

Rolf Kalbermatter replied to Bjarne Joergensen's topic in Calling External Code

If it is in SysWOW64 then it is a 32-bit library. System32 contains 64-bit binaries on 64-bit Windows systems. On 32-bit systems System32 contains 32-bit binaries and SysWOW64 doesn't exist!

-

Huuu? If that were the case you wouldn't need a string control or a text table. Just about every other control except booleans and numerics has some form of text somewhere by default, plus properties to change the caption of controls, axis labels, etc. In that case adding Unicode support would indeed have been a lot easier. But the reality is very different! Also, your conversion tool might have been pretty cool, but converting between a few codepages wouldn't have made a difference. If you have a text that comes from one codepage you are bound to have characters that you simply can't convert into other codepages, so what to do about that? LabVIEW for instance links dynamically to files based on file names. How to deal with that? The OS does one type of conversion depending on the underlying filesystems involved and a few other parameters, and there is no loss only if both filesystems fully support Unicode and any transfer method between the two is fully Unicode transparent. That certainly wasn't always true even a few years back. Then the Unicode (if it was even fully preserved) is translated back to whatever codepage the user has set his system to, for applications like LabVIEW which use the ANSI API. Windows helpfully translates every character it can't represent in that codepage into a question mark, except that this character is officially not allowed in path names. LabVIEW stores filenames in its VIs, and if LabVIEW used a self-cooked conversion it would be bound to produce some conversions that differ from what Windows or your Linux system might come up with. Even the Windows Unicode translation tables contained and still contain deviations from the official Unicode standard. They are not fully transparent when compared for instance to implementations like ICU or libiconv. And they probably never will be completely, because Microsoft is bound to legacy compatibility just as much, and changing things now would burn some big customers.
And that is just the problem of filenames. There are many, many more such areas for which there are no really clean solutions. In many cases no solution is better than a half-baked one that might make you feel safe only to let you fall badly on your nose. The only fairly safe solution is to go completely Unicode. Any other solution either falls immediately flat on its nose (e.g. codepage translation) or has been superseded by Unicode and is not maintained anymore. That's the reality. And just for fun, even Unicode can be tricky when it comes to collation, for instance. Comparing strings just on codepoints is a sure way to fail, as you have so-called combining (non-spacing) codepoints that together with other codepoints can form a character. Except that Unicode also contains single codepoints for many of these characters. Looking at the binary representation the strings surely look different, but logically they are not! I'm not even sure Windows uses any collation when trying to locate filenames. If it doesn't, it might be unable to find a file based on a path name even though the name stored on disk and visible for instance in Explorer looks textually exactly the same as the name you passed to the file API. But without proper collation they simply are not the same and you would get a File Not Found error! WTF, the file is right there in Explorer! As to solving encoding when interfacing to external interfaces (instrument control, network, file IO, etc.), there are indeed solutions for that, by specifying an encoding at these interfaces. But I haven't seen one that really convinced me as easy to use.
Java (and even .Net, which was initially just a not-a-Sun version of Java from Microsoft) for instance uses a string to indicate the encoding to use, but that string has traditionally not been very well defined. There are various variants that mean basically the same but look very different, and the actual support that comes standard with Java is pretty limited, since it has to work on many different platforms that might have very little to no native support for this. .Net has since gained a lot more support, but that hasn't made it simpler to use. And yes, the fact that LabVIEW used to be multiplatform didn't make this whole business any easier to deal with. While you could sell to customers that ActiveX and .Net simply were technically impossible on platforms other than Windows, that wouldn't have fared well with things like Unicode support and many other things. Yet the underlying interfaces are very different on the different platforms and in some cases even conceptually different.
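To make the point about combining codepoints concrete, here is a minimal sketch in C. It only shows that the two UTF-8 encodings of "é" differ bytewise; the names `kPrecomposed`, `kDecomposed` and `same_bytes` are made up for this illustration. A real comparison would first have to bring both strings into the same Unicode normalization form, for instance with a library such as ICU, which is not shown here.

```c
#include <assert.h>
#include <string.h>

/* "é" as the single precomposed code point U+00E9, encoded in UTF-8 */
static const char kPrecomposed[] = "\xC3\xA9";

/* "é" as 'e' (U+0065) followed by the combining acute accent U+0301 */
static const char kDecomposed[] = "e\xCC\x81";

/* Naive byte-wise comparison, which is what a plain strcmp() on file names does */
static int same_bytes(const char *a, const char *b)
{
    assert(a != NULL && b != NULL);
    return strcmp(a, b) == 0;
}
```

Both strings render as the same character, yet `same_bytes(kPrecomposed, kDecomposed)` reports a mismatch, which is exactly the File Not Found scenario described above.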

-

That's not as easy even if you leave out platforms other than Windows. In the old days Windows did not have support preinstalled for all possible codepages, and I'm not sure it does even nowadays. Without the necessary translation tables it doesn't help to know what codepage text is stored in, so translation into something else is not guaranteed to work. Also, the codepage support as implemented in Windows does not allow you to display text in a different codepage than the currently active one, and even if you could switch the current codepage on the fly, all text previously printed on screen in another codepage would suddenly look pretty crazy. Microsoft initially supported Unicode only on the Windows NT platform (which wasn't initially supported by LabVIEW at all). Only around 2000 did they add a Unicode shim for the Windows 9x versions (which were 32-bit like Windows NT but with a somewhat Windows 3.1 compatible 16/32-bit kernel), through a special library called Unicows (probably for Unicode for Windows Subsystem) that you could install. Before that, Unicode was not even available on Windows 95, 98 and ME, which was the majority of platforms LabVIEW was used on after 3.1 was kind of dying. LabVIEW on Windows NT was hardly used, even though LabVIEW was technically the same binary as for the Windows 9x versions. But the hardware drivers needed were completely different and most manufacturers other than NI were very slow to start supporting their hardware on Windows NT. Windows 2000 was the first NT version that saw a little LabVIEW use, and Windows XP was the version where most users definitely abandoned Windows 9x and ME for measurement and industrial applications. That only would have worked if LabVIEW for Windows used the UTF-16 API internally everywhere, which is the only Windows API that allows displaying any text on screen independent of codepage support, and this was exactly one of the difficult parts to get changed in LabVIEW.
LabVIEW is not a simple notepad editor where you can switch the compile define UNICODE on and suddenly everything is using the Unicode APIs. There are deeply ingrained assumptions that entered the code base in the initial porting effort, which used the 32-bit DOS extended Watcom C to target the 16-bit Windows 3.1 system that only had codepage support and no Unicode API whatsoever. Neither had the parallel Unix port for SunOS, which was technically Unix SVR4 but with many special Sun modifications, adaptations and special borks built in. It still eventually allowed releasing a Linux version of LabVIEW without having to write an entirely new platform layer, but even Linux didn't have working Unicode support initially. It took many years before that was sort of standard in Linux distributions, and many more years before it was stable enough that Linux distributions started to use UTF-8 as the standard encoding rather than the C runtime locales so nicely abbreviated as EN-en and similar, which had no direct mapping to codepages at all. But Unix, while not having any substantial Unicode support for a long time, eventually went a completely different path to support Unicode than Microsoft had. And the Mac port only learned to have useful Unicode support after Apple eventually switched to their BSD based MacOS X. And neither of them really knew anything about codepages at all, so a VI written on Windows and stored with the actual codepage inside would have been just as unintelligible for those non-Windows LabVIEW versions as it is now. Also, in true Unix (Linux) fashion they couldn't of course agree on one implementation for a conversion API between different encodings, so there were multiple competing ones such as ICU and several others.
Eventually the libc also implemented some limited conversion facility, although it does not allow you to convert between arbitrary encodings but only between widechar (usually 32-bit Unicode) and the currently active C locale. Sure, you can change the current C locale in your code, but that is process global, so it also affects how libc will treat text in other parts of your program, which can be a pretty bad thing in multithreading environments. Basically your proposed codepage storing wouldn't work at all for non-Windows platforms, and even under Windows it has, and certainly had in the past, very limited merit. Your reasoning is just as limited as the original choice of NI was when they had to come up with a way to implement LabVIEW with what was available then. Nowadays the choice is obvious and UTF-8 is THE standard to transfer text across platforms and over the whole world, but UTF-8 only became a viable and widely used feature (and, because it was used, also a tested, tried and many times patched one that works as the standard had intended) in the last 10 to 15 years. At that time NI was starting to work on a rewrite of LabVIEW which eventually turned into LabVIEW NXG.
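The libc limitation mentioned above can be sketched as follows. This is only an illustration using the standard C functions `setlocale()` and `mbstowcs()`; the helper name `locale_to_wide` is invented here. Note how the helper has to switch the process-wide locale and restore it afterwards, which is exactly what makes this approach dangerous when other threads do text processing at the same time.

```c
#include <assert.h>
#include <locale.h>
#include <stdlib.h>
#include <string.h>
#include <wchar.h>

/* Convert a multibyte string, assumed to be in the encoding of the locale
   `locale_name`, into wide characters. Returns the number of wide characters
   written, or (size_t)-1 on failure. NOT thread safe: the locale is process global. */
static size_t locale_to_wide(const char *locale_name, const char *src,
                             wchar_t *dst, size_t dst_len)
{
    char saved[128];
    const char *cur;
    size_t n;

    assert(locale_name != NULL && src != NULL && dst != NULL);

    /* Query the current locale and copy its name, as the returned
       string may be overwritten by the next setlocale() call */
    cur = setlocale(LC_CTYPE, NULL);
    if (!cur || strlen(cur) >= sizeof(saved))
        return (size_t)-1;
    strcpy(saved, cur);

    /* Switch the whole process to the requested locale */
    if (!setlocale(LC_CTYPE, locale_name))
        return (size_t)-1;

    n = mbstowcs(dst, src, dst_len);

    /* Restore the previous locale for the rest of the program */
    setlocale(LC_CTYPE, saved);
    return n;
}
```

Which locale names are accepted besides "C" depends entirely on what is installed on the system, so a conversion that works on one machine may fail on another.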

-

No, Classic LabVIEW doesn't and it never will. It assumes a string to be in whatever encoding the current user session has. For most LabVIEW installations out there that's codepage 1252 (over 90% of LabVIEW installations run on Windows, and most of them on Western Windows installations). When LabVIEW Classic was developed (around the end of the 1980s), codepages were the best thing out there that could be used for different installations, and Unicode didn't even exist. The first Unicode proposal is from 1988 and proposed a 16-bit Unicode alphabet. Microsoft was in fact an early adopter and implemented it for its Windows NT system as a 16-bit encoding based on this standard. Only in 1996 was Unicode 2.0 released, which extended the Unicode character space to 21 bits. LabVIEW does support so-called multibyte character encodings as used for many Asian codepages, and on systems like Linux, where nowadays UTF-8 (in principle also simply a multibyte encoding) is the standard user encoding, it supports that too, as this is transparent in the underlying C runtime. Windows doesn't let you set your ANSI codepage to UTF-8 however, otherwise LabVIEW would use that too (although I would expect that there could be some artefacts somewhere from assumptions LabVIEW makes when calling certain Windows APIs that might not match how Microsoft would have implemented the UTF-8 emulation for its ANSI codepage). By the time the Unicode standard was mature and the various implementations on the different platforms were more or less working, LabVIEW's 8-bit character encoding based on the standard encoding was so deeply ingrained that full support for Unicode had turned into a major project of its own. There were several internal projects to work towards that, which eventually turned into a normally hidden Unicode feature that can be turned on through an INI token.
The big problem with that was that the necessary changes touched just about every piece of code in LabVIEW somehow, and hence this Unicode feature does not always produce consistent results for every code path. Also there are many unsolved issues where the internal LabVIEW strings need to connect to external interfaces. Most instruments for instance won't understand UTF-8 in any way, although that problem is one of the smaller ones, as the character set used is usually strictly limited to 7-bit ASCII, and there the UTF-8 standard is basically byte for byte compatible. So you can dig up the INI key and turn Unicode on in LabVIEW. It will give extra properties for all control elements to set them to use Unicode text interpretation for almost all text (sub)elements, but the support doesn't for instance extend to paths and many other internal facilities unless the underlying encoding is already set to UTF-8. Also, strings in VIs, while stored as UTF-8, are not flagged as such, as non Unicode enabled LabVIEW versions couldn't read them. That creates the same problem you have with VIs stored on a non-Western codepage system when you try to read them on a system with a different encoding. If Unicode support is an important feature for you, you will want to start to use LabVIEW NXG. And exactly because of the existence of LabVIEW NXG there will be no effort put into LabVIEW Classic to improve its Unicode support. To make it really work you would have to rewrite large parts of the LabVIEW code base substantially, and that is exactly what one of the tasks for LabVIEW NXG was about.
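As a small illustration of the 7-bit ASCII compatibility mentioned for instrument communication, the following sketch checks whether a command buffer stays within 7-bit ASCII, in which case its bytes are the same whether you consider it ASCII or UTF-8. The function name `is_ascii7` is made up for this example and is not part of any LabVIEW or VISA API.

```c
#include <assert.h>
#include <stddef.h>

/* Returns 1 if every byte in the buffer is 7-bit ASCII (0x00..0x7F), else 0.
   Such a buffer is byte for byte identical in ASCII and in UTF-8. */
static int is_ascii7(const unsigned char *buf, size_t len)
{
    size_t i;

    assert(buf != NULL || len == 0);
    for (i = 0; i < len; i++)
    {
        if (buf[i] & 0x80)
            return 0;   /* high bit set: part of a multibyte or codepage specific character */
    }
    return 1;
}
```

A typical query such as "*IDN?\n" passes this check, while a string containing for instance a UTF-8 encoded micro sign does not and would need an explicit encoding decision before being sent.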

-

The word hidden in there makes it look very exciting but the reality is that it is simply the toolbar buttons that are made invisible in the VI Properties. Apparently there is an enum that describes all possible permutations with individual enum labels and that enum gets also saved to disk but for performance reasons they decided to rather have a bitmask field to use at runtime. So this "routine" converts from the enum to the bitfield. There should most likely be another routine that does the reverse, most likely by running through the gButtonsHidden global array and comparing sr_field14 with its values and storing the found index in sr_field22, or more likely that routine is directly called in the SaveVI routine to generate the right enum value to store in the SAVERECORD version. Why so complicated you may ask? Well the backsaving feature makes all kinds of complications necessary in order to be able to store VI files in older formats.
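Purely as an illustration of the enum to bitmask idea described above, here is a sketch of such a lookup table together with the reverse search. Everything in it is hypothetical: the bit names, the table contents and the function names are invented for this example and do not reflect the real gButtonsHidden array or the actual LabVIEW data structures.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical runtime bit flags, one per toolbar button */
enum { kHideRun = 1u << 0, kHideAbort = 1u << 1, kHideContinuous = 1u << 2 };

/* Hypothetical table: the index is the enum value as saved on disk,
   the entry is the bitmask used at runtime */
static const uint32_t kButtonsHidden[] = {
    0,
    kHideRun,
    kHideAbort,
    kHideContinuous,
    kHideRun | kHideAbort,
    kHideRun | kHideContinuous,
    kHideAbort | kHideContinuous,
    kHideRun | kHideAbort | kHideContinuous
};
#define kNumButtonsHidden (sizeof(kButtonsHidden) / sizeof(kButtonsHidden[0]))

/* Load direction: saved enum value -> runtime bitmask */
static uint32_t buttons_enum_to_mask(size_t enumValue)
{
    return enumValue < kNumButtonsHidden ? kButtonsHidden[enumValue] : 0;
}

/* Save direction: runtime bitmask -> enum value, or -1 if there is no match */
static int buttons_mask_to_enum(uint32_t mask)
{
    size_t i;
    for (i = 0; i < kNumButtonsHidden; i++)
    {
        if (kButtonsHidden[i] == mask)
            return (int)i;
    }
    return -1;
}
```

The table keeps the on-disk format stable for backsaving, while the runtime code can test individual bits cheaply.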

-

A USB device implementing the TMC class! That looks very much like the underlying USB session itself somehow got borked. Once VISA calls into the Windows USB driver it can only hope that this driver will return within a reasonable time. If the driver detects some problem with the hardware resource it can just hang there, and VISA can only wait until the Windows driver decides it wants to return. Unfortunately the fact that it is a Thorlabs device (from the VID and PID in the USB session name it must be a PM320E Optical Power and Energy Meter) doesn't increase my confidence in the actual hardware interface. Their physical hardware is amazing in what it can do and does; their electronics and firmware are a lot less impressive. Personally I guess some previous command you sent hasn't been fully processed by the device, or you haven't read the entire response, or haven't reset a status flag in the device, or something like that. These things all shouldn't be able to lock up the USB interface, but they can and sometimes do.

-

(((*sr)->field_4 & 0xFF0000) >> 8) | ((unsigned int)(*sr)->field_4 >> 24) | (((*sr)->field_4 & 0xFF00) << 8) | ((*sr)->field_4 << 24); This is simply the Big Endian to Little Endian swapping. All numeric values in the VI data structures are stored as Big Endian, since they need to be independent of the CPU architecture. On loading into memory on Little Endian platforms they have to be swapped. (That is currently all platforms but VxWorks for the PowerPC real-time targets, but in the past MacOS Classic (68k and PPC), Mac OS X for PPC, Sun Sparc and I believe HP PA RISC were all Big Endian versions of LabVIEW.) Basically this is a pretty unexciting routine. It just copies the data structure from the memory stream on disk into a real data structure in memory, accounts for endian swapping on relevant platforms and massages some flags into a different format. The in-memory version of the INSTRUMENT data structure changed considerably with basically every single LabVIEW version, even between minor LabVIEW versions, so trying to access the fields in that structure at runtime is a very version dependent adventure.

-

Coining a phrase: "a left-handed scissors feature"

Rolf Kalbermatter replied to Aristos Queue's topic in LabVIEW General

Old fashioned it may be, but you seem to have a surprisingly high trust in Microsoft, Google, Amazon and Co. to not only be able to secure their infrastructure beyond any doubt but also to never turn evil from inside and scan your files no matter what. Ohh wait, Google does that already: the user rights document you have to agree to in order to use their cloud services specifically states that they are allowed to scan your documents for service improvement purposes. In terms of Google that also means improving their ad business revenues; it's after all still their core business, with which they grew into the multi-multi-billion $$ business they are nowadays. Sure, they have other business diversifications that start to get important too, but the "Don't be evil" slogan from early-days Google has long been abolished. 😄 Microsoft, Amazon and all the rest are businesses too, and when they believe they can get away with something to improve the revenue numbers under the final accounting line they will do it, as is their holy duty in the name of our modern day golden calf called shareholder value! 😄 But the real danger is not only evil intent, it is simply neglect! There has not been a single week in recent years in which some server out there wasn't discovered to expose very sensitive data to the broad, lovely and always friendly internet. Cloud services being the core business of those business units of course makes these companies try hard to avoid such issues, but errors often lie in very small corners and are so easily made. The System Link cloud service being in fact outsourced to a real cloud service (NI doesn't want to go into running their own cloud service business anytime soon) doesn't make that less likely. It's simply one more intermediary between your client, you, NI and the cloud service that can add a critical error source.

-

DLL Linked List To Array of Strings

Rolf Kalbermatter replied to GregFreeman's topic in Calling External Code

You completely borked it! An array of clusters is not an array of pointers to a C struct! It's simply an array of clusters all inlined in one single memory block! Look at resize_array_handle_if_required() and what you try to do in there! The first NumericArrayResize() should not allocate n * uPtr anymore but rather use a type of uB and allocate n * sizeof(DevInfo). Of course you still need to allocate any string handles inside the struct, but that would be done in:

```c
static MgErr add_dev_info_to_labview_dev_info(DevInfo* pDevInfo, const IIR_USB_RELAY_DEVICE_INFO_T* info)
{
    MgErr err = mgNoErr;
    int32 len = (int32)strlen(info->serial_number);

    pDevInfo->iir_usb_relay_device_type = info->type;
    /* Allocate or resize the string handle inside the cluster to hold the serial number */
    err = NumericArrayResize(uB, 1, (UHandle*)&(pDevInfo->serial_number), len);
    if (!err)
    {
        MoveBlock(info->serial_number, LStrBuf(*(pDevInfo->serial_number)), len);
        LStrLen(*(pDevInfo->serial_number)) = len;
    }
    return err;
}
```

Nobody said C programming was easy at all. That is why we all use LabVIEW! In LabVIEW you can concentrate on the actual problem rather than having to go byte counting and using malloc() and free() (or the LabVIEW memory manager equivalents thereof) all over the place. Also, now you absolutely, really and truly need to make sure to wrap the typedef for the DevInfo structure with the lv_prolog.h and lv_epilog.h include statements. Otherwise memory alignment for the 32-bit version of your DLL will play bad havoc with what the C compiler thinks the array should look like versus what LabVIEW expects, unless you happen to have defined the device_type element to be an int32.

-

Ah, the so-called system color indices. Forgot about them. They are determined on startup based on the current OS scheme, or possibly when Windows sends a system message that the OS scheme has been changed.

-

Well, a color in LabVIEW is simply an int32 with the lower 24 bits being an RGB color when the most significant byte is 0. There are a few special constants when the most significant byte is exactly 1, with the 0x1000000 value indicating transparent, 0x1000001 being a special type for the most recent color, and 0x1000002 indicating an invalid color. I don't think there are any other values above 0x1000002 that have any meaning in LabVIEW. The color box is a control with a special display logic that shows the transparent color with the T on top. It is meant as a color chooser more than a visual object, and if it just displayed transparent as, well, transparent, it would be hard for a user to know that it is a special color. You can do that with a boolean whose color is set to transparent if you need to. The boolean doesn't have special logic to display the T to indicate that it is transparent.

-

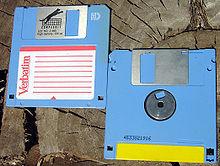

LabVIEW 2.5 was distributed on 3.5" floppy disks, about 4 or 5 of them in 1.44MB high density format. I ditched them several years ago, as it is pretty hard to find a drive to read them nowadays. Later releases came on substantially more floppy disks. I don't think CD-ROM was an option before LabVIEW 6.0.

-

Thousands of releases? I kind of doubt it. Leaving out LabVIEW prior to the multiplatform version (2.2.x and earlier, which were Macintosh only) there have been 2.5, 3.0, 3.1, 4.0, 5.0, 5.1, 6.0, 7.0, 7.1, 8.0, 8.2, 8.5, 8.6, 2009, 2010, 2011, 2012, 2013, 2014, 2015, 2016, 2017, 2018 and 2019 releases so far. Of each of them there was usually one and rarely two maintenance releases, and of each maintenance release between 1 and 8 bug fix releases. This probably only amounts to about 100 releases in total, and maybe another 50 for beta releases of these versions (a beta usually has 2 to 4 intermediate releases, although that tends to be no more than 2 in the last 10 years or so). I'm not aware of any LabVIEW release that had the debug symbols exposed. PDBs were used even in Microsoft Visual C 6.0, probably the first version that NI used to create a released LabVIEW version. (NI only switched to Microsoft C for the standard 32-bit builds for Windows NT; the Windows 3.1 versions of LabVIEW were created using Watcom C 10.x, which was the only compiler able to create full 32-bit executables that could run on 16-bit Windows 3.1 through the built-in DOS extender runtime.) Microsoft makes this pretty hard to happen by accident anyhow, as such DLL/EXE files would normally link to the debug version of the Microsoft C Runtime library, and you can't legally install that on a computer without installing the entire Microsoft C Compiler contained in the Visual Studio software. There is definitely no downloadable installer for the debug version of the C runtime engine. The only early leak I'm aware of was that the original LabVIEW 2.5 prerelease contained a huge extcode.h file in the cintools directory that showed much more than the officially documented LabVIEW manager functions.
About half of it was still pretty much unusable, as you needed other functions that were not exposed in there to make use of some of the features. A lot of those functions were removed from the exported functions around LabVIEW 4.0 and 5.0, as they were considered obsolete or undesirable pollution of the exported symbols list, but the file did contain a few interesting functions that are still exported from the LabVIEW kernel but not declared in the current extcode.h file. They fixed that extcode.h bug before the official release of LabVIEW 3.0, which was the first non-beta version of LabVIEW running on computers other than Macintosh. (2.5 was basically a beta release, called a prerelease version, to have something to show for NI Week 1992 that runs on Windows 3.1, and there was a 2.5.1 and I believe a 2.5.2 bug fix release of it in 1993.) Also, lvrt.dll is a development that only got introduced around LabVIEW 6.0. As this was released in 2000, it most likely used at least Microsoft Visual Studio C++ 6.0. Before that the application builder concatenated the generated runtime LLB to a stub executable that contained the entire LabVIEW runtime engine. That was a pretty neat feature as it created a single file executable, but as LabVIEW was extended and more and more functionality was implemented in external files and DLLs that get linked dynamically, this was pretty much unmaintainable in the long run and the entire runtime engine was externalized.

-

Coining a phrase: "a left-handed scissors feature"

Rolf Kalbermatter replied to Aristos Queue's topic in LabVIEW General

Part of these is the Macintosh legacy. You could make a case that Microsoft is at fault, as they had to invent differences to the Mac, either for the sake of being different or maybe also to not give too much fodder for a court case about plagiarism.

-

DLL Linked List To Array of Strings

Rolf Kalbermatter replied to GregFreeman's topic in Calling External Code

There were a few errors in how you created the linked list. I did this and did a quick test and it seemed to work. The junk in the debugger is expected. The debugger does not know anything about LabVIEW Long Pascal handles and that they contain an int32 cnt parameter in front of the string and no NULL termination character. So it sees a char array element and tries to interpret it until it sees a NULL value, which is of course more or less beyond the actual valid information.

```c
#include "extcode.h"
#include <stdlib.h>
#include <string.h>

typedef struct IIR_USB_RELAY_DEVICE_INFO
{
    char *serial_number;
    struct IIR_USB_RELAY_DEVICE_INFO *next;
} IIR_USB_RELAY_DEVICE_INFO_T;

static IIR_USB_RELAY_DEVICE_INFO_T* iir_usb_relay_device_enumerate()
{
    int i, len;
    IIR_USB_RELAY_DEVICE_INFO_T *ptr = NULL, *deviceInfo = NULL;
    const char* sn[] = { "abcd", "efgh", "ijkl", NULL };

    for (i = 0; sn[i]; i++)
    {
        IIR_USB_RELAY_DEVICE_INFO_T* info = (IIR_USB_RELAY_DEVICE_INFO_T*)malloc(sizeof(IIR_USB_RELAY_DEVICE_INFO_T));
        len = (int32)strlen(sn[i]) + 1;
        info->serial_number = (char*)malloc(len);
        memcpy(info->serial_number, sn[i], len);
        info->next = NULL;
        if (!deviceInfo)
        {
            deviceInfo = info;
        }
        else
        {
            ptr->next = info;
        }
        ptr = info;
    }
    return deviceInfo;
}

static void iir_usb_relay_device_free_enumerate(IIR_USB_RELAY_DEVICE_INFO_T *deviceInfo)
{
    IIR_USB_RELAY_DEVICE_INFO_T *ptr;

    while (deviceInfo)
    {
        ptr = deviceInfo;
        deviceInfo = deviceInfo->next;
        free(ptr->serial_number);
        free(ptr);
    }
}

#define EXPORT_API __declspec(dllexport)

/* Make sure to wrap any data structure definitions that are passed from and to LabVIEW
   with the two include files that make sure to set and reset the memory alignment to
   what LabVIEW expects for the current platform */
#include "lv_prolog.h"
typedef struct
{
    int32 cnt;
    LStrHandle elm[];
} **LStrArrayHandle;
#include "lv_epilog.h"

/* define a typecode that depends on the bitness of the platform to indicate the pointer size */
#if IsOpSystem64Bit
 #define uPtr uQ
#else
 #define uPtr uL
#endif

MgErr EXPORT_API iir_get_serial_numbers(LStrArrayHandle *arr)
{
    MgErr err = mgNoErr;
    LStrHandle *pH = NULL;
    int len, i, n = (*arr) ? (**arr)->cnt : 0;
    IIR_USB_RELAY_DEVICE_INFO_T *ptr, *deviceInfo = iir_usb_relay_device_enumerate();

    /* This only works reliably if there is guaranteed that the deviceInfo linked list
       won't change in the background while we are in this function! */
    for (i = 0, ptr = deviceInfo; ptr; ptr = ptr->next, i++)
    {
        /* Resize the array handle only in power of 2 intervals to reduce the potential
           overhead for resizing and reallocating the array buffer every time! */
        if (i >= n)
        {
            if (n)
                n = n << 1;
            else
                n = 8;
            err = NumericArrayResize(uPtr, 1, (UHandle*)arr, n);
            if (err)
                break;
        }
        len = (int32)strlen(ptr->serial_number);
        pH = (**arr)->elm + i;
        err = NumericArrayResize(uB, 1, (UHandle*)pH, len);
        if (!err)
        {
            MoveBlock(ptr->serial_number, LStrBuf(**pH), len);
            LStrLen(**pH) = len;
        }
        else
            break;
    }
    iir_usb_relay_device_free_enumerate(deviceInfo);

    /* If we did not find any device AND the incoming array was empty it may be NULL as
       this is the canonical empty array value in LabVIEW. So check that we have not such
       a canonical empty array before trying to do anything with it! It is valid to return
       a valid array handle with the count value set to 0 to indicate an empty array! */
    if (*arr)
    {
        /* If the incoming array was bigger than the new one, make sure to deallocate
           superfluous strings in the array! This may look superstitious but is a very
           valid possibility as LabVIEW may decide to reuse the array from a previous call
           to this function in any Call Library Node instance! */
        n = (**arr)->cnt;
        for (pH = (**arr)->elm + (n - 1); n > i; n--, pH--)
        {
            if (*pH)
            {
                DSDisposeHandle(*pH);
                *pH = NULL;
            }
        }
        (**arr)->cnt = i;
    }
    return err;
}
```

-

DLL Linked List To Array of Strings

Rolf Kalbermatter replied to GregFreeman's topic in Calling External Code

uQ is the LabVIEW typecode for an unsigned 8 byte integer (uInt64). uL is the typecode for a uInt32. These are the sizes of a pointer in the respective environment, and a LabVIEW handle is a pointer! You are right of course. That was a typo! But the real typo is in the declaration: LStrHandle *pH = NULL; The rest of the code is meant to have this variable be a reference to the handle, not the handle itself.

-

DLL Linked List To Array of Strings

Rolf Kalbermatter replied to GregFreeman's topic in Calling External Code

A string array is simply an array handle of string handles! Sounds clear doesn't it? 😄 In reality we didn't just arbitrarily create this data structure but LKSH simply defined it knowing how a LabVIEW array of string handles is defined. You can actually get this definition from LabVIEW by creating the Call Library Node with the Parameter configured to Adapt to Type, Pass Array Handle Pointer, then right click on the Call Library Node and select "Create .c file". LabVIEW then creates a C file with all datatype definitions and an empty function body. -
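As a rough illustration of that nesting, the sketch below rebuilds the layout with stand-in types so it compiles without the LabVIEW headers. All Mock* types and mock_* functions are hypothetical names for this example only, and malloc()/calloc() merely stand in for the LabVIEW memory manager; real code would use LStrHandle and the manager functions from extcode.h.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Stand-in for a LabVIEW string: length prefix followed by the bytes (no NUL). */
typedef struct {
    int32_t cnt;
    unsigned char str[];
} MockLStr, **MockLStrHandle;

/* Stand-in for a 1D string array: element count followed by string handles. */
typedef struct {
    int32_t cnt;
    MockLStrHandle elm[];
} MockLStrArray, **MockLStrArrayHandle;

/* A handle is a pointer to a pointer to the data block. */
MockLStrHandle mock_new_string(const char *text)
{
    size_t len = strlen(text);
    MockLStrHandle h = malloc(sizeof *h);
    if (!h) return NULL;
    *h = malloc(offsetof(MockLStr, str) + len);
    if (!*h) { free(h); return NULL; }
    (*h)->cnt = (int32_t)len;
    memcpy((*h)->str, text, len);
    return h;
}

/* Allocate an array handle with n element slots, all initially NULL. */
MockLStrArrayHandle mock_new_array(int32_t n)
{
    assert(n >= 0);
    MockLStrArrayHandle h = malloc(sizeof *h);
    if (!h) return NULL;
    *h = calloc(1, offsetof(MockLStrArray, elm) + (size_t)n * sizeof(MockLStrHandle));
    if (!*h) { free(h); return NULL; }
    (*h)->cnt = n;
    return h;
}

/* Sum of all string lengths; a NULL array or NULL element counts as empty. */
int32_t mock_total_length(MockLStrArrayHandle arr)
{
    int32_t total = 0;
    if (!arr) return 0;
    for (int32_t i = 0; i < (*arr)->cnt; i++) {
        MockLStrHandle s = (*arr)->elm[i];
        if (s) total += (*s)->cnt;
    }
    return total;
}
```

Note the double dereference everywhere: (*arr)->elm[i] yields a string handle, and (*s)->cnt reaches the length stored inside that string.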

DLL Linked List To Array of Strings

Rolf Kalbermatter replied to GregFreeman's topic in Calling External Code

That entirely depends on the implementation. If the API just returns a pointer to a globally maintained list then there is no way to make this safe unless: 1) It only ever updates this list when calling this function, just before returning the first element pointer. This means that updating this list because of OS events for unplugging devices is not a safe option. 2) It provides some locking such that getFirstDeviceInfo() acquires a lock and you have to unlock the list after you are done, for instance by calling a function unlockDeviceInfo() or similar. Another option is that the API returns a newly allocated list and you need to call a freeDeviceInfo() or similar function every time afterwards to deallocate the entire list. If any of these is true you SHOULD be safe, otherwise there is no way to make it safe. -
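The last option, where the API hands out a freshly allocated list, could look roughly like the sketch below. The device_info struct and the three function names are assumptions made up for this example and are not the API of any real driver; a real library would additionally have to hold its own internal lock while it makes the copy.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical list node, modelled on the serial_number/next fields used above. */
typedef struct device_info {
    char serial_number[16];
    struct device_info *next;
} device_info;

/* Release a list previously returned by copy_device_list(). */
void free_device_list(device_info *list)
{
    while (list) {
        device_info *next = list->next;
        free(list);
        list = next;
    }
}

/* Return a private deep copy that the caller owns; NULL if empty or out of memory. */
device_info *copy_device_list(const device_info *src)
{
    device_info *head = NULL;
    device_info **tail = &head;
    for (; src; src = src->next) {
        device_info *node = malloc(sizeof *node);
        if (!node) { free_device_list(head); return NULL; }
        *node = *src;          /* copy the payload */
        node->next = NULL;     /* but never share links with the source list */
        *tail = node;
        tail = &node->next;
    }
    return head;
}

/* Count the nodes of a list. */
size_t device_list_length(const device_info *list)
{
    size_t n = 0;
    for (; list; list = list->next) n++;
    return n;
}
```

Because the caller walks its own copy, a device being unplugged in the background can no longer pull nodes out from under the loop; the cost is one allocation per device and the obligation to call the free function afterwards.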

DLL Linked List To Array of Strings

Rolf Kalbermatter replied to GregFreeman's topic in Calling External Code

There is a serious problem with this if you ever intend to compile this code for 64 bit. Then alignment comes into play: LabVIEW 32 bit uses packed data structures but LabVIEW 64 bit uses default alignment, so the array of handles requires sizeof(int32) + 4 + n * sizeof(LStrHandle) bytes. More universally it is really:

#define RndToMultiple(nDims, elmSize) (((((nDims) * sizeof(int32)) + (elmSize) - 1) / (elmSize)) * (elmSize))
#if IsOpSystem64Bit || OpSystem == Linux /* probably also || OpSystem == MacOSX */
#define ArrayHandleSize(nDims, nElms, elmSize) (RndToMultiple(nDims, elmSize) + (nElms) * (elmSize))
#else
#define ArrayHandleSize(nDims, nElms, elmSize) ((nDims) * sizeof(int32) + (nElms) * (elmSize))
#endif

But NumericArrayResize() takes care of these alignment troubles for the platform you are running on! Personally I solve this like this instead:

#include "extcode.h"

/* Make sure to wrap any data structure definitions that are passed from and to
   LabVIEW with the two include files that make sure to set and reset the memory
   alignment to what LabVIEW expects for the current platform */
#include "lv_prolog.h"
typedef struct
{
    int32 cnt;
    LStrHandle elm[];
} **LStrArrayHandle;
#include "lv_epilog.h"

/* Define a typecode that depends on the bitness of the platform to indicate the
   pointer size */
#if IsOpSystem64Bit
#define uPtr uQ
#else
#define uPtr uL
#endif

MgErr iir_get_serial_numbers(LStrArrayHandle *strArr)
{
    MgErr err = mgNoErr;
    LStrHandle *pH = NULL;
    deviceInfo_t *ptr, *deviceInfo = getFirstDeviceInfo();
    int len, i = 0, n = (*strArr) ? (**strArr)->cnt : 0;

    /* This only works reliably if it is guaranteed that the deviceInfo linked list
       won't change in the background while we are in this function! */
    for (ptr = deviceInfo; ptr; ptr = ptr->next, i++)
    {
        /* Resize the array handle only in power of 2 intervals to reduce the potential
           overhead for resizing and reallocating the array buffer every time! */
        if (i >= n)
        {
            if (n)
                n = n << 1;
            else
                n = 8;
            err = NumericArrayResize(uPtr, 1, (UHandle*)strArr, n);
            if (err)
                break;
        }
        len = (int32)strlen(ptr->serial_number);
        pH = (**strArr)->elm + i;
        err = NumericArrayResize(uB, 1, (UHandle*)pH, len);
        if (!err)
        {
            MoveBlock(ptr->serial_number, LStrBuf(**pH), len);
            LStrLen(**pH) = len;
        }
        else
            break;
    }
    if (deviceInfo)
        freeDeviceInfo(deviceInfo);

    /* If we did not find any device AND the incoming array was empty it may be NULL
       as this is the canonical empty array value in LabVIEW. So check that we do not
       have such a canonical empty array before trying to do anything with it! It is
       valid to return a valid array handle with the count value set to 0 to indicate
       an empty array! */
    if (*strArr)
    {
        /* If the incoming array was bigger than the new one, make sure to deallocate
           superfluous strings in the array! This may look superstitious but is a very
           valid possibility as LabVIEW may decide to reuse the array from a previous
           call to this function in any Call Library Node instance! */
        n = (**strArr)->cnt;
        for (pH = (**strArr)->elm + (n - 1); n > i; n--, pH--)
        {
            if (*pH)
            {
                DSDisposeHandle(*pH);
                /* Clean out the handle pointer to indicate it was disposed */
                *pH = NULL;
            }
        }
        (**strArr)->cnt = i;
    }
    return err;
}

This is untested code but should give an idea!
-
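To check the alignment arithmetic from the post above with concrete numbers, the following sketch restates the two size formulas as plain functions. The function names and the natural_align flag are hypothetical and only mirror the macros in the post; this is not how LabVIEW allocates internally, and real code should keep relying on NumericArrayResize().

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Round value up to the next multiple of the given step. */
size_t round_to_multiple(size_t value, size_t multiple)
{
    assert(multiple > 0);
    return ((value + multiple - 1) / multiple) * multiple;
}

/* Bytes needed for an array with n_dims int32 dimension sizes followed by
   n_elms elements of elm_size bytes each.
   natural_align != 0: header is padded to a multiple of elm_size
                       (the 64 bit case described above).
   natural_align == 0: packed layout, no padding (the 32 bit case). */
size_t array_handle_size(size_t n_dims, size_t n_elms, size_t elm_size, int natural_align)
{
    size_t header = n_dims * sizeof(int32_t);
    if (natural_align)
        header = round_to_multiple(header, elm_size);
    return header + n_elms * elm_size;
}
```

For a 1D array of three 8 byte handles this gives 4 + 4 + 3 * 8 = 32 bytes with natural alignment, matching the sizeof(int32) + 4 + n * sizeof(LStrHandle) figure, whereas the packed formula applied to the same element size would only yield 28 bytes and leave the last handle partly outside the allocation.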

In the case of the libraries that I contributed to OpenG, I tried to add to the copyright notice the names of everyone who provided more than a trivial bug fix. I also added my own name to a few VIs in other OpenG packages when I felt my change was more than a trivial bug fix.

-

Should I abandon LVLIB libraries?

Rolf Kalbermatter replied to drjdpowell's topic in LabVIEW General

Well there could be two that apply! "Killing me softly" and "Ready or Not, here I come you can't hide" 😀 -

Should I abandon LVLIB libraries?

Rolf Kalbermatter replied to drjdpowell's topic in LabVIEW General

The upgraded LLB will almost certainly never happen. The lvclassp, or whatever it would be called, probably won't either, because you can basically do that today by wrapping one or more lvclasses into a lvlib and then turning that into a lvlibp. While these single file containers are all an interesting feature, they come with many potential problems, as can be seen with lvlibp. Some are unfortunate and could be fixed with enough effort, others are fundamental problems that are hard to almost impossible to really get right. Even Microsoft has been basically unable to plug an archive system like a ZIP archive into its file explorer in a way that feels fully natural and doesn't limit all kinds of operations that a user would expect to be able to do in a normal directory. I'm not saying it's impossible, although the Windows Explorer file system extension interface is basically a bunch of different COM interfaces that are both hard to use right and incomplete and limited in various ways. It is a bit of a bolted on extension, with more extensions bolted on the side whenever the developers found they needed a new feature. It works most of the time, but even the Microsoft ZIP extension has weird issues from using the COM interfaces in certain ways that were not originally intended. It works well enough that Microsoft neither has to spend more time on it to fix real bugs nor has to axe the feature and let users rely on external archive viewers like 7-ZIP, but it is far from seamless. At least for classic LabVIEW I think the time has come where NI won't spend any more time on adding such features. They will limit future improvements to features that can be developed relatively easily for NXG and then backported to classic LabVIEW with little effort. Something like a new file format is not such a thing. 
It would require a rewrite of substantial parts of the current code, and they are pretty much afraid of touching the existing code substantially, as large parts of it are very old code with programming paradigms that are the complete opposite of what they use nowadays with classes and other modern C++ programming features. Basically the old code was written in standard C in ways that were meant to fit into the constrained memory of those days, with various things that completely defy modern programming rules. Was it wrong? No, it was what was necessary to get it to work on the hardware that was available then, without waiting another 10 years to work on the architecture and hoping for hardware capable of running a modern system, using programming paradigms that were not in use anywhere at that time. -

In general you are working here with unreleased, undocumented features in LabVIEW. You should read the Rusty Nails in Attics thread sometime. Basically LabVIEW has various areas that are like an attic. There exist experimental parts, unfinished features and other things in LabVIEW that were never meant for public consumption, either because they are not finished and tested, are an aborted experiment, or are a quick and dirty hack for a tool required for NI internal use. There are ways to access some of them, and the means to do so have been published many times. NI does not forbid anyone to use them, although they do not advertise it. Their stance on them is: if you want to use it, then do, but don't come to us screaming because you stepped on a rusty nail in that attic! The fact that the node has a dirty brown header is one indication that it is a dirty feature.