Everything posted by Rolf Kalbermatter

-

Yep I know! On Linux it is a file ID, except there are at least two different types of identifiers: one is a socket-like one and one is a POSIX file IO one. Mac is nasty. For 32 bit it seemed to be a Carbon API FS number, later changed to the POSIX file IO number for 64 bit. Not sure whether they changed anything for 32 bit too. The differences and uncertainties make it not a safe bet to just ASSume things and HOPE it will always remain like this, sorry. But nooooo, a file refnum is NOT a Windows file handle. Please repeat after me: IT IS NOT! You need the file manager function FRefNumToFD() to retrieve the underlying file descriptor handle. The File primitives do quite a bit more than just calling the corresponding Windows API function. A lot sits in the path resolution, where things like shortcuts are automatically resolved. It won't do anything special about symlinks, and all the Windows APIs except CreateFile() with special flags and GetFileAttributes() are made by Microsoft explicitly to not do special things with symlinks, in the name of maximum backwards compatibility. You need to call special functions to deal with symlinks, and some are still not officially available, such as explicitly reading the target of a symlink.

-

It's called bindings to non native libraries and functionality. It's a standard problem in every programming environment. The perceived difficulty always has a direct relation to the distance between the programming paradigm of the calling environment and that of the callee. In C it is almost non existent, since you have to bother about memory management, thread management, etc, etc. no matter what you try to call. In higher level languages with a managed environment like LabVIEW or .Net, it seems a lot more complicated. It isn't really, but the difference to what you normally have to do in the calling environment is much bigger when calling such non native entities. And each environment has of course a few special subtleties. The one currently causing me a lot of extra work for the OpenG ZIP library is the fact that LabVIEW always has assumed and still does assume that STRING==BYTEARRAY and that encoding does not exist on a LabVIEW platform. A ZIP file can contain encoding information for the stored file names and nowadays regularly does. So the strings that are returned as filenames in an archive need to be treated with care. Except when I then try to turn it into a LabVIEW path to create the file, all that care goes down the drain, as the file path will either alter the name to something else or possibly even attempt to create a file with invalid characters. So the solution is to replace the Open File, Create File and Create Directory functions, along with some others (like Delete File), with my own versions that can handle the paths properly. Great idea, except that LabVIEW does not document and hence does not guarantee how the underlying file system object is mapped into a file refnum. So in order to be safe here I also have to create the Read File, Write File, Close File, File Size and such functions. All doable but serious work to do. I'm basically rewriting a considerable amount of the LabVIEW File Manager and Path Manager functionality.
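
To make the encoding point concrete: the ZIP format marks UTF-8 file names with bit 11 of the general purpose flag field in each entry header. The following is only a minimal sketch of checking that bit on a local file header, not the OpenG ZIP library's actual code; the function name `zip_name_is_utf8` is made up for illustration.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ZIP_FLAG_UTF8 0x0800u   /* general purpose bit 11: name is UTF-8 */

/* Hypothetical helper: inspects the first bytes of a ZIP local file header.
   Returns 1 if the name is flagged as UTF-8, 0 if it is not,
   and -1 if the buffer does not start with a local file header. */
static int zip_name_is_utf8(const unsigned char *hdr, size_t len)
{
    static const unsigned char sig[4] = { 'P', 'K', 0x03, 0x04 };
    uint16_t flags;

    if (len < 8 || memcmp(hdr, sig, sizeof sig) != 0)
        return -1;
    /* The 16-bit flag field sits at offset 6, stored little-endian. */
    flags = (uint16_t)(hdr[6] | (hdr[7] << 8));
    return (flags & ZIP_FLAG_UTF8) != 0;
}
```

When the bit is clear, the name is nominally in the old DOS code page, and in practice often in whatever code page the archiver ran under, which is exactly why the bytes cannot be handed to a path function unmodified.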

-

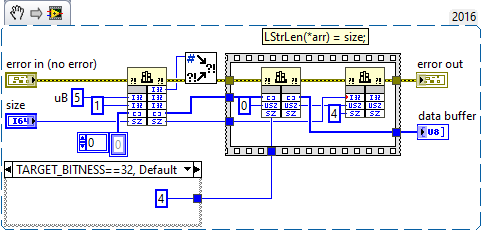

Sssssht! My first version was without that sequence structure and I was for a brief moment wondering if maybe my ability to do the pointer juggling had failed me. After looking over it once more I figured the problem must be elsewhere and then it struck me that the control assignment was happening right after the NumericArrayResize() call. LabVIEW has a preference to do terminal assignments always as soon as possible.

-

That's just to force execution of the copying of the array size before assigning the handle to the control. Looks strange when you have created an array with elements but the control shows an empty array. For use as subVI it wouldn't really matter as by the time the subVI returns the array it is correctly sized but when you test run it from the front panel it looks weird.

-

Well it is when you look at how the equivalent looks in C 😄

    MgErr AllocateArray(LStrHandle *pHandle, size_t size)
    {
        /* Resize (or allocate) the handle as a 1D array of unsigned bytes */
        MgErr err = NumericArrayResize(uB, 1, (UHandle*)pHandle, size);
        /* Tell the array inside the handle how many elements it holds */
        if (size && !err)
            LStrLen(**pHandle) = (int32)size;
        return err;
    }

Very simple! The complexity comes from what in C is that easy LStrLen() macro, which does some pointer voodoo that is tricky to reproduce in LabVIEW.

-

That was my first thought too 😆. But!!!! The Call Library Node only allows for Void, Numeric and String return types, and the String is restricted to C String Pointer and Pascal String Pointer. The String Handle type is not selectable. -> Bummer! And the logic with the two MoveBlock functions to tell the array in the handle what size it actually has needs to be done anyway. Otherwise the handle might be resized automatically by LabVIEW at various places when passing through Array nodes, such as the Replace Array Subset node. Also, Replace Array Subset would not copy data into an array beyond the indicated array size either. Handle size and array size are not strictly coupled beyond the obvious requirement: handle size >= dimensions * sizeof(int32) + array size * array element size
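
The decoupling of handle size and array size can be shown with a plain C mock. This is a sketch under loud assumptions: `MockArray`, `mock_alloc`, `mock_set_size` and `mock_free` are invented stand-ins built on malloc, not LabVIEW memory manager calls, and the `memcpy` only plays the role of the MoveBlock step described above.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Mock of a 1D byte array handle: a pointer to a pointer to a block that
   starts with an int32 element count, followed by the data. Real handles
   come from the LabVIEW memory manager; malloc is only a stand-in here. */
typedef struct {
    int32_t dimSize;
    uint8_t elt[];      /* flexible array member (C99) */
} MockArray, **MockArrayHandle;

/* Lower bound from the text: dimensions * sizeof(int32) + elements * element size.
   Any alignment padding comes on top of this. */
static size_t min_handle_size(size_t dims, size_t elements, size_t elt_size)
{
    return dims * sizeof(int32_t) + elements * elt_size;
}

/* Allocates room for `capacity` bytes but leaves the element count at 0,
   so the block is bigger than the array claims to be. */
static MockArrayHandle mock_alloc(size_t capacity)
{
    MockArrayHandle h = malloc(sizeof *h);
    if (h == NULL)
        return NULL;
    *h = calloc(1, min_handle_size(1, capacity, sizeof(uint8_t)));
    if (*h == NULL) {
        free(h);
        return NULL;
    }
    return h;
}

/* Stand-in for the MoveBlock step: copy the element count into the
   first four bytes of the block so the array knows its own size. */
static void mock_set_size(MockArrayHandle h, int32_t elements)
{
    memcpy(*h, &elements, sizeof elements);
}

static void mock_free(MockArrayHandle h)
{
    if (h != NULL) {
        free(*h);
        free(h);
    }
}
```

After `mock_alloc(16)` the block is 20 bytes but `(*h)->dimSize` is still 0; only after `mock_set_size(h, 16)` does the array report 16 elements, which mirrors why a control shows an empty array until the size has been written.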

-

Nope, sorry. Still, trying to find out whether a memory allocation might succeed by looking at whatever memory statistics might be available can never be a foolproof approach. It has the classical race that between checking if you can and doing it, the statistics might no longer be current and you still fail. The only foolproof approach is to actually do the allocation and deal with its failure. Of course for memory allocations that is always tricky, as seen here. We want to read in a 900MB file and want to be sure we can read it in. Checking if we can and then trying can still fail. We have to allocate the entire buffer beforehand and then copy the file piece by piece into this buffer. Another approach might be a memory mapped file, but trying to trick LabVIEW built-in functions into using such a beast is an entire exercise of its own. You basically invert the complete execution flow from calling a function that returns some data, to first preparing a buffer and handing it to a function to use it to eventually return that data. If you have ever dealt with streams (in Java, or .Net which has taken not only the whole stream concept verbatim from Java) you will know this problem. It's super handy and normally quite easy but internally quite complex. And you always end up with two distinct types that can't be easily connected without some intermediate proxy: Input Streams and Output Streams. And such a proxy will always involve copying data from one stream to the other, adding significant overhead to the originally very simple and seemingly beautiful idea. Now, one solution in hindsight that would be beneficial in the OP's case would be if those LabVIEW low level functions would return an error 2 or so in these cases rather than throw up a dialog that gives you only the option to quit, crash or puke. With the current almost everywhere present error cluster and its consistent handling throughout LabVIEW, this would seem the logical choice.
Back when LabVIEW was invented however, error clusters were not even thought of yet, and error handling for things like out of memory conditions was anyhow an end of story condition in almost all cases, since once that happened LabVIEW would almost surely run into other out of memory conditions when trying to handle the previous error conditions. When LabVIEW for Windows came out, most users found 8MB of memory an outrageously expensive requirement and were insisting that LabVIEW should be able to fly to the moon and back with the 4MB it was claiming to work with in the marketing material.
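
In plain C, the "allocate first, then fill piece by piece" approach could look roughly like the sketch below. It is an illustration only: the function name `read_file_prealloc` and its return codes are invented here, with 2 chosen simply to echo LabVIEW's out of memory error, and `ftell` with a `long` limits it to files its range can describe.

```c
#include <stdio.h>
#include <stdlib.h>

/* Made-up return codes; READ_OUT_OF_MEMORY echoes LabVIEW's error 2. */
enum { READ_OK = 0, READ_IO_ERROR = 1, READ_OUT_OF_MEMORY = 2 };

/* Hypothetical helper: allocates the whole buffer up front, so an out of
   memory condition is reported before any data is read, then fills the
   buffer chunk by chunk. The caller frees *out on success. */
static int read_file_prealloc(const char *path, size_t chunk,
                              unsigned char **out, size_t *out_len)
{
    FILE *f;
    long size;
    unsigned char *buf;
    size_t done = 0;

    *out = NULL;
    *out_len = 0;
    if (chunk == 0)
        chunk = 4096;               /* arbitrary default chunk size */

    f = fopen(path, "rb");
    if (f == NULL)
        return READ_IO_ERROR;
    if (fseek(f, 0, SEEK_END) != 0 || (size = ftell(f)) < 0 ||
        fseek(f, 0, SEEK_SET) != 0) {
        fclose(f);
        return READ_IO_ERROR;
    }

    /* Do the allocation and deal with its failure, instead of asking
       the system beforehand whether it might succeed. */
    buf = malloc(size > 0 ? (size_t)size : 1);
    if (buf == NULL) {
        fclose(f);
        return READ_OUT_OF_MEMORY;
    }

    while (done < (size_t)size) {
        size_t want = (size_t)size - done;
        size_t got;
        if (want > chunk)
            want = chunk;
        got = fread(buf + done, 1, want, f);
        if (got == 0)
            break;                  /* read error or unexpected end of file */
        done += got;
    }
    fclose(f);

    if (done != (size_t)size) {
        free(buf);
        return READ_IO_ERROR;
    }
    *out = buf;
    *out_len = done;
    return READ_OK;
}
```

The caller gets an ordinary error code for the out of memory case and can decide what to do, which is the behaviour wished for above instead of a dialog.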

-

There is nothing broken! It's the nature of the beast that the CPU needs to be able to read the machine instructions in order to execute them. If the CPU can, anyone else can too, unless you execute on special security enhanced CPU engines where the code is encrypted and only decrypted inside the CPU itself with no external access to that decrypted code. Such hardware is however VERY specialized and VERY expensive and VERY unusual. Good luck in your attempts, but there are a lot more interesting and beneficial things to do with your time than "breaking" LabVIEW VIs. Especially since it is not breaking but simply piecing together all kinds of information that has to be present in various ways in order for the VI to be functional at all. If LabVIEW VIs were broken in that context, every single Windows, Linux, Mac and whatever executable and shared library would be broken too. And especially the much loved .Net assemblies! Reverse engineering them is a piece of cake even with obfuscation. Still tedious, sure, but much easier than trying to reverse engineer a LabVIEW executable by getting all the VIs out, disassembling every machine code stream for each VI and figuring out the linker information to piece those disassembly streams correctly together.

-

It depends on what you want to do with the memory and how, but in principle it is pretty easy. This function will simply return error 2 when the allocation was not successful. The challenge is to use this allocated buffer with built-in LabVIEW functions. Depending on what functions you may want to use this with, you could for instance pass the buffer into a VI in which you read the binary file in chunks and copy each chunk into this buffer with the Replace Array Subset function. Memory management is a bitch and you often have to choose between preallocating memory and passing it all the way down a call chain hierarchy to use it there, or letting the low level functions attempt to do it and pass the result up through the call chain. LabVIEW chooses the latter and that has good reasons. The first is a lot more complicated to implement and use, and generally has less performance since you tend to copy data twice or more (when using streams for instance, which at each data direction inversion will usually involve a data copy).

Allocate Array Buffer.vi

-

That's about the same as when you have a DLL and want to get the C source back! Rewriting it from scratch! As explained before, those VIs inside an executable have their diagram and usually front panel completely stripped out. They are not hidden or anything, they are simply completely gone, nada, futschi, niente! LabVIEW doesn't need them to execute the VI in an executable, so why keep them and balloon the executable size unnecessarily? Also there are many people who do not want their source code (and precious IP) handed out to their users, and they would be very upset if LabVIEW executables contained the full source code, no matter how well hidden. So the safest thing to do is to remove it: what is not there cannot be stolen! The only thing inside such a VI is the actual compiled code (machine code instructions for the CPU it is meant to run on) and some linker information so LabVIEW can piece the VIs together when loading the whole hierarchy and connect the correct terminals with the data values that are represented through the wires that go into the node, only the wires in the calling diagram are gone too, just as the rest of the diagram. Still, for the compiled code that is enough. So you could with lots of trickery and reverse engineering retrieve the machine code streams from the VIs and feed them piece by piece to a disassembler, and then you end up with assembly code text, the text form of the lowest level machine instructions that the CPU processes. This is source code too, but not something that most people will easily understand. It is one level deeper than C programming and several levels deeper than LabVIEW diagram code! Regenerating C from assembly code, while not easy and not automatic, is possible; going from assembly to LabVIEW is pretty much futile except for the approach of describing the algorithm involved from the assembly code and then recreating it in LabVIEW.
The problem is that going from assembly code to something like "Read channel 0 from DAQ board 1, turn it into a hex string and write the result to the GPIB instrument at primary address 4 on GPIB bus 0" would likely cover 10 pages of assembly code and would be hard to deduce from those 10 pages without very careful study. It would be in absolutely all cases quicker and more effective to simply put up a high level description of what the application does and reimplement it from scratch based on that. This is one of the reasons the other approach has never really been tried, and the effort is too big to allow a hobbyist to try it for fun.

-

Of course it is. They changed the PK\x03\x04 identifier that is in the first four bytes of a ZIP stream, since when they did it with the original identifier, there was a loud scream through the community that it was very easy to steal the IP contained in a LabVIEW executable. And yes it was easy, as most ZIP unarchivers have a habit of scanning a file for this PK header, no matter where it is in a file, and if they find it and the local directory structure following it makes sense they will simply open the embedded ZIP archive. This is because many generators for self extracting archives simply tacked an executable stub in front of a ZIP archive to make it work as an executable. The screaming about stealing IP was IMHO totally out of proportion: the VIs in an executable have no diagram, no icon and usually not even a front panel (unless they are set to show their front panel at some point). But NI listened and simply changed the local directory header for the embedded ZIP stream and all was well 😆. The ZIP functions available in LabVIEW are a byproduct of integrating the minizip and zlib sources into LabVIEW for the purpose of compressing binary data structures inside of VIs to make the VIs smaller, and of using a ZIP archive in executables rather than the old <=8.0 LLB format. The need to change away from the embedded LLB was mainly because with the introduction of classes and lvlibs, the VI names alone were not always unique and therefore couldn't be stored in the single level LLB anymore. They needed a hierarchical archive format, and rather than extending the LLB format to support subdirectories, it was much easier to use the ZIP archive format, and the zlib provided sources came with a liberal enough license to do that.
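
The scanning behaviour described here is easy to picture in C. This is a minimal sketch of the idea, not the code of any particular unarchiver; the function name `find_zip_signature` is made up, and a real tool would go on to validate the header fields that follow the signature.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: returns the offset of the first "PK\x03\x04"
   signature in buf, or -1 if the signature does not occur. */
static long find_zip_signature(const unsigned char *buf, size_t len)
{
    static const unsigned char sig[4] = { 'P', 'K', 0x03, 0x04 };
    size_t i;

    if (len < sizeof sig)
        return -1;
    /* Walk the whole buffer: the archive may sit behind an executable stub. */
    for (i = 0; i + sizeof sig <= len; i++) {
        if (memcmp(buf + i, sig, sizeof sig) == 0)
            return (long)i;
    }
    return -1;
}
```

Once the four signature bytes are replaced with anything else, a scan like this no longer finds an archive, even though the rest of the embedded data is unchanged.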

-

At this point, if you only use NI hardware you are fairly safe. It's either supported with 64 bit drivers or discontinued anyway. If you use other 3rd party drivers the situation is a lot more checkered. Some have already abandoned 32 bit software and now only deliver 64 bit. Others have not made the step and many might never do so, as their hardware and software offering is in a sort of maintenance state. "Look it works!" "Hands off and don't touch it anymore! It was hard enough to get it not to crash constantly!" 😆

-

I've been looking at the GCentral site and visited the Package Index page. While I find it a good initiative, I see here the same problem that makes me loathe browsing the NI site for products. I'm mostly interested in the list of packages, yet half of the screen is used up by the GCentral logo and lots and lots of whitespace. I may be a dinosaur in terms of modern computer technology and not understand the finesse of modern web user interface design, but a site like that simply does not make me want to use it! Maybe this design will be beneficial to me 10 years from now when my eyesight has deteriorated so much that I won't see small print anymore, but wait, the text in the actual list is still pretty small, so that won't help at all. It's also not because of the much acclaimed fluent design. The size of the actual screen stays statically the same no matter how I resize the browser window. This kind of web interface makes me wonder where we are all heading. Design above functionality seems to be the driving force everywhere.

-

OpenG Library Exported to Github

Rolf Kalbermatter replied to Michael Aivaliotis's topic in OpenG Developers

While I can understand Jim's concerns, I also think that the current state of OpenG is pretty much an eternal stasis, otherwise known as death. Considering that, any activity to revive the community effort, either under the umbrella of OpenG, G-Central or any other name you want, is definitely welcome. And while I'm willing to work on such activities, organizing it has never been my strong point. I don't like politics, which is an integral part of organizing something like this. There are other problems with initiatives like this: People usually need a job that pays the bills. They also have a life besides computers. And they frequently move on or lose motivation to work on such an initiative. One reason being that there is so much work to do, and while quite a few people want to use it, there are very few wanting to contribute to it. Those who want to contribute often prefer to do it in their own way rather than help in an existing project. It's all unfortunate but very human.

-

crio sqlserver cRIO (9040) & SQL Server access

Rolf Kalbermatter replied to Ghis's topic in Hardware

For what I and our company do it seems to be more than adequate, but then we don't focus on shiny web applications which sport the latest craze that changes every other year. We build test and manufacturing systems where the UI is just a means to control the complex system, not an end in itself. In fact shiny, flashy user interfaces rather distract the operator from what he is meant to do, so ours are usually very sober and simple. For this the LabVIEW widgets are mostly more than enough, and the way of creating a graphical user interface that simply works is still mostly unmatched in any other programming environment that I know.

-

Using a Thunderbolt expansion chassis with a NI frame grabber

Rolf Kalbermatter replied to bmoyer's topic in Hardware

The problem is that NI seems to have been getting out of a lot of hardware in recent years. Most Vision hardware has been discontinued or at least abandoned (no new products released, and technical support on the still sold products is definitely sub-par compared to what it used to be in the past). NI Motion is completely discontinued (which is a smaller loss as it had its problems and NI never was fully committed to competing against companies like MKS/Newport and similar in that area). NI DAQ hasn't the same focus it used to have. NI clearly has set its targets on other areas and for some part moved on some time ago already. That may be good news for their stock holders, but not so great news for their existing user base.

-

If you want to take the Python route then of course. As far as the Call Library Node is concerned there is virtually no difference since at least LabVIEW 2009, and even before that the only real difference from 8.0 onwards to 2009 is the automatic support for 32 bit and 64 bit DLL interfacing, at least as far as pointers being passed directly as parameters are concerned. Once you deal with pointers inside structures you have to either create a wrapper DLL anyhow or deal with conditional code compilation on the LabVIEW diagram for the different bitnesses.
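
Why pointers inside structures are the sticking point shows up as soon as you look at the member offsets. The structure below is hypothetical (the `Acquisition` type and its fields are invented for illustration), and the offset calculation assumes natural alignment, which is the usual default on desktop compilers but not something the C standard guarantees.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical structure a DLL might expect; the names are made up. */
typedef struct {
    int32_t  count;
    double  *samples;   /* 4 bytes in a 32-bit process, 8 bytes in a 64-bit one */
    int32_t  status;
} Acquisition;

/* Rounds offset up to the next multiple of alignment. */
static size_t align_up(size_t offset, size_t alignment)
{
    return (offset + alignment - 1) / alignment * alignment;
}

/* Byte offset of `status` for a given pointer size, assuming natural
   alignment (each member aligned to its own size). */
static size_t status_offset_for(size_t pointer_size)
{
    size_t samples_offset = align_up(sizeof(int32_t), pointer_size);
    return samples_offset + pointer_size;
}
```

Under that assumption `status_offset_for(4)` is 8 and `status_offset_for(8)` is 16, so a byte layout prepared for the 32-bit DLL puts `status` where the 64-bit DLL still expects part of the pointer. That is the mismatch a wrapper DLL or conditional diagram code has to absorb.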

-

crio sqlserver cRIO (9040) & SQL Server access

Rolf Kalbermatter replied to Ghis's topic in Hardware

NXG is Windows only anyhow. Sure, NI says that they have been keeping options open for other platforms with NXG, and to some extent that must be true because they need to be able to keep building executables for at least the NI Linux RT targets, but I would say it is a safe bet that the significantly longer than expected development time of LabVIEW NXG is not caused mainly by these attempts at maintaining full multiplatform support, but that there have most probably rather been decisions to take shortcuts that make a multiplatform version mostly unfeasible. NI's bet is maybe that .Net Core will eventually get mature enough so they can port NXG to other .Net Core supported platforms easily if and only if the market should turn at some point and Windows suddenly turns into a niche product 😀. Python may be an option for those people who have used Visual Basic in the past, but I do not see how you can efficiently build Python applications that combine all kinds of high performance IO such as DAQ, Vision, Instrument Control and Graphical User Interfaces all in one. Sure, for many bench test systems that is not really needed and a command line operated test application can work too, but that's not quite what most customers want. 😂 And don't tell me there are GUI libraries for Python. I know there are, and I know there are people who have created amazing looking apps that way, but when did you last venture into calling GDI functions to build a GUI? I tried to create GUIs in Java a few years ago and that was a pretty painful experience. The graphical editors available for that are limited and flaky at best. Most autocreated code from them eventually has to be modified and even partly rewritten to get a decent working GUI. I can't see the Python GUI frameworks really offering a better experience.

-

crio sqlserver cRIO (9040) & SQL Server access

Rolf Kalbermatter replied to Ghis's topic in Hardware

Actually unixODBC does exist, but it's not trivial to find drivers for some database systems that will work with your unixODBC version. The Microsoft SQL Server drivers for unixODBC require a very specific version of unixODBC to work with. The bigger problem however is that pretty much all database access libraries that exist for LabVIEW do this either through the ADO/DAO Active X interface or the .Net Database API, which in turn internally interfaces to ODBC for the drivers that are not native ADO/DAO or .Net. Both Active X and .Net are not available in LabVIEW on non-Windows systems. So all those Database Toolkits will do nothing for you on a Linux system, even if you got unixODBC set up correctly and working with the driver. Accessing the ODBC API through Call Library Nodes is doable and has been done by some people under Windows, although nothing is publicly available that could be considered a full toolkit. What I have seen is a starting point but not a full featured toolkit, and porting it to work with the unixODBC API would be another extra effort. The ODBC API is complex enough to make this a bit of a challenge, also because some of the API interface is not exactly LabVIEW friendly.