Posts: 3,947 · Days Won: 275

Everything posted by Rolf Kalbermatter

-

Poll: Should the CLA Exam require applied knowledge of OOP?

Rolf Kalbermatter replied to Mike Le's topic in LabVIEW General

Case in point: LabVIEW NXG! -

NI abandons future LabVIEW NXG development

Rolf Kalbermatter replied to Michael Aivaliotis's topic in Announcements

Qt might be a good choice from a purely technological view. From a licensing point of view, however, it might be quite a challenge (see Qt | Pricing, Packaging and Licensing). That said, they do use a Qt library somewhere in the LabVIEW distribution. I'm not sure for which tool, but it is definitely not the core LabVIEW runtime. If it is for an isolated support tool, it is quite manageable with a single user license. For the core LabVIEW runtime, many of the LabVIEW developers would need a development license. And some of the Qt paradigms aren't exactly the way a LabVIEW GUI works. Aspect ratio accurate rendering would most likely be even further off than it is now, with even bigger differences in the results when loading a VI front panel on different platforms. -

NI abandons future LabVIEW NXG development

Rolf Kalbermatter replied to Michael Aivaliotis's topic in Announcements

The cross-platform backend they definitely have already. It has existed since the early days of multiplatform LabVIEW, whose first release was version 2.5 on Windows. It was originally written fully in C and at times a bit hackish. The motto back then was: make it work no matter what, even if that goes against the neatness of the code. In its defense it has to be said that what is considered neat code nowadays was totally unknown back then and in many cases even impossible to do, either because the tools simply did not exist or because the resulting code would have been unable to start up on the constrained resources that even high end systems could provide. 4MB of RAM in a PC was considered a reasonable amount, and 8MB was high end and cost a fortune. Customers back then regularly complained about not being able to create applications that would do some "simple" data acquisition, such as continuous streaming of multiple channels at 10kS/s and graphing it on screen, on a PC with a "whopping" 4MB of memory.

The bindings to multiple GUI technologies also exist. The Windows version uses the Win32 window management APIs and GDI functions to draw the content of any front panel. The Mac version used the classic MacOS window management APIs and QuickDraw, and later the NSWindow APIs and Quartz, while the Linux version uses the X Window API for everything. These are quite different in many ways, and that is one of the reasons why it is not always possible to support every platform specific feature. The window and drawing managers provide a common API to all the rest of LabVIEW, and higher level components are not supposed to ever access platform APIs directly. Moving to WPF or similar on Windows would not solve anything, but only make the whole thing even more complicated and unportable.

The LabVIEW front end is built on these manager layers. This means that LabVIEW basically uses the platform windows only as containers, and everything inside them is drawn by LabVIEW itself. That does have some implications, since every control has to be implemented by LabVIEW itself, but on the other hand a hybrid architecture would be a complete nightmare to manage. It would also mean that there is absolutely no way to control the look and feel of such controls across platforms. You have that already to some extent, since LabVIEW uses the text drawing APIs of each individual platform and scales the control size to the font attributes of the text as drawn on that platform, resulting in changed sizes of the control frames if you move a front panel from one computer to another. If LabVIEW also relied on the whole numeric and text control as implemented by the platform, those front panels would look even more wildly different between computers.

And no, moving the front end into the IDE is not a solution either. Each front panel that you show in a built application is also part of the front end, so it needs to be present as core runtime functionality and not just as a feature bolted onto the IDE. -

HTTP Post does not work

Rolf Kalbermatter replied to Thang Nguyen's topic in Remote Control, Monitoring and the Internet

HTTP Error 401: https://airbrake.io/blog/http-errors/401-unauthorized-error Your HTTP server apparently requires some form of authentication, and you have not added any header to the request that provides it. -
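To make the missing piece concrete, here is a minimal Python sketch of a POST request that carries an authentication header. It assumes the server accepts HTTP Basic authentication, which the thread does not confirm (it could just as well expect a token or an API key), and the URL, payload and credentials are made-up placeholders.

```python
import base64
import urllib.request


def build_authenticated_post(url, payload, username, password):
    """Build a POST request that carries an HTTP Basic Authorization header."""
    # Basic authentication sends "username:password", base64-encoded.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, data=payload, method="POST")
    request.add_header("Authorization", f"Basic {token}")
    request.add_header("Content-Type", "application/json")
    return request


# Hypothetical endpoint and credentials, for illustration only.
req = build_authenticated_post(
    "https://example.com/api/data", b'{"value": 1}', "user", "secret"
)
# urllib.request.urlopen(req) would actually send it; omitted so the sketch runs offline.
```

Whatever client you use, the same idea applies: the Authorization header has to be added to the request before it is sent.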

No. Static libraries are a collection of compiled object modules. The format of static libraries is compiler specific, and you need a linker that understands the object file format. That is typically limited to the compiler toolchain that created the static library! Some compilers support additional formats, but it is often hit and miss whether linking foreign libraries into your project will work.

-

G Interfaces for LabVIEW 2020

Rolf Kalbermatter replied to Aristos Queue's topic in Object-Oriented Programming

Well, it would be along the lines of Visual Studio then. There you have the sub-view, typically located at the top left, with the Solution Explorer, Class View and Resource View tabs (all configurable of course, so it may look different in your installation, but this is the default setup). One can certainly claim that the LabVIEW project window drew quite a bit of inspiration from that Solution Explorer window in Visual Studio. 😃 -

NI abandons future LabVIEW NXG development

Rolf Kalbermatter replied to Michael Aivaliotis's topic in Announcements

That could be because it's not available (and may never be). VHDL sounds like a good idea, until you really try to use it. While it generally works as long as you stay within a single provider, it starts to fall apart as soon as you try to use the VHDL generated by one tool in another tool. Version differences are one reason, predefined library functions another. With the netlist format you know you'll have to run the result through some conversion utility that you often have to script yourself. VHDL gives many users the false impression that it is a fully featured lingua franca for all hardware IP tools. Understandable if you believe the marketing hype, but far from the reality. -

NI abandons future LabVIEW NXG development

Rolf Kalbermatter replied to Michael Aivaliotis's topic in Announcements

LabVIEW Community Edition (or your Professional License with the Linx Toolkit installed) can be deployed to Raspberry Pi and BeagleBone Black boards. It's still a bit of a rough ride sometimes, and the installation seems to fail for certain people for unclear reasons, but it exists and can be used. -

NI abandons future LabVIEW NXG development

Rolf Kalbermatter replied to Michael Aivaliotis's topic in Announcements

It's back online. Probably some maintenance work on the servers. -

I am taking a sabbatical from LabVIEW and NI R&D

Rolf Kalbermatter replied to Aristos Queue's topic in LAVA Lounge

Congratulations on your new endeavour. Working for a space program is one of those dreams every technically inclined boy probably has at some point. It actually even tops the dream of working for Lego. 😄 -

Industrial EtherNet (EtherNet/IP)

Rolf Kalbermatter replied to siva's topic in Remote Control, Monitoring and the Internet

This thread is about EtherNet/IP, a specific protocol used in industrial automation. While it might be possible to install EtherNet/IP support on your PLC, there is nothing in the product description of Epec's controller hardware that mentions anything about it. Most controllers support CAN with CANopen, and only very few controllers even have an Ethernet port. All the literature mentions is that it allows integration into IoT networks, which would probably indicate that it supports some HTTP or WebSocket protocols, but it is hard to say for sure how they support Ethernet connectivity in their controller software. -

Yes it could, if this driver was developed in LabWindows/CVI. And there are some parts that get installed with LabVIEW that were in fact developed in LabWindows/CVI and will therefore cause the CVI runtime engine to be installed. The LabVIEW runtime itself does not need it, however, so it's logical that it does not install it. Where did you deduce that it needs the 4.0.1 version of the CVI runtime? That is an awfully old version, released about 25 years ago. I would guess that the DLL needs a newer version, unless it was released in the late 1990s. Edit: I just saw that the copyright is 1997 to 2001, so your version might in fact not be very far off, although a newer LabWindows/CVI runtime should work too. LabWindows/CVI did not use version specific runtime libraries, and the functions were usually kept backwards compatible.

-

It's not really weird. The NI Analysis library is only a thin wrapper around the Intel Math Kernel Library. And that library does all kinds of very low level performance enhancing tricks. Part of that is that it determines at load time what CPU, how many cores and which SIMD extensions the CPU supports, in order to tune its internal functions to take maximum advantage of the CPU features. It so happens that the detection code trips over its own feet when presented with an AMD Ryzen CPU, and rather than falling back to a safe but less performant option it simply aborts the DLL loading, which in turn aborts the loading of the LabVIEW wrapper DLL. LabVIEW doesn't know why the loading of the DLL failed, just that it did fail. Of course, anyone suspecting any malevolence in that failure to detect a competitor's CPU really must be ill-minded. 😀 Realistically I suspect it wasn't malevolence, but the fact that it didn't affect their own CPUs didn't exactly make fixing it very urgent. And NI doesn't update the AA library every few months to the latest and greatest MKL release, as that has some serious implications in terms of testing effort.

-

If the driver itself only consists of this single DLL, that still does not exclude the possibility of dependencies, just of a slightly different kind than what I deduced from your first post. Every C(++) compiler uses a C runtime library (and in the case of C++ also a C++ runtime library) for all the standard functions that a programmer expects to be able to use. This runtime library is similar to the LabVIEW runtime engine. While it is possible to link the relevant C runtime functions statically into the DLL, this is often (and in Visual C by default) not done, so that the DLL does not contain redundant code that is available elsewhere on the system.

Now, most C compilers started long ago to use version specific C runtimes. If your DLL was created with Visual Studio 2010, for instance, it is set to depend on the Microsoft C runtime version 10.0. A Visual Studio 2012 executable or DLL depends on Microsoft C runtime version 11.0. Each C runtime has its own set of DLLs that need to be present on the target system in order to load a DLL or EXE created in the corresponding Visual Studio version. Microsoft Windows comes pre-installed with some C runtime installations, and that can vary over time since various Windows tools are compiled with various Microsoft C compiler versions. And LabVIEW and many of the NI tools are created in various versions of Visual Studio too.

So what most probably happens is that on a full LabVIEW IDE install, some of the installed libraries or tools use the exact same runtime version as your DLL. On a machine with only the normal LabVIEW runtime installed, this specific NI tool or library is not necessary and hence not installed, and therefore loading of your DLL must fail. Your DLL manufacturer needs to document in which version of Visual Studio the DLL was created, so that you can download the corresponding Microsoft C runtime installer from the Microsoft site (or they should provide that installer together with their DLL).
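If the manufacturer does not document the runtime version, a rough way to guess it is to look for runtime DLL names inside the DLL file, since imported DLL names are stored there as plain text. The Python sketch below is only a crude heuristic under that assumption, not a proper PE import table parser, and the function names are made up for illustration. For reference, Visual Studio 2010 corresponds to msvcr100.dll and Visual Studio 2012 to msvcr110.dll.

```python
import re

# Names of Microsoft C/C++ runtime DLLs as they typically appear in a binary,
# e.g. msvcr100.dll (Visual Studio 2010) or msvcr110.dll (Visual Studio 2012).
_CRT_PATTERN = re.compile(
    rb"(?:msvc[rp]\d+d?|vcruntime\d+(?:_\d+)?d?)\.dll", re.IGNORECASE
)


def find_crt_imports(binary_bytes):
    """Return the sorted, lower-cased runtime DLL names mentioned in the bytes."""
    matches = _CRT_PATTERN.finditer(binary_bytes)
    return sorted({m.group(0).decode("ascii").lower() for m in matches})


def find_crt_imports_in_file(path):
    """Same as find_crt_imports, but reads the DLL or EXE from disk."""
    with open(path, "rb") as handle:
        return find_crt_imports(handle.read())
```

An empty result would suggest that the runtime was linked statically, or that the DLL was built with a different toolchain altogether.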

-

Obviously your DLL has other DLL dependencies, and it is the task of Windows to resolve such secondary dependencies. But Windows won't search in the directory in which the referencing DLL is located. Instead, Windows has a number of standard locations in which it will look for such DLLs. Make them appear in one of those directories and all is well.

1) If the DLL is already loaded, Windows will simply reuse it
2) In the directory in which the process exe file resides
3) In the Windows\System32 directory
4) In the Windows directory
5) In the current directory, which starts out as the process exe directory but changes whenever you dismiss a file dialog in the application
6) In one of the directories listed in the PATH environment variable

The quick fix is to move your DLLs into the same directory as your built exe.

You most likely installed the driver on your machine at some point, and it put copies of the DLLs in Windows\System32. You then copied those DLLs into your project library, but except for the DLLs that are called directly through the Call Library Node, those copies are basically never used, since Windows will find and load the DLLs from the system directory.
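The effect of that search order can be sketched in a few lines of Python. This is a simplified model of the order as listed above, not the actual Windows loader, which has additional rules. The function and the directory layout are made up for illustration.

```python
import tempfile
from pathlib import Path


def resolve_dll(name, loaded, exe_dir, system_dir, windows_dir, current_dir, path_dirs):
    """Return where `name` would be found, following the order listed above."""
    # 1) A DLL that is already loaded in the process is simply reused.
    if name.lower() in loaded:
        return loaded[name.lower()]
    # 2) to 6) Otherwise the first directory that contains the file wins.
    for directory in [exe_dir, system_dir, windows_dir, current_dir, *path_dirs]:
        candidate = Path(directory) / name
        if candidate.is_file():
            return candidate
    return None


with tempfile.TemporaryDirectory() as root:
    root = Path(root)
    exe_dir = root / "app"
    windows_dir = root / "Windows"
    system_dir = windows_dir / "System32"
    project_dir = root / "project" / "lib"  # never searched
    for directory in (exe_dir, system_dir, project_dir):
        directory.mkdir(parents=True)
    (system_dir / "helper.dll").write_bytes(b"")
    (project_dir / "helper.dll").write_bytes(b"")

    def lookup():
        return resolve_dll("helper.dll", {}, exe_dir, system_dir, windows_dir, exe_dir, [])

    found_before = lookup().parent.name  # copy in the project library is ignored
    (exe_dir / "helper.dll").write_bytes(b"")
    found_after = lookup().parent.name  # copy next to the exe now wins
```

In this model the copy in the project library is never considered, while a copy placed next to the executable takes precedence over the one in the system directory.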

-

Creating a shared Object with Opencv

Rolf Kalbermatter replied to Yaw Mensah's topic in LabVIEW General

Well, OpenCV has had various parts in C++ for a very long time, some of them with C wrappers but not everything. And they have moved more and more to a C++ API, as that makes writing code easier once the proper groundwork is defined. So for a pure C API you will need to go back quite a bit. Also, while I haven't checked recently, the OpenCV library had not removed the classic C API last time I looked. Maybe they did in the meantime. But image manipulation is not something you can easily interface to with the Call Library Node anyhow, even if the API is pure C. The memory management problems associated with images are complicated enough that trying to interface this directly to LabVIEW is going to be a very painful exercise. LabVIEW is by nature a by-value programming environment, which would be terribly wasteful to apply to pictures. This means you have to create some interface between the two anyhow, one that wraps the whole thing in a way that makes it intuitive to use in LabVIEW without the inherent overhead of treating everything by value. This is best written in C but with LabVIEW specialities in mind, and this is also what quite a big part of IMAQ Vision is actually about. So while you can try to interface to OpenCV directly up to some point, it's not going to make a pretty interface to use, since you will have to bother every user of your library with C specific trivia that a normal LabVIEW user is not used to (or write a huge interface layer in LabVIEW that wraps everything up and uses techniques that would be easier to implement in C(++)). -
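The by-reference wrapping idea can be illustrated with a small handle registry. The Python sketch below only shows the pattern: the pixel data stays on one side of the interface and the caller passes around a small integer handle. A real wrapper would be a C shared library exporting functions of this kind. The class and method names are hypothetical, and this is not how IMAQ Vision is actually implemented.

```python
import itertools


class ImageRegistry:
    """Keeps image buffers in one place and hands out small integer handles."""

    def __init__(self):
        self._images = {}
        self._handles = itertools.count(1)

    def create(self, width, height):
        """Allocate an 8-bit grayscale image and return its handle."""
        handle = next(self._handles)
        self._images[handle] = (width, height, bytearray(width * height))
        return handle

    def invert(self, handle):
        """Modify the image in place; no pixel data crosses the interface."""
        _, _, pixels = self._images[handle]
        for index, value in enumerate(pixels):
            pixels[index] = 255 - value

    def get_pixel(self, handle, x, y):
        width, _, pixels = self._images[handle]
        return pixels[y * width + x]

    def dispose(self, handle):
        """Release the buffer; the handle becomes invalid afterwards."""
        del self._images[handle]


registry = ImageRegistry()
image = registry.create(4, 3)  # the caller only ever holds this integer
registry.invert(image)
```

The price of this design is that the caller has to dispose of every handle explicitly, which is exactly the kind of detail a good wrapper should make hard to get wrong.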

Creating a shared Object with Opencv

Rolf Kalbermatter replied to Yaw Mensah's topic in LabVIEW General

You cannot call C++ objects with the Call Library Node without going into custom assembly programming. C++ is NOT binary compatible between compilers, and often not even between compiler versions, so there is no common ground on which the Call Library Node could even start to attempt to provide an interface to it. What you have to do is develop your own shared library wrapper in C++ that exports standard C functions, which you can then call with the LabVIEW Call Library Node. In the simplest case you could wrap each method of an object with a function, as detailed in the LabVIEW Wiki here: https://labviewwiki.org/wiki/DLL/shared_library While this is the quickest approach to getting something working, it is not the most convenient approach for other potential LabVIEW users of your library, since you basically burden them with many C++ API intricacies that a typical LabVIEW programmer has no idea about. -

All incarnations of the Winutil VIs out there are in fact from that library or its predecessor. That includes the VI copies in this Multipanel Example.

-

I simply took the library from the NI site and threw out anything that was accessing the DLL and then changed things to work for both 32-bit and 64-bit. I'm guessing it would therefore be under whatever license it was, which I think would be the NI Example Code License, whatever that actually means.

-

I find the description of the command syntax in the manual highly ambiguous and unclear anyhow. Usually GPIB devices from that time were supposed to state one long list of capabilities in the form of abbreviations. Those were pretty important before IEEE 488.2 was released, since the GPIB bus had many capabilities and most devices only supported a subset of them. IEEE 488.2 defined a minimum set of capabilities that a device had to support in order to claim 488.2 compatibility (in addition to basic command syntax and such), and after that this capability list became almost completely redundant. A GPIB compliant device that does not support 488.2 is supposed to list these capabilities somewhere. Usually they were both mentioned in the manual and printed on a sticker somewhere on the outside of the device.

-

Just as an extra reference point, this post contains a redone winutil.llb file in which all the references to the private winutil DLL have been removed and, as far as possible, replaced with compatible direct calls to the corresponding Windows APIs. In addition, the Call Library Nodes have been updated to be compatible with both 32-bit and 64-bit operation, and I also added one or two functions from this example to the library. In order to get seamless 32-bit/64-bit support, one has to make sure to use the new Windows control contained in that lib for everything, and to modify any other Call Library Nodes your project may use accordingly too. https://forums.ni.com/t5/LabVIEW/How-to-run-an-exe-as-a-window-inside-a-VI/m-p/4096356#M1179928

-

I was referring to the instrument manual. That one has absolutely nothing to do with VISA whatsoever. It has about two half pages of IEEE description and some very limited command description. Not very extensive in any form or flavor. NI VISA, on the other hand, is very capable of also accessing IEEE 488.1 devices, which is the name of the old style IEEE 488 interface specification. Doing so is not trivial, however, as those low level settings can be obscure and not easy to understand without knowing how the GPIB bus works at the signal level. And it is usually not necessary, since all the modern devices simply work with the IEEE 488.2 settings, which are the default when you open a GPIB VISA session. But reading through the entire thread again, I would say the first thing you should do is make sure you send the correct string. For voltage mode it seems it should contain 10 characters, whereas for current mode it would need 8 characters.
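A simple guard before writing to the instrument can catch a wrongly sized string early. The Python sketch below assumes the lengths mentioned above (10 characters for voltage mode, 8 for current mode) and that they do not include any termination character. The mode names and the helper are hypothetical, and no real command strings from the manual are reproduced here.

```python
# Expected command lengths per mode, as read from the manual in this thread.
EXPECTED_LENGTH = {"voltage": 10, "current": 8}


def check_command(mode, command):
    """Return the command unchanged if its length fits the mode, else raise."""
    expected = EXPECTED_LENGTH[mode]
    if len(command) != expected:
        raise ValueError(
            f"{mode} mode expects {expected} characters, got {len(command)}: {command!r}"
        )
    return command
```

Calling `check_command("current", command)` right before the VISA or GPIB write turns a silently ignored command into an immediate, readable error.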

-

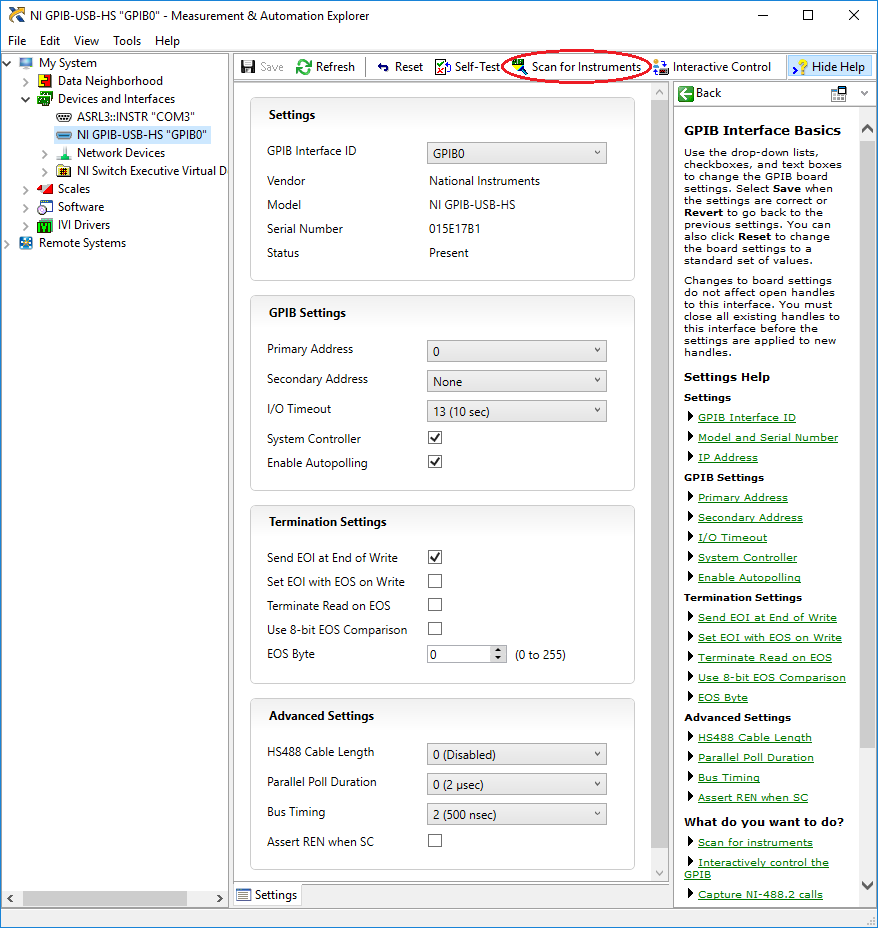

The manufacturing year is irrelevant. When that device was designed in 1981 or before, that’s the technology it uses. Your device may contain a new firmware which fixes a bug or two but certainly not with new improved functionality. As to those settings they absolutely positively must exist in NI-Max. They are functionality of the NI-488.2 driver and have absolutely nothing to do with your specific device. Or are you using something else than a NI GPIB interface to connect to the device? This is what you should see for your GPIB controller in MAX: As you can see there are a number of settings that could be relevant. Considering the age of the device I would guess playing around with Bus Timing (make it slower as the old GPIB controller used in that device might not really be up to snuff with modern GPIB timing), and Assert REN when SC (assert remote enable hardware handshake) might actually have an influence. There should be a similar section with options when you select your device instead in the device tree. But I couldn't find an image of that easily and I haven't used GPIB in several years so can't quickly get a screenshot from one of my machines. As to the GPIB section in the manual there is absolutely nothing that resembles anything IEEE 488.2. They only mention IEEE488 and the little they have in there is absolutely not 488.2 compatible in any shape or form. HP used to fill 10-20 pages and more about all the different GPIB capabilities and features of their devices and several 100 pages about the commands you could use! 😀 The GPIB standard may be technically several magnitudes less challenging than PCIe, to just name one, but you needed to know more about the different capabilities of the device and your controller to make it work. IEEE 488.2 was an attempt to define a common set of features a device and controller should use and also to define some basic commands and their syntax (such as *IDN?).