Everything posted by Rolf Kalbermatter

-

An application crash is IMHO a DoS. Never mind that it obviously happens because of an actual user action (the opening of the file) rather than stealthily in the background; I also find it irrelevant in this context that LabVIEW can sometimes crash out of the blue without anyone trying to DoS it. (That explicitly excludes the crashes that I sometimes cause when testing external code libraries.) Code execution is another option but, as I wrote, not a very likely one. Buffer overflow write errors can theoretically be used to execute code that you loaded, through careful manipulation of the file, into a known or easily predictable location in memory. But that is tricky to exploit, and the nature of LabVIEW makes it even trickier to get any predictable memory location. Even if you manage to do that, it will almost certainly only work on a specific LabVIEW version.

-

It is in the basic RSRC format structure of VIs, and the code that loads this into LabVIEW has basically not changed much since about LabVIEW 2.5. So it is safe to assume that this vulnerability has existed since the beginning of multiplatform LabVIEW. I doubt that it was there in this form in the Mac-only versions 2.2.x and earlier, as VIs were then real Macintosh resource files and LabVIEW used the Macintosh resource manager for loading them. (Note that the Mac OS 7 from those times wouldn't even survive a few seconds of being connected to the net! Its security was mediocre by today's standards, and its only protection from modern internet attacks was its lack of standard internet connectivity out of the box; you had to buy an expensive coax network plugin card to make it connect to TCP/IP, and install a rather buggy network layer that was redesigned more than once and eventually named Open Transport, because it connected the Mac to a standard network that was not Apple-only.)

NI puts the latest three versions before the current one into maintenance mode and only releases security patches for them, as well as making sure that driver installations like NI-DAQmx etc. support those four versions. Anything older is put in unsupported status and is normally not even evaluated for whether a bug exists in it. If a customer reports a bug in an older version and it gets assigned a CAR, then that older version may be mentioned, but retrospective investigation of versions older than the last four is officially not done by NI.

On the nist.gov page that I mentioned, this threat is classified as Common Weakness Enumeration 787 (CWE-787), which is an out-of-bounds write, or buffer overflow write error. As such it is pretty easy to use for a DoS attack, and potentially also for code execution or privilege escalation, but those are tricky to exploit, and LabVIEW makes them even trickier to exploit due to its highly dynamic memory management scheme.
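For readers unfamiliar with CWE-787, here is a minimal sketch of the general pattern. It assumes a made-up file layout (a 4-byte big-endian length followed by that many payload bytes); this is not the actual RSRC format, and `copy_block` is a hypothetical helper, not anything from LabVIEW. The two checks before the `memcpy` are what separates a safe loader from an out-of-bounds write.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical layout: 4-byte big-endian length, then the payload.
 * Copies the payload into dst and stores its length in *out_len.
 * Returns 0 on success, -1 if the block must be rejected. */
int copy_block(const uint8_t *file, size_t file_len, size_t offset,
               uint8_t *dst, size_t dst_cap, size_t *out_len)
{
    /* The length field itself must lie inside the file. */
    if (offset > file_len || file_len - offset < 4)
        return -1;

    uint32_t len = ((uint32_t)file[offset]     << 24) |
                   ((uint32_t)file[offset + 1] << 16) |
                   ((uint32_t)file[offset + 2] <<  8) |
                    (uint32_t)file[offset + 3];

    /* A length taken from the file is untrusted input. */
    if (len > file_len - offset - 4)
        return -1;              /* claims more data than the file holds */
    if (len > dst_cap)
        return -1;              /* would write past dst: the CWE-787 case */

    memcpy(dst, file + offset + 4, len);
    *out_len = len;
    return 0;
}
```

Without the second check, a file that declares a larger length than the destination buffer can hold makes the loader write past the end of `dst`, which at best crashes the application.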

-

It depends how you do it. There are many registry hacks to enable and disable various features, also for Winsock. That way you indeed force it for all network interfaces. But setsockopt() works on a socket (the underlying socket driver handle that a LabVIEW network refnum encapsulates). That way you only change the option for that specific network connection.
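A minimal sketch of the per-socket approach, written against the POSIX socket API so it can be tried outside LabVIEW. The post does not say which option is meant; `TCP_NODELAY` is used here purely as an assumed example, and the helper names are hypothetical. Winsock has a `setsockopt()` of the same name, but it takes a `SOCKET` handle and a `const char *` option pointer.

```c
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>

/* Enable or disable TCP_NODELAY on this one socket only.
 * Returns 0 on success, -1 on error (errno is set by setsockopt). */
int set_nodelay(int fd, int enable)
{
    int flag = enable ? 1 : 0;
    return setsockopt(fd, IPPROTO_TCP, TCP_NODELAY, &flag, sizeof flag);
}

/* Returns 1 if TCP_NODELAY is set on fd, 0 if not, -1 on error. */
int get_nodelay(int fd)
{
    int flag = 0;
    socklen_t len = sizeof flag;
    if (getsockopt(fd, IPPROTO_TCP, TCP_NODELAY, &flag, &len) != 0)
        return -1;
    return flag != 0;
}
```

Setting the option on one socket leaves every other socket in the process, and on the machine, at its default, which is the difference from the registry route.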

-

Well, LabVIEW is a software development platform, just like Visual C and many other development environments. Should Visual C disallow the creation of code because you can write viruses with it? I can send you a Visual C project and tell you to compile it, and if you are not careful you just compiled and started a virus. The project may even look totally harmless but contain precompiled library files disguised as some DLL import library, and/or even a precompiled DLL that you absolutely need to talk to my super duper IoT device that I give away for free. Will you use diligent care and not run that project because you suspect something is funky with it? The only real problem a LabVIEW VI has is that you can configure it to autostart on load. This is a feature from the times when you did not have an application builder to create an executable (and in 1990 security awareness was much lower; heck, the whole internet at that time was basically open, with email servers trusting each other blindly that the other wouldn't be abused for malicious or even commercial reasons). If you wanted a noob to be able to use a program you wrote, you could tell him to just click that VI file, and LabVIEW would be started, the VI loaded and everything ready to run, without having to explain that he should also push that little arrow in the upper left corner just under the title bar. The solution is to NOT open a VI file whose contents you do not know by clicking on it, but instead to open an empty VI and drag the VI onto its empty diagram. That way you can open the VI diagram and inspect it without causing it to autostart. Removing the autostart feature in later LabVIEW versions would probably have been a good idea, but was apparently disregarded as it would be a backwards incompatibility.
Also, the article on The Hacker News site dates from August 29, 2017 (and all the links I can find on the net about CVE-2017-2779 are dated between August 29 and September 13). They may have gotten a somewhat ignorant response from a person at NI, or they might not! I stopped believing articles on the net blindly a long time ago. This site has a certain interest in boasting about its activities, and that may include putting others into a somewhat more critical light than is really warranted. Here is a more "official" report about the security advisory (note that the link in The Hacker News report has gone stale already), and it mentions on September 13, 2017 the official response from NI, although the NI document has a publish date of September 22, 2017, probably due to some later revision of it. If I remember correctly, the original response contained an acknowledgement and stated that NI was looking into this and would update the document once they had determined the best course of action. So on September 22, 2017 they probably updated that document to state the availability of the patches. Note that the blog post from Cisco Talos about this vulnerability, which is cited as the source of the article on The Hacker News but without any link to the actual Cisco Talos blog post, does contain the same claim that NI does not care, but has an updated notice from September 17, 2017 that NI has made an official response available. The security report from Cisco Talos itself does not mention anything about NI not caring! It does however state that the vulnerability was apparently disclosed to NI on January 25, 2017, so yes, there seems to have been a problem with NI not giving this due diligence until it was publicly disclosed. I would think that the original reaction was lacking, but a reaction time of less than one month after the sh*t hit the fan to produce a patch for a product like LabVIEW is actually pretty quick.
And if someone at The Hacker News really cared about this type of report rather than just blasting others, they would have found some time to edit that page to mention the availability of patches, contrary to their statement that NI doesn't care!

-

As crossrulz said, definitely use something like Wireshark to debug the actual communication. VISA supports TCP socket communication on all platforms on which it is available, but installation on Linux may not always be seamless. I always prefer to use the native TCP nodes whenever possible. VISA is for me only an option if I happen to write an instrument driver for an instrument that supports multiple connection types, such as serial and/or GPIB together with TCP/IP.

-

VISA TCP Socket is in principle the same thing that you get with the native TCP nodes.

Advantages:

- VISA implements more in terms of automatic message termination recognition
- VISA may seem more familiar to you if you have extensive experience writing instrument drivers

Disadvantages:

- You need to have the NI-VISA runtime installed on the target system, along with the corresponding TCP passport driver. If you use the native nodes, everything is included in the standard LabVIEW runtime.

For the rest, VISA Write is pretty much equivalent to TCP Write, and VISA Read is similar to TCP Read. Therefore the implementation of any protocol on top of TCP/IP will be pretty much the same regardless of whether you use VISA or native TCP.
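To give an idea of what "message termination recognition" means when you do it yourself on a plain TCP connection, here is a minimal sketch in C on a POSIX file descriptor. `read_until` is a hypothetical helper, not part of VISA or LabVIEW, and it reads one byte at a time for clarity rather than speed.

```c
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Reads from fd until the termination character `term` has been stored,
 * the peer closes the connection, or cap - 1 bytes have been stored.
 * The result is always NUL-terminated.
 * Returns the number of bytes stored (terminator included), or -1 on error. */
ssize_t read_until(int fd, char term, char *buf, size_t cap)
{
    size_t n = 0;

    if (cap == 0)
        return -1;
    while (n + 1 < cap) {
        char c;
        ssize_t r = read(fd, &c, 1);
        if (r < 0)
            return -1;          /* read error */
        if (r == 0)
            break;              /* connection closed by peer */
        buf[n++] = c;
        if (c == term)
            break;              /* complete message received */
    }
    buf[n] = '\0';
    return (ssize_t)n;
}
```

The protocol logic on top of this (what to send, how to parse the reply) stays the same whichever transport layer delivers the bytes.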

-

"LabVIEW" CLN calls: are we supposed to know?

Rolf Kalbermatter replied to ensegre's topic in LabVIEW General

Sorry, I'm not sure what you are trying to say. But generally, unless the function is documented in the External Code Reference Manual, calling it is certainly playing with fire. Those documented functions are fairly guaranteed to not go away, and 100% sure to not suddenly change their signature (parameters and their types). The documentation also states what you have to watch out for when calling a function, and which parameters could for instance accept a NULL pointer if not needed. None of this can be "guessed" from just the function name in the shared library export list. And even if you guess everything right, there is no guarantee at all that this function will stay in future versions, or won't change its parameters somehow, as the developer just has to make sure to update any internal code that calls this function and can rightly assume that nobody else was making use of it, as it is not documented.

-

"LabVIEW" CLN calls: are we supposed to know?

Rolf Kalbermatter replied to ensegre's topic in LabVIEW General

Well, the function documentation has been there since LabVIEW 2.5. There was even a separate printed manual just for these functions (and a chapter or two about writing CINs). The documented use of these functions is to call them from external code, originally from CINs and later from DLLs. Officially there is no documentation that I can remember which states that the Call Library Node can be used to call these functions by using LabVIEW as the library name. As ensegre writes, he is aware that especially here on Lava it is sort of common knowledge among people who care that this possibility exists, but it would seem to me that he is looking for a more official source for this that comes in some way from NI. As mentioned, I'm not aware of such an official statement in any documentation that could be attributed to NI or an employee who speaks for NI. So he will probably have to live with the supporting facts that make this a long-standing, stable and unlikely to disappear feature that is known in the developer community outside of NI. As long as you call the documented LabVIEW manager functions and know what you are doing (calling MoveBlock() with bad parameters is for instance a serious hazard, and by its nature the Call Library Node has no way to protect you from shooting yourself in the foot with a machine gun!), there is nothing that could prompt NI to remove this feature from LabVIEW classic. It may or may not be present in NXG for various involved reasons.

-

"LabVIEW" CLN calls: are we supposed to know?

Rolf Kalbermatter replied to ensegre's topic in LabVIEW General

Well, this may not be an official NI document, but it is an example that was published over 12 years ago. It sort of documents the use of the LabVIEW keyword as library name in the Call Library Node to refer to the internal LabVIEW kernel functions. It is also used in various places in VIs inside vi.lib, so it is not very likely to change overnight either. The fact that you can refer to the LabVIEW kernel functions through this keyword has more or less been present since the Call Library Node was introduced in LabVIEW around 5.0. Changing this now would break lots of code out there, which either inherited some of those password-protected VIs from vi.lib or consists of custom-made libraries created by various people. I'm personally not really too concerned that this would suddenly go away. How and if it is supported in LabVIEW NXG I haven't looked at yet, but unless you want your library to be NXG compatible too, which I suppose will impose quite a few other, more important challenges than this, I would not bother.

-

I find that a bit unwieldy, but I could go for the 60-week year, with 6 days per week and no designated weekend, but rather a 4-day work shift. It would also solve some of the traffic problems, at least to some extent, as only two thirds of the population would at any moment be in the work-related traffic jams, and one third in the weekend traffic jams, which usually occur at different times and in different locations. And the first decimal calendar was the French Republican calendar, but it was very impractical and hard to memorise, with every day of the year having its own name. Napoleon abolished it quickly after taking power, and not just because it was not his own idea :-).

-

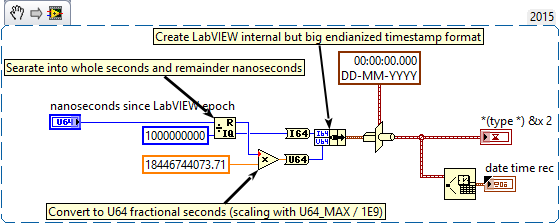

One extra tidbit: the Timestamp fractional part is AFAIK actually limited to the most significant 32 bits of the 64-bit unsigned integer. For your situation that should not matter at all, as you still get a resolution of about 0.2 ns that way. Also, while the Timestamp allows a range of ±2^63 or about ±9.2×10^18 seconds (roughly 2.9×10^11 or 290 billion years) from the LabVIEW time epoch, with a resolution of ~0.2 ns, 99.9% of that range is not even theoretically useful, since calendars as we know them have only existed for about 5×10^3 years. It's pretty unlikely that the Julian or Gregorian calendar will still be used 1000 years from now.
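A small sketch that works out the resolution and range of such a timestamp. It assumes the layout described above (signed 64-bit whole seconds plus an unsigned 64-bit binary fraction of a second, of which only the upper 32 bits are used); the struct and function names are hypothetical and not taken from any LabVIEW header.

```c
#include <stdint.h>

/* Assumed layout: whole seconds since the LabVIEW epoch plus a binary
 * fraction of a second. Type and field names are made up for this sketch. */
typedef struct {
    int64_t  seconds;
    uint64_t fraction;
} lv_timestamp;

#define TWO_POW_32 4294967296.0

/* Smallest time step when only the top 32 fraction bits carry data: 2^-32 s. */
double fraction_resolution_seconds(void)
{
    return 1.0 / TWO_POW_32;
}

/* Converts to seconds as a double, ignoring the lower 32 fraction bits. */
double timestamp_to_seconds(lv_timestamp t)
{
    return (double)t.seconds + (double)(t.fraction >> 32) / TWO_POW_32;
}

/* Approximate span of the signed 64-bit seconds field, in Gregorian years. */
double range_in_years(void)
{
    const double seconds_per_year = 365.2425 * 86400.0;
    return (double)INT64_MAX / seconds_per_year;
}
```

With these definitions the resolution comes out at roughly 2.3×10^-10 s and the one-sided range at roughly 2.9×10^11 years. Note that a double cannot hold a present-day timestamp at that resolution, so `timestamp_to_seconds` loses precision for large second counts.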

-

-

Calling GetValueByPointer.xnode in executable

Rolf Kalbermatter replied to EricLarsen's topic in Calling External Code

Nope, sorry. Somewhere between transferring that image to the LAVA servers and then back to my computer, something seems to have sanitized the PNG image and removed the LabVIEW-specific tag. The image I got onto my computer really only contains PNG data without any custom tags. The same thing seems to happen with the snippet from my last post. I suspect something on Lava is doing some "smart" sanitizing when downloading known file formats, but I can't exclude the possibility of a company firewall doing its "smarts" transparently in the background. I hope that Michael can look into whether snippets are getting sanitized on Lava or whether it is something in our browsers or network. I attached a simplified version of my VI for you.

C String Pointer to String.vi

-

Labview anti-pattern: Action Engines

Rolf Kalbermatter replied to Daklu's topic in Application Design & Architecture

It's not necessarily a mistake, but if you go down that path you have to make double and triple sure not to create circular references and similar stuff in your class hierarchy. While this can work on a Windows system (albeit with horrendous load times when loading the top-level VI, and correspondingly long compile times), an executable on RT usually simply dies on startup with such a hierarchy.

-

Data type matching when calling a DLL

Rolf Kalbermatter replied to patufet_99's topic in Calling External Code

The bitness of LabVIEW is relevant. 32-bit LabVIEW doesn't suddenly behave differently when run on a 64-bit system!

-

Data type matching when calling a DLL

Rolf Kalbermatter replied to patufet_99's topic in Calling External Code

Actually it's more complicated than that! On Windows 32-bit (and Pharlap ETS) LabVIEW indeed assumes byte packing (historical reasons: memory was scarce in 1990, and that is when the 32-bit architecture of LabVIEW was built), and you have to add dummy filler elements to make the LabVIEW cluster match a default-aligned C structure. On Windows 64-bit they changed the alignment of LabVIEW structures to be compatible with the default alignment of 8 bytes that most C compilers assume nowadays. And yes, while the default alignment is 8 bytes, this does not mean that all data elements are aligned to 8 bytes. The alignment rule is that each data element is aligned to a multiple of the smaller of its own size or the current alignment setting (usually the default alignment, but it can be changed temporarily with #pragma pack when declaring a struct datatype). The good news is that if you pad the LabVIEW clusters that you pass to the API, it will work on both Windows versions, but it might not on other platforms (Mac OS X, Linux and embedded cRIO systems). So if you do multiplatform development, a wrapper DLL to fix these issues is still a good idea!

-
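A minimal sketch of the alignment rule from the post above, using made-up struct names. It compares a default-aligned struct, the same members under `#pragma pack(1)` (comparable to a byte-packed cluster), and a byte-packed version with explicit filler elements that reproduces the default layout. The compile-time checks need C11 and a compiler that supports `#pragma pack(push/pop)`, such as GCC, Clang or MSVC; only members of 4 bytes or less are used so the numbers do not depend on how a given platform aligns 8-byte types.

```c
#include <stddef.h>
#include <stdint.h>

/* Default alignment: each member sits at a multiple of its own size. */
typedef struct {
    uint8_t  flag;    /* offset 0 */
    uint32_t count;   /* offset 4, after 3 bytes of implicit padding */
    uint16_t code;    /* offset 8 */
} DefaultAligned;     /* size 12: 2 bytes of tail padding */

/* Byte packing: no implicit padding anywhere. */
#pragma pack(push, 1)
typedef struct {
    uint8_t  flag;    /* offset 0 */
    uint32_t count;   /* offset 1 */
    uint16_t code;    /* offset 5 */
} BytePacked;         /* size 7 */

/* Byte-packed again, but with explicit fillers added by hand so that the
 * layout matches DefaultAligned (the "dummy element" trick). */
typedef struct {
    uint8_t  flag;
    uint8_t  pad0[3];
    uint32_t count;
    uint16_t code;
    uint8_t  pad1[2];
} PaddedPacked;
#pragma pack(pop)

_Static_assert(offsetof(DefaultAligned, count) == 4, "count aligned to 4, not 8");
_Static_assert(offsetof(BytePacked, count) == 1, "no padding when packed");
_Static_assert(offsetof(PaddedPacked, count) == offsetof(DefaultAligned, count),
               "fillers reproduce the default layout");
_Static_assert(sizeof(PaddedPacked) == sizeof(DefaultAligned), "same total size");
```

Because the padded version contains no implicit padding, it has the same layout whether the consumer assumes byte packing or default alignment, which is why padding the cluster works on both Windows bitnesses.

-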

I'm sorry Benoit, but your explanation is at least misleading and, as I understand it, in fact wrong. A C union is not a cluster but more like a case structure in a type description. The variable occupies as much memory as the biggest of the union elements needs. With default alignment these are the offsets from the start of the structure:

    typedef struct tagZCAN_CHANNEL_INIT_CONFIG {
        /*  0 */ UINT can_type; // 0:can 1:canfd
        union {
            struct {
                /*  4 */ UINT acc_code;
                /*  8 */ UINT acc_mask;
                /* 12 */ UINT reserved;
                /* 16 */ BYTE filter;
                /* 17 */ BYTE timing0;
                /* 18 */ BYTE timing1;
                /* 19 */ BYTE mode;
            } can;
            struct {
                /*  4 */ UINT acc_code;
                /*  8 */ UINT acc_mask;
                /* 12 */ UINT abit_timing;
                /* 16 */ UINT dbit_timing;
                /* 20 */ UINT brp;
                /* 24 */ BYTE filter;
                /* 25 */ BYTE mode;
                /* 26 */ USHORT pad;
                /* 28 */ UINT reserved;
            } canfd;
        };
    } ZCAN_CHANNEL_INIT_CONFIG;

So the entire structure will occupy 32 bytes: the 28 bytes of the canfd structure plus the 4 bytes for the can_type variable at the beginning. The can variant only really occupies 20 bytes (its last member, mode, sits at offset 19). While you can pass in such a cluster inside a cluster, with the can_type value set to 0, if you only send the value to the function for reading, you will always have to pass in 32 bytes if the function is supposed to write into this parameter, as you might not know for sure which type the function will return.
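The offsets can be checked by letting the compiler do the arithmetic. The sketch below assumes that `UINT`, `USHORT` and `BYTE` are 32, 16 and 8 bits wide (as in the Windows headers; the typedefs here are stand-ins), and it needs C11 for the anonymous union. `zcan_bytes_used` is a hypothetical helper added only for illustration.

```c
#include <stddef.h>
#include <stdint.h>

/* Stand-ins for the Windows type names used by the vendor header. */
typedef uint32_t UINT;
typedef uint16_t USHORT;
typedef uint8_t  BYTE;

typedef struct tagZCAN_CHANNEL_INIT_CONFIG {
    UINT can_type;                 /* 0: can, 1: canfd */
    union {
        struct {
            UINT acc_code;
            UINT acc_mask;
            UINT reserved;
            BYTE filter;
            BYTE timing0;
            BYTE timing1;
            BYTE mode;
        } can;
        struct {
            UINT   acc_code;
            UINT   acc_mask;
            UINT   abit_timing;
            UINT   dbit_timing;
            UINT   brp;
            BYTE   filter;
            BYTE   mode;
            USHORT pad;
            UINT   reserved;
        } canfd;
    };
} ZCAN_CHANNEL_INIT_CONFIG;

_Static_assert(offsetof(ZCAN_CHANNEL_INIT_CONFIG, can.mode) == 19, "can.mode");
_Static_assert(offsetof(ZCAN_CHANNEL_INIT_CONFIG, canfd.reserved) == 28, "canfd.reserved");
_Static_assert(sizeof(ZCAN_CHANNEL_INIT_CONFIG) == 32, "size of largest variant plus can_type");

/* Bytes that actually carry data for a given can_type value. */
size_t zcan_bytes_used(UINT can_type)
{
    size_t header = offsetof(ZCAN_CHANNEL_INIT_CONFIG, can);
    return header + (can_type == 0
        ? sizeof(((ZCAN_CHANNEL_INIT_CONFIG *)0)->can)
        : sizeof(((ZCAN_CHANNEL_INIT_CONFIG *)0)->canfd));
}
```

Whatever the variant, a buffer that the function may write to should be `sizeof(ZCAN_CHANNEL_INIT_CONFIG)` bytes, which on the LabVIEW side means a cluster (or byte array) of 32 bytes.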

-

Should I use .net or a custom library

Rolf Kalbermatter replied to ATE-ENGE's topic in LabVIEW General

There is no right or wrong here. It always depends! .Net is Windows only, and even though Mono exists for Linux platforms, it is not really a feasible platform for real commercial use, even with .Net Core being made open source. And that is why CurrentGen LabVIEW will never support it, and NexGen LabVIEW, which is based in part on .Net, still has a very long way to go before it may ever be available on non-Windows platforms. My personal guess is that NexGen will at some point support the NI Linux RT based RIO platforms as a target, but it will probably never run on Macs or Linux systems as a host system. So if you want to stay on Windows only and never intend to use that code on anything else, including RIO and other embedded NI platforms, then you should be safe to use .Net. Personally I try to avoid .Net whenever possible, since it is like adding a huge elephant to a dinosaur to try to make a more elegant animal, and using my code on the embedded platforms is regularly a requirement too. Admittedly I do know how to interface to platform APIs directly, so using .Net instead, while it sometimes may seem easier, feels most of the time like a pretty big detour in terms of memory and performance. For things like the example mentioned by the OP, I would definitely prefer a native LabVIEW version, if it exists, over trying to use .Net, be it a preexisting Microsoft platform API (which exists for this functionality) or a 3rd party library. Incidentally I implemented my own hash routines a long time ago, one of them being SHA-256, but also others. Other things, like a fully working cryptographic encryption library, are not feasible to implement as native LabVIEW code.
The expertise required to write such a library correctly is very high, and even if someone is the top expert in this area, a library written by such a person without serious peer review is simply a disaster waiting to happen, next to which problems like Heartbleed or similar that OpenSSL faced will look like peanuts. If platforms other than Windows are even remotely a possibility, then .Net is in my opinion out of the question, leaving either external shared libraries or LabVIEW native libraries.

-

ADODB possible LabVIEW ActiveX Bug

Rolf Kalbermatter replied to GregFreeman's topic in LabVIEW General

One difference between LabVIEW and .Net is the threading for ActiveX components. While ActiveX components can be multithreading safe, they seldom really are, and instead specify that so-called apartment threading is required. This means that the component is safe to be called from any user thread, but that all methods of an object need to be called from the same thread that was used when creating the object. In .Net, as long as you do not use multi-threading by creating Thread() objects or objects derived from Thread(), your code runs single threaded (in the main() thread of your application). LabVIEW threading is more complicated, with automatic multi-threading. This means that you do not have much control over how LabVIEW distributes code over the multiple threads. And the only execution system where LabVIEW does guarantee that all the code is called in the same thread is the UI Execution system. This also means that apartment-threaded ActiveX objects are always executed in the UI Execution system, and have to compete with other UI actions in LabVIEW and anything else that may need to be called in a single-threaded context. This might also play a role here. Aside from that, I'm not really sure how LabVIEW should know not to create a new connection object each time, but instead to reuse an already created one. .Net seems to do it somehow, but the API you are using is in fact a .Net wrapper around the actual COM ADO API.

-

There is no rule anywhere that forbids you to post in multiple places. There is however a rule at least here on Lava that asks people to mention in a post, if they posted the same elsewhere. That is meant as a courtesy to other readers who might later come across a post, so they know where to look for potential additional answers/solutions.

-

Running LabVIEW executable as a Windows Service

Rolf Kalbermatter replied to viSci's topic in LabVIEW General

Not for free, sorry. It's not my decision, but the powers in charge are not interested in supporting such a thing for external parties, nor in giving it away for free. And I don't have any copyright on this code.

-

Duplicate post over here.

-

Investigating LabVIEW Crashes - dmp file

Rolf Kalbermatter replied to 0_o's topic in Development Environment (IDE)

Error 1097 is hardly related to a resource not being deallocated, but almost always to some sort of memory corruption due to overwritten memory. LabVIEW sets up an exception handler around Call Library Nodes (if you don't disable that in the Call Library Node configuration) that catches low-level OS exceptions, and when you put the debug level to the highest setting, it also creates some sort of trampoline around buffers. These are memory areas before and after the buffers passed to the DLL function as parameters, filled with a specific pattern, and after the function returns to LabVIEW, it checks that these trampoline areas still contain the original pattern. If they don't, then the DLL call somehow wrote beyond (or before) the buffer it is supposed to write to, and that is then reported as error 1097 too. It may only have affected the trampoline area, and that would mean that nothing really bad happened, but if it overwrote the trampoline areas it may just as well have overwritten more, and then crashes are going to happen for sure, sooner rather than later.

In most cases the reason for error 1097 is actually a buffer passed to the function that the function is supposed to write some information into. Unlike in normal LabVIEW code, where the LabVIEW nodes will allocate whatever buffer is needed to return an array of values for instance, this is not how C calls usually work. Here the caller has to preallocate a buffer large enough for the function to return information in. One byte too little and the function will overwrite some memory it is not supposed to touch.

I'm not sure which OpenCV wrapper library you are using, but image acquisition interfaces are notorious for such buffer allocation errors. Here you have relatively large buffers that need to be preallocated, and the size of that buffer depends on various things such as width, height, line padding, bit depth, color coding etc., and it is terribly easy to go wrong there and calculate a buffer size that does not match what the C function tries to fill in, because of a mismatch in one or more of these parameters. For instance, if you create an IMAQ image and then retrieve its pointer to pass to the OpenCV library to copy data into, it is very important to use the same image type and size as the OpenCV library wants to fill in, and to tell OpenCV about the IMAQ constraints such as the border size, line stride, etc.
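The guard-area check described above can be sketched in a few lines of C. This only illustrates the general technique; it is not how LabVIEW implements it, and the pattern value, guard size and function names are all assumptions made for the example.

```c
#include <stdlib.h>
#include <string.h>

#define GUARD_BYTES 16      /* assumed size of each guard area */
#define GUARD_FILL  0xA5    /* assumed fill pattern */

/* Signature of a callee that is supposed to write at most len bytes to buf. */
typedef void (*fill_fn)(unsigned char *buf, size_t len);

/* Calls fn with a len-byte buffer surrounded by guard areas.
 * Returns 0 if both guards are intact afterwards, 1 if the callee wrote
 * before or beyond the buffer, -1 if the allocation failed. */
int call_with_guards(fill_fn fn, size_t len)
{
    unsigned char *raw = malloc(len + 2 * GUARD_BYTES);
    int damaged = 0;

    if (raw == NULL)
        return -1;
    memset(raw, GUARD_FILL, GUARD_BYTES);                      /* guard before */
    memset(raw + GUARD_BYTES + len, GUARD_FILL, GUARD_BYTES);  /* guard after */

    fn(raw + GUARD_BYTES, len);

    for (size_t i = 0; i < GUARD_BYTES; i++) {
        if (raw[i] != GUARD_FILL || raw[GUARD_BYTES + len + i] != GUARD_FILL)
            damaged = 1;
    }
    free(raw);
    return damaged;
}

/* A callee that respects the buffer size. */
void well_behaved(unsigned char *buf, size_t len)
{
    memset(buf, 0, len);
}

/* A callee with the classic one-byte-too-many bug. */
void off_by_one(unsigned char *buf, size_t len)
{
    memset(buf, 0, len + 1);
}
```

`call_with_guards(well_behaved, 64)` reports intact guards, while `call_with_guards(off_by_one, 64)` reports damage. The check only catches writes that land inside the guard areas; a callee that overshoots by more than `GUARD_BYTES` still corrupts whatever lies beyond.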