Everything posted by smithd

-

That could work, but you'd probably have to make sure the lvproj links to the source distribution rather than the original. I doubt app builder will do anything with the lvproj file. With the source dist option, you should also double-check that your bitfile works correctly if you're using cRIO.

Back to the original question, I see this as two fundamentally different issues. Basic protection is pretty simple, as described above: you can use either a source dist or a lvlibp and remove the block diagrams. From an IP protection standpoint, you may want to remove front panels and turn off debugging on the code as well. After all, if you can see the inputs and outputs to a function and run it, there might be a way to glean what's going on. It depends on what you're looking for and how paranoid you are. Also depending on your level of paranoia, I'd recommend walking through this series of whitepapers and making sure you at least understand what you are and are not doing to protect your system (just skip to the three links at the bottom): http://www.ni.com/white-paper/13069/en/

The second issue is less clear, as I'm not sure what benefit you get from including the lvproj. It seems like the easiest answer is what shaun said, or to make a post-build VI which copies the lvproj file to the source dist's location. But I am curious why this is important. To me, you'd normally build your exe in dev, make an image, and then use the image for deployment. I'm confused why you'd want to make a specific configuration but not build an exe out of it.

-

As for your other question about plugins, there are a few links I'll put below, but you should ask yourself these questions: Do you really need to do this? ...I mean really, are you sure? ......No really, it's not fun.

OK, so if you really do decide to do this, there are a few things that would come in handy:

https://ekerry.wordpress.com/2012/01/24/created-single-file-object-oriented-plugins-for-use-with-a-labview-executable/
https://decibel.ni.com/content/groups/large-labview-application-development/blog/2011/05/08/an-approach-to-managing-plugins-in-a-labview-application
https://decibel.ni.com/content/docs/DOC-21441

Depending on how many plugins you have, how many platforms you need to support, and how many LabVIEW versions you support (Versions*OS*plugins=lots??), you should look into using a build server like Jenkins.

Finally, as for the actual code, you might find this handy: https://github.com/NISystemsEngineering/CEF/tree/master/Trunk/Configuration%20Framework/class%20discovery%20singleton It's an FGV which locates plugins on disk or in memory, removes duplicates, and allows you to load classes by an unqualified or qualified name (assuming they're unique). It's not particularly complex, but it provides all the little wrapper functions we needed.

-

grandchild calling grandparent method

smithd replied to John Lokanis's topic in Object-Oriented Programming

I don't think this will help anymore (you said you can't modify the grandparent), but I've found it handy in the past for what I think are similar situations. It's essentially a nice way to describe what the dr. was suggesting: http://en.wikipedia.org/wiki/Template_method_pattern Here your top-level parent provides a public static method and a protected dynamic method. This gives defined places for a child to override behavior, but also makes sure the public method does what you want, like catching errors or handling incoming messages. That way you separate the functionality specific to the logic/algorithm from the functionality specific to the child. It's a really handy pattern.
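Since LabVIEW code can't be pasted as text, here is a rough Python sketch of the template method idea described above. The class and method names are made up for illustration; the public `handle` method plays the role of the static-dispatch VI and `_process` plays the role of the protected dynamic-dispatch VI.

```python
from abc import ABC, abstractmethod


class MessageHandler(ABC):
    """Top-level parent: owns the public entry point (hypothetical example class)."""

    def handle(self, message: str) -> str:
        # Public method, not meant to be overridden: it wraps the child's
        # logic with the shared behavior (here, catching errors).
        try:
            return self._process(message)
        except Exception as exc:
            return f"error: {exc}"

    @abstractmethod
    def _process(self, message: str) -> str:
        """Protected hook: the only place a child customizes behavior."""


class UppercaseHandler(MessageHandler):
    """A child only supplies the algorithm-specific part."""

    def _process(self, message: str) -> str:
        if not message:
            raise ValueError("empty message")
        return message.upper()
```

Calling `UppercaseHandler().handle("abc")` goes through the parent's error handling before reaching the child's `_process`, so every child gets the same error behavior for free.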

I also imagine people in favor of this model would be happy to conflate liking a piece of software with liking a style of software. For example, for a long time my group has used yEd for diagramming things. yEd is fine, but it's kind of irritating to use, and last year I found a similar tool online (https://www.draw.io/) which is significantly better in terms of usability and a little bit better in terms of features. But I'd really love it if I could just download it and use it offline.

-

Parallel process significantly slower in runtime then in Development

smithd replied to Wim's topic in LabVIEW General

Now I'm sad I didn't see this earlier. As soon as I read your first post my immediate thought was...McAfee? Fun story: on some of the domain computers here at NI (can't figure out which ones or what is different), the LabVIEW help will take about 1-2 minutes to load any time you click on detailed help because McAfee is...McAfeeing. You go into task manager and kill mcshield (which is railing the CPU) and it works in an instant. It's truly amazing. And...yes, you can add exceptions. McAfee doesn't seem interested in making it easy, but it is possible. Renaming to labview.exe is a pretty sweet workaround though.

- 32 replies


-

webserver on cRIO 9068

smithd replied to kull3rk3ks's topic in Remote Control, Monitoring and the Internet

This is what you want: http://zone.ni.com/reference/en-XX/help/371361K-01/lvhowto/ws_static_content/

But I'd start here if you've never used LabVIEW web services before: http://zone.ni.com/reference/en-XX/help/371361K-01/lvhowto/build_web_service/

Choose Child Implementation based on configuration file

smithd replied to dblk22vball's topic in Object-Oriented Programming

There is a function called Get LV Class Default Value By Name which you might be able to use. It requires that the class is already in memory, but if you follow a standard naming convention ("DeviceA.lvclass", "DeviceB.lvclass"), your user can just type in "A" or "B", you can format that into your class name, and then use that function to grab the right class.

We've also done something more general for our configuration editor framework here: https://github.com/NISystemsEngineering/CEF/tree/master/Trunk/Configuration%20Framework/class%20discovery%20singleton Basically it lets you add a search folder and will try to locate the desired class by qualified name, by filename, or by unqualified name and then pass out the result. It's not particularly fancy, but it helps avoid simple errors like case sensitivity and the like.

(As a side note, if you're likely to have classes where case sensitivity matters, or if you have classes with the same unqualified name (Blah.lvclass) but a different qualified name (A.lvlib::Blah.lvclass vs B.lvlib::Blah.lvclass), then you shouldn't use this. But then you also probably shouldn't be doing those things.)
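To make the naming-convention idea concrete, here is a small Python sketch of the same lookup. It is only an analogy: the `Device*` classes and the `LOADED_CLASSES` table are invented for the example, and the dictionary stands in for "classes already in memory". The case-insensitive key mirrors the point about avoiding case-sensitivity errors.

```python
class Device:
    """Hypothetical parent class for all devices."""

    def describe(self) -> str:
        return "generic device"


class DeviceA(Device):
    def describe(self) -> str:
        return "device A"


class DeviceB(Device):
    def describe(self) -> str:
        return "device B"


# Stand-in for "classes already loaded in memory", keyed case-insensitively.
LOADED_CLASSES = {cls.__name__.lower(): cls for cls in (DeviceA, DeviceB)}


def device_from_config(suffix: str) -> Device:
    """Format the user's short entry ("A", "B") into a class name and look it up."""
    name = f"Device{suffix.strip()}"
    try:
        cls = LOADED_CLASSES[name.lower()]
    except KeyError:
        raise LookupError(f"no loaded class named {name!r}") from None
    return cls()
```

So a configuration file entry of `A` (or `a`) yields a `DeviceA` instance, and an unknown entry fails with a clear error instead of silently falling back to the parent.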

I don't personally know of anyone in my group using IRIG with the timekeeper, but it might help to better explain what it does. The timekeeper gives the FPGA a sense of time through a background sync process and a global variable which defines system time. It doesn't know anything about time itself -- it's simply trying to keep up with what you, as the user of the API, say is happening. To give you an example with GPS, the module provides a single pulse per second and then the current timestamp at that time. You're responsible for feeding that time to the timekeeper, and the timekeeper is responsible for saying "oh hey, I thought 990 ms passed but really a full second passed, so I need to change how quickly I increment my counter". The timestamp is not required to be absolute (it could be tied to another FPGA, or to the RTOS); you simply have to give it an "estimated dt" and a "true dt", basically.

With that in mind, if you can get some fixed known signal from the IRIG API you mentioned, the timekeeper could allow you to timestamp your data. It can theoretically let you master the RT side as well (https://decibel.ni.com/content/projects/ni-timesync-custom-time-reference), but I'm not sure how up-to-date that is or if that functionality ever got released.
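The "estimated dt" vs "true dt" idea can be sketched in a few lines of Python. This is not the timekeeper's actual implementation, just a toy model of the concept: `SoftClock`, its fields, and the simple proportional correction are all assumptions made for illustration.

```python
class SoftClock:
    """Toy free-running counter whose rate is corrected by an external reference."""

    def __init__(self, nominal_ns_per_tick: float) -> None:
        self.ns_per_tick = nominal_ns_per_tick  # how much time one tick is worth
        self.now_ns = 0.0                       # the clock's idea of elapsed time

    def tick(self, ticks: int = 1) -> None:
        # Advance local time by the current estimate of the tick length.
        self.now_ns += ticks * self.ns_per_tick

    def sync(self, estimated_dt_ns: float, true_dt_ns: float) -> None:
        # The caller reports what the clock thought had elapsed and what the
        # reference says really elapsed; scale the increment to match.
        if estimated_dt_ns <= 0:
            raise ValueError("estimated dt must be positive")
        self.ns_per_tick *= true_dt_ns / estimated_dt_ns
```

With a nominal 25 ns tick, reporting "I thought 990 ms passed but it was really 1 s" raises the increment to about 25.25 ns, so the same number of ticks now adds up to a full second.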

-

I think git on the command line is pretty unusable, but I've found SourceTree to be the correct subset of functionality to do everything that I want. I don't particularly care for the let's-fork-everything style, so we basically use git as a non-distributed system. The easy renaming of files, lack of locks, and the ability to instantly create a local repository are all excellent. And, as the article said, a lot of people use git because of GitHub. That's fine too. All told, I find it to be just a lot simpler to work with than Perforce or TFS (I haven't used TSVN in ages).

-

For a fixed number you could use a feedback node with delay=200. For an unknown number you can use any number of memory blocks: an array, an actual block of memory, or a FIFO. These all have advantages and disadvantages depending on how much space you have and whether you need access to the data for anything other than the average. However, the basic concept is exactly the same as what is shown in that other thread.

-

Depends on how fast you want it to run. You could of course store an array on the FPGA and sum it every time, but that will take a while for a decently large array. If that's fine, then you're close; you just need to remember that FPGA only supports fixed-size arrays and that the order in which you sum samples doesn't particularly matter. You really just need an array, a counter to keep track of your oldest value, and replace array subset.

If you do need this to run faster, you should think about what happens on every iteration -- you only remove one value and add another value. So let's say you start with (A+B+C)/3 as your avg. On the next iteration it's (B+C+D)/3. So the difference is adding the newest sample divided by the size (D/3) and subtracting the oldest sample divided by the size (A/3).
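Here is a minimal Python sketch of the faster approach, assuming a fixed-size buffer, an index that tracks the oldest value, and a running sum that is adjusted by one add and one subtract per sample. The `MovingAverage` name is made up, and on the FPGA you would use fixed-point or integer math rather than Python floats.

```python
class MovingAverage:
    """Fixed-size moving average with O(1) work per sample (illustrative only)."""

    def __init__(self, size: int) -> None:
        if size <= 0:
            raise ValueError("size must be positive")
        self._buf = [0.0] * size  # fixed-size array, like on the FPGA
        self._oldest = 0          # counter pointing at the oldest value
        self._sum = 0.0           # running sum of everything in the buffer

    def update(self, sample: float) -> float:
        # Add the newest sample and drop the oldest one from the running sum.
        self._sum += sample - self._buf[self._oldest]
        # Overwrite the oldest slot (the "replace array subset" step).
        self._buf[self._oldest] = sample
        self._oldest = (self._oldest + 1) % len(self._buf)
        return self._sum / len(self._buf)
```

Note that until the buffer has filled, the zeros it starts with are part of the average. Keeping the sum and dividing once gives the same result as adding D/3 and subtracting A/3, but avoids dividing each sample separately.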

-

Timed loop actual start timestamp gives erroneous value

smithd replied to eberaud's topic in LabVIEW General

Ah, so you want documentation. That's tougher. I found this (http://digital.ni.com/public.nsf/allkb/FA363EDD5AB5AD8686256F9C001EB323), which mentions the slower speed of timed loops, but in all honesty they aren't that much slower anymore, and you shouldn't really see a difference on Windows, where scheduling jitter will have way more effect than a few cycles of extra calculations. The main reasons, as I understand it, are:

- It puts the loop into a single thread, which means that the code can run slower because LabVIEW can't parallelize much.
- TLs are slightly slower due to bookkeeping and features like processor affinity, and most people don't need most of the capabilities of timed loops.
- It can confuse some users because timed loops are associated with determinism, but on Windows you don't actually get determinism.

Broadly speaking, the timed loop was created to enable highly deterministic applications. It does that by allowing you to put extremely tight (for LabVIEW) controls on how the code is run, letting you throw it into a single thread running on a single core at really high priority on a preemptive scheduling system. While these are important for determinism, in general you're going to get better overall performance by letting the schedulers and execution systems operate as they'd like, since they are optimized for that general case. Thus, there is a cost to timed loops which makes sense if you need the features. On Windows, none of those features make your code more deterministic since Windows is not an RT OS, so the benefits (if any) are usually outweighed by the costs.

As for whether I'm suggesting you replace them all: not necessarily. It's not going to kill your code to have them, of course, so making changes when the code does function might not be a good plan. Besides that, it sounds like you're using absolute timing for some of these loops, which is more difficult to get outside of the timed loop (it's still, of course, very possible, but if I'm not mistaken you have to do a lot of the math yourself). So, for now I'd say stick with the workaround and maybe slowly try changing a few of the loops over where it makes sense.

Timed loop actual start timestamp gives erroneous value

smithd replied to eberaud's topic in LabVIEW General

In order to better schedule the code, a timed loop is placed into its own higher-priority thread. Since there is one thread dedicated to the loop, LabVIEW must serialize all timed loop code (as opposed to normal LabVIEW, where code is executed on one of a ton of different threads). This serialization is one of the reasons the recommendation is only to use them when you absolutely need one of the features, generally synchronizing to a specific external timing source like scan engine or assigning the thread to a specific CPU. So it will not truly execute in parallel, although of course you still have to show LabVIEW the data dependency in one way or another. For some reason this is not documented in the help for the timed loop, but instead in the help on how LabVIEW does threading: http://zone.ni.com/reference/en-XX/help/370622K-01/lvrtbestpractices/rt_priorities/

I also wanted to note that a sequence structure does not usually add overhead, although it can make code execute more slowly in some specific situations by interfering with the normal flow of data. That shouldn't be the case here, as you're only using a single frame. It's essentially equivalent to a wire with no data.

Also, timed loops are generally not recommended on Windows, which it sounds like you're using. They may still be used, and should function, but because Windows is not an RTOS you won't actually have a highly deterministic program. This makes it more likely that the costs of the timed loop outweigh the benefits.

I'm with paul on this one. I think I said this earlier in the thread but my group ran into some of the same issues on a project and ended up in a pretty terrible state, with things like the excel toolkit being pulled onto the cRIO. Careful management of dependencies is pretty easy to ignore in LabVIEW for a while but it bites you in the end.

-

Timed loop actual start timestamp gives erroneous value

smithd replied to eberaud's topic in LabVIEW General

If you can reproduce it reliably and can narrow it down to a reasonable set of code, absolutely. I wasn't able to find anything like this in the records when I did a quick search a moment ago. Obviously the simpler the code, the better. Also be sure to note down what type of RT target you're using (I didn't see that in your post above) and any software you might have installed on the target, especially things like timesync which might mess with the time values in the system. For now, I'd say the easiest workaround would be to just get the current time at the start of each loop iteration using the normal primitive.

Preserving cluster version among different LabVIEW versions

smithd replied to vivante's topic in LabVIEW General

Wow, awesome. I was under the impression you could just use Variant To Flattened String, which includes the type info, but maybe your VI is what I heard about. And that VI also has a different connector pane than everything else, 3:2 :/

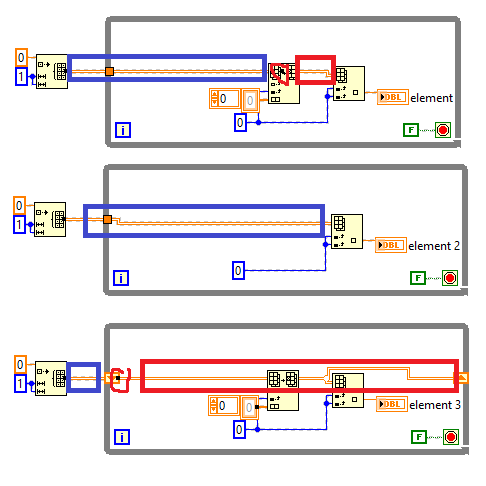

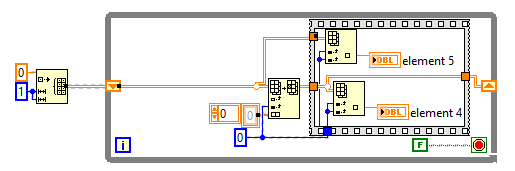

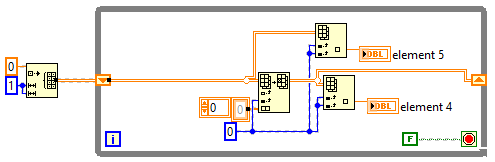

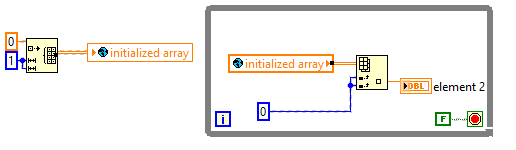

This is very tricky, and I definitely don't totally understand it. Also, despite the NI under my name, I am not remotely part of R&D and so may just be making this stuff up. But it seems to be mostly accurate in my experience.

LabVIEW is going to use a set of buffers to store your array. You can see these buffers with the "show buffer allocations" tool, but the tool doesn't show the full story. In the specific image in Shaun's post, there should be no difference between a tunnel and a shift register, because everything in the loop is *completely* read-only, meaning that LabVIEW can reference just one buffer (one copy of the array) from multiple locations. If you modify the array (for example, with your in-place element structure), there has to be one copy of the array which retains the original values and another copy which stores the new values. That's defined by dataflow semantics. However, LabVIEW can perform different optimizations depending on how you use them.

I've attached a picture. The circled dots are what the buffer allocations tool shows you, and the rectangles are my guess at the lifetime of each copy of the array:

There are three versions. In version 1 the array is modified inside the loop, so LabVIEW cannot optimize. It must take the original array buffer (blue), make a copy of it in its original form into a second buffer (red), and then change elements of the red buffer so that the code downstream can access the updated (red) data. Version 2 shows the read-only case. Only one buffer is required. Version 3 shows where a shift register can aid in optimization. As in version 1, we are changing the buffer with replace array subset, but because the shift register basically tells LabVIEW "these two buffers are actually the same", it doesn't need to copy the data on every iteration.

This changes with one simple modification:

However, you'll note that in order to force a new data copy (note the dot), I had to use a sequence structure to tell LabVIEW "these two versions must be available in memory simultaneously". If you remove the sequence structure, LabVIEW just changes the flow of execution to remove the copy (by performing index before replace):

For fun, I've also put a global version together. You'll note that the copy is also made on every iteration here (as LabVIEW has to leave the buffer in the global location and must also make sure the local buffer, on the wire, is up to date).

Sorry for the tl;dr, but hopefully this makes some sense. If not, please correct me.

-

With a timed loop, I believe it defaults to skipping missed iterations. In the configuration dialog there should be a setting ("mode", I think) which tells it to run right away. However, if this is a Windows machine you shouldn't be using a timed loop at all, as it will probably do more harm than good. And if this *isn't* a Windows machine, then railing your CPU (which is what changing the timed loop mode will do) is not a good idea. Just use a normal loop.

As for the actual problem you're encountering, it's hard to say without a better look at the code. You might use the profiler tool (http://digital.ni.com/public.nsf/allkb/9515BF080191A32086256D670069AB68) to give you a better idea of the worst offenders in your code, then focus just on those functions. As ned said, the index should be fast and isn't likely to be the performance issue. Copying a 2D array (reading from a global) or any number of other things could be the problem.

-

TCP Write works when it shouldn't

smithd replied to Cat's topic in Remote Control, Monitoring and the Internet

If both PCs are running LabVIEW, you can use network streams and the flush command to make sure data is transferred and read on the host. Getting an application-level acknowledgement will obviously slow things down tremendously, but if that's what you need....

Error 2: Memory is full - but it isn't

smithd replied to ThomasGutzler's topic in Object-Oriented Programming

Why couldn't you install the database on the same machine? Local comms should be fast, and depending on how complex your math is, you might be able to make the db take care of it for you in the form of a view or function.

Unless I'm mistaken, he was pointing out that the representation of data in memory is in the form of pointers (http://www.ni.com/white-paper/3574/en/#toc2, section "What is the in-memory layout..." or http://zone.ni.com/reference/en-XX/help/371361H-01/lvconcepts/how_labview_stores_data_in_memory/). So you have an array of handles, not an array of the actual data. If you replace an array element you're probably just swapping two pointers, as an example.

"Separate Compiled Code From Source"? (LV 2014)

smithd replied to Jim Kring's topic in Development Environment (IDE)

I've been using source separate since 2011 and it seems to work 95% of the time. If you use any of my group's libraries which have been updated recently (much of the stuff on ni.com/referencedesigns), you are also likely using source separate code.

However, because of other issues we've taken to using at least two classes or libraries to help resolve the situation you describe. One is responsible for any data or function which has to be shared between RT and the host. Then there is one class or library for the host, and one for RT. I think my colleague Burt S has already shown you some of the stuff which uses this with editor (PC), configuration (shared API), and runtime (RT) classes. This pattern works really well for us and we've begun applying it to new projects (or refactoring ongoing projects to get closer to this, relieving many of our edit-time headaches). While there is still shared stuff, it all seems to update correctly and LabVIEW doesn't appear to have any sync issues.

Where I have had annoying issues is with case sensitivity (kind of an odd situation, but the long and short of it is that LabVIEW on Windows ignores case errors while LabVIEW on RT rejects case issues -- but I haven't confirmed this is related to source sep) and with modifying execution settings (for example, if you enable debugging you sometimes have to reboot the cRIO for it to take effect, and it's even worse if you use any inlining). Both of these issues are documented.

^^This is more or less what we've been doing with the configuration editor framework (http://www.ni.com/example/51881/en/) and it's pretty effective -- CEF is a hierarchy, not containment, but the implementation is close enough.

We've also found that for deployed systems (i.e. RT) we end up making 3 classes for every code module. The first class is the editor, which contains all the UI-related stuff which causes headaches if it's anywhere near an RT system. The second is a config object responsible for ensuring the configuration is always a valid one as well as doing the to/from string, and the idea is that this class can be loaded into any target without heartache. The third is a runtime object which does whatever is necessary at runtime, but could cause headaches if it's loaded up into memory on a Windows machine. Using all three is kind of annoying (some boilerplate necessary), but there's a definite separation of responsibilities and for us it's had a net positive effect.

The other thing I've done with the above is to make a single UI responsible for a whole family of classes, like the filtering case here. Basically I store the extra parameters as key-value pairs, and the various classes altogether implement an extra three methods. One says "what kvpairs do you need to run correctly", one says "does this kvpair look OK to you" (validation), and the third says "OK, here's the kvpair, run with it" (runtime interpretation). This has worked pretty well for optional parameters like timeouts and such, which you wouldn't want to make a whole new UI for but are still valuable to expose. Also, this obviously only works well if you (a) know good default values and (b) have some mechanism for a user configuring the system so that the computer doesn't have to be smart.
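As a rough illustration of the three key-value-pair methods, here is a Python sketch. The names (`Filter`, `LowPass`, `required_params`, `validate_param`, `apply_param`, `configure`) are invented for the example and are not taken from the CEF code; values are kept as strings, as they would be coming out of a generic UI.

```python
from abc import ABC, abstractmethod


class Filter(ABC):
    """Hypothetical family of classes that share one generic configuration UI."""

    @abstractmethod
    def required_params(self) -> dict[str, str]:
        """What kvpairs do you need to run correctly? (key -> default value)"""

    @abstractmethod
    def validate_param(self, key: str, value: str) -> bool:
        """Does this kvpair look OK to you?"""

    @abstractmethod
    def apply_param(self, key: str, value: str) -> None:
        """OK, here's the kvpair, run with it."""


class LowPass(Filter):
    def __init__(self) -> None:
        self.cutoff_hz = 100.0

    def required_params(self) -> dict[str, str]:
        return {"cutoff_hz": "100.0"}

    def validate_param(self, key: str, value: str) -> bool:
        if key != "cutoff_hz":
            return False
        try:
            return float(value) > 0
        except ValueError:
            return False

    def apply_param(self, key: str, value: str) -> None:
        if not self.validate_param(key, value):
            raise ValueError(f"bad value for {key}: {value!r}")
        self.cutoff_hz = float(value)


def configure(obj: Filter, user_values: dict[str, str]) -> None:
    """Generic code path: one routine can configure any class in the family."""
    for key, default in obj.required_params().items():
        obj.apply_param(key, user_values.get(key, default))
```

The generic `configure` routine never needs to know what a cutoff frequency is, which is the point: a new class in the family only has to answer the three questions, and the defaults cover anything the user leaves out.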

-

The dongle solution is probably better, but this walks through a similar solution just using the information we can pull from the cRIO SW: http://www.ni.com/example/30912/en/ Note this hasn't been updated in ages, so the concepts still work but there might be easier ways to get the data, like the system config tool -- for example, if you yank out the serial number and eth0's MAC address you could be decently sure you have a unique identifier for the cRIO. You might also want to follow many of these steps, especially disabling the FTP or WebDAV server (step 9): http://www.ni.com/white-paper/13272/en/#toc9
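To show what "serial number plus MAC address as a unique identifier" might look like in practice, here is a Python sketch. Reading the values from the target is out of scope (that part would be done in LabVIEW), and the function names and the normalization rules are assumptions for the example. Since both inputs can be read by anyone with access to the target, this identifies a unit rather than strongly protecting it.

```python
import hashlib
import hmac


def device_fingerprint(serial_number: str, mac_address: str) -> str:
    """Combine serial number and MAC into one identifier (illustrative scheme)."""
    # Normalize so "00-80-2F-..." and "00:80:2f:..." give the same result.
    mac = mac_address.strip().replace("-", ":").lower()
    serial = serial_number.strip().upper()
    material = f"{serial}|{mac}".encode("utf-8")
    return hashlib.sha256(material).hexdigest()


def is_authorized(serial_number: str, mac_address: str, expected: str) -> bool:
    """Check the running target against the fingerprint stored at build time."""
    actual = device_fingerprint(serial_number, mac_address)
    return hmac.compare_digest(actual, expected)
```

The application would compute the fingerprint at startup and refuse to run if it doesn't match the value it was licensed for.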

- 4 replies


-

Yeah, XML was just a convenience, but for some reason a lot of people get hung up on that. Sometimes I think it would have been better to include no serialization at all. I don't think I've ever used the XML format for anything. If I were selecting all over again in 2013, I'd pick the JSON parser instead (even if it is a bit of a pain to use for no real reason <_<). Anyway, the MGI files look nice, simple enough format. Good luck with the rest of the CVT.

- 8 replies


-

For the use he described, what would be the superior alternative? I'm on board with what you're saying, generally, but in this case...

- 8 replies
