-

Posts

3,463 -

Joined

-

Last visited

-

Days Won

298

Everything posted by hooovahh

-

Yes thank you I see that now. The link to a login page, private hosting, and the listing for Commercial Pricing at the top of the page made me think it was more restrictive.

-

I'm unsure of your tone and intent with text, but I'll do my best to explain the vocabulary. The Free (as in beer) is like when I go to a friend's house and they offer me a free beer. I can have this beer, and I am entitled to enjoying it without monetary cost. Of course someone else paid for it, but I get to enjoy it, they shared it with me. I do not have the rights or the freedom to reproduce this beer, market it, and sell it. My free-ness is limited to the license you provide. I guess an equivalent analogy here could be if my friend gives me a beer, but expects me to help him move. It is an agreement we both have. If I am "free to do what I want" that is a different kind of freedom. A freedom that is more like free speech, a right that we have. It isn't as limited as someone giving me a beer, it is closer to someone giving me a beer recipe. Typically this is associated with free open source software. Open source software is free (like beer), someone is giving it to me without my having to pay. But the freedom I get goes farther than that. This probably is a problem with the English language, and how words can have many meanings and without context "free" can mean different things. The Beer/Speech is just there to help clear up what kind of free we are talking about.

-

Yup. Last time I checked.

-

Do you guys also disagree philosophically about sharing your work online? That link doesn't appear to be to a download but a login page, it isn't clear to me if the code is free (as in beer). I assume it isn't free (as in speech). In this situation I think Rolf's implementation meets my needs. I initially thought I needed more low level control, but I really don't.

-

All very good information thanks for the discussion. I was mostly just interested in reentrant for the ZLIB Deflate VI specifically. For my test I took 65k random CAN frames I had, organized them by IDs, then made something like roughly 60 calls to the deflate, getting compressed blocks for each ID and time. Just to highlight the improvement I turned the compression level up to 9, and it took about 400ms. In the real world the default compression level is just fine. I then set the loop to enable parallelism with 16 instances, which was the default for my CPU. That time to process the same frames at the same compression level took 90ms. In the real world I will likely be trying to handle something like a million frames, in chunks, maybe using pipelining. So the improvement of 4.5 times faster for the same result is a nice benefit if all I need to do is enable reentrant on a single VI. Just something for you to consider, and seems like a fairly low risk on this VI, since we aren't talking to outside resources, just a stream. I'd feel similarly for the Inflate VI. I certainly would not try to access the same stream on two different functions at the same time. Thanks for the info on time. I feel fairly certain that in my application, a double for time is the first easy step to improve log file size and compression.
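The parallel Deflate test can be sketched outside LabVIEW as well. This is only an illustration in Python, not the OpenG code: the block contents are synthetic, the helper names are made up, and it assumes CPython's standard `zlib` module, which as far as I know releases the interpreter lock while compressing so threads can overlap. Each call works on its own buffer, so no stream is shared between workers.

```python
import random
import zlib
from concurrent.futures import ThreadPoolExecutor

def make_blocks(count=60, size=8192, seed=0):
    """Build `count` synthetic byte blocks standing in for per-ID CAN data."""
    rng = random.Random(seed)
    blocks = []
    for _ in range(count):
        pattern = bytes(rng.randrange(256) for _ in range(64))
        blocks.append(pattern * (size // 64))
    return blocks

def deflate_serial(blocks, level=9):
    """One Deflate call after another."""
    return [zlib.compress(block, level) for block in blocks]

def deflate_parallel(blocks, level=9, workers=16):
    """Same calls, spread over a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda block: zlib.compress(block, level), blocks))

blocks = make_blocks()
serial = deflate_serial(blocks)
parallel = deflate_parallel(blocks)
print(len(serial), "blocks, identical output:", serial == parallel)
```

The point of the comparison is that the compressed bytes come out the same either way; only the wall-clock time should differ, and how much depends on the CPU and the data.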

-

I don't see any function name in the DLL mentioning Deflate/Inflate, just lvzlib_compress and lvzlib_compress2 for the newer releases. Still I don't know if you need to expose these extra functions just for me. I did some more testing using the OpenG Deflate, and having two single blocks for each ID (Timestamp and payload) still results in a measurable level of improvement on its own for my CAN log testing. 37MB uncompressed, 5.3MB with Vector compression, and 4.7MB for this test. I don't think that going to multiple blocks within Deflate will have that much of a savings, since the trees and pairs need to be recreated anyway. What did have a measurable improvement is calling the OpenG Deflate function in parallel. Is that compress call thread safe? Can the VI just be set to reentrant? If so I do highly suggest making that change to the VI. I saw you are supporting back to LabVIEW 8.6 and I'm unsure what options it had. I suspect it did not have separate compiled code back then. Edit: Oh if I reduce the timestamp constant down to a floating double, the size goes down to 2.5MB. I may need to look into the difference in precision and what is lost with that reduction.
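On the precision question, a rough way to see what a double can resolve is to look at the spacing between adjacent representable values at a given magnitude. The sketch below assumes the timestamp is stored as seconds since the LabVIEW epoch (1904-01-01 UTC) in an IEEE 754 double; the two sample values are picked only for illustration.

```python
import math

# Assumption: seconds since 1904-01-01 UTC stored in an IEEE 754 double.
absolute_seconds = 3.8e9     # an absolute time, roughly the year 2024
relative_seconds = 3600.0    # one hour into a log, relative to its start

# math.ulp gives the gap to the next representable double at that magnitude.
absolute_step = math.ulp(absolute_seconds)
relative_step = math.ulp(relative_seconds)

print(f"absolute time resolves to {absolute_step:.3e} s")
print(f"relative time resolves to {relative_step:.3e} s")
```

An absolute time in that range resolves to just under half a microsecond, which may or may not be enough depending on the timestamp resolution of the CAN hardware. Storing time relative to the start of the log keeps far more resolution in the same eight bytes.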

-

Thanks but for the OpenG lvzlib I only see lvzlib_compress used for the Deflate function. Rolf I might be interested in these functions being exposed if that isn't too much to ask. Edit: I need to test more. My space improvements with lower level control might have been a bug. Need to unit test.

-

So then is this what an Idea Exchange should be? Ask NI to expose the Inflate/Deflate zlib functions they already have? I don't mind making it, I just want to know what I'm asking for. Also I continued down my CAN logging experiment with some promising results. I took a log I had with 500k frames in it with a mix of HS and FD frames. This raw data was roughly 37MB. I created a Vector compatible BLF file, which compresses the stream of frames written in the order they come in, and it was 5.3MB. Then I made a new file that has one block for header information containing start and end frames, formats, and frame IDs, then two more blocks for each frame ID. One for timestamp data, and another for the payload data. This orders the data so we should have more repeated patterns not broken up by other timestamp or frame data. This file would be roughly 1.7MB containing the same information. That's a pretty significant savings. Processing time was hard to calculate. Going to the BLF using OpenG Deflate was about 2 seconds. The BLF conversion with my zlib takes...considerably longer. Like 36 minutes. LabVIEW's multithreaded-ness can only save me from so much before limitations can't be overcome. I'm unsure what improvements can be made but I'm not that optimistic. There are some inefficiencies for sure, but I really just can't come close to the OpenG Deflate. Timing my CAN optimized blocks is hard too since I have to spend time organizing it, which is a thing I could do in real time as frames came in if this were in a real application. This does get me thinking though. The OpenG implementation doesn't have a lot of control for how it works at the block level. I wouldn't mind if there is more control over the ability to define what data goes into what block. At the moment I suspect the OpenG Deflate just has one block and everything is in it. Which to be fair I could still work with.
Just each unique frame ID would get its own Deflate, with a single block in it, instead of the Deflate containing multiple blocks, for multiple frames. Is that level of control something zlib will expose? I also noticed limitations like it deciding to use the fixed or dynamic table on its own. For testing I was hoping I could pick what to do.
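For reference, the zlib library itself does have knobs for both of these: a full flush ends the current block and resets the match history, and the `Z_FIXED` strategy asks for fixed Huffman tables. Whether the OpenG lvzlib wrapper exposes either one is a separate question. The sketch below uses Python's standard `zlib` binding purely to illustrate the idea; the CAN IDs, payload bytes, and helper names are made up.

```python
import zlib

def deflate_groups(groups, level=6, strategy=zlib.Z_DEFAULT_STRATEGY):
    """Write every ID into one raw deflate stream, ending the block and
    resetting the match history after each ID with a full flush."""
    comp = zlib.compressobj(level, zlib.DEFLATED, -zlib.MAX_WBITS, 8, strategy)
    pieces = {}
    for can_id in sorted(groups):
        pieces[can_id] = (comp.compress(groups[can_id])
                          + comp.flush(zlib.Z_FULL_FLUSH))
    tail = comp.flush(zlib.Z_FINISH)   # closes the stream
    return pieces, tail

def inflate_piece(piece):
    """A piece written after a full flush can be inflated on its own."""
    return zlib.decompressobj(-zlib.MAX_WBITS).decompress(piece)

def first_block_type(piece):
    """BTYPE of the first block: 0 stored, 1 fixed tables, 2 dynamic tables."""
    return (piece[0] >> 1) & 0b11

# Hypothetical per-ID payload data.
groups = {
    0x100: bytes([0x11, 0x22, 0x33, 0x44]) * 200,
    0x200: bytes([0xAA, 0xBB, 0xCC, 0xDD]) * 200,
}
pieces, tail = deflate_groups(groups, strategy=zlib.Z_FIXED)
print("block type for 0x100:", first_block_type(pieces[0x100]))
print("0x200 inflates alone:", inflate_piece(pieces[0x200]) == groups[0x200])
```

Because each piece starts on a byte boundary with no references into earlier data, a reader could jump straight to one ID without inflating the others, at the cost of a few marker bytes per flush.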

-

How to load a base64-encoded image in LabVIEW?

hooovahh replied to Harris Hu's topic in LabVIEW General

😅 You might be waiting a while, I'm mostly interested in compression, not decompression. That being said in the post I made, there is a VI called Process Huffman Tree and Process Data - Inflate Test under the Sandbox folder. I found it on the NI forums at some point and thought it was neat but I wasn't ready to use it yet. It isn't complete obviously but does the walking through of bits of the tree, to bytes. EDIT: Here is the post on NI's forums I found it on.

-

Yes I'm trying to think about where I want to end this endeavor. I could support the storage and fixed tables modes, which I think are both way WAY easier to handle than the dynamic table it already has. And one area where I think I could actually make something useful is in compression of CAN frame data and storage. zlib compression works on detecting repeating patterns. And raw CAN data for a single frame ID is very often going to repeat values from that same ID. But the standard BLF log file doesn't order frames by IDs, it orders them by time stamps. So you might get a single repeated frame, but you likely won't have huge repeating sections of a file if they are ordered this way. zlib has a concept of blocks, and over the weekend I thought about how each block could be a CAN ID, compressing all frames from just that ID. That would have huge amounts of repetition, and would save lots of space. And this could be a very multi-threaded operation since each ID could be compressed at once. I like thinking about all this, but the actual work seems like it might not be as valuable to others. I mean who needs yet another file format, for an already obscure log data? Even if it is faster, or smaller? I might run some tests and see what I come up with, and see if it is worth the effort. As for debugging bit level issues. The AI was pretty decent at this too. I would paste in a set of bytes and ask it to figure out what the values were for various things. It would then go about the zlib analysis and tell me what it thought the issue was. It hallucinated a couple of times, but it did fairly well. Yeah performance isn't wonderful, but it also isn't terrible. I think some direct memory manipulation with LabVIEW calls could help, but I'm unsure how good I can make it, and how often rusty nails in the attic will poke me. I think reading and writing PNG files with compression would be a good addition to the LabVIEW RT tool box.
At the moment I don't have a working solution for this, but suspect that if I put some time into it I could have something working. I was making web servers on RT that would publish a page for controlling and viewing a running VI. The easiest way to send image data over was as a PNG, but the only option I found was to send it as uncompressed PNG images which are quite a bit larger than even the base level compression. I do wonder why NI doesn't have a native Deflate/Inflate built into LabVIEW. I get that if they use the zlib binaries they need a different one for each target, but I feel that that is a somewhat manageable list. They already have to support creating Zip files on the various targets. If they support Deflate/Inflate, they can do the rest in native G to support zip compression.
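To show how little is left to do once Deflate is available, here is a minimal sketch of a PNG writer in Python. It is not the RT web server code; it assumes 8-bit grayscale pixels, uses only filter type 0 on every row, and the function names are made up. Compression level 0 gives the "uncompressed" PNG case (stored blocks), anything higher runs real Deflate over the same bytes.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_chunk(kind, data):
    """A chunk is: length, type, data, then a CRC-32 over type + data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)

def encode_gray_png(pixels, width, height, level=6):
    """pixels: width*height bytes of 8-bit grayscale, row-major order."""
    if len(pixels) != width * height:
        raise ValueError("pixel buffer does not match width * height")
    # Each scanline is prefixed with one filter byte; 0 means no filtering.
    raw = b"".join(b"\x00" + pixels[y * width:(y + 1) * width]
                   for y in range(height))
    # IHDR: width, height, bit depth 8, color type 0 (grayscale),
    # compression 0, filter 0, interlace 0.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    return (PNG_SIGNATURE
            + png_chunk(b"IHDR", ihdr)
            + png_chunk(b"IDAT", zlib.compress(raw, level))
            + png_chunk(b"IEND", b""))

# A horizontal gradient repeated on 64 rows as sample image data.
pixels = bytes(range(256)) * 64
stored = encode_gray_png(pixels, 256, 64, level=0)
packed = encode_gray_png(pixels, 256, 64, level=6)
print("level 0:", len(stored), "bytes, level 6:", len(packed), "bytes")
```

Everything except the `zlib.compress` call is plain byte shuffling plus a CRC-32, which is the part that could stay in native G.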

-

So a couple of years ago I was reading about the ZLIB documentation on compression and how it works. It was an interesting blog post going into how it works, and what compression algorithms like zip really do. This is using the LZ77 and Huffman Tables. It was very educational and I thought it might be fun to try to write some of it in G. The deflate function in ZLIB is very well understood from an external code call, and so the only place, ever so slight, where it made sense in my head was to use it on LabVIEW RT. The wonderful OpenG Zip package has support for Linux RT in version 4.2.0b1 as posted here. For now this is the version I will be sticking with because of the RT support. Still I went on my little journey trying to make my own in pure LabVIEW to see what I could do. My first attempt failed immensely and I did not have the knowledge to understand what was wrong, or how to debug it. As a test of AI progression I decided to dig up this old code and start asking AI about what I could do to improve my code, and to finally have it working properly. Well over the holiday break Google Gemini delivered. It was very helpful for the first 90% or so. It was great having a dialog with back and forth asking about edge cases, and how things are handled. It gave examples and knew what the next steps were. Admittedly it is a somewhat academic problem, and so maybe that's why the AI did so well. And I did still reference some of the other content online. The last 10% were a bit of a pain. The AI hallucinated several times giving wrong information, or analyzed my byte streams incorrectly. But this did help me understand it even more since I had to debug it. So attached is my first go at it in 2022 Q3. It requires some packages from VIPM.IO. Image Manipulation, for making some debug tree drawings, which is actually disabled at the moment. And the new version of my Array package 3.1.3.23. So how is performance?
Well I only have the deflate function, and it only is on the dynamic table, which only gets called if there is some amount of data around 1K and larger. I tested it with random stuff with lots of repetition and my 700k string took about 100ms to process while the OpenG method took about 2ms. Compression was similar but OpenG was about 5% smaller too. It was a lot of fun, I learned a lot, and will probably apply things I learned, but realistically I will stick with the OpenG for real work. If there are improvements to make, the largest time sink is in detecting the patterns. It is a 32k sliding window and I'm unsure of what techniques can be used to make it faster. ZLIB G Compression.zip
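On the pattern detection cost, one common technique (and, as far as I know, roughly what zlib does internally) is to index every 3-byte sequence as it is passed, then only compare against earlier positions that start with the same 3 bytes instead of scanning the whole 32k window. The Python sketch below shows that idea with a greedy parser. It is not the G code from the zip and not zlib's actual implementation; `MAX_CHAIN` and the function names are assumptions for illustration.

```python
from collections import defaultdict

WINDOW = 32 * 1024   # deflate's maximum back-reference distance
MIN_MATCH = 3        # shortest match deflate can encode
MAX_MATCH = 258      # longest match deflate can encode
MAX_CHAIN = 64       # assumption: cap on candidates tried per position

def lz77_tokens(data):
    """Greedy LZ77 parse: ints are literals, tuples are (length, distance)."""
    positions = defaultdict(list)   # 3-byte key -> earlier positions, oldest first
    tokens = []
    i, n = 0, len(data)
    while i < n:
        best_len, best_dist = 0, 0
        if i + MIN_MATCH <= n:
            limit = min(MAX_MATCH, n - i)
            # Try the most recent candidates first; they are the closest.
            for cand in reversed(positions[data[i:i + MIN_MATCH]][-MAX_CHAIN:]):
                if i - cand > WINDOW:
                    break           # anything older is even further away
                length = MIN_MATCH
                while length < limit and data[cand + length] == data[i + length]:
                    length += 1
                if length > best_len:
                    best_len, best_dist = length, i - cand
        if best_len >= MIN_MATCH:
            tokens.append((best_len, best_dist))
            step = best_len
        else:
            tokens.append(data[i])
            step = 1
        # Index every position being consumed so later data can match it.
        for j in range(i, min(i + step, n - MIN_MATCH + 1)):
            positions[data[j:j + MIN_MATCH]].append(j)
        i += step
    return tokens

def lz77_expand(tokens):
    """Inverse of lz77_tokens, used here only to check the parse."""
    out = bytearray()
    for tok in tokens:
        if isinstance(tok, tuple):
            length, dist = tok
            for _ in range(length):
                out.append(out[-dist])   # byte-wise copy handles overlap
        else:
            out.append(tok)
    return bytes(out)

sample = b"abcabcabcabcXYZabcabc" * 50
tokens = lz77_tokens(sample)
print(len(sample), "bytes ->", len(tokens), "tokens")
```

Capping the number of candidates per position trades a little compression ratio for speed, which is more or less what the zlib compression levels tune.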

-

A11A11111, or any such alpha-numeric serial from that era worked. For a while at the company I was working at, we would enter A11A11111 as a key, then not activate, then go through the process of activating offline, by sending NI the PC's unique 20 (25?) digit code. This would then activate like it should but with the added benefit of not putting the serial you activated with on the splash screen. We would go to a conference or user group to present, and if we launched LabVIEW, it would pop up with the key we used to activate all software we had access to. Since then there is an INI key I think that hides it, but here is an idea exchange I saw on it. LabVIEW 5 EXEs also ran without needing to install the runtime engine. LabVIEW 6 and 7 EXEs could run without installing the runtime engine if you put files in special locations. Here is a thread, where the PDF that explains it is missing but the important information remains.

-

LabVIEWs response time during editing becomes so long

hooovahh replied to MikaelH's topic in LabVIEW General

My projects can be on that order of size and editing can be a real pain. I pointed out the difficulties to Darren in QD responsiveness and he suggested looking for and removing circular dependencies in libraries and classes. I think it helped but not by much. Going to PPLs isn't really an option since so many of the VIs are in reuse packages, and those packages are intended to be used across multiple targets, Windows and RT. This has a cascading effect: linking to things means they need to be PPLs, made for that specific target, and then the functions palette needs to be target specific to pull in the right edition of the dependencies. AQ mentioned a few techniques for target specific VI loading, but I couldn't get it to work properly for the full project.

-

Wow that is a great tool. My suggestion (which is not as good) is to use a NoSleep program. I made one in AutoIt scripting years ago and have been having it run on startup of test PCs for years. There are a couple versions, one would turn on and off the scroll lock every 30 seconds, but sometimes it did need a mouse jiggle to keep it awake. Here is one I found online that is similar to what I have used. One down side of this I did discover is that if you use remote desktop, and you remote into a machine that has a mouse jiggle no sleep, then it will do weird things in the host PC.

-

I've never done something like that before, but maybe seeing some code would help. I suspect you are in the wrong application context, and somehow getting access to the XNode in the running instance, instead of the editing VI. XNodes are only updated in the edit time environment.

-

My HA install is running on a bare metal computer, which is an 8th gen i3 embedded PC, 8GB of RAM, 250GB SSD. Here are the install instructions. I wanted a bit more performance, and a company was throwing these computers away. Besides this can give me more head room to do other things if I ever want to. There's even an option to install HA on Windows, but it mentions it in a VM specifically. Nevermind, this is explaining how to have it on Windows, which involves having a Linux VM.

-

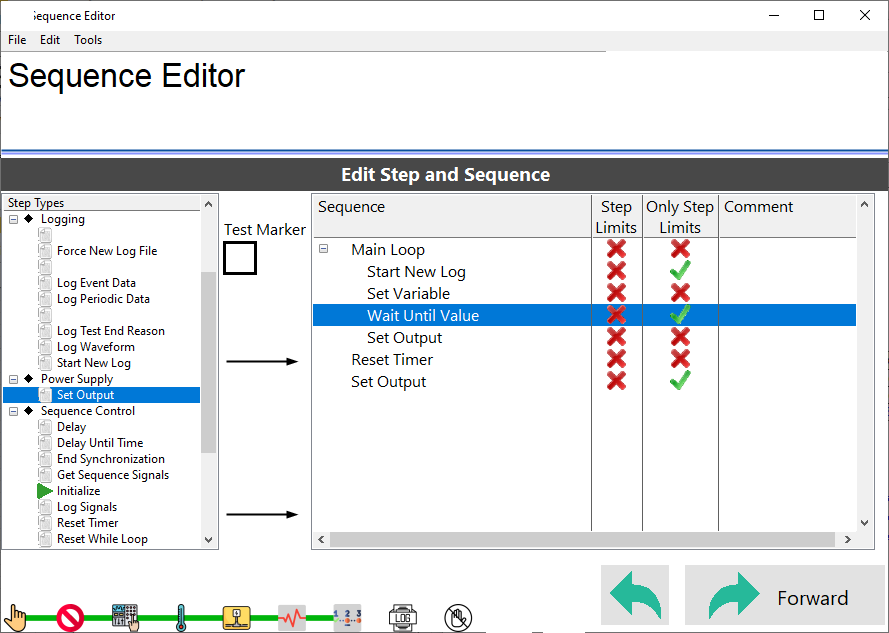

David has posted to LAVA 1 time, and it was 10 years ago. I don't think he's going to respond. You cannot control the position of the glyph with the built in function. The only solution I know of is a pretty time consuming one, where you put a transparent picture control on top of the tree, and then you can do whatever you want. I've applied this to a sequence editor. The left and right are tree controls (as noticed by the collapsing icon). The tree on the right has two centered columns. This has two picture controls that position themselves to look like it is part of the tree. There's a decent amount of work on the back end to handle things like window resizing, collapsing the tree, and other random behaviors. But the end result is something I have full control of. Also in a related topic here is an idea exchange to support larger glyphs. There is a linked example on how to fake it in a fairly convincing way.

-

Tree control how to add custom icon to column

hooovahh replied to Vandy_Gan's topic in LabVIEW Community Edition

This still works. This is still a MCLB not a tree. I'm unsure if the feature exists for trees.

-

Tree control how to add custom icon to column

hooovahh replied to Vandy_Gan's topic in LabVIEW Community Edition

You've probably already found some stuff but here is an old thread that talks a bit about it. It is an unofficial feature that NI seemingly never finished, or never documented. INI keys can help you, or I think you can copy a MCLB that has the feature enabled. Another solution I've done in the past is to have a 2D picture box on top of the control, then having that be an image that you can set. This gets way more complicated with scrolling, and resizing windows, but it allows you to have glyphs that aren't the default size which is also what I wanted.

-

Oh yes great point. I've always been in the world of English applications, but a Label would probably be a safer choice. I'm just thinking about situations where at runtime connecting to things could be changed for a more dynamic UI. It has been done before different ways of course.

-

I've been manually installing it myself. I copy the liblvzlib.so to the controller, then I SSH in with PuTTY, and sudo copy to /usr/local/lib/liblvzlib.so, then restart the controller. I was happy using the old CDF format custom install for as long as I could.

-

Very neat looking. I like the concept. I do think people might not like the unprotected nature of being able to read and write to tags from anywhere. Imagine I have a sensor that keeps flipping back to NaN. I suspect troubleshooting where the tag is getting written from, or debugging this type of wireless program, can be a challenge. If tools are made for tracking this type of thing it might make it easier. This also would make something like dynamic UIs easier. You can have a set of controls that can be inserted into subpanels, and then to read/write data you just need the controls named something specific. Oh that gets me thinking, can the tag be based on the Caption of the control not the label? That way it can change at runtime. Also I think the video should have been a YouTube link. Watching such a long GIF is a weird experience without pause, or playback controls.

-

I am also rocking version 4.2.0b1-1 for the same reasons.