Search the Community

Showing results for tags 'labview'.

-

I frequently find myself looking for a good pattern for collecting array elements into a pool until it reaches a certain size, and then discarding the oldest elements first. I have used very stupid methods, like a bunch of Feedback Nodes feeding into a Build Array node. But today I thought up one that I really like, so I thought I'd share it. It's a simple pattern with no crossing wires. Perhaps someone has thought of something better; if so, don't hesitate to share.
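The pattern in the post is graphical LabVIEW code. In a text language, the same "keep only the newest N elements" behavior is usually a fixed-capacity ring buffer. A minimal C sketch, assuming `double` elements and a capacity fixed at compile time; `Ring`, `ring_push` and `ring_copy_oldest_first` are hypothetical names, not taken from the post:

```c
#include <stddef.h>

#define RING_CAPACITY 4  /* hypothetical pool size */

typedef struct {
    double data[RING_CAPACITY];
    size_t head;   /* index where the next element will be written */
    size_t count;  /* number of valid elements, at most RING_CAPACITY */
} Ring;

/* Append a value; once the pool is full, overwrite the oldest element. */
static void ring_push(Ring *r, double value) {
    r->data[r->head] = value;
    r->head = (r->head + 1) % RING_CAPACITY;
    if (r->count < RING_CAPACITY) {
        r->count++;
    }
}

/* Copy the contents into out[], ordered oldest to newest; returns the count. */
static size_t ring_copy_oldest_first(const Ring *r, double *out) {
    /* The oldest element sits 'count' slots behind head (mod capacity). */
    size_t start = (r->head + RING_CAPACITY - r->count) % RING_CAPACITY;
    for (size_t i = 0; i < r->count; i++) {
        out[i] = r->data[(start + i) % RING_CAPACITY];
    }
    return r->count;
}
```

Pushing 1 through 6 into this capacity-4 buffer leaves 3, 4, 5, 6 in oldest-first order. Overwriting in place avoids reallocating or shifting the array on every insert, which is the cost the Build Array approach pays.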

-

I was following a Python HTTP communication tutorial (https://aiohttp-demos.readthedocs.io/en/latest/tutorial.html#views) when I had to disable the NI Application Web Server to proceed. And then I thought, what the **** am I doing? Maybe I should take the free (well, prepaid) gift of a working web server. Here's my task. A central HQ computer will have a GUI that monitors five machine stations, each of which has its own computer. Roughly every 10 ms (negotiable), each station sends a report consisting of two arrays, the larger being 2048 data points, the other much smaller. Whenever HQ feels like it, HQ can tell a station to start or stop (its computer stays on). A local IP network is used, with a router at each end. There is also a Raspberry Pi with its own IP address at each station's router, which can send camera frames to HQ. The station computers use Python and C++ to do their work, not counting whatever needs to be added to communicate with HQ. Your advice, please? Should I use LabVIEW? On both ends or just at HQ? And which, if any, of the helpful add-ons suggested by Hooovahh should I use?
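To get a feel for the network load, here is a back-of-envelope estimate. It assumes each of the 2048 points is an 8-byte float64, ignores the smaller array and all protocol overhead, and takes the 10 ms period literally (100 reports per second):

$$
\begin{aligned}
\text{per report} &= 2048 \times 8\ \text{B} = 16\,384\ \text{B} \\
\text{per station} &= 16\,384\ \text{B} \times 100\ \text{s}^{-1} = 1\,638\,400\ \text{B/s} \\
\text{all five stations} &= 5 \times 1\,638\,400\ \text{B/s} = 8\,192\,000\ \text{B/s} \approx 65.5\ \text{Mbit/s}
\end{aligned}
$$

Under these assumptions, the aggregate fits comfortably on Gigabit Ethernet but is a large fraction of a 100 Mbit/s link, and that is before the Raspberry Pi camera frames are added.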

-

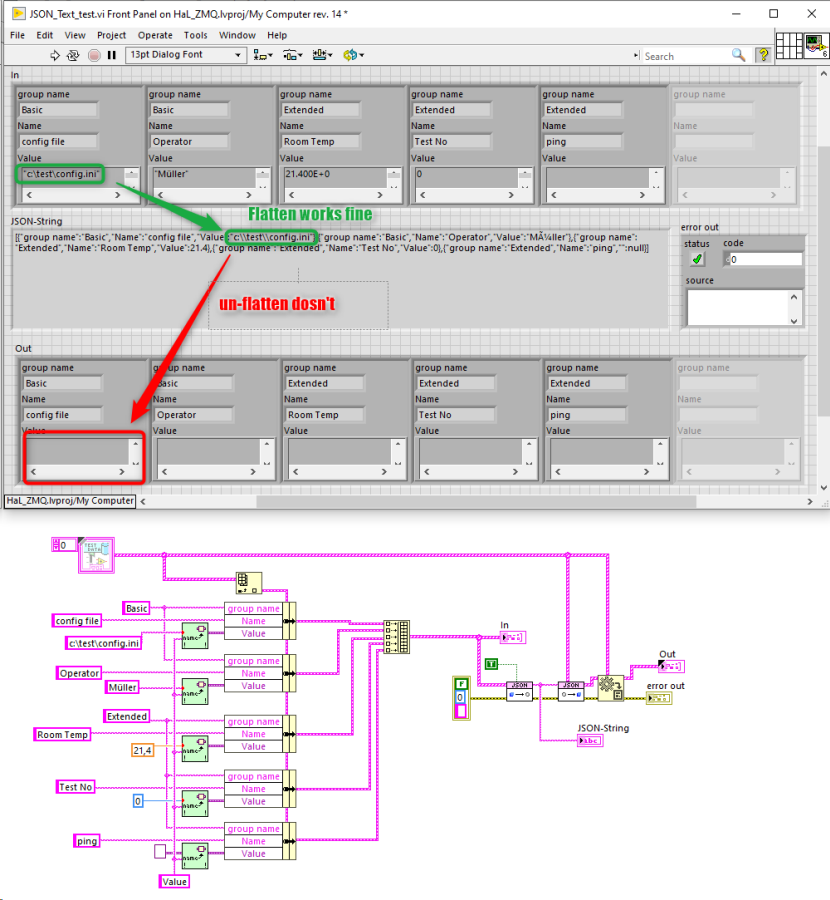

Hi! I have to convert a dynamically generated array into a JSON string and back. Unfortunately, I found that the unflatten method loses the variant data. See the screenshot of the front panel and block diagram and the comments inside (JSON_Text_test.vi). Is this a bug in JSON Text, or is my way of constructing the data not supported? In the latter case, I would have to modify huge parts of my code, so I hope it is a bug 😉 The second thing I noticed is that the name "Value" of the cluster element is not used during flatten. Instead, the name of the connected constant / control / wire is used. I found the green VI ("Set Data Name__ogtk.vi") in the OpenG Toolkit, which lets me set the variant data name programmatically. As you can imagine, I would prefer not to depend on the OpenG VI. Thanks in advance for your kind help 🙂

-

Has anyone tried creating a subVI programmatically through scripting, by selecting a set of block diagram objects?

-

I have installed LabVIEW 2020 on Debian Buster by converting the RPM packages to .deb with alien. Because of an architecture mismatch, I deleted the *i386.rpm files before conversion. My problem is that after creating a project, when I right-click "Build Specifications", I can only select "Source Distribution"; "Application" does not show up as an option. I will be grateful for any suggestions. Thank you in advance.

-

I would like to build a model using image data and an NI cRIO-9063 with an NI 9264 module for voltage control. For the image part, I wrote a Python script using the OpenCV libraries that detects some points. For voltage control, I use the cRIO-9063 with the NI 9264 analog voltage output module. My question: I am new to LabVIEW and have no idea how to make a voltage-control loop in Python. Is there a Python library that connects directly to the cRIO and NI 9264? If not, how can I combine my image data (which is in Python) with the cRIO? I need urgent help.

3 replies | Tagged with: imageprocessing, python (and 1 more)

-

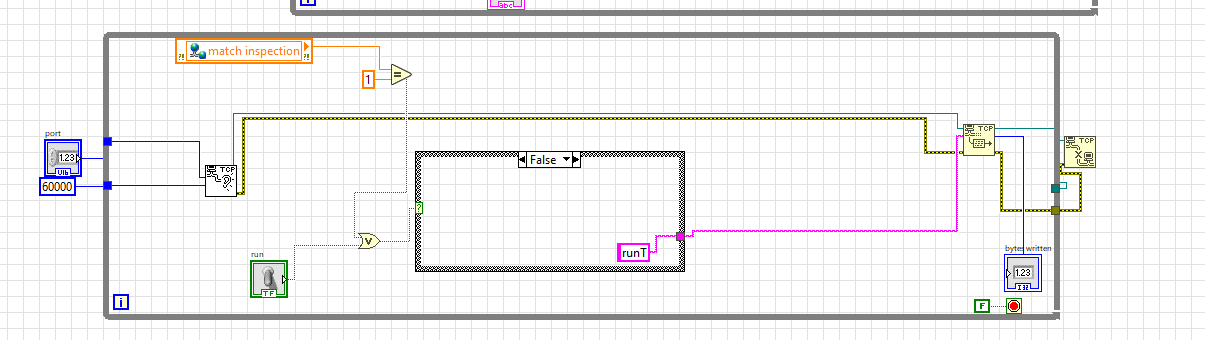

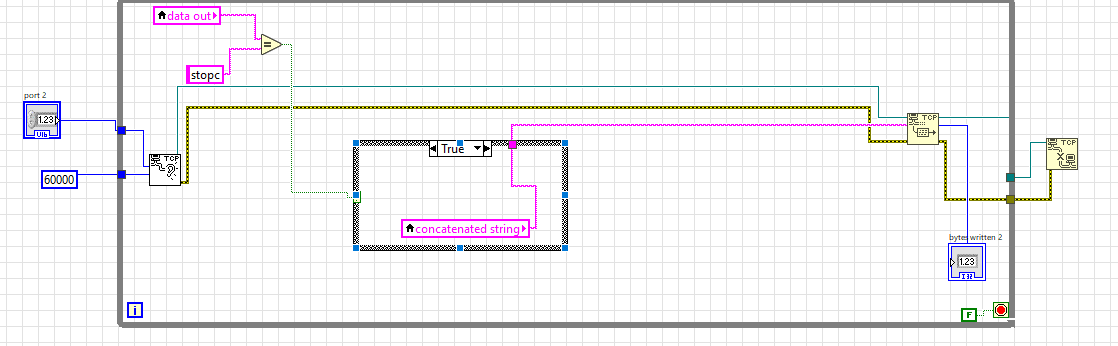

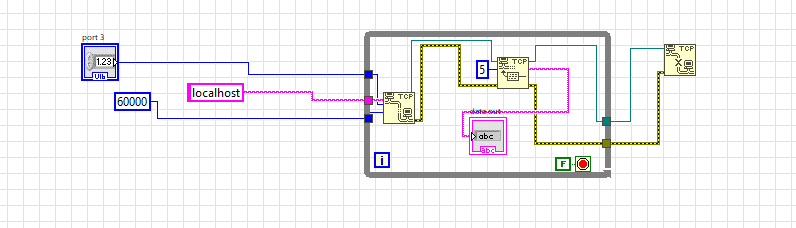

Hi there, I am working on a machine vision project with LabVIEW. The camera locates some parts and sends their coordinates via TCP/IP, and I created a client, also in LabVIEW, to display these coordinates. Here is how the communication goes. First, if the camera detects something, a message is sent to the client to inform it. Then, if the message was received correctly, the client responds with another message requesting the coordinates. Finally, the server sends the coordinates to the client. Here I ran into some problems. 1) The messages have variable length ("x=0,y=0,Rz=0" ==> "x=225,y=255,Rz=5" ==> "x=225,y=255,Rz=90"; the length varies between 16 and 22), so with a constant "bytes to read" the full message is not displayed. 2) The client works fine, but at some point it shows errors like "LabVIEW: (Hex 0x80) Open connection limit exceeded" and "LabVIEW: (Hex 0x42) The network connection was closed by the peer. If you are using the Open VI Reference function on a remote VI Server connection, verify that the machine is allowed access by selecting Tools>>Options>>VI Server on the server side".
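A common way to handle variable-length messages over TCP is to prefix each message with its length. The reader first reads a fixed-size header and then exactly that many payload bytes (in LabVIEW, two TCP Read calls). A minimal C sketch of the encoding and decoding, assuming a 4-byte big-endian length header; `frame_encode` and `frame_decode` are hypothetical names, and the camera side and the client would both have to agree on this format:

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Write a 4-byte big-endian length followed by the payload into out.
   Returns the total number of bytes written, or 0 if out is too small. */
static size_t frame_encode(const char *payload, size_t len,
                           uint8_t *out, size_t out_size) {
    if (len > UINT32_MAX || out_size < 4 + len) {
        return 0;
    }
    out[0] = (uint8_t)(len >> 24);
    out[1] = (uint8_t)(len >> 16);
    out[2] = (uint8_t)(len >> 8);
    out[3] = (uint8_t)len;
    memcpy(out + 4, payload, len);
    return 4 + len;
}

/* Parse one frame from buf. On success, copy the payload into msg as a
   NUL-terminated string and return the number of bytes consumed. Return 0
   if buf does not yet hold a complete frame or msg is too small. */
static size_t frame_decode(const uint8_t *buf, size_t buf_len,
                           char *msg, size_t msg_size) {
    if (buf_len < 4) {
        return 0;  /* header not complete yet */
    }
    uint32_t len = ((uint32_t)buf[0] << 24) | ((uint32_t)buf[1] << 16) |
                   ((uint32_t)buf[2] << 8) | (uint32_t)buf[3];
    if (buf_len - 4 < len || msg_size <= len) {
        return 0;  /* payload incomplete, or no room for the terminator */
    }
    memcpy(msg, buf + 4, len);
    msg[len] = '\0';
    return 4 + (size_t)len;
}
```

Because the reader always knows how many payload bytes to expect, there is no need to guess a constant "bytes to read" for messages whose length varies.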

-

I am just starting out trying to use Python code from a LabVIEW application (mostly for some image analysis). This is for a large project where some programmers are more comfortable developing in Python than in LabVIEW. I have not done any Python before, and there seems to be a bewildering array of options: many IDEs, libraries, and Python-LabVIEW connectors. So I was wondering whether people who have been using Python with LabVIEW could share their experiences and describe which set of technologies they use.

-

GRBL 1.1

Hi everyone, since the GRBL standard is open source, I decided to post the library I used in LabVIEW to interface with a standard GRBL 1.1 controller. Not all GRBL functions have been integrated, but this is a very good start. Enjoy, and let me know your comments. Benoit

Submitter: Benoit | Submitted: 07/26/2018 | Category: Hardware | LabVIEW Version
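GRBL's serial protocol is line based: the host sends a G-code line and reads response lines back. A minimal C sketch of classifying one received line, assuming the GRBL 1.1 response formats (`ok`, `error:<code>`, `ALARM:<code>`, `<...>` status reports, `[...]` feedback messages); the enum and function name are hypothetical and not part of Benoit's library:

```c
#include <stdlib.h>
#include <string.h>

typedef enum {
    GRBL_OK,        /* "ok": the previous line was accepted */
    GRBL_ERROR,     /* "error:N": the previous line was rejected */
    GRBL_ALARM,     /* "ALARM:N": the controller entered an alarm state */
    GRBL_STATUS,    /* "<...>": real-time status report */
    GRBL_FEEDBACK,  /* "[...]": feedback / informational message */
    GRBL_OTHER      /* anything else, e.g. the startup banner */
} GrblLineKind;

/* Classify one response line (without trailing CR/LF). For error and
   alarm lines, *code receives the numeric code; otherwise it is 0. */
static GrblLineKind grbl_classify(const char *line, int *code) {
    *code = 0;
    if (strcmp(line, "ok") == 0) {
        return GRBL_OK;
    }
    if (strncmp(line, "error:", 6) == 0) {
        *code = atoi(line + 6);
        return GRBL_ERROR;
    }
    if (strncmp(line, "ALARM:", 6) == 0) {
        *code = atoi(line + 6);
        return GRBL_ALARM;
    }
    if (line[0] == '<') {
        return GRBL_STATUS;
    }
    if (line[0] == '[') {
        return GRBL_FEEDBACK;
    }
    return GRBL_OTHER;
}
```

The simplest streaming approach is to send one line and wait for its `ok` or `error:N` before sending the next, while status reports and feedback lines may arrive in between.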

-

Hello everybody! I'm wondering how many people have tried the new vipm.io site. We have added a ton of features to make it easy to discover LabVIEW tools, and there are some cool ones coming soon. Check it out and let me know what you think 😀 Javier

-

VIPM.io now allows you to post LabVIEW Resources, Ideas, and Tools. For example, you could post a link to a video tutorial or blog article about a package. You can also post ideas, like feature requests or new tools. Best of all, package developers are notified when you post your ideas and resources, and you can comment on and discuss posts with the community. Take a look at this video to learn more: https://www.vipm.io/posts/664960df-f111-4e13-989a-24be8207182d/

-

I want to connect my CCD camera to LabVIEW. The details of my system are given below. I cannot get it to connect; please help.

- OS: Windows 7, 64-bit
- LabVIEW Run-Time 2013 (64-bit)
- NI-IMAQ 4.8
- NI-IMAQdx 4.3
- Camera: QICAM Monochrome Cooled (QIC-F-M-12-C)
  - Model: QICAM
  - Resolution: 1392 x 1040
  - Sensor: 1/2" Sony ICX205 progressive-scan interline CCD
  - Pixel size: 4.65 x 4.65 µm
  - Cooling type: Peltier thermoelectric cooling to 25˚C below ambient
  - Digital output: 12 bit
  - Video output: FireWire (IEEE 1394b)
  - Max. frame rate: 10 fps at full resolution @ 12 bits
  - Pixel scan: 20, 10, 5, 2.5 MHz
  - Mount type: C-mount optical format

-

Hi folks, I'm taking the CLA exam in a few weeks and would like some feedback on the solution I put together (attached: CLA_ATM_QMH_PRACTICE.zip). A few specific questions: Can I dump tags into the VI Documentation of a VI, as I did in Error Handler - Console Error.vi, and still get credit, since they are instructions for developers to complete this work? This would be my strategy if I run out of time. Does this seem like a passing solution? Why or why not? Where do you think I would lose the most points in this solution? Any other feedback on this exam, or general strategy tips, are greatly appreciated! Best regards, Aaron

-

It's nothing too fancy. I added a few things to the UI to support more features, in preparation for adding the VI renaming/relinking step that was done separately in the OpenG DEAB tool before calling the OpenG Package Builder. But I never got around to actually adding the DEAB part to the Package Builder. It's extra difficult because the DEAB component doesn't currently support newer features like lvclass and lvlib at all, and of course no malleable VIs etc. I can post what I have somewhere, but don't get too excited.

63 replies | Tagged with: open source, share (and 3 more)

-

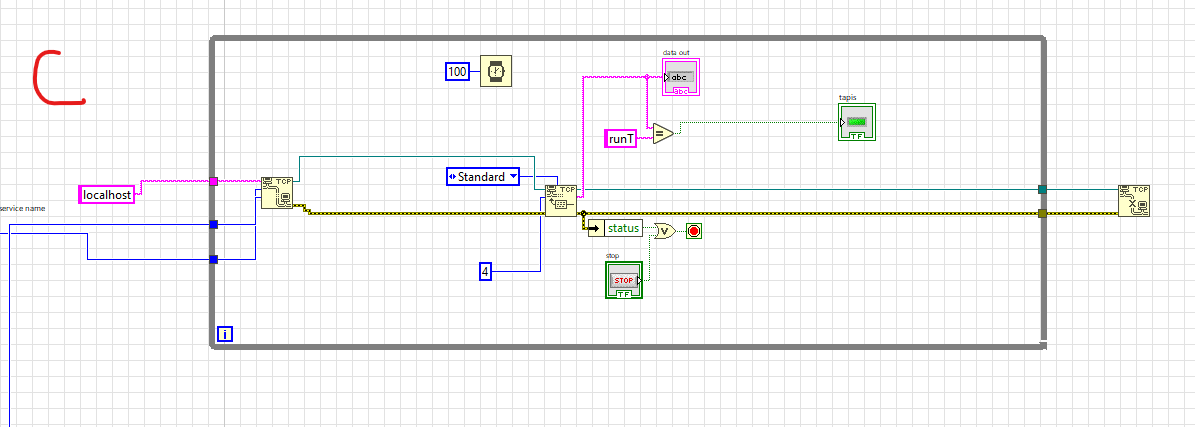

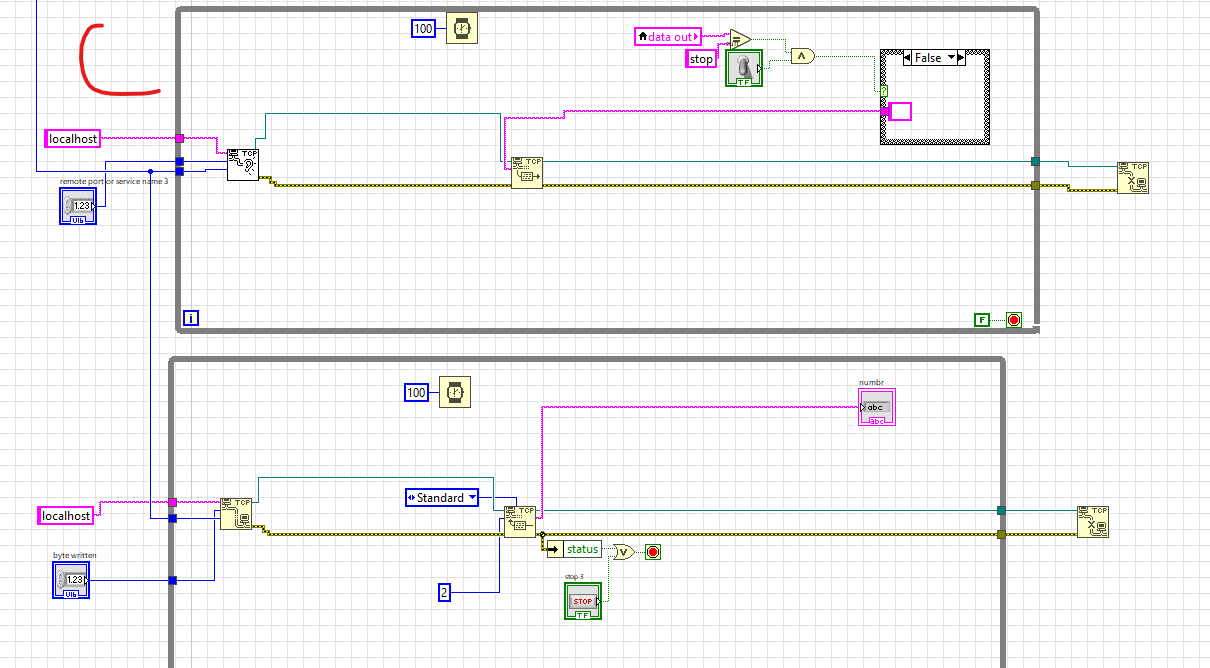

I am implementing a TCP connection between a myRIO (client) and Python (server). The goal is to send data from the server to the client, perform some calculations, and send the result back to the server. I need to keep changing the data being sent to the client. I noticed that the first data set works fine and the result is returned to the server, but the client cannot read subsequent data. While debugging, I found that the error comes from the first Read function in the "Reading values" subVI, but I don't know how to fix it. How do I go about it? I have attached the files below: Reading Unknown Bytes from TCP.vi, Second_client.vi, SimpleServer.py
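A frequent cause of "the first message works, later ones don't" is that TCP is a byte stream: one read can return part of a message, or the end of one message plus the start of the next. If the Python server terminates each message with a newline (an assumption; the post does not show the protocol), the client can buffer incoming bytes and only hand out complete lines. A minimal C sketch of that accumulator; `LineBuf` and its functions are hypothetical names:

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define LINEBUF_SIZE 256  /* hypothetical maximum number of buffered bytes */

typedef struct {
    char data[LINEBUF_SIZE];
    size_t len;  /* number of bytes currently buffered */
} LineBuf;

/* Append a chunk as returned by one socket read. Returns false if it does
   not fit (the caller should treat that as a protocol error). */
static bool linebuf_append(LineBuf *b, const char *chunk, size_t n) {
    if (n > LINEBUF_SIZE - b->len) {
        return false;
    }
    memcpy(b->data + b->len, chunk, n);
    b->len += n;
    return true;
}

/* If a complete '\n'-terminated line is buffered, copy it (without the
   newline) into out as a C string, drop it from the buffer and return true.
   Returns false if no full line is buffered yet or out is too small. */
static bool linebuf_next_line(LineBuf *b, char *out, size_t out_size) {
    char *nl = memchr(b->data, '\n', b->len);
    if (nl == NULL) {
        return false;  /* incomplete: wait for more bytes */
    }
    size_t line_len = (size_t)(nl - b->data);
    if (line_len >= out_size) {
        return false;
    }
    memcpy(out, b->data, line_len);
    out[line_len] = '\0';
    /* Shift the leftover bytes (start of the next message) to the front. */
    size_t rest = b->len - line_len - 1;
    memmove(b->data, nl + 1, rest);
    b->len = rest;
    return true;
}
```

The key design point is that leftover bytes after a newline are kept for the next message instead of being discarded, so a read that straddles two messages does not corrupt the second one.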

-

Hello, I am quite new to LabVIEW and I have some questions. We have come up with a simulation built in LabVIEW, as shown in the folder. However, we will be using a myRIO to connect current and voltage sensors and send the data to LabVIEW while it is running. We are having difficulty finding out whether it is possible to send the wave files and the pop-up messages (messagebox.vi and Player2Wins.vi) to NI Dashboard for LabVIEW on an iPad by using the myRIO. Is it possible for the myRIO to store wave files and the additional VIs and send these to NI Dashboard for LabVIEW? Otherwise, is it possible for LabVIEW to send the entire program.vi, messagebox.vi and Player2Wins.vi to the cloud, so that people can see the GUI of program.vi remotely? (We have found information about LabVIEW web services etc. We would like people to be able to view our GUI, press the "Press start" button, and close the pop-up messages from a website, not only from our local network.) I sincerely apologize if what I am asking does not make sense. I hope that someone can help me with this, as it is very difficult for me and I need some guidance from people who are familiar with these things. Thanks for taking the time to read this and reply. Simulation_Build_(3).zip Pop-up_Message.vi Player2Wins.vi

-

55 easily distinguishable color.vi

This is the only way I found to get a set of colors that are unique and easily distinguishable. The maximum I saw on the web was about 26. This one offers 55 of them, without gray tones. You can modify this VI to support gray tones as well, which brings it up to 60 colors.

Submitter: Benoit | Submitted: 05/30/2018 | Category: User Interface | LabVIEW Version

4 replies | Tagged with: labview, multiple color (and 2 more)

-

Hello, I created a new tips repository at https://edupez.com/ (English and Portuguese).

-

Hello everyone, I have created a toolkit for creating named variables of any data type in memory and accessing their values by name from any part of the code within the same scope. These variables store instantaneous values. The best use case for this toolkit is to acquire data, set variable values, and read them from any loop. Do not use it for read-modify-write. Once variables are created in memory, you can group them and access their values by name. You can create a variable of any data type and access its value using its name. I have tested this toolkit for memory and performance, and in my tests it is much faster than CVT and the Tag Bus Library. Please check it out and let me know your suggestions. Use LabVIEW 15 SP1. BR, Aniket Gadekar, aniket99.gadekar@gmail.com DataVariableToolkit.zip
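Conceptually, a toolkit like this is a registry that maps names to the latest value written (last write wins). A minimal single-threaded C sketch of that idea, assuming values are stored as raw bytes of at most 64 bytes each and that a linear name search is acceptable. It is not Aniket's implementation, and all names are hypothetical. A version shared between loops would need locking, and even then a read followed by a separate write is not atomic, which is why read-modify-write is discouraged:

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MAX_VARS 16         /* hypothetical capacity */
#define MAX_NAME 32
#define MAX_VALUE_BYTES 64

typedef struct {
    char name[MAX_NAME];
    unsigned char value[MAX_VALUE_BYTES];
    size_t size;  /* size in bytes of the stored value */
} NamedVar;

typedef struct {
    NamedVar vars[MAX_VARS];
    size_t count;
} Registry;

/* Linear search by name; returns NULL if the variable does not exist. */
static NamedVar *find(Registry *r, const char *name) {
    for (size_t i = 0; i < r->count; i++) {
        if (strcmp(r->vars[i].name, name) == 0) {
            return &r->vars[i];
        }
    }
    return NULL;
}

/* Create the variable if needed and overwrite its value (last write wins). */
static bool var_write(Registry *r, const char *name, const void *value, size_t size) {
    if (size > MAX_VALUE_BYTES || strlen(name) >= MAX_NAME) {
        return false;
    }
    NamedVar *v = find(r, name);
    if (v == NULL) {
        if (r->count == MAX_VARS) {
            return false;
        }
        v = &r->vars[r->count++];
        strcpy(v->name, name);
    }
    memcpy(v->value, value, size);
    v->size = size;
    return true;
}

/* Copy the current value into out; fails if the name is unknown or the size differs. */
static bool var_read(Registry *r, const char *name, void *out, size_t size) {
    NamedVar *v = find(r, name);
    if (v == NULL || v->size != size) {
        return false;
    }
    memcpy(out, v->value, size);
    return true;
}
```

Storing raw bytes plus a size is a crude stand-in for "any data type"; the size check on read catches the most obvious type mismatches.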

-

So I have a serious question. Does LabVIEW have a way (built in or in a library) of calculating the next larger or next smaller floating-point value? The C standard library header "math.h" has two functions for this: nextafter and nexttoward. I have put together a C function that seems to do the trick nicely for single-precision floats (well, only for stepping up):

```c
#include <math.h>
#include <stdint.h>

/* v is the IEEE 754 bit pattern of a single-precision float */
uint32_t nextUpFloat(uint32_t v){
    uint32_t sign;
    if (v==0x80000000||v==0){ //For zero and neg zero
        return 1; //smallest positive subnormal
    }
    if ((v>=0x7F800000 && v<0x80000000)||(v>0xff800000)){ //Check for +Inf and NaN
        return v; //no higher value in these cases (-Inf falls through to -FLT_MAX)
    }
    sign = v&0x80000000; //Get sign bit
    v&=0x7FFFFFFF; //strip sign bit
    if(sign==0){
        v++; //positive: increase magnitude
    }else{
        v--; //negative: decrease magnitude (toward zero)
    }
    v=v|sign; //re-merge sign
    return v;
}
```

I could put this in LabVIEW, but these things are tricky and there are some unexpected cases, so it's always better to use a reference implementation.
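Since the function above only steps up, the step-down counterpart can be derived from the identity nextDown(x) = -nextUp(-x), which on the bit pattern means flipping the sign bit before and after. A minimal sketch, assuming the corrected nextUpFloat from the post (repeated so the block compiles on its own) and using memcpy to convert between a float and its bits; `nextDownFloat`, `float_bits` and `bits_float` are hypothetical names. The standard-library reference to compare against would be `nextafterf(x, INFINITY)` and `nextafterf(x, -INFINITY)` from math.h.

```c
#include <stdint.h>
#include <string.h>

/* Step-up from the post (bug fixed): v is the IEEE 754 bit pattern of a float. */
static uint32_t nextUpFloat(uint32_t v) {
    if (v == 0x80000000u || v == 0) {
        return 1;  /* +0 and -0 step up to the smallest positive subnormal */
    }
    if ((v >= 0x7F800000u && v < 0x80000000u) || v > 0xFF800000u) {
        return v;  /* +Inf and NaNs have no next-up value */
    }
    uint32_t sign = v & 0x80000000u;
    v &= 0x7FFFFFFFu;          /* magnitude only */
    v = sign ? v - 1 : v + 1;  /* positive: grow magnitude; negative: shrink it */
    return v | sign;
}

/* nextDown(x) == -nextUp(-x): flip the sign bit, step up, flip it back. */
static uint32_t nextDownFloat(uint32_t v) {
    return nextUpFloat(v ^ 0x80000000u) ^ 0x80000000u;
}

/* Convert between a float and its bit pattern without aliasing issues. */
static uint32_t float_bits(float f) {
    uint32_t u;
    memcpy(&u, &f, sizeof u);
    return u;
}

static float bits_float(uint32_t u) {
    float f;
    memcpy(&f, &u, sizeof f);
    return f;
}
```

Deriving nextDown from nextUp keeps all the special cases (signed zeros, infinities, NaNs) in one function, so they only have to be gotten right once.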

-

So, I wanted to get an opinion here. I'm pulling new values in from a device and having UI elements change color depending on their values. This is of course done through property nodes. My question is, which is better: A. Updating a UI element only when its value changes (checking for value changes), or B. Updating all UI elements at once inside a Defer Panel Updates section (without checking for value changes)? That's all.
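Option A is usually implemented by caching the last value written to each indicator and skipping the property-node write when nothing changed. Because the color here is derived from the value, it can pay to compare the derived color rather than the raw value, so a noisy reading that stays within one band triggers no write at all. A minimal C sketch of that idea, with hypothetical thresholds and colors, and a counter standing in for the property-node write:

```c
#include <stdint.h>

/* Hypothetical color bands (0xRRGGBB) for a reading. */
#define COLOR_OK    0x00FF00u
#define COLOR_WARN  0xFFFF00u
#define COLOR_ALARM 0xFF0000u

typedef struct {
    uint32_t last_color;  /* color most recently written to the indicator */
    int has_color;        /* 0 until the first write */
    int writes;           /* counts writes, standing in for the property node */
} Indicator;

/* Map a reading to a color band (hypothetical thresholds). */
static uint32_t color_for(double value) {
    if (value >= 90.0) return COLOR_ALARM;
    if (value >= 70.0) return COLOR_WARN;
    return COLOR_OK;
}

/* Write the color only if it differs from what the indicator already shows. */
static void update_indicator(Indicator *ind, double value) {
    uint32_t color = color_for(value);
    if (ind->has_color && color == ind->last_color) {
        return;  /* no visible change: skip the UI write */
    }
    ind->last_color = color;
    ind->has_color = 1;
    ind->writes++;  /* a real UI would set the color property here */
}
```

For the readings 10, 12, 15, 75, 76, 95, 20, only four writes happen. The two options are not exclusive: change detection reduces how many writes occur, and deferring panel updates can still batch the remaining writes into one redraw.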

-

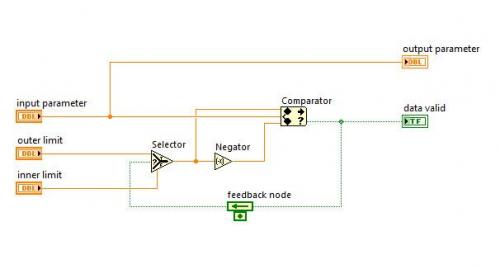

Version 2.0

173 downloads

The Threshold Hysteresis VI allows an input (for example, from a sensor) to drift outside an inner limit without generating an invalid flag, but if it drifts outside an outer limit, it becomes invalid. Conversely, when the input crosses back inside the outer limit, it remains invalid until the value falls inside the inner limit. For simplicity, each of the inner and outer limits is given as a single parameter, which is negated for the lower limit, since tolerance limits are generally equidistant from the nominal.
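The behavior described above is a two-threshold state machine. A minimal C sketch, assuming the input is expressed as a deviation from nominal (so the limits are symmetric, ±inner and ±outer, as in the VI) and that inner < outer; the struct and function names are hypothetical, and the original VI's exact boundary handling (strict vs. inclusive comparisons) and initial state are not known:

```c
#include <stdbool.h>

typedef struct {
    double inner;  /* |deviation| <= inner re-validates the input */
    double outer;  /* |deviation| > outer invalidates the input */
    bool valid;    /* current state */
} Hysteresis;

/* Feed one sample (deviation from nominal); returns the updated valid flag. */
static bool hysteresis_update(Hysteresis *h, double deviation) {
    double mag = deviation < 0 ? -deviation : deviation;
    if (h->valid) {
        /* Drifting past the inner limit is tolerated; only the outer limit trips. */
        if (mag > h->outer) {
            h->valid = false;
        }
    } else {
        /* Once invalid, coming back inside the outer limit is not enough. */
        if (mag <= h->inner) {
            h->valid = true;
        }
    }
    return h->valid;
}
```

With inner = 1 and outer = 2, the sequence 1.5, -2.5, 1.5, -0.5 gives valid, invalid, still invalid, valid again: the band between the two limits is where the state depends on history.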

Tagged with: threshold, hysteresis (and 2 more)

-

Hello, I'm using LabVIEW and I'm trying to get the HSV color value of each single pixel of my picture, starting at coordinates x0 y0, then x1 y0, x2 y0, ... along the rows, and then continuing through the columns. From that, I want the frequency of each color value (0-255) over the whole picture, in a table with one column each for H (0-255), S (0-255) and V (0-255). Since I need the frequency, I only need to add 1 every time a value appears, so that it is counted at the end (I need it later for fuzzy logic, to show that my fruit has a good color and is ready to be harvested). Could you please help me with that? If you have any more questions about it, feel free to ask.
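Counting how often each value occurs is a histogram: one array of 256 counters per channel, incremented once per pixel. A minimal C sketch, assuming the image has already been converted to HSV and is stored as interleaved 8-bit H, S, V bytes in row-major order; the struct and function names are hypothetical. In LabVIEW the same idea maps to three 256-element arrays updated inside nested loops over rows and columns.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    uint32_t h[256];  /* h[k] = number of pixels whose H byte equals k */
    uint32_t s[256];
    uint32_t v[256];
} HsvHistogram;

/* pixels holds width*height*3 bytes: H, S, V for each pixel, row by row. */
static void hsv_histogram(const uint8_t *pixels, size_t width, size_t height,
                          HsvHistogram *hist) {
    memset(hist, 0, sizeof *hist);
    for (size_t y = 0; y < height; y++) {     /* rows */
        for (size_t x = 0; x < width; x++) {  /* columns within the row */
            const uint8_t *p = pixels + 3 * (y * width + x);
            hist->h[p[0]]++;
            hist->s[p[1]]++;
            hist->v[p[2]]++;
        }
    }
}
```

Because each byte value is used directly as an array index, there is no search or comparison per pixel; the three count arrays are exactly the three table columns described in the post.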

1 reply | Tagged with: hsv, color value (and 2 more)

-

Hi everyone, I had to insert Google (or equivalent) maps into a project and, for some time, I used Mohammad Garousi's version (which I found to be the most complete version freely available on the web). It uses the GMap.NET DLLs. After some time, I noticed a recurring, random issue at the DLL level, after which a red cross appears in the .NET container with no way to correct or avoid the error. So I decided to create a new map container that might solve the issue, using a picture control instead of the .NET container. I was also able to add new functions, like creating a route, customizing its behavior (such as changing the route color to a spectrum), or adding another image that moves with the map position or zoom changes, and so on. The result is attached below. This is a fully working example that can be studied or modified at will, but it is still a demo, not a library or final release. The user can move the map by dragging it inside the picture, zoom with the slider, and so on. Coordinates and cursor positions are updated live whenever the cursor is in the picture area. You can draw a route by enabling the draw button; remember to turn it off when you are done drawing. You can add a third-party image (the default one is a drone image) at the picture's 0,0 position. You can easily customize the default position in the block diagram code. Whenever you set the image button to on, the image is placed at the 0,0 position and its latitude-longitude coordinates are recalculated. You can dynamically change the map representation, route color, or zoom. I hope this example is useful for those who need a more stable GIS representation. If you discover any issues, please contact me. Bye, Flavio LabGIS.rar

-

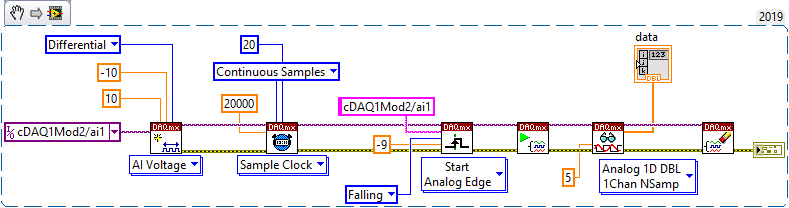

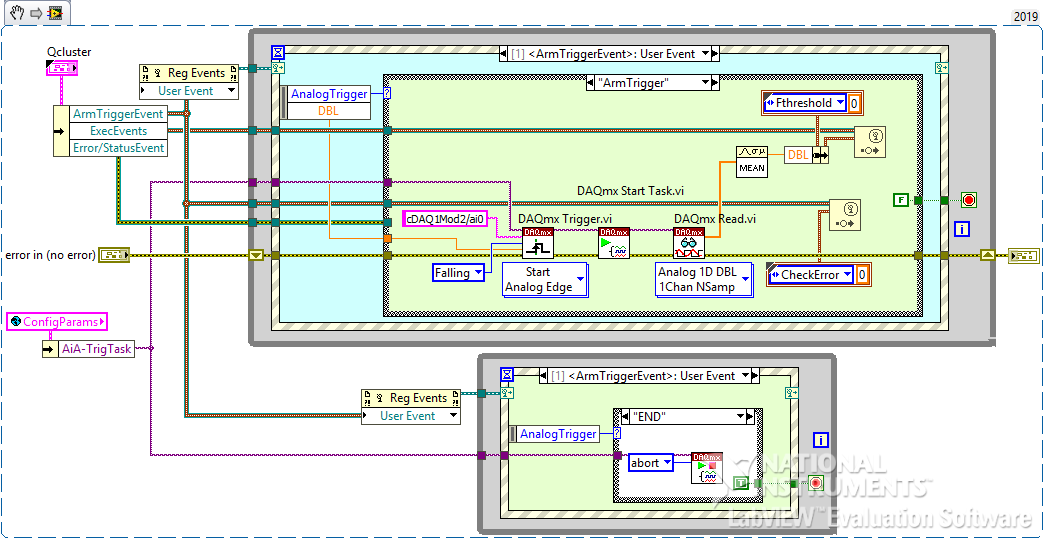

I have a requirement that I thought would be SIMPLE, but I can't get it to work. I have a 9205 card in a little 9174 cDAQ USB chassis. My *intended* behavior is to wait (block) at the DAQmx Trigger (Start Analog Edge) on, say, channel ai1, until I get a falling edge through, say, -0.050 V. So I have a little VI (containing two parallel loops) that I want to sit and wait for the trigger to be satisfied. I'm doing "routine" voltage measurements in another AI loop on a different channel. I want this VI to run separately from my "routine" voltage measurements because I want the app to respond "instantly" to an input voltage exceeding a limit, to prevent expensive damage to load cells. I was afraid that if I used either Finite or Continuous sampling to "catch" an excessive voltage, I might miss it while doing something else. Yes, yes, a cRIO real-time setup would be better for this, but this is a very cost-sensitive task... I just want to "arm and forget" this process until it gets triggered, whereupon it fires an event at me. SO... I'm also reading the same voltage on channel ai0 for regular-ole voltage measurements, and just jumpering the two channels together. I did this because I read somewhere that you can't use the same channel in multiple DAQ tasks, and I *thought* I would need to set up the tasks differently (but now that I think about it, the setups can be the same...). I've set up the DAQmx task the same way as the shipping examples and lots of posts I've seen. I'm supplying a nice clean DC voltage to the 9205 card using a high-quality HP variable power supply. Using NI MAX, I've verified that my 9174 chassis and 9205 are working properly. THE PROBLEM: when I run it, the VI just sails right through to the end, with no error and an empty data array out. No matter WHAT crazy voltage I give the "DAQmx Trigger.vi" (set up for Start Analog Edge), it never waits for the trigger to be satisfied; it just breezes on through as if it weren't there.

If I set the Sample Clock to "Finite Samples", the DAQmx Read fails with a timeout, which makes sense, since the trigger wasn't satisfied. What could I possibly be doing wrong with such a simple task??? So my fundamental misunderstanding still vexes me: does the DAQmx Trigger VI not block and wait for the trigger condition to be satisfied, as the documentation states ("Configures the task to start acquiring or generating samples when an analog signal crosses the level you specify")? I stripped my requirement down to the bare essentials: see the first snippet; the second is my actual VI. Any ideas, anybody?
