Leaderboard

Popular Content

Showing content with the highest reputation since 02/28/2025 in all areas

-

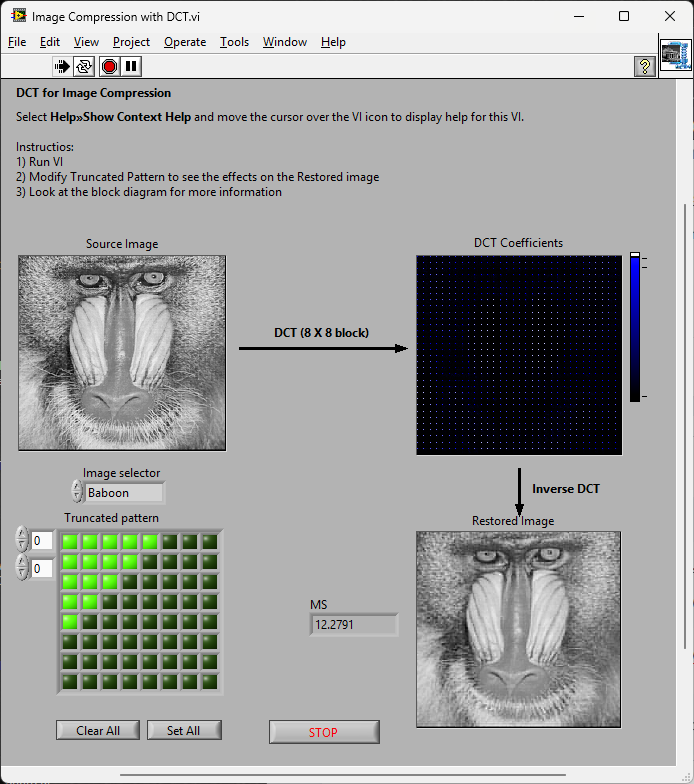

So a couple of years ago I was reading about the ZLIB documentation on compression and how it works. It was an interesting blog post going into how it works, and what compression algorithms like zip really do. This is using the LZ77 and Huffman Tables. It was very education and I thought it might be fun to try to write some of it in G. The deflate function in ZLIB is very well understood from an external code call and so the only real ever so slight place that it made sense in my head was to use it on LabVIEW RT. The wonderful OpenG Zip package has support for Linux RT in version 4.2.0b1 as posted here. For now this is the version I will be sticking with because of the RT support. Still I went on my little journey trying to make my own in pure LabVIEW to see what I could do. My first attempt failed immensely and I did not have the knowledge, to understand what was wrong, or how to debug it. As a test of AI progression I decided to dig up this old code and start asking AI about what I could do to improve my code, and to finally have it working properly. Well over the holiday break Google Gemini delivered. It was very helpful for the first 90% or so. It was great having a dialog with back and forth asking about edge cases, and how things are handled. It gave examples and knew what the next steps were. Admittedly it is a somewhat academic problem, and so maybe that's why the AI did so well. And I did still reference some of the other content online. The last 10% were a bit of a pain. The AI hallucinated several times giving wrong information, or analyzed my byte streams incorrectly. But this did help me understand it even more since I had to debug it. So attached is my first go at it in 2022 Q3. It requires some packages from VIPM.IO. Image Manipulation, for making some debug tree drawings which is actually disabled at the moment. And the new version of my Array package 3.1.3.23. So how is performance? Well I only have the deflate function, and it only is on the dynamic table, which only gets called if there is some amount of data around 1K and larger. I tested it with random stuff with lots of repetition and my 700k string took about 100ms to process while the OpenG method took about 2ms. Compression was similar but OpenG was about 5% smaller too. It was a lot of fun, I learned a lot, and will probably apply things I learned, but realistically I will stick with the OpenG for real work. If there are improvements to make, the largest time sink is in detecting the patterns. It is a 32k sliding window and I'm unsure of what techniques can be used to make it faster. ZLIB G Compression.zip5 points

-

Phew that is a pretty strong opinion! Although I personally am not a fan of the overall style of DQMH none of my problems are with the scripting/wizards or placeholder text. I think any framework that tries to do "a lot" will be complicated... your own personal framework (which you likely find trivial to use) is likely to be a bit weird to others. DQMH is extremely popular for a reason... To paraphrase the words of a wiser person than I, "please don't yuck someone elses yum"3 points

-

Absolutely echo what Shaun says. Nobody banned them. But most who tried to use them have after some more or less short time run from them, with many hairs ripped out of their head, a few nervous tics from to much caffeine consume and swearing to never try them again. The idea is not really bad and if you are willing to suffer through it you can make pretty impressive things with them, but the execution of that idea is anything but ideal and feels in many places like a half thought out idea that was eventually abandoned when it was kind of working but before it was a really easily usable feature.2 points

-

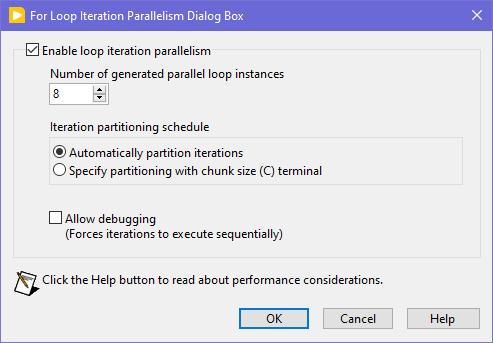

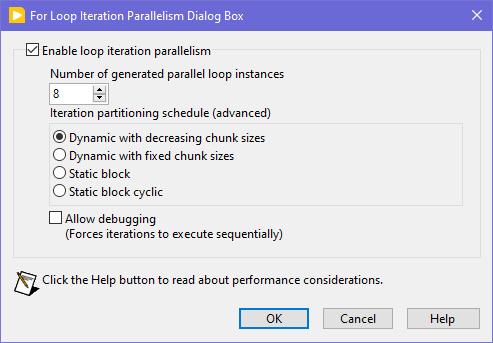

Seems like this one has "escaped everyone's grasp" too. ParallelLoop.ShowAllSchedules=True Because was only checked from the password-protected diagram of ParallelForLoopDialog.vi (LabVIEW 20xx\resource\dialog). Present since LabVIEW 2010. When activated, allows to apply more advanced iteration partitioning schedule. In other words, instead of this you will get this Сould this be useful? I can't say. Maybe in some very specific use-cases. In my quick tests I didn't manage to get increase in any productivity. It's easy to mess up with those options and make things worse, than by default. Also can be changed by this scripting counterpart.2 points

-

Look at this new download on VIPM https://www.vipm.io/package/bjm_lib_request_power/2 points

-

You want an ability to override the Equality or Comparison operators? I'm unsure, whether it really existed in OpenG packages, but now you have those neat malleable VIs, that let you do that: Search Unsorted 1D Array , Sort 1D Array , Search Sorted 1D Array. They have an additional input to specify your own equals or less function in a form of a custom comparison class or a VI refnum. There's an article to help: Creating a Custom Sorting Function in LabVIEW2 points

-

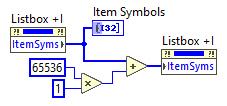

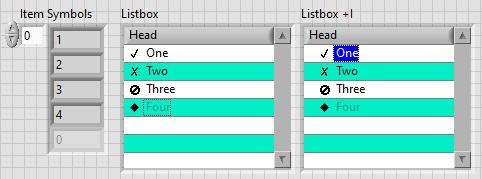

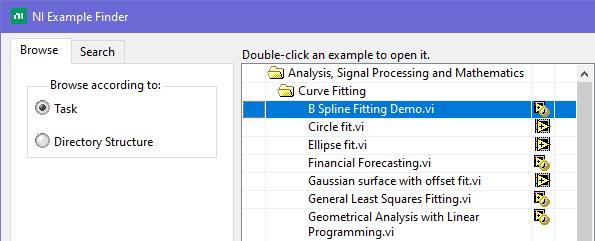

This is exactly what was said in that ancient thread: Tree control in labview. So if you add 65536*N to the Item Symbols property of the Listbox and have the "Enable Indentation" option activated, you shift the symbol/glyph and the text N levels to the right. Could be useful for simple 'parent-child' relationships, if you don't want to use a Tree. And still it's used in Find Examples / NI Example Finder window:2 points

-

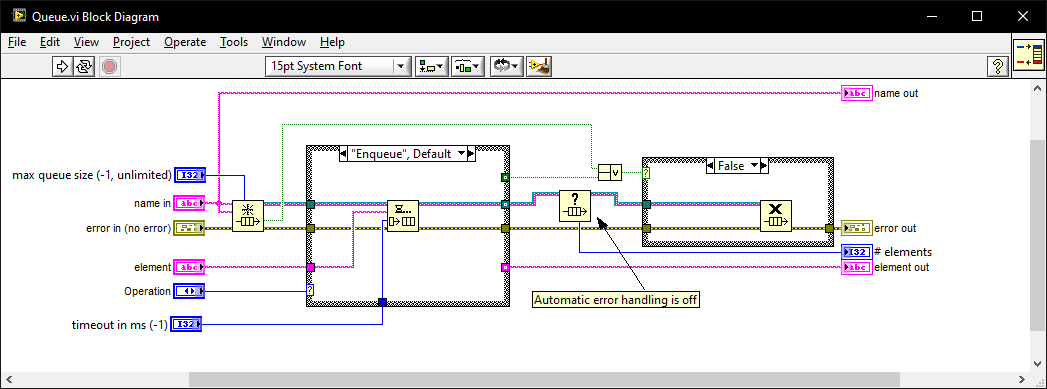

I once went for an interview where they gave me a coding test and asked me to modify it. It was a very long time ago so I don't remember the exact modification they wanted (nothing to do with memory leaks) but I do remember the obtain queue and read queue inside a while loop with the release queue outside. I asked if they wanted me to also fix the memory leak as well as the modifications and they were a little puzzled until I explained what you have just said. I must have seen (and fixed) this while-loop bug-pattern a thousand times since then in various code bases. I also created this VI which I generally use instead of the primitives as it intialises on first call, can be called from anywhere, and prevents most foot-shooting by rolling them all into a single VI and ensuring all references but 1 are closed after use. Queue.vi2 points

-

2 points

-

In the past I have used the IMAQ drivers for getting the image, which on its own does not require any additional runtime license. It is one of those lesser known secrets that acquiring and saving the image is free, but any of the useful tools have a development, and deployment license associated with it. I've also had mild success with leveraging VLC. Here is the library I used in the past, and here is another one I haven't used but looks promising. With these you can have a live stream of a camera as long as VLC can talk to it, and then pretty easily save snapshots. EDIT: The NI software for getting images through IMAQ for free is called "NI Vision Common Resources". This LAVA thread is where I first learned about it.2 points

-

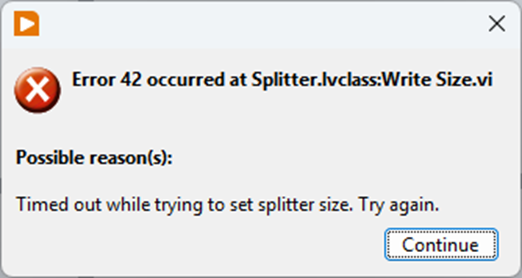

Just to share how I got around this: By deleting 1 front panel item at a time I found that one single control was causing PaneRelief to crash; an XY graph. Setting it temporarily to not scale and replacing it with a standard XY graph (the one I had had some colours set to transparent etc) was enough to avoid having PaneRelief crash LabVIEW, but it would now just present a timeout error: I found a way arund this too though: the VI in question was member of a DQMH lvlib that probably added a lot of complexity for PaneRelief. With a copy saved as a non-member it worked: I could replace the graph, edit the splitters with PaneRelief without the timeout error (even setting the size to 0), then copy back the original graph replacing the temporary one, and finally move the copy back into the lvlib and swap it with the original. Voila! What a Relief... 😉 I probably have to repeat this whole ordeal if I ever need to readjust the splitters in that VI with PaneRelief though 😮2 points

-

I confirm that this license is nearly identical to the standard EULA we use for our commercial products. Some wording is not applicable to a distributed palette of VIs like this. Our intention was to share a few reusable tools, used internally, with the community. Ideally, we should have released them under a standard open-source license such as MIT or a similar option. These VIs have been released “as-is,” without support or any guarantee that they will function for your specific use case. You may need to troubleshoot or fix any issues on your own. Feel free to use them in any context. I’ll look into whether it's possible to update the packages on the tool network to replace the current license with a more standard open-source one.2 points

-

I put a temporary ban on inserting external links in posts (except from a safe list). We'll see what affect it has.2 points

-

2 points

-

Your reporting of spam is helpful. And just like you are doing one report per user is enough since I ban the user and all their posts are deleted. If spam gets too frequent I notify Michael and he tweaks dials behind the scene to try to help. This might be by looking at and temporarily banning new accounts from IP blocks, countries, or banning key words in posts. He also will upgrade the forum's platform tools occasionally and it gets better at detecting and rejecting spam.2 points

-

1 point

-

Reentrant execution may be a safe option. Have to check the function. The zlib library is generally written in a way that should be multithreading safe. Of course that does NOT apply to accessing for instance the same ZIP or UNZIP stream with two different function calls at the same time. The underlaying streams (mapping to the according refnums in the VI library) are not protected with mutexes or anything. That's an extra overhead that costs time to do even when it would be not necessary. But for the Inflate and Deflate functions it would be almost certainly safe to do. I'm not a fan of making libraries all over reentrant since in older versions they were not debuggable at all and there are still limitations even now. Also reentrant execution is NOT a panacea that solves everything. It can speed up certain operations if used properly but it comes with significant overhead for memory and extra management work so in many cases it improves nothing but can have even negative effects. Because of that I never enable reentrant execution in VIs by default, only after I'm positively convinced that it improves things. For the other ZLIB functions operating on refnums I will for sure not enable it. It should work fine if you make sure that a refnum is never accessed from two different places at the same time but that is active user restraint that they must do. Simply leaving the functions non-reentrant is the only safe option without having to write a 50 page document explaining what you should never do, and which 99% of the users never will read anyways. 😁 And yes LabVIEW 8.6 has no Separated Compiled code. And 2009 neither.1 point

-

A Timestamp is a 128 bit fixed point number. It consists of a 64-bit signed integer representing the seconds since January 1, 1904 GMT and a 64-bit unsigned integer representing the fractional seconds. As such it has a range of something like +- 3*10^11 years relative to 1904. That's about +-300 billion years, about 20 times the lifetime of our universe and long after our universe will have either died or collapsed. And the resolution is about 1/2*10^19 seconds, that's a fraction of an attosecond. However LabVIEW only uses the most significant 32-bit of the fractional part so it is "only" able to have a theoretical resolution of some 1/2*10^10 seconds or 200 picoseconds. Practically the Windows clock has a theoretical resolution of 100ns. That doesn't mean that you can get incremental values that increase with 100ns however. It's how the timebase is calculated but there can be bigger increments than 100ns between two subsequent readings (and no increment). A double floating point number has an exponent of 11 bits and 52 fractional bits. This means it can represent about 2^53 seconds or some 285 million years before its resolution gets higher than one second. Scale down accordingly to 285 000 years for 1 ms resolution and still 285 years for 1us resolution.1 point

-

1 point

-

You could also check https://github.com/ISISSynchGroup/mjpeg-reader which provides a .Net solution (not tried). So, who volunteers for something working on linux?1 point

-

1 point

-

From what I can remember, for LV 5.0.x and older RTE (i.e., a loader plus small subset of resources) was included into the EXE automatically during the build process. For LV 5.1.x there was a choice: to include RTE into the build or to use an external RTE. And since LV 6.0 only an external RTE was supposed. I could say more, such a trick is still possible for all modern versions on all three platforms (Win, Mac, Linux). The latest version I tested it on, was LV 2018, but I'm pretty sure, the technique hasn't changed much. I can't remember, from which version NI started to use Visual Studio 2015, but since then each EXE requires The Universal CRT, that is contained in Microsoft Visual C++ 2015 Redistributable. One could install such a distro on a clean machine or copy all these files from the machine, where such a CRT is already installed. Now besides of those the application will also require this minimal subset of folders/files (true for LV 2018 64-bit): On Linux it goes much easier (true for LV 2014 64-bit): For LV 2018 64-bit with a "dark" RTE it also wants And for Mac OS you can embed RTE into the application with this script: Standalone LabVIEW-built Mac Application with Post-Build Action. Of course (and I'm sure everyone understands that), the technique described above, is applicable to very simple 'a la calculator' apps and not very to not at all for more or less complex projects. The more functions are called, the more dependencies you get. If something from MKL is used, you need lvanlys.dll and LV##0000_BLASLAPACK.dll, if VISA is used, you need visa32.dll, NiViAsrl.dll and maybe others, and so on and so forth.1 point

-

The thing I loved about the original LabVIEW was that it was not namespaced or partitioned. You could run an executable and share variables without having to use things like memory maps. I used to to have a toolbox of executables (DVM, Power Supplies, oscilloscopes, logging etc. ) and each test system was just launching the appropriate executable[s] at the appropriate times. It was like OOP composition for an entire test system but with executable modules. Additionally, crashes were unheard of. In the 1990's I think I had 1 insane object in 18 months and didn't know what a GPF fault was until I started looking at other languages. We could run out of memory if we weren't careful though (remember the Bulldozer?). Progress!1 point

-

I have always used this library to prevent the screensaver and windows lock from occurring. Our IT locks down the computer so the screensaver, lock screen, cannot be changed. This library bascially tells Windows it's in Presentation mode, e.g., slideshow, watching a movie, etc, such that the screen will not got to screensaver or lock screen.1 point

-

1 point

-

You might have more success posting this on the Discord. Most of the conversations happen there these days.1 point

-

Thanks, I'll be honest, I'm allergic to Discord. Vehemently so. To the point where I refuse to use it. Just seems like a lot of unfiltered noise to this old man. I'm gonna play with NodeRed and see if it's the tool of choice. And oh, back in the day I was a National Instruments Alliance Member. Dunno if that's still a thing or not. Cheers,1 point

-

I posted a demo set of VIs here which can pop up a window, centered on whatever monitor the mouse is on. There's also settings to have the window center on the mouse wherever it is, but saying on the same monitor. And yes this uses the All Screens, Working Area properties.1 point

-

There is an Application property called Display->All Monitors. It will give you the pixel ranges of the monitors in your system. What I've done is to use the calling VI's position to figure out which monitor it was on and then place the new VI window as needed. You could use a win32 dll call to get the mouse position as well if that better meets your requirements.1 point

-

Yup. There is: MMAP (1.0.1).1 point

-

Discussion forums like LAVA and ni.com are challenged these days by LLMs that can answer in a well structured manner very quickly and handle follow-up questions on the spot. Forums have their stenghts, but when it comes to basic questions that LLMs can find a lot about, spread across multiple forums, the LLMs win. It is a bit of a catch 22 though, as the LLMs need the forums alive to stay up to date 😮 ------------------------------ Here is Grok answering you question of what a DVR is: DVRs as References: In LabVIEW, DVRs (Data Value References) are a mechanism to access data by reference rather than by value. This means that instead of creating copies of the data (as is typical in LabVIEW's dataflow paradigm), a DVR provides a pointer-like reference to a single memory location where the data resides. This avoids unnecessary data duplication, which can be critical for large datasets or performance-sensitive applications. Avoiding Copies: By using DVRs, you can manipulate the same data in memory without creating copies, which is especially useful for large arrays, clusters, or other complex data structures. This reduces memory usage and improves performance. Parallel Operations and Race Conditions: When multiple parallel operations (e.g., parallel loops or VIs) access the same DVR, there is a potential for race conditions if the access is not properly synchronized. LabVIEW provides the In-Place Element Structure to safely access and modify DVR data. This structure ensures that only one operation can read or write to the DVR at a time, preventing race conditions. Without this, simultaneous read/write operations could lead to unpredictable results or data corruption. Key Points to Add: Thread Safety: DVRs are not inherently thread-safe. You must use the In-Place Element Structure (or other synchronization mechanisms like semaphores) to avoid race conditions when multiple parallel tasks access the same DVR. Use Cases: DVRs are commonly used in scenarios where you need to share data between parallel loops, modules, or processes without copying, such as in real-time systems or when managing shared resources. Limitations: DVRs are only valid within the application instance where they are created, and the data they reference is freed when the reference is deleted or the application stops. --------------------- Before DVRs we typically had to resort to cloning of functional globals (VIs), but DVRs make this a little more dynamic and slick. You can have a single malleable VI operate on multiple types of DVRs too e.g., that opens up a lot of nice reuse. If you have an object that requires multiple circular buffers e.g. you can include circular buffer objects in the private data of that object, with the circular buffer objects containing a DVR to an array acting as that buffer... -------------------- Here is ChatGPT comparing functional globals with DVRs: Functional Globals (FGs) and Data Value References (DVRs) are both techniques used in programming (particularly in LabVIEW) to manage shared data, but they offer different approaches and have different strengths and weaknesses. FGs encapsulate data within a VI that provides access methods, while DVRs provide a reference to a shared memory location. Functional Globals (FGs): Encapsulation: FGs encapsulate data within a VI, often a subVI, that acts as an interface for accessing and modifying the data. This encapsulation can help prevent unintended modifications and promote better code organization. Control over Access: The FG's VI provides explicit methods (e.g., "Get" and "Set" operations) for interacting with the data, allowing for controlled access and potential validation or error handling. Potential for Race Conditions: While FGs can help avoid some race conditions associated with traditional global variables, they can still be susceptible if not implemented carefully, particularly if the access methods themselves are not synchronized. Performance: FGs can introduce some overhead due to the VI calls, but this can be mitigated by using techniques like inlining and careful design. Example: An FG could be used to manage a configuration setting, with a "Get Configuration" and "Set Configuration" VI providing access to the settings. Data Value References (DVRs): Shared Memory Reference: DVRs are references to a memory location, allowing multiple VIs to access and modify the same data. This is a more direct way of sharing data than FGs. Flexibility: DVRs can be used with various data types, including complex data structures, and can be passed as parameters to subVIs. Potential for Race Conditions: DVRs, like traditional globals, can be prone to race conditions if not handled carefully. Proper synchronization mechanisms (e.g., queues, semaphores, or action engines) are often needed to prevent data corruption. Performance: DVRs can be very efficient, especially when used with optimized data access patterns. Example: A DVR could be used to share a large array between different parts of an application, with one VI writing to the array and another reading from it. Key Differences and Considerations: Control vs. Flexibility: FGs offer more control over data access through their defined interface, while DVRs offer more flexibility in terms of the data types and structures that can be shared. Race Condition Mitigation: While both can be susceptible, FGs can be designed with built-in synchronization mechanisms (like action engines), while DVRs require explicit synchronization mechanisms to prevent race conditions. Performance Trade-offs: DVRs can offer better performance in many cases, especially when dealing with large data sets, but this can be offset by the complexity of managing synchronization. Code Readability and Maintainability: FGs can make code more readable and maintainable by encapsulating data access logic, but poorly designed FGs can also lead to confusion. In summary: Use Functional Globals when: You need controlled access to data, want to encapsulate data management logic, or need to ensure some level of synchronization. Use Data Value References when: You need to share data efficiently between multiple parts of your application, need flexibility in the data types you are sharing, or when performance is critical and synchronization can be handled externally. It's worth noting that in many cases, a combination of both techniques might be used to leverage the strengths of each approach. For example, a DVR might be used to share data, while a functional global (or an action engine) is used to manage access to that data in a controlled and synchronized manner.1 point

-

Some people might be tempted to use Obtain Queue and Obtain Notifier with a name and assume that since the queue is named each Obtain function returns the same refnum. That is however not true. Each Obtain returns a unique refnum that references a memory structure of a few 10s of bytes that references the actual Queue or Notifier. So the underlaying Queue or Notifier is indeed only existing once per name, BUT each refnum still consumes some memory. And to make matters more tricky, there is only a limited amount of refnum IDs of any sort that can be created. This number lies somewhere between 2^20 and 2^24. Basically for EVERY Obtain you also have to call a Release. Otherwise you leak memory and unique refnum IDs.1 point

-

4. WinAPI version using ChooseColor function. NativeColors.rar Far from ideal, don't kick too hard. 🙂 Determine Clicked Array Element Index is from here.1 point

-

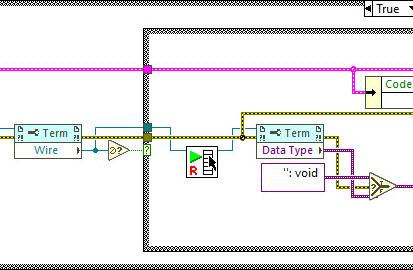

I kind of liked this idea and wished VIM's could allow for such a backpropagation. Even had a thought of making an idea on the dark forums. But then I played a while with the Variant To Data node. It doesn't play well. It can't determine a sink, if a polymorphic VI is connected or even when a LV native (yellow) node is connected. Borders of structures are another issue, obviously. So, it'd require making two ideas at least: to implement VIM backpropagation and to enhance the Variant To Data node. (As a hack one could eliminate the Variant to Data in their code with coerceFromVariant=TRUE token, but then the diagram starts to look odd and no error handling is performed). If someone still wants the code, shown in the very first post, it's here: https://code.google.com/archive/p/party-licht-steuerung/source/default/source?page=3 (\trunk\PLS-Code\PLS Main.vi). And these are the papers to progress through the lessons: LabVIEW Intermediate I Successful Development Practices Course Manual. Nothing interesting there for an experienced LV'er though. XNodes demonstrated here work a way better, and could be a good alternative (if you're OK with unsupported features, of course). As I tried to adapt them for my own purposes, I decided to improve the sink search technique. It surprised me a bit, that there's still no complete code to walk through all the nested structures to determine a source/sink by its wire. Maybe I didn't search well but all I found was this popup plugin: Find Wire Source.llb. It stops on Case structures though. I have reversed its logic to search for a sink instead of a source and tried to apply recursion, when it encounters a Case structure. Well, it's still not ideal, but now it works in most my cases. There are some cases, when it cannot find a sink, e.g. wire branches with void terms: Too many scenarios to process them all. Nevertheless, this little VI might be useful for someone. You may use it as a popup plugin, of course, or may pull out that Execute Find Wire Destination (R).vi and use it in your XNodes. As an example: Find Wire Destination.llb Already tried such nodes in a work project. I must admit that not all the time back-propagation is suitable, so about 50/50. But when it's used, it works.1 point

-

Top Level here almost certainly doesn't mean the diagram of the template VI. Instead LabVIEW distinguishes between a Top Level diagram which is basically the entire diagram window of a VI and sub diagrams such as each individual frame inside a case structure but also the diagram space inside a loop structure for instance. The tricky part may be that the diagram itself may indeed only exist once and remains the same even for clone VIs. The actual relevant part is the data space which is separate for each active clone (when you have shared clones) and unique for each clone (when you have pre-allocated clones).1 point

-

I actually had a similar experience when first moving everything to the new OpenG structure. It broke heaps of stuff (even inside its own OpenG stuff), so I rolled back the change. Some time later I tried again, and think I did have to deal with a bit of pain initially with relinking or maybe some missing stuff, but since then things have been stable.1 point

-

Well regardless of the reason, what I was trying to say is that references opened in a VI, get closed when the VI that opens them goes idle.1 point

-

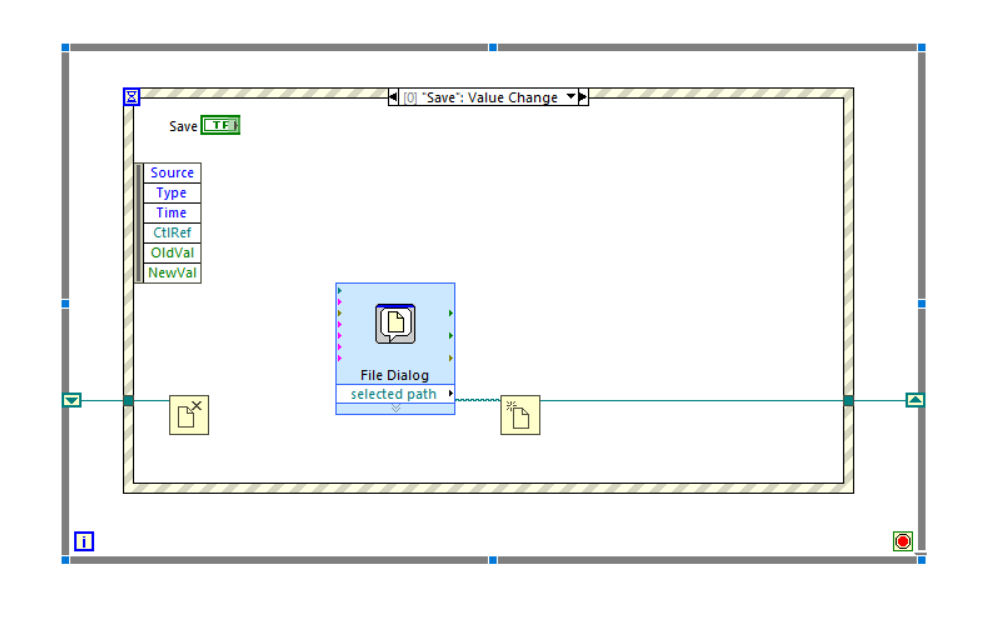

@Natiq this (non-functional example) should be enough to get you started. The weird arrow thing on the boundaries of the while loop is a shift register. The event structure can also be configured to have a timeout case where you can then perform other stuff, like reading your image and writing it to the reference on the the shift register. There is heaps of information out there (YouTube for example), a bit of searching will lead to some more details.1 point

-

There is a "best practices" document (this too) but I suspect you are looking for a less abstract set of guidelines.1 point

-

We use the MPSSE.dll LABview driver from Benoit. We are trying the i2c read 1 byte and multi bytes. We expect ack for all bytes except the last byte with nak. During read, we understand that the I2C master drives the ack/nak. However, ack and nak happens randomly. Any body have any suggestions Thank you Dan1 point

-

Regarding Levenshtein: Wladimir Levenshtein developed 1995 an algorithm for this. It is called the Levenshtein Distance. Some years ago I developed a VI to calculate the Levenshtein Distance. Here it is (LabVIEW 2016). Can you post your VIs in LV2020 or 2019, please. Levenshtein Distance.vi1 point

-

Here is a quick and dirty edit. It allows for column separators to be moved, but I noticed that on resize it will set the column widths. So this means if you manually move the columns, and then resize the control it may change the columns in an unexpected way. But at that point you can manually move the separators again. I only have 2017 and 2018 so this is for 2017 and newer now. Variant_Probe-2.4.3-0.ogp1 point

-

Well that's okay I felt like doing some improvements on the image manipulation code. Attached is an improved version that supports ico and tif files and allows to select an image from within the file. For ico files it basically grabs the one image you select (with Image Index) and make an array of bytes that is a ico file with only that image in it, and then displays it in the picture box. For Tif files there is a .Net method for selecting the image which for some reason doesn't work on ico files. Edit: Updated to work with Tifs as well. Image Manipulation With Ico and Tif.zip1 point

-

I used scripting and low-level VI editing to generate a VI with every single decoration object in LabVIEW, at least those with ID's 0 to -4096. There may be some out of that range (and many in that range don't have a valid image associated with them) but this range contains a lot of them. 0 to -4096.vi1 point

-

Looks like someone beat me to it! Oh well, I already exported it (also for 2009, incidentally) so I'll post it here in case it'd be more convenient to use a regular VI file. 0 to -4096.vi1 point

-

Mwuhahahahaha! Three config tokens have escaped your grasp! I modified them specifically for folks like Flarn! They don't appear as plain text anywhere in the EXE (or in any VI for that matter). Do they guard any great secret of LabVIEW? I'm not telling! But you can have fun pouring through the code and looking for interesting bits and trying to figure out what you need to put in your config file. LabVIEW 2013 or later. Good luck.1 point

-

Basically you need 2 more Property nodes if you want to keep your headers color. you must do what QueueYueue said first. Then : Active Cell.Active Column Number = -2 (this selects all columns) Active Item.Row Number = -1 (this selects the column headers) Active Cell.Background Color = Desired color Then : Active Cell.Active Column Number = -1 (this selects row header) Active Item.Row Number = -2 (this selects all rows) Active Cell.Background Color = Desired color1 point

-

The OpenG Pipe Project does just that. It is a LabVIEW Library that replaces the System Exec function and returns pipe refnums for the three standard IO interfaces and functions to read and write to those refnums. The project hasn't been released yet as I consider it not entirely release quality but it does work for me and I have actually used in in several of my projects already. Since there is no officially released package yet you can't just download it through VIPM from internet. But here is a copy of a package you can install using VIPM. oglib_pipe-1.0-1.ogp1 point