Leaderboard

Popular Content

Showing content with the highest reputation since 03/04/2025 in Posts

-

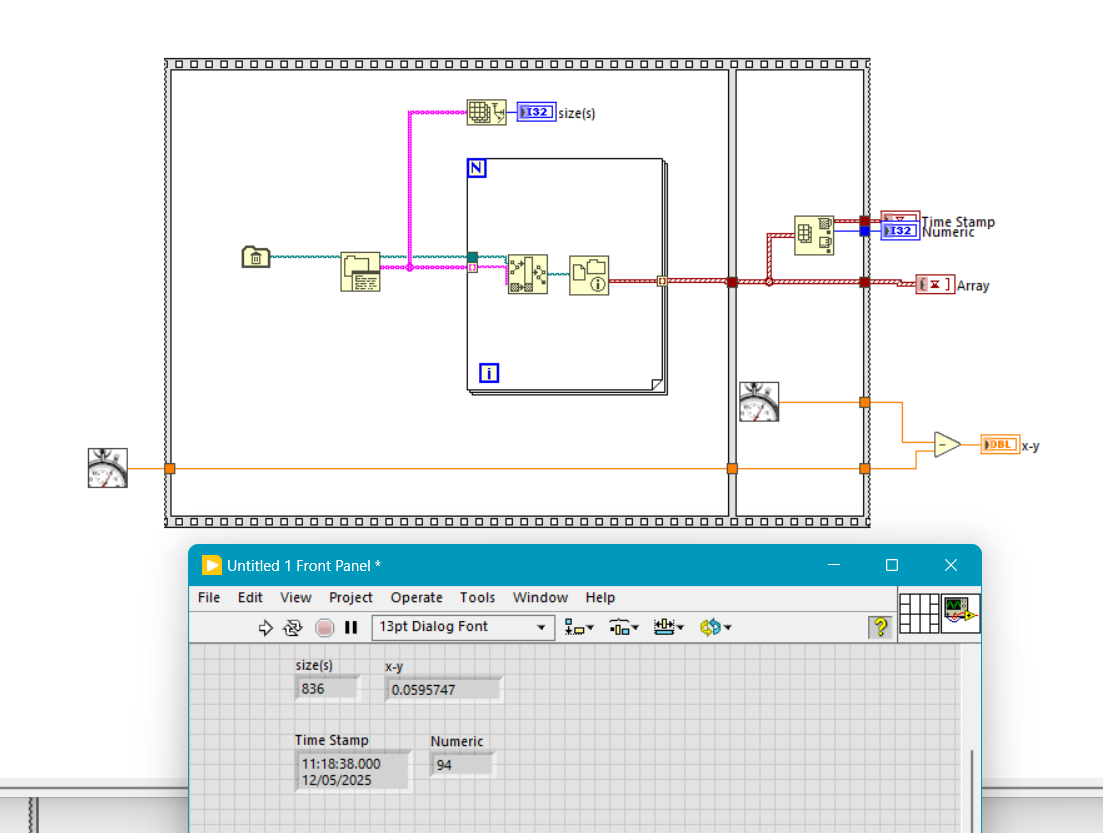

So a couple of years ago I was reading about the ZLIB documentation on compression and how it works. It was an interesting blog post going into how it works, and what compression algorithms like zip really do. This is using the LZ77 and Huffman Tables. It was very education and I thought it might be fun to try to write some of it in G. The deflate function in ZLIB is very well understood from an external code call and so the only real ever so slight place that it made sense in my head was to use it on LabVIEW RT. The wonderful OpenG Zip package has support for Linux RT in version 4.2.0b1 as posted here. For now this is the version I will be sticking with because of the RT support. Still I went on my little journey trying to make my own in pure LabVIEW to see what I could do. My first attempt failed immensely and I did not have the knowledge, to understand what was wrong, or how to debug it. As a test of AI progression I decided to dig up this old code and start asking AI about what I could do to improve my code, and to finally have it working properly. Well over the holiday break Google Gemini delivered. It was very helpful for the first 90% or so. It was great having a dialog with back and forth asking about edge cases, and how things are handled. It gave examples and knew what the next steps were. Admittedly it is a somewhat academic problem, and so maybe that's why the AI did so well. And I did still reference some of the other content online. The last 10% were a bit of a pain. The AI hallucinated several times giving wrong information, or analyzed my byte streams incorrectly. But this did help me understand it even more since I had to debug it. So attached is my first go at it in 2022 Q3. It requires some packages from VIPM.IO. Image Manipulation, for making some debug tree drawings which is actually disabled at the moment. And the new version of my Array package 3.1.3.23. So how is performance? Well I only have the deflate function, and it only is on the dynamic table, which only gets called if there is some amount of data around 1K and larger. I tested it with random stuff with lots of repetition and my 700k string took about 100ms to process while the OpenG method took about 2ms. Compression was similar but OpenG was about 5% smaller too. It was a lot of fun, I learned a lot, and will probably apply things I learned, but realistically I will stick with the OpenG for real work. If there are improvements to make, the largest time sink is in detecting the patterns. It is a 32k sliding window and I'm unsure of what techniques can be used to make it faster. ZLIB G Compression.zip5 points

-

Phew that is a pretty strong opinion! Although I personally am not a fan of the overall style of DQMH none of my problems are with the scripting/wizards or placeholder text. I think any framework that tries to do "a lot" will be complicated... your own personal framework (which you likely find trivial to use) is likely to be a bit weird to others. DQMH is extremely popular for a reason... To paraphrase the words of a wiser person than I, "please don't yuck someone elses yum"3 points

-

Absolutely echo what Shaun says. Nobody banned them. But most who tried to use them have after some more or less short time run from them, with many hairs ripped out of their head, a few nervous tics from to much caffeine consume and swearing to never try them again. The idea is not really bad and if you are willing to suffer through it you can make pretty impressive things with them, but the execution of that idea is anything but ideal and feels in many places like a half thought out idea that was eventually abandoned when it was kind of working but before it was a really easily usable feature.2 points

-

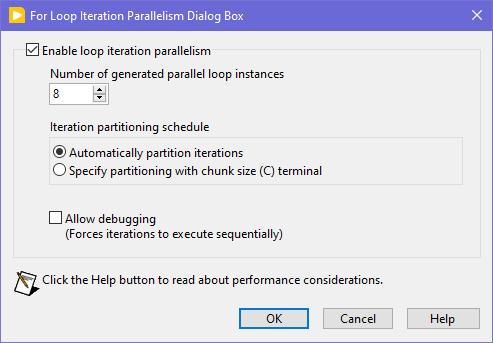

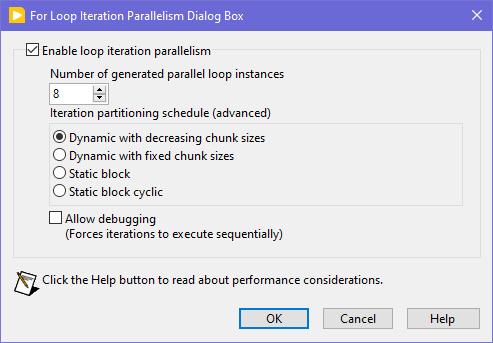

Seems like this one has "escaped everyone's grasp" too. ParallelLoop.ShowAllSchedules=True Because was only checked from the password-protected diagram of ParallelForLoopDialog.vi (LabVIEW 20xx\resource\dialog). Present since LabVIEW 2010. When activated, allows to apply more advanced iteration partitioning schedule. In other words, instead of this you will get this Сould this be useful? I can't say. Maybe in some very specific use-cases. In my quick tests I didn't manage to get increase in any productivity. It's easy to mess up with those options and make things worse, than by default. Also can be changed by this scripting counterpart.2 points

-

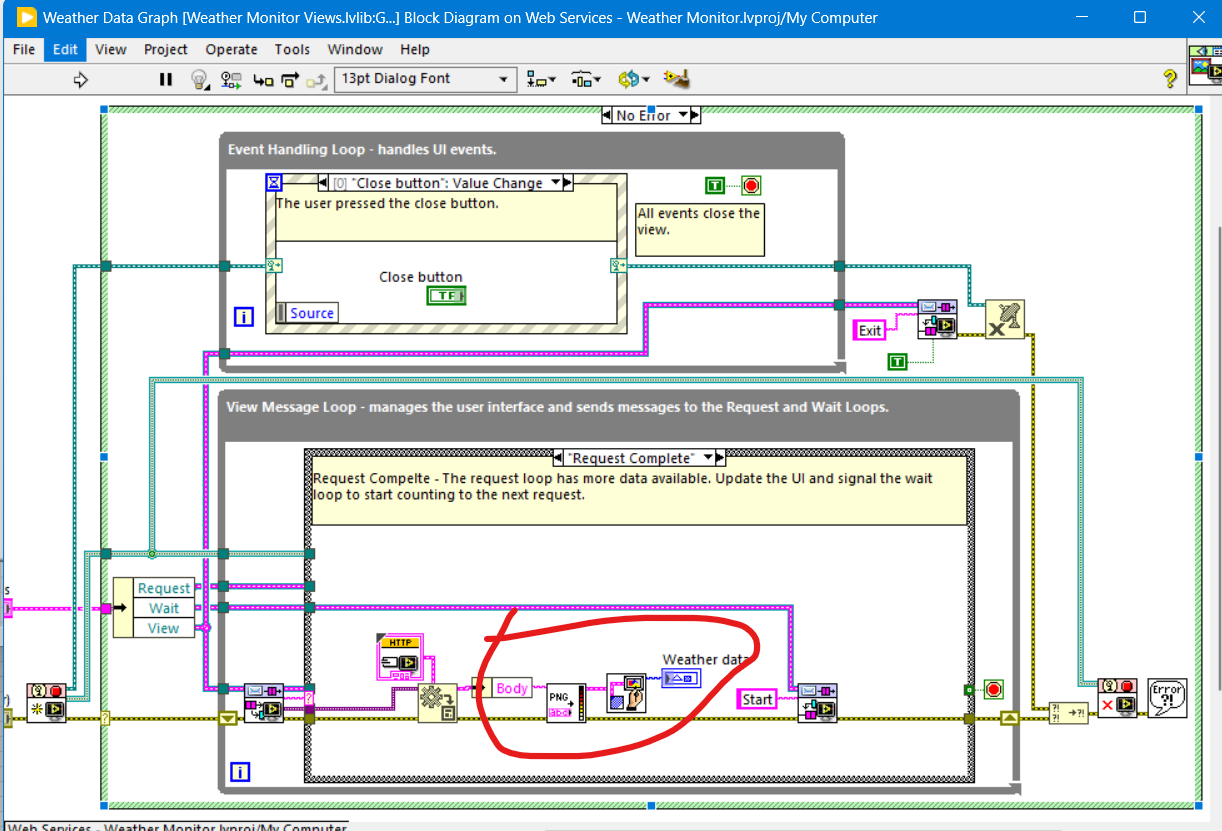

Look at this new download on VIPM https://www.vipm.io/package/bjm_lib_request_power/2 points

-

You want an ability to override the Equality or Comparison operators? I'm unsure, whether it really existed in OpenG packages, but now you have those neat malleable VIs, that let you do that: Search Unsorted 1D Array , Sort 1D Array , Search Sorted 1D Array. They have an additional input to specify your own equals or less function in a form of a custom comparison class or a VI refnum. There's an article to help: Creating a Custom Sorting Function in LabVIEW2 points

-

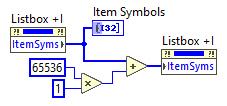

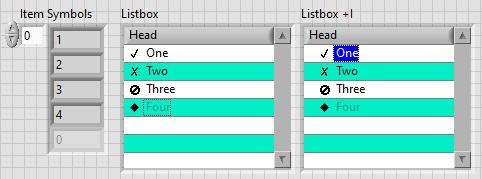

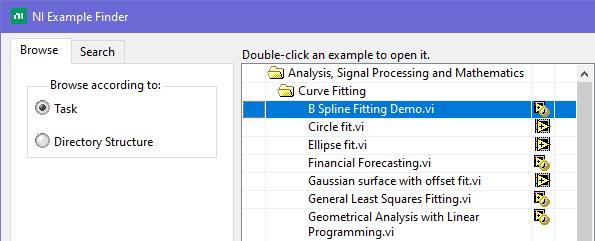

This is exactly what was said in that ancient thread: Tree control in labview. So if you add 65536*N to the Item Symbols property of the Listbox and have the "Enable Indentation" option activated, you shift the symbol/glyph and the text N levels to the right. Could be useful for simple 'parent-child' relationships, if you don't want to use a Tree. And still it's used in Find Examples / NI Example Finder window:2 points

-

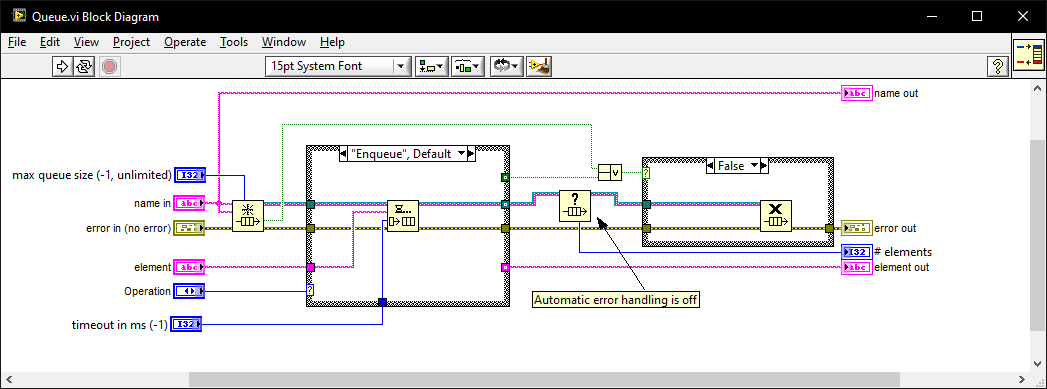

I once went for an interview where they gave me a coding test and asked me to modify it. It was a very long time ago so I don't remember the exact modification they wanted (nothing to do with memory leaks) but I do remember the obtain queue and read queue inside a while loop with the release queue outside. I asked if they wanted me to also fix the memory leak as well as the modifications and they were a little puzzled until I explained what you have just said. I must have seen (and fixed) this while-loop bug-pattern a thousand times since then in various code bases. I also created this VI which I generally use instead of the primitives as it intialises on first call, can be called from anywhere, and prevents most foot-shooting by rolling them all into a single VI and ensuring all references but 1 are closed after use. Queue.vi2 points

-

2 points

-

In the past I have used the IMAQ drivers for getting the image, which on its own does not require any additional runtime license. It is one of those lesser known secrets that acquiring and saving the image is free, but any of the useful tools have a development, and deployment license associated with it. I've also had mild success with leveraging VLC. Here is the library I used in the past, and here is another one I haven't used but looks promising. With these you can have a live stream of a camera as long as VLC can talk to it, and then pretty easily save snapshots. EDIT: The NI software for getting images through IMAQ for free is called "NI Vision Common Resources". This LAVA thread is where I first learned about it.2 points

-

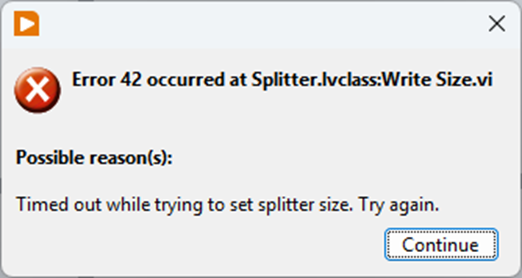

Just to share how I got around this: By deleting 1 front panel item at a time I found that one single control was causing PaneRelief to crash; an XY graph. Setting it temporarily to not scale and replacing it with a standard XY graph (the one I had had some colours set to transparent etc) was enough to avoid having PaneRelief crash LabVIEW, but it would now just present a timeout error: I found a way arund this too though: the VI in question was member of a DQMH lvlib that probably added a lot of complexity for PaneRelief. With a copy saved as a non-member it worked: I could replace the graph, edit the splitters with PaneRelief without the timeout error (even setting the size to 0), then copy back the original graph replacing the temporary one, and finally move the copy back into the lvlib and swap it with the original. Voila! What a Relief... 😉 I probably have to repeat this whole ordeal if I ever need to readjust the splitters in that VI with PaneRelief though 😮2 points

-

I confirm that this license is nearly identical to the standard EULA we use for our commercial products. Some wording is not applicable to a distributed palette of VIs like this. Our intention was to share a few reusable tools, used internally, with the community. Ideally, we should have released them under a standard open-source license such as MIT or a similar option. These VIs have been released “as-is,” without support or any guarantee that they will function for your specific use case. You may need to troubleshoot or fix any issues on your own. Feel free to use them in any context. I’ll look into whether it's possible to update the packages on the tool network to replace the current license with a more standard open-source one.2 points

-

I put a temporary ban on inserting external links in posts (except from a safe list). We'll see what affect it has.2 points

-

2 points

-

Your reporting of spam is helpful. And just like you are doing one report per user is enough since I ban the user and all their posts are deleted. If spam gets too frequent I notify Michael and he tweaks dials behind the scene to try to help. This might be by looking at and temporarily banning new accounts from IP blocks, countries, or banning key words in posts. He also will upgrade the forum's platform tools occasionally and it gets better at detecting and rejecting spam.2 points

-

With ZLib you just deflateInit, then call deflate over and over feeding in chunks and then call deflateEnd when you are finished. The size of the chunks you feed in is pretty much up to you. There is also a compress function (and the decompress) that does it all in one-shot that you could feed each frame to. If by fixed/dynamic you are referring to the Huffman table then there are certain "strategies" you can use (DEFAULT_STRATEGY, FILTERED, HUFFMAN_ONLY, RLE, FIXED). The FIXED uses a uses a predefined Huffman code table.1 point

-

You could also check https://github.com/ISISSynchGroup/mjpeg-reader which provides a .Net solution (not tried). So, who volunteers for something working on linux?1 point

-

1 point

-

From what I can remember, for LV 5.0.x and older RTE (i.e., a loader plus small subset of resources) was included into the EXE automatically during the build process. For LV 5.1.x there was a choice: to include RTE into the build or to use an external RTE. And since LV 6.0 only an external RTE was supposed. I could say more, such a trick is still possible for all modern versions on all three platforms (Win, Mac, Linux). The latest version I tested it on, was LV 2018, but I'm pretty sure, the technique hasn't changed much. I can't remember, from which version NI started to use Visual Studio 2015, but since then each EXE requires The Universal CRT, that is contained in Microsoft Visual C++ 2015 Redistributable. One could install such a distro on a clean machine or copy all these files from the machine, where such a CRT is already installed. Now besides of those the application will also require this minimal subset of folders/files (true for LV 2018 64-bit): On Linux it goes much easier (true for LV 2014 64-bit): For LV 2018 64-bit with a "dark" RTE it also wants And for Mac OS you can embed RTE into the application with this script: Standalone LabVIEW-built Mac Application with Post-Build Action. Of course (and I'm sure everyone understands that), the technique described above, is applicable to very simple 'a la calculator' apps and not very to not at all for more or less complex projects. The more functions are called, the more dependencies you get. If something from MKL is used, you need lvanlys.dll and LV##0000_BLASLAPACK.dll, if VISA is used, you need visa32.dll, NiViAsrl.dll and maybe others, and so on and so forth.1 point

-

I haven't had much time to investigate this until this month, but I think I've found the cause. XNodes on the production computer were not designed optimally. In the AdaptToInputs ability I was unconditionally passing a GenerateCode reply, thinking that the AdaptToInputs is only called when interacting with the XNode (connecting/disconnecting wires). It turned out that LabVIEW also calls the AdaptToInputs ability once, when the VIs are loaded and any single change is made, no matter if it touches the XNode or not. As I had many such non-optimal XNodes in many places, it was causing code regeneration in all of them. Besides of that some of my VIs had very high code complexity (11 to 13), because of a bunch of nested structures. When the XNodes regeneration was occurring simultaneously with the VIs recompilation, it was taking that a minute or so. After I added extra conditions into my AdaptToInputs ability (issue a GenerateCode reply only, when the Term Types are changed), the edits in my VIs started to take 1.5 seconds. Still the hierarchy saves can be slow, when some 'heavy' VIs are changed, but it's a task for me to refactor those VIs, so their complexity could decrease to 10 or less. By the way, my example from the previous page was not suitable for demonstrating the situation, as its code complexity is low and the Match Regular Expression XNode does not issue a GenerateCode reply in the AdaptToInputs.1 point

-

1 point

-

My problem was on a windows machine but I managed to solve it; I found that using LVCompare also segfaulted on the same file, but did not segfault with the -nofp command line option. With this I was able to confirm the specific file that both LVMerge and LVCompare were segfaulting on, and systematically delete half the code and re-test whether LVCompare would crash. After a few hours I was able to track down the offending piece of code to a random chart; I'm honestly still not sure what was causing them to segfault, but deleting and replacing the chart fixed the issue. Hope this helps someone else out there!1 point

-

You may also want tell people where you can actually download or at least buy this. Although if you want to sell it, do not expect to many reactions. It is already hard to get people to use such toolkits when you offer them for free download.1 point

-

I would suggest rabbitmq, i want(ed) to present it at a LabVIEW user group (LUGE) but haven't done it yet. It's very powerful. I use redis and did a quick presentation (in french) at LUGE recently, i haven't used the stream feature though, I only used it as cache.1 point

-

Redis is certainly high performance and suited to multiple, loose writers, readers and subscribers, with bindings for so many ecosystems. One of its several features, which I haven't perused, are Streams. I'd be curious too to know whether continuous cross-app data streaming could be efficiently implemented using them.1 point

-

Yup. There is: MMAP (1.0.1).1 point

-

1 point

-

I cannot look at your file, but I suggest save the data to TDMS or any binary format of your choice. Once the file is saved, then you can convert it to text.1 point

-

There is no typos and errors in your posts. Only pearls of wisdom and oracles of truth that we mortals can't understand yet...1 point

-

Drat, and now my typos and errors are put in stone for eternity (well at least until LavaG is eventually shutdown when the last person on earth turns off the light) 😁1 point

-

@Rolf Kalbermatter the admins removed that setting for you as everything you say should be written down and never deleted 🙂1 point

-

Had the same issue. Removing a VI from a lvlib, using Pane Relief to set splitter size and moving it back to the the lvlib worked for me to. Thanks!1 point

-

1 point

-

In addition to the LV native method, there are options with .NET and command prompt: Get Recently Modified Files.1 point

-

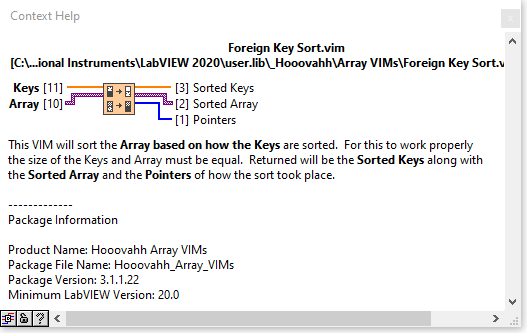

That's how I'd do it. Then combine that with the Foreign Key Sort from my Array package, putting the Time Stamps into the Keys, then paths into the Arrays, and it will sort the paths from oldest to newest. Reverse the array and index at 0, or use Delete From Array to get the last element, which would be the newest file.1 point

-

1 point

-

I have experienced the same thing when my VI was the member of a large class. I removed the VI from the class, set the splitter positions, and then added it back to the class. :shrug:1 point

-

Put the acquire image and save to file in the event structure timeout case, but only write to file conditionally (i.e. if the user has clicked the button)1 point

-

1 point

-

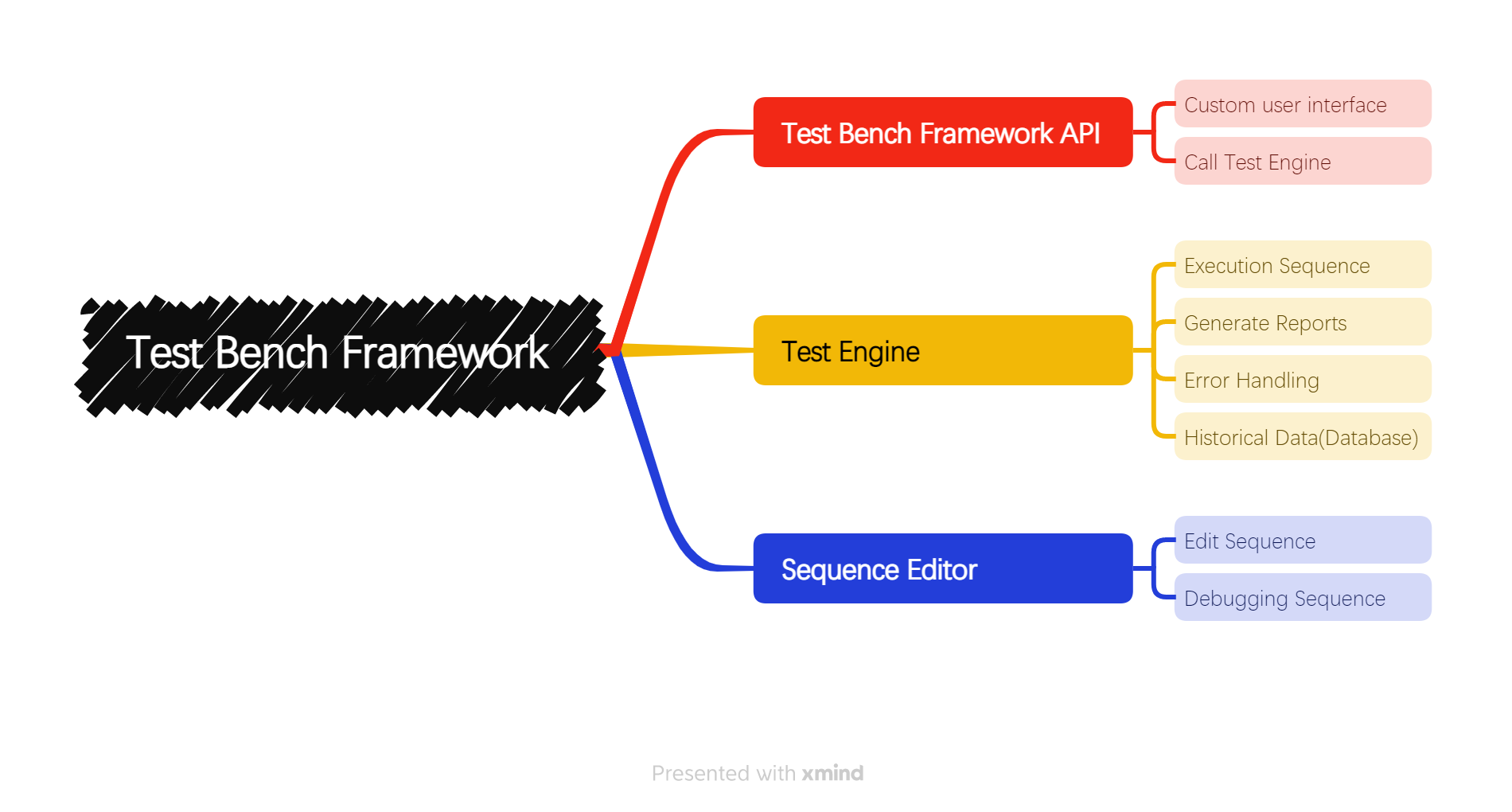

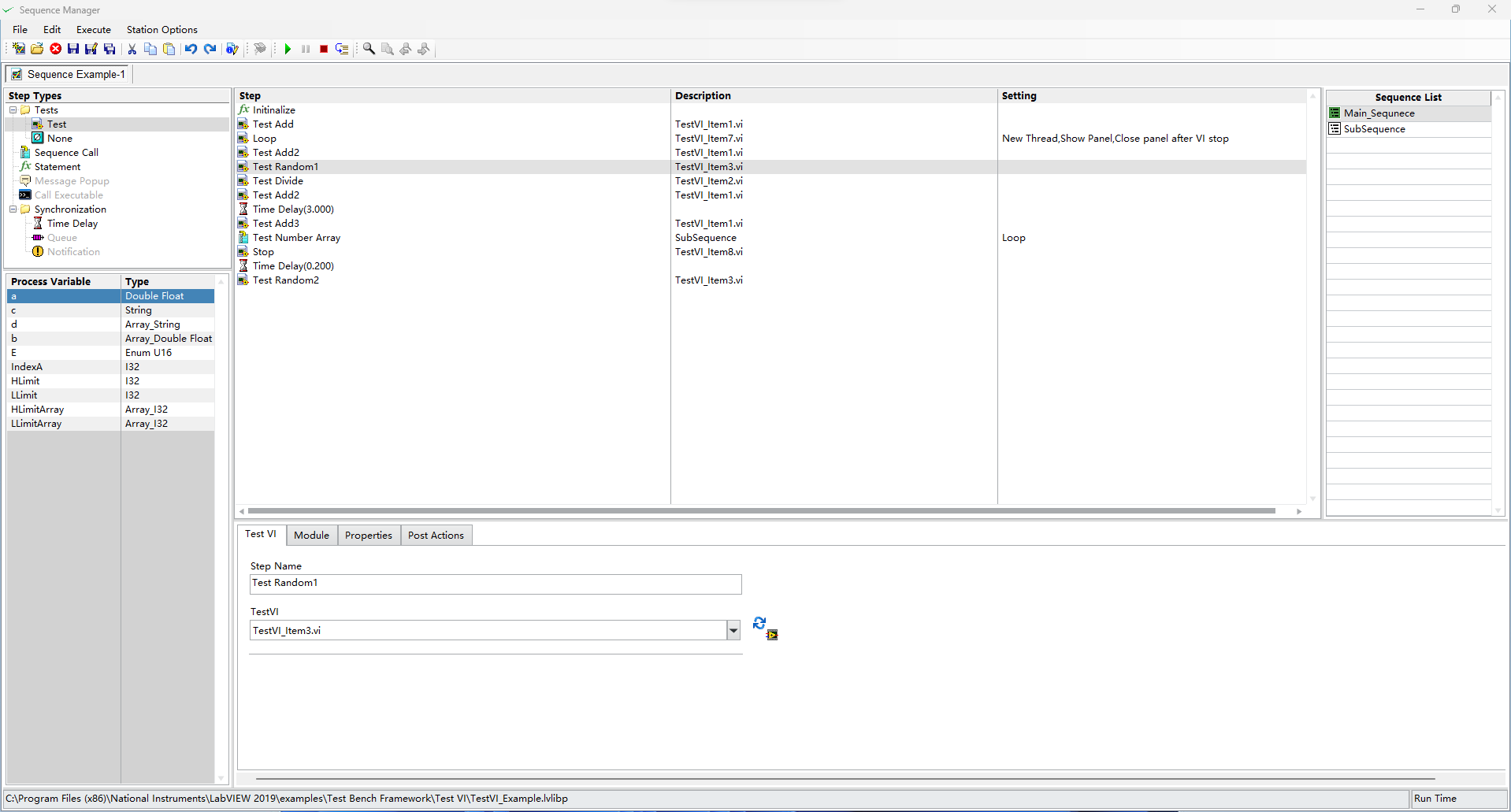

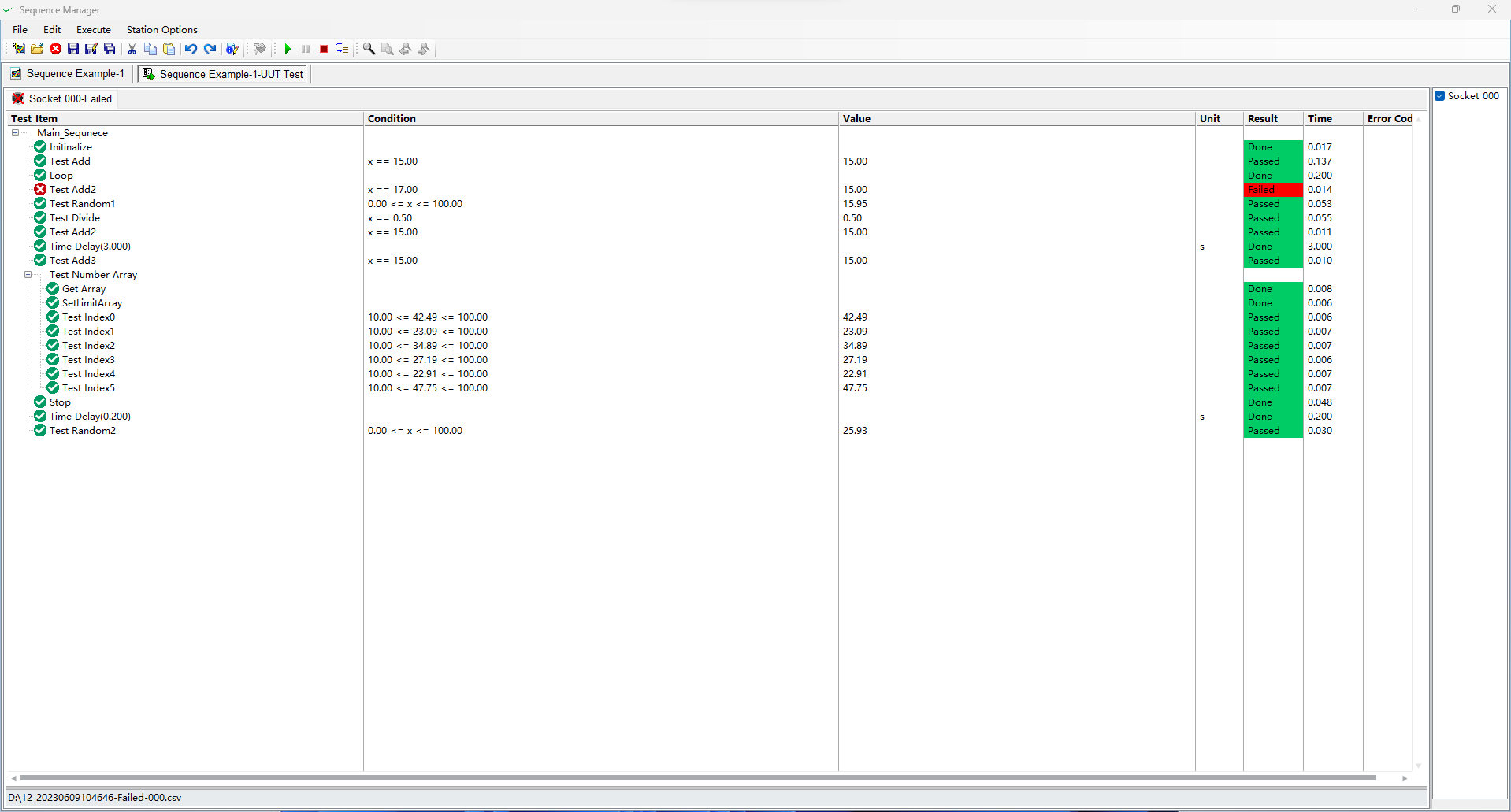

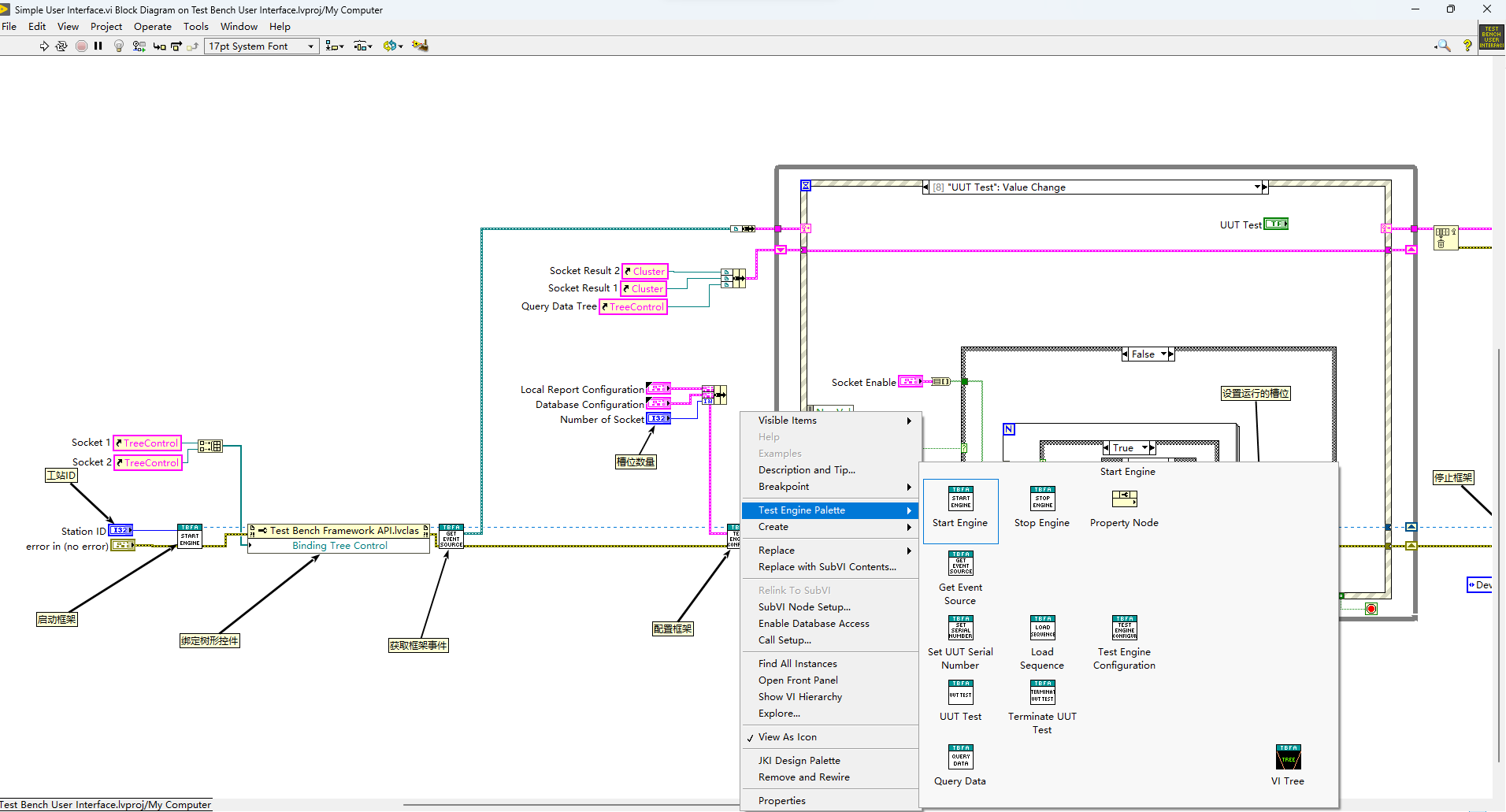

I used LabVIEW to develop a toolkit for ATE software. The toolkit is called "Test bench Framework", which includes a test sequence editor and a test engine.This toolkit features the ability to execute several different sequences in parallel.If you are interested in this kit please contact me, thank you! This toolkit is over 10MB in file size and cannot be published on VIPM, so I uploaded it to Github.Test-Bench-Framework . I used the TestStand icon inside my own sequence editor and wondered if there would be any copyright issues involved.But it's not commercially available yet.1 point

-

We use the MPSSE.dll LABview driver from Benoit. We are trying the i2c read 1 byte and multi bytes. We expect ack for all bytes except the last byte with nak. During read, we understand that the I2C master drives the ack/nak. However, ack and nak happens randomly. Any body have any suggestions Thank you Dan1 point

-

Here is a VI that gets the title of the window that is active. You could then continually loop until the title you expect is active, then perform operations. https://forums.ni.com/t5/LabVIEW/Get-Current-Active-Window/m-p/3930389#M11169261 point

-

1 point

-

Here is a quick and dirty edit. It allows for column separators to be moved, but I noticed that on resize it will set the column widths. So this means if you manually move the columns, and then resize the control it may change the columns in an unexpected way. But at that point you can manually move the separators again. I only have 2017 and 2018 so this is for 2017 and newer now. Variant_Probe-2.4.3-0.ogp1 point

-

It's easy, there is probably a vi with that name in memory, so if you would remove the class prefix there would be a conflict. Rename the vi first to something unique and the try to delete it.1 point

-

I used scripting and low-level VI editing to generate a VI with every single decoration object in LabVIEW, at least those with ID's 0 to -4096. There may be some out of that range (and many in that range don't have a valid image associated with them) but this range contains a lot of them. 0 to -4096.vi1 point

-

Looks like someone beat me to it! Oh well, I already exported it (also for 2009, incidentally) so I'll post it here in case it'd be more convenient to use a regular VI file. 0 to -4096.vi1 point

-

Sweet! That solves it. So, now we can write a LabVIEW console app! Here is the VI that let's you write to the StdOut of the calling console: Write to StdOut of Calling Parent.vi -John1 point

-

Maybe you'll get Xmas before you know it... I found a way to retrieve all tags from a VI. I basically scan the VI file and get an index to the position of tags in the file. I then extract the tag names. Since I don't know to which objects it is related, I have to scan all objects on FP and BD to associate them properly. Once done, you get a list of refnums and variants for the Object's references and a list of tags to which it is associated. I also included an example of code to write a tag to the Block Diagram. Use the same template to write to FP or any objects. Open the project and launch "Get All Tags from VI". Browse the path to the example file "Tagged Test VI" and that's it. Saved in 8.6 but will work in 9.0 (2009) as well. Note that the versioning is important as tags are seen only through scripting and NI can change the way it is saved from one version to the other. Retrieve Tags 8.6.zip1 point