-

Posts

5,001 -

Joined

-

Days Won

311

Everything posted by ShaunR

-

You don't need to do partial matching. You translate the string "The remaining time is %d Seconds" and use the Format Into String primitive. You might want to take a look at Passa Mak. It will generate all the language files for translation and switch all the UI controls. (Good luck with the Chinese one. I'm not brave enough to attempt the East Asian translations.)
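As a text-language illustration of the point above (LabVIEW itself is graphical), here is a minimal Python sketch of translating the whole format string and only then substituting the value. The `TRANSLATIONS` table, the French wording and the function names are assumptions made for the example; they are not part of Passa Mak or LabVIEW.

```python
# Hypothetical translation table: the complete format string is the lookup key,
# so the %d placeholder travels with the sentence and no partial matching is needed.
TRANSLATIONS = {
    "fr": {
        "The remaining time is %d Seconds": "Le temps restant est de %d secondes",
    },
}


def translate(fmt: str, lang: str) -> str:
    """Return the translated format string, or the original if none exists."""
    return TRANSLATIONS.get(lang, {}).get(fmt, fmt)


def remaining_time_message(seconds: int, lang: str) -> str:
    # Translate first, then substitute the value (the "Format Into String" step).
    return translate("The remaining time is %d Seconds", lang) % seconds


print(remaining_time_message(42, "fr"))
print(remaining_time_message(42, "en"))  # no table for "en", falls back to the original
```

Because the placeholder is part of the translated string, each language can put the number wherever its grammar needs it.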

-

I think the OP was just having difficulties figuring out how to do #5 and #6. He'll be back again when he runs into #2 between modules.

- 20 replies
- Tagged with: modular, application design (and 1 more)

-

MoveFileWithProgress problem from kernel32.dll

ShaunR replied to Houmoller's topic in Calling External Code

Integrating LabVIEW code -

Functional Dataflow Programming with LabVIEW

ShaunR replied to Tomi Maila's topic in LabVIEW Feature Suggestions

I hate strict typing. It prevents so much generic code. I like the SQLite method of dynamic typing where there is an "affinity" but you can read and write as anything. I also like PHP's dynamic typing, but that is a bit looser than SQLite and therefore a bit more prone to type cast issues, but still few and far between. That is why sometimes you see things like value+0.0 so as to make sure that the type is stored in the variable as a double, say.

Generally, though, I have spent a lot of time writing code to get around strict typing. A lot of my code would be a lot cleaner and more generic if it didn't have to be riddled with Var to Data with hard-coded clusters and conversion to every datatype under the sun. You wouldn't need a polymorphic VI for every data-type or a humungous case statement for the same. It's why I choose strings, which is the next best thing, with variants coming in 3rd.

<rant> I mean. We have a primitive for string to int, one for string to double, another for exponential (and again, all the same in reverse). Really? Why can't we connect a string straight to an integer indicator and override the auto-guess type if we need to. </rant>

But yes. I think it can be done in LabVIEW. They could do it with variants. Not as good as dynamic typing, but it'd be closer. A variant knows what type the data is and you can connect anything to them, but they failed to do the other end when you recover the value (I think it was a conscious decision). That is why I call variants "the feature that never was" because they crippled them. I think recovery of data is a bit of a blind spot with NI. Classes suffer the same problem. It's always easy getting stuff into a format/type/class, but getting it out again is a bugger.

- 7 replies

- Tagged with: functional programming, dataflow (and 1 more)

-

Need help: want to build a real-time GPS tracker for multiple movers

ShaunR replied to Aristos Queue's topic in Hardware

Thinking about it. If you go the microcontroller route for the detector, you might as well go for Bluetooth to get the extra range and tell people to keep their IDs visible. I once used a similar idea to upload results data to engineers' phones when they passed by inspection machines on the factory floor. It continuously scanned for Bluetooth phones (not many tablets in those days) and if it found one, compared the MAC address to a user list. It then pushed the files to their SD card. You may remember the OPP Push software that was in the CR a while ago. That was part of it (the bit that detected and pushed the files).

-

Need help: want to build a real-time GPS tracker for multiple movers

ShaunR replied to Aristos Queue's topic in Hardware

Well, apart from the fact that the phone doesn't need to be out and can stay in their pocket (they only have to visit an auto-refreshing page once and leave the browser open without killing it), you'll need 3 USRPs to do that passively via their GSM signal (not cheap). But sure. There's lots of ways, from using their phones (which we have covered) to RFID tags, GPS pedometers, Wifi triangulation and a myriad of custom solutions. I could carry on for weeks giving solutions with the tech available. It depends on your budget, timescale and the amount of effort you want to put in, and only you currently know that. I get the feeling, though, that you have been asked to do it as the "tech guy" and gone "sheesh, I hope I don't have to build one".

-

Need help: want to build a real-time GPS tracker for multiple movers

ShaunR replied to Aristos Queue's topic in Hardware

No. I'm suggesting you just use a normal webserver with PHP (or LabVIEW if you really must) and JavaScript and the phone's browser (HTML5 geolocation). Thanks to the Russians, we now have much better accuracy if your phone can use GNSS. I guess yours can't.

-

I've thought for a long time that we've needed another option apart from just Error and Warning. Errors break VIs, but warnings are just overwhelming in number and completely trivial. So much so that they are usually ignored and/or turned off, which makes them ineffective. I think most warnings should be regarded as "hints", and things like your race condition are actual warnings, but they shouldn't break the VI, mainly for Mje's reason that it may not matter but also for "out there" edge cases like the RNG. Personally, I'd love to see warnings (my definition of them) about race conditions. If you could pull it off, it would prevent quite a few of us stepping in those bear traps.

-

Need help: want to build a real-time GPS tracker for multiple movers

ShaunR replied to Aristos Queue's topic in Hardware

Sure, if you just want to use a router as a proximity device and say they are in the building. If you want to know which room they are in, then use an RFID and send the data via that wifi router. You can have 100 scattered all throughout the building then and know who's in all the rooms. If, on the other hand, you want to know which room they are in with Wifi, you need 3 of them to do triangulation, with less accuracy and dead rooms. It depends on the granularity required and how much time you want to put in. You have 4 technologies which can all interface to each other and can be used to pinpoint people. Combinations of those technologies will give you differing accuracies and capabilities. I'd want to be able to resolve who was talking to who, how many are grouped and where, and who's wandered off to where they shouldn't. That's just me though. If you want a cheap, cheerful and quick solution, then you could just go for them using their own phones (or let them borrow some). Then you don't need wifi or any fancy hardware (although if you borrowed a USRP from NI, you could set up your own cellphone base-station). You could then just track them on Google Maps with a bit of PHP and JavaScript on a webserver. But where's the fun in that?

-

Need help: want to build a real-time GPS tracker for multiple movers

ShaunR replied to Aristos Queue's topic in Hardware

It's unusual to find "buy now" buttons for hardware solutions. Here's one for RFID. Many mobile phones also come with Near Field technologies now, too. It really has to be GPS for long range, though. I think Wifi on a few acres will give huge blind spots (just a gut feeling).

-

Streaming timestamped data to chart, but ignoring gaps in acquisition

ShaunR replied to Mike Le's topic in User Interface

You could use the "Picture Plot" to draw it. If you are heavily dependent on cursors and annotations, then you'd have to handle all that yourself, so that may put you off. Another alternative is to have two graph controls side-by-side with their scales hidden and use a Picture Plot function to draw the scale. You won't have to worry about alignment, but cursors won't cross the boundaries so you'd have to "fake" it. In a similar vein, you could have two controls, one on top of the other, and manipulate the start and maximum scales so that they appear to be contiguous. You may get flicker with this though. With the exception of the first, these are all variations on "don't put them all in one graph", so I expect there are others. We've all gotten used to the in-built features of the graphs, so generally we balk at having to write the code to get the features back. This puts many people off the first option, but you can do some fantastic graphs with the Picture Plots (gradient shaded limit areas behind the data, anyone?).

-

Need help: want to build a real-time GPS tracker for multiple movers

ShaunR replied to Aristos Queue's topic in Hardware

There probably are things off the shelf (maybe look into car fleet trackers). This sounds like a fun project that you should just do because you can, though. Maybe later your charity can sell it to other organisations (paintball?) to raise some funds. If you get the users to use their own phones, you will even get cell location enhancement and cell location when GPS is unavailable. Remember that mobile phones are tracking devices that make telephone calls. You could use RFID in addition to GPS. GPS is accurate to a few meters (10?) and RFID is cheap. They would be great for detecting when a room is occupied and by whom if the GPS cannot distinguish. I would even be tempted to make my own RFID senders with an Arduino or similar, but it really depends on your time-scale. How long have you got? What are the time constraints for this project? I'll give your charity some licences for the Websocket API, gratis, if you want to stream the data via websockets to people's browsers in their tablets or phones (you only need them for development). Webservices? Data Dashboard? Meh! I thought you wanted real-time. That technology is sooooo 2000.

-

Well. You mention that you don't want to use LVOOP because it makes it difficult to grasp for novices, but then advocate a Muddled Verbose Confuser (MVC) architecture, where even experts on that design pattern can't agree on what should be in which parts when it comes to real code. As it needs to be simple for novices, I also suggest you throw rotten tomatoes at anyone that mentions "The Actor Framework". Since there may be many people who build on the code and many will have limited experience, have you thought about a service oriented architecture? With this approach you only need to define the interfaces to external code written by "the others". They can write their code any way they like, but it won't affect your core code if they stuff it up. You can then create a plugin architecture that integrates their "modules", which communicate with your core application via the interfaces. The module writers don't need to know any complicated design patterns or architecture or even the details of your core code (however you choose to write it). They will only need to know the API and how to call the API functions.
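To make the plugin idea concrete, here is a rough Python sketch (not LabVIEW, and not anyone's actual framework): the core only knows a small interface, and module authors only need to implement it. The `Module` protocol, the `Core` class and the two example modules are all hypothetical names invented for illustration.

```python
from typing import Protocol


class Module(Protocol):
    """Hypothetical interface: the only thing a module author has to implement."""

    name: str

    def handle(self, request: str) -> str: ...


class Core:
    """Core application: it talks to modules only through the Module interface."""

    def __init__(self) -> None:
        self._modules: dict[str, Module] = {}

    def register(self, module: Module) -> None:
        self._modules[module.name] = module

    def call(self, name: str, request: str) -> str:
        try:
            return self._modules[name].handle(request)
        except Exception as exc:
            # A faulty (or missing) module is reported, but the core keeps running.
            return f"error from {name}: {exc}"


class Logger:
    name = "logger"

    def handle(self, request: str) -> str:
        return f"logged: {request}"


class Broken:
    name = "broken"

    def handle(self, request: str) -> str:
        raise RuntimeError("module bug")


core = Core()
core.register(Logger())
core.register(Broken())
print(core.call("logger", "start"))
print(core.call("broken", "start"))
```

The point of the design is the boundary: whatever the module writers do behind `handle`, the core only ever sees a request going in and a reply (or an error) coming out.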

- 20 replies
- Tagged with: modular, application design (and 1 more)

-

Xilinx14_7? Did you install that or did it come with your FPGA?

-

Real-time acquisition and plotting of large data

ShaunR replied to wohltemperiert's topic in LabVIEW General

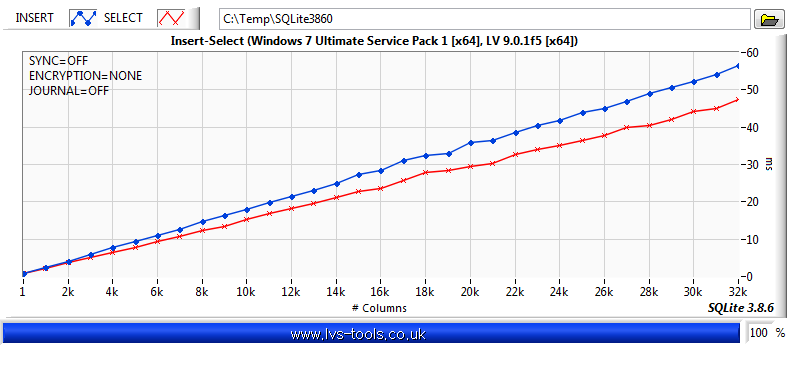

Yup. It is linear. A while later... That was up to the default maximum number of columns (1000 ish for that version). As I was building version 3.8.6 for uploading to LVs-Tools, I thought I would abuse it a bit and compile the binaries so that SQLite could use 32,767 columns (3.8.6 is a bit faster than 3.7.13, but the results are still comparable). I think I've taken the thread too far off from the OP now, so that's the end of this rabbit hole. Meanwhile, back at the ranch...

-

What Data Structure Would You Use?

ShaunR replied to Testy's topic in Application Design & Architecture

Whatever was needed to achieve the required functionality. Probably a boolean, two numerics and an enum, plus other stuff like refnums, events, etc.

-

What Data Structure Would You Use?

ShaunR replied to Testy's topic in Application Design & Architecture

Something else.

-

Real-time acquisition and plotting of large data

ShaunR replied to wohltemperiert's topic in LabVIEW General

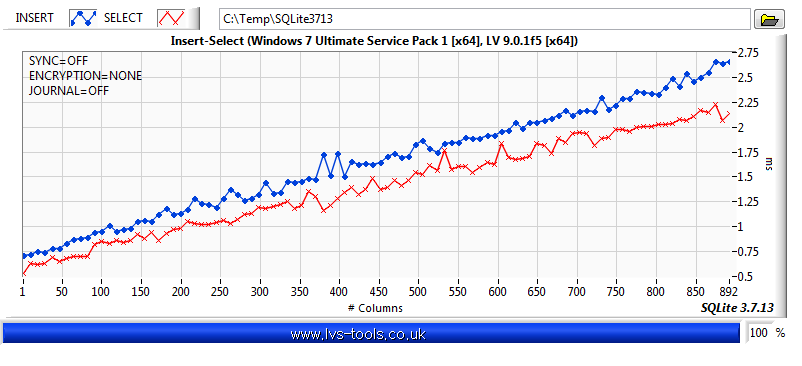

It's an excellent point. For example, trying to log 200 double precision (8 byte) datapoints at 200Hz on a sbRIO to a class 4 flash memory card is probably asking a bit much. The same on a modern PC with an SSD should be a breeze if money is no object. However, we are all constrained by budgets, so a 4TB mechanical drive is a better fiscal choice for long term logging just because of the sheer size. The toolkit comes with a benchmark, so you can test it on the hardware. On my laptop SSD it could just about manage to log 500 8-byte (DBL) datapoints in 5ms (a channel per column, 1 record per write). Whether that is sustainable on a non-real-time platform is debatable and would probably require buffering. 200 datapoints worked out to about 2ms and 100 was under 1ms, so it could be a near linear relationship between the number of columns (or channels, if you like) and write times. The numbers could be improved by writing more than one record at a time, but a single record is the easiest. I have performance graphs of numbers of records versus insert and retrieve times. I think I'll do the same for numbers of columns, as I think the max is a couple of thousand.

-

So can we not prepend an index to the name in the variant attribute list to enforce a sort order and strip it off when we retrieve? (I really should download it and take a proper look, but having to find and install all the OpenG stuff first is putting me off...lol)
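For what it's worth, the prepend-and-strip idea can be sketched in a few lines of Python. A sorted list of keys stands in for the alphabetically sorted variant attribute list; the `:` delimiter, the zero-padding and the function names are assumptions for the example, not anything taken from the library under discussion.

```python
def add_order_prefix(names):
    """Prefix each name with a zero-padded index so an alphabetical sort keeps the original order."""
    width = len(str(len(names)))  # pad so that "10" does not sort before "2"
    return [f"{i:0{width}d}:{name}" for i, name in enumerate(names)]


def strip_order_prefix(key):
    """Remove the index prefix again; only the first ':' is treated as the delimiter."""
    return key.split(":", 1)[1]


names = ["zeta", "alpha", "mid"]
stored = sorted(add_order_prefix(names))  # stand-in for the sorted attribute list
print(stored)
print([strip_order_prefix(key) for key in stored])
```

The zero-padding matters once there are ten or more names, otherwise a plain text sort would put index 10 ahead of index 2.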

-

<Dumb question> The request is for values not to be sorted alphabetically. Is the solution just not to sort? Do we, indeed, sort them? Is it part of the prettyfier rather than the encoding? Where exactly is this sorting taking place?

-

Real-time acquisition and plotting of large data

ShaunR replied to wohltemperiert's topic in LabVIEW General

A relational database (RDB) is far more useful than a flat-file database, generally, as you can do arbitrary queries. Here, we are using the query capability to decimate without having to retrieve all the data and try and decimate in memory (which may not be possible). We can ask the DB to just give us every nth data-point between a start and a finish. To do this with TDMS requires a lot of jumping through hoops to find and load portions of the TDMS file if the total data cannot be loaded completely into memory. That aspect is a part of the RDB already coded for us. It is, of course, achievable in TDMS but far more complicated, more coding and requires fine-grained memory management. With the RDB it is a one-line query and it's job done. Additionally, there is an example written that demonstrates exactly what to do, so what's not to like? If the OP finds that he cannot achieve his 200Hz acquisition via the RDB, then he will have no other choice but to use TDMS. It is, however, not the preferred option in this case (or in most cases IMHO).
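As a rough illustration of the "every nth data-point between a start and a finish" query, here is a self-contained sketch using Python's built-in `sqlite3` module. It is not the example that ships with the toolkit; the `samples` table, its columns and the `decimate` helper are made up for the demonstration, and it assumes contiguous integer row ids.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (id INTEGER PRIMARY KEY, t REAL, value REAL)")
conn.executemany(
    "INSERT INTO samples (id, t, value) VALUES (?, ?, ?)",
    [(i, i * 0.005, float(i)) for i in range(1, 1001)],  # 200 Hz means 5 ms spacing
)


def decimate(conn, start_id, end_id, n):
    """Return every nth row between start_id and end_id (inclusive), counted from start_id."""
    return conn.execute(
        "SELECT id, t, value FROM samples "
        "WHERE id BETWEEN ? AND ? AND (id - ?) % ? = 0 ORDER BY id",
        (start_id, end_id, start_id, n),
    ).fetchall()


rows = decimate(conn, 101, 200, 10)
print(len(rows), rows[0][0], rows[-1][0])
```

Only the decimated rows ever leave the database, so the full data set never has to fit in memory.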

Real-time acquisition and plotting of large data

ShaunR replied to wohltemperiert's topic in LabVIEW General

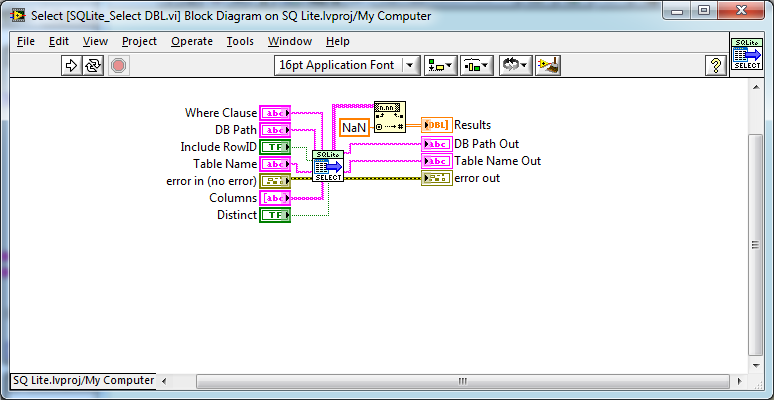

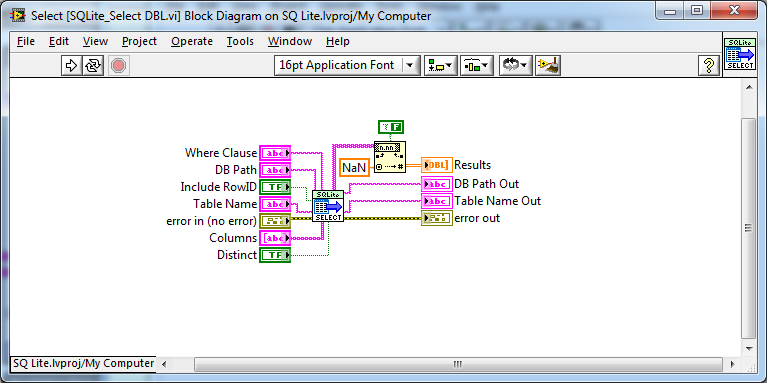

Yes. I see. SQLite is actually the reason here. They removed localisation from the API some time ago, so it will only accept decimal points. So the solution, as you say, is to use "%.;" in the query string to enforce it. And yes, you may run into another issue in that the SELECT for double precision uses the LabVIEW primitive "Fract/Exp String To Number Function". This will cause integer truncation on reads of floating point numbers from the DB on localised machines. I've created a ticket to modify it. You can get updates as to the progress from there. In the meantime, your suggestion to use the string version of SELECT and use the "Fract/Exp String To Number Function" yourself with "use system decimal point" set to false is correct. You can also modify the SQLite_Select Dbl.vi yourself like this. Those are the only two issues, and thanks for finding them. Both the changes will be added for the next release of the API which, now I finally have an issue to work on as an excuse, will be in the next couple of days.

That's awesome. I'm still trying to figure out how you moved the pieces' images from boolean to boolean without looking at the code and that's before I get to the AI

-

Congrats. Here's your promotion