Everything posted by LogMAN

-

A screenshot consists of two elements: a picture of your block diagram and a separate picture of the mouse cursor at that moment. The software also reads the position of the cursor and puts the cursor back on top of the block diagram, scaling it to the current cursor size (of Windows, that is) in the process. This is why it looks bigger in the screenshot than it does on your monitor. That being said, if NI isn't willing to implement high DPI cursors in LV "classic", maybe there is a way to add or replace the cursors using one of the resource editors.

-

There is actually an idea on the Idea Exchange for this, and it was rejected for having already been implemented! You probably won't like their solution... Here you go: https://forums.ni.com/t5/LabVIEW-Idea-Exchange/Make-LabVIEW-DPI-aware-to-support-high-DPI-screens-and-Windows/idc-p/3672540/highlight/true#M37286

-

Web Service Call LabVIEW 8.6.1

LogMAN replied to MDMCE's topic in Remote Control, Monitoring and the Internet

I have never used these protocols myself, so I can only point you in a direction. Here is a topic that has a solution regarding SOAP: https://forums.ni.com/t5/LabVIEW/SOAP-web-service-possible-to-access-from-LabView/td-p/3198844 It's done for LV 2015, but you can always save for a previous version to make it work with any version down to 8.0. Somewhere down that thread is a link to a topic on LavaG, which might prove useful as well:


-

how to make VI like "+" ,"-" functions in Labview

LogMAN replied to thingsful's topic in LabVIEW General

Yes, I can see how that could be an issue. Unfortunately I'm not aware of a solution to fix the icon. The standard functions like Addition, Subtraction, etc. are not really VIs. They are written in C or whatever language they use to program LV nowadays. So, they can do stuff we can't.

-

how to make VI like "+" ,"-" functions in Labview

LogMAN replied to thingsful's topic in LabVIEW General

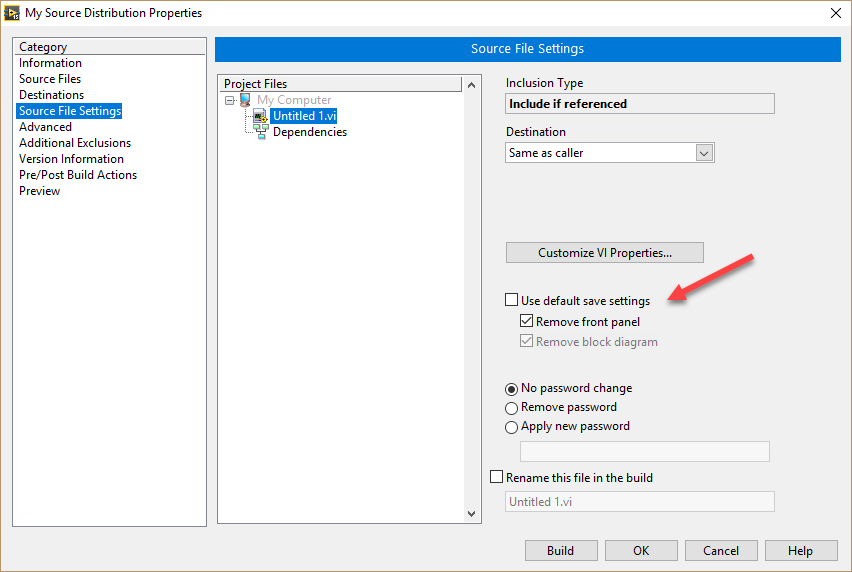

When creating a source distribution you can remove the front panel and block diagram in the source file settings:

Be aware that removing the block diagram prevents you from using the VI on a newer version of LabVIEW as it cannot be recompiled.

Edit: You might find this article helpful: https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z0000019LY1SAM

-

Welcome! I haven't had issues for the past few years and didn't notice any problems today. The posts in your picture are from 2014, so maybe they weren't fixed during the upgrade. Please post a link to the topic in question, so we can compare.

-

It's a good sign. At least you can be sure that it'll work if you wipe your machine and install it from scratch.

If you still want to figure out which part is causing your issues you'll have to eliminate factors one-by-one. Here are a few things you can try:

- Log in with a different user account and try to access the database
- Copy the database to your local drive and try to use it with your application
- Create a new database that has the necessary tables and try to use it with your application


-

Still, you read masses that are way off compared to the values you'd expect, right? Since you are measuring a voltage, you also have to consider side effects from other parts of your setup (like signal-carrying wires running alongside yours, which may induce noise in your wires). I suggest you take a graph of your input signal over a reasonable duration and have a look at it. Try to figure out if the source signal is acceptable for your needs. If that's the case and the output is still wrong, maybe you can share some sample data and a VI so we can find a solution.

-

Have you since tried reinstalling? Repair might have skipped repairing the database driver. Other than that, there are a few clues on the web which indicate that the error code is caused by some invalid character in your query: http://digital.ni.com/public.nsf/allkb/3BC28421B4761BCC8625710E006D76CB https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000P83sSAC http://digital.ni.com/public.nsf/allkb/D8FF30B4409602B386256F3A001FC96C Since that is clearly not the case (unless you have some weird zero-width character in your query), you should try reinstalling the software. You could also try running your VI on another machine to see if it works or not.


-

You should check your (raw) input signal. Sounds to me like the input signal from the force sensor is not as stable as you think it is. The equation is a simple conversion from force to mass, so it'll produce the same output given the same input. Even if you don't take the average over your input signal, the calculated mass should stay within reasonable limits. Check the datasheet of your force sensor. Its output might be scaled (the same could apply to your DAQ settings). How stable are we talking about? 400 g isn't that much (in my world, that is).
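To illustrate why a noisy mass reading points at the input signal rather than the conversion, here is a minimal Python sketch. It assumes the conversion is simply m = F / g (the actual equation is not shown in the thread), and the readings and function names are made up for illustration.

```python
from statistics import mean

G = 9.80665  # standard gravity in m/s^2 (assumed; the thread does not show the equation)


def force_to_mass_g(force_n):
    """Convert a force in newtons to a mass in grams, assuming m = F / g."""
    return force_n / G * 1000.0


def summarize(forces_n):
    """Return the mean mass and the min-to-max spread (both in grams)."""
    masses = [force_to_mass_g(f) for f in forces_n]
    return {"mean_g": mean(masses), "spread_g": max(masses) - min(masses)}


# Hypothetical raw readings from the force sensor, in newtons.
readings = [3.92, 3.95, 3.90, 3.93]
print(summarize(readings))
```

The conversion is deterministic, so any spread in the computed mass comes straight from the spread in the raw readings (or from scaling in the sensor or DAQ settings).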

-

Paintainable is very nice, I like it a lot! If you want to describe code behavior rather than the impact on the developer, I suggest "Melodramatic Solution".

-

I get the same behavior on my machine using your sample project (attached to the post on the dark side). As in your picture, the indicator of the Sub-VI changes its state, but the indicator on the Main VI doesn't. However, I created a VI from scratch with the exact same block diagram as "ReadDVRData" (created manually) and it works fine. Copying the block diagram doesn't work, and neither does reconnecting or replacing the terminal. This is something NI should be concerned about. Looks to me like the terminal assignment is broken or some other weird optimization is failing. Did LabVIEW crash during development by any chance?

-

Not able to receive data using VISA Serial

LogMAN replied to Nishar Federer's topic in LabVIEW General

If I remember correctly the default buffer size is 4096, so even in async the function has to wait for the buffer to clear if the provided data is bigger than the buffer size. Does increasing the buffer size change the result?

-

Files as in "Downloads" or files as in "Attachments"? I have no issues attaching files and images to posts: Empty VI.vi

-

Here you go: https://forums.ni.com/t5/LabVIEW/Labview-2016-new-improvments-to-selecting-moving-and-resizing/m-p/3337796#M979928

-

If you compare LV2017 to LV2015 you'll notice that LV2015 only shows the outline of the contents, while LV2017 renders the whole thing. And not only does LV2017 render the whole thing, but it also shows broken wires while dragging, which means it compiles the code, which is obviously time consuming. And for some reason it does that every time the cursor moves.

Edit: I just noticed that LV2017 also moves FP objects while dragging said code on the BD. Try moving the cursor very slowly; it renders much more smoothly. It actually doesn't render while the cursor is moving faster than a certain speed.

-

For your particular problem the Waveform Chart won't work, as you cannot reset the index to zero without deleting data. Please have a look at "Waveform Chart Data Types and Update Modes" in the Example Finder; it visualizes the modes in which you can use the Waveform Chart. To achieve what you want, you'll have to build three separate XY plots (e.g. by storing them in shift registers) and plot them into a single XY Graph. Please have a look at "XY Graph Data Types" in the Example Finder.
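As a rough text-based analogue of the idea (not the LabVIEW implementation itself), the following Python sketch splits one flat list of samples into separate plots whose x axis restarts at zero. The function name, the run lengths and the sample spacing `dt` are all assumptions for illustration.

```python
def build_xy_plots(samples, run_lengths, dt=1.0):
    """Split a flat list of samples into runs, each with its own x axis starting at 0.

    Returns a list of (xs, ys) tuples, one per run, which could then be
    drawn together in a single XY graph.
    """
    plots = []
    start = 0
    for length in run_lengths:
        ys = samples[start:start + length]
        xs = [i * dt for i in range(len(ys))]  # x restarts at zero for every run
        plots.append((xs, ys))
        start += length
    return plots


# Three hypothetical runs of two samples each, sampled every 0.5 s.
plots = build_xy_plots([1, 2, 3, 4, 5, 6], [2, 2, 2], dt=0.5)
for xs, ys in plots:
    print(xs, ys)
```

Because every run keeps its own x values, no data has to be deleted when a new run starts at zero.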

-

Not able to receive data using VISA Serial

LogMAN replied to Nishar Federer's topic in LabVIEW General

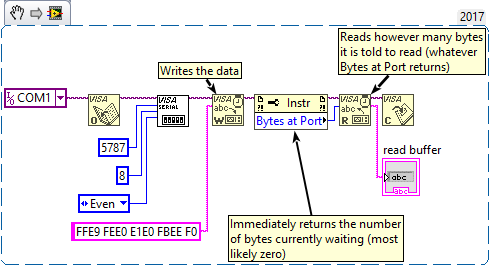

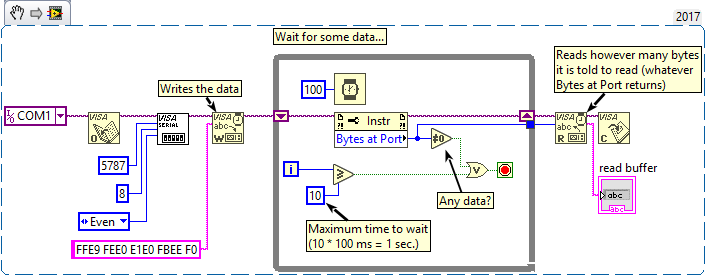

Data communication via serial port (or any communication for that matter) takes time. The receiver must receive, process and reply to the data sent by the sender. I suggest having a look at the examples in the Example Finder; they are well documented. Search for "Simple Serial" or just "Serial". Your VI sends data and immediately reads however many "Bytes at port" are available. There is no delay between sending and reading:

Here I modified your implementation such that it waits up to one second for data to arrive:

-
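For the serial reply above, the "wait up to one second for data" idea can be sketched in Python as a polling loop with a deadline. This is only an analogue of the modified VI, not a copy of it: `bytes_at_port` is a hypothetical callable standing in for whatever reports the number of waiting bytes, and the timeout and poll interval are assumed values.

```python
import time


def wait_for_bytes(bytes_at_port, timeout_s=1.0, poll_s=0.01):
    """Poll until bytes_at_port() reports data or the timeout expires.

    Returns the number of bytes available, or 0 if nothing arrived in time.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        available = bytes_at_port()
        if available > 0:
            return available
        if time.monotonic() >= deadline:
            return 0
        time.sleep(poll_s)  # give the device time to reply instead of spinning


# Hypothetical stand-in for a port that answers on the third poll.
state = {"polls": 0}


def fake_port():
    state["polls"] += 1
    return 5 if state["polls"] >= 3 else 0


print(wait_for_bytes(fake_port))
```

Reading only after this function returns a non-zero count avoids the original problem of reading immediately after the write, before the device had a chance to answer.

-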

This topic is very interesting. I would like to re-iterate the initial situation. Maybe we can find a clue to what your IT department is concerned about (you should really go into an open meeting with them to find out). All quotes below are direct copies from https://www.gnu.org/licenses/gpl-2.0.html#SEC1

It is true and at the same time it is not. You must share your source code if you made changes to the original work:

By using the program you don't modify it. However, the part "forming a work based on the Program" can be confusing, which is why the license explicitly states:

Can you identify sections of your work that are clearly not derived from the program? Can you reasonably consider these parts independent and separate works in themselves? Do I really have to ask these questions? You don't need to be a LabVIEW programmer to compare written text to graphical blocks and answer these questions. Your work is clearly not derived from the program and therefore the license does not apply to your work. If the IT department disagrees, let them point out the sections of LabVIEW code that are derived from SVN (please make a video of it and share footage later).

... the second one is a joke, right? Since when is Stack Exchange a trustworthy legal advisor for trained IT professionals? Even worse: Did they receive the links from a professional lawyer? => In that case do them a favor and get a new one.

The middle part about TFS is the most important part. I don't want to argue about SVN vs. Git, there are more than enough topics about that, but I wonder why they actually started searching for reasons to make you stop using SVN... To me it sounds like they are actively searching for reasons to make you use TFS and the legal part is just a pretense to do so. If that's the case you have several options to choose from. Here are two that I can think of right now:

- Accept your fate. SVN is well integrated into LabVIEW through the TSVN Toolkit, which is a big plus. Git, however, works very well too if you can live without status indicators in your project. We actually use SourceTree with Git to manage our projects and it works well for us.
- Present arguments against it. How much time do you spend using SVN during development? Set up a machine with TFS and let the IT department show you how to work with it efficiently. Then make some awesome changes (like renaming one of the core classes in your project that affects loads of VIs) and let them take care of committing the changes. Then ask them to merge the changes with some branch (please only do that if you know how to do it properly in SVN, otherwise you won't achieve anything). Do the same thing on your machine using SVN and compare the results.

This has already been answered. Licenses of third-party packages do apply, so read them carefully, especially when doing professional work. The sheer number of dependencies and licenses that come with them is one of the reasons we don't use many packages from the VIPM network, even though they could lead to faster development cycles. Read, accept and work according to the licenses or don't use the packages at all.

Sorry for the extensive post.

-

Adding values to Enum resets all instances to default

LogMAN replied to A Scottish moose's topic in LabVIEW General

Have you tried clearing the compiled object cache? Here is a post that sounds exactly like the problem you've got:

-

Adding values to Enum resets all instances to default

LogMAN replied to A Scottish moose's topic in LabVIEW General

Do you use strict typedefs by any chance? I had a similar issue a couple of years ago (using LV2011). It turned out that the reset was caused by using strict typedefs instead of regular ones. Changing from strict to regular typedefs solved the issue (after fixing the project one last time, of course).

-

Works fine for me. No issues during installation and all examples work flawlessly. Absolutely mind-blowing! Thanks so much for sharing.

My versions:

- VIPM 2017.0.0.2007
- LabVIEW 2017 17.0 (32-bit)

Edit: I should mention this is the only package I've got installed right now.

-

How dare they make a choice when they are asked to? You cannot approve the dialog automatically, as it is shown only after starting the application (or restarting the computer), at which point you don't have elevated access. However, you could add firewall rules as part of the installation procedure (see below).

None that I know of. It is also very annoying to get prompted over and over again after making a choice. Why even bother asking if there is no choice in the first place? Software that does that is malware in my opinion. Also, I can see users taking three courses of action:

- Allow access (stupid, since it could be malware; it also teaches your users bad habits)
- Decline and inform IT (IT will knock on your door, so go for it if you need to talk to them urgently)
- Uninstall (yeah, it might not be IT who is knocking next)

You can actually run command line instructions during installation in order to add firewall rules. Have a look at the instructions over at TechNet: https://technet.microsoft.com/en-us/library/dd734783(v=ws.10).aspx

Once you have figured out the necessary firewall rules (e.g. by checking a computer that accepted the rule), you can build the commands and execute them during installation. It should be possible to run the instructions using the post-install action (run application after installation), though I'm not sure if it will actually be run in elevated mode. Another option is to use a custom installer (we made our own using Inno Setup) and pack the LabVIEW installer inside the custom installer.
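As a sketch of what "building the commands" could look like, the Python snippet below assembles a `netsh advfirewall firewall add rule` command line for an inbound allow rule. The rule name and program path are hypothetical placeholders, and the rule your application really needs may differ (ports, profiles, direction), so treat this as a starting point rather than the exact rule to deploy. The script only builds the string; running it requires an elevated context.

```python
def build_firewall_rule_command(rule_name, program_path, direction="in"):
    """Build a netsh command line that allows traffic for one program.

    direction must be "in" or "out". The command is returned as a string
    and is NOT executed here.
    """
    if direction not in ("in", "out"):
        raise ValueError("direction must be 'in' or 'out'")
    return (
        "netsh advfirewall firewall add rule "
        f'name="{rule_name}" dir={direction} action=allow '
        f'program="{program_path}" enable=yes'
    )


# Hypothetical application name and install path.
command = build_firewall_rule_command(
    "My LabVIEW App", r"C:\Program Files\MyApp\MyApp.exe"
)
print(command)
```

The resulting line can be placed in whatever step of the installer runs with administrator rights, for example a batch file called by a custom installer.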

-

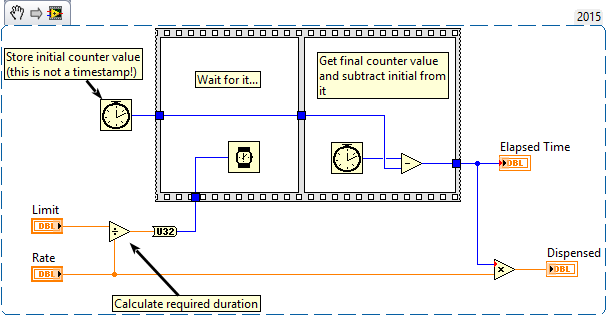

While your VI works, it takes a lot of CPU time by running the while loop every millisecond. In case you want it to wait for a desired amount of time, the snippet I posted earlier can be changed to handle any duration by adjusting the delay accordingly. You could also just calculate how much time you need in order to reach the specified limit:
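In text form, calculating the time up front instead of looping every millisecond might look like the Python sketch below. It assumes a constant dispense rate, and the units (ml, ml/s) and function names are hypothetical.

```python
import time


def seconds_until_limit(limit_ml, rate_ml_per_s, dispensed_ml=0.0):
    """Time still needed to reach the limit at a constant rate (never negative)."""
    if rate_ml_per_s <= 0:
        raise ValueError("rate must be positive")
    return max(0.0, (limit_ml - dispensed_ml) / rate_ml_per_s)


def wait_until_limit(limit_ml, rate_ml_per_s, sleep=time.sleep):
    """Sleep once for the computed duration instead of polling every millisecond."""
    delay = seconds_until_limit(limit_ml, rate_ml_per_s)
    sleep(delay)
    return delay


# Hypothetical values: 0.5 ml at 2 ml/s needs a single quarter-second wait.
print(wait_until_limit(0.5, 2.0))
```

A single wait keeps the CPU idle for the whole duration, at the cost of not being able to react before the wait ends.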

-

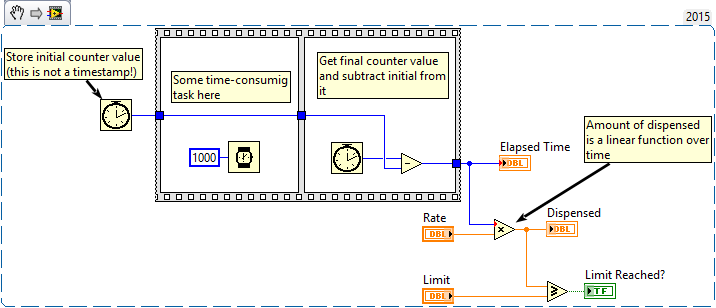

Welcome to LAVA! The short answer to this is: You cannot do time-constrained operations (in the millisecond range) using a regular PC. Other processes will "steal" CPU time, which can put your program on hold for several milliseconds (up to several hundred), so the timing is not predictable within your constraint.

However, do you really need to calculate a value every millisecond? According to your description the dispensed volume is a linear function of time. This means that as long as you know how many milliseconds have elapsed since a specific moment in time, you can calculate the total volume dispensed. The following VI is an example of how to do that:

This will give you a new value after 1 second. However, keep in mind that there is still a chance it could take more time, depending on how busy your computer is and which process gets priority.

One recommendation after reading your VIs: Please make use of controls / indicators before using local variables. It makes your code much more readable and removes unnecessary complexity.
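The same reasoning can be written down in a few lines of Python: instead of adding a small amount every millisecond, compute the total from the elapsed time whenever a value is needed. The class name, the rate and its units are assumptions for illustration, not taken from the posted VI.

```python
import time


class Dispenser:
    """Tracks dispensed volume as rate * elapsed time (linear model)."""

    def __init__(self, rate_per_ms, clock=time.monotonic):
        self._rate_per_ms = rate_per_ms  # volume units per millisecond (assumed constant)
        self._clock = clock              # injectable so the timing can be tested
        self._start = clock()

    def volume(self):
        """Total volume dispensed since the object was created."""
        elapsed_ms = (self._clock() - self._start) * 1000.0
        return self._rate_per_ms * elapsed_ms


dispenser = Dispenser(rate_per_ms=0.002)
time.sleep(0.1)
print(dispenser.volume())  # roughly 0.2, however late the OS wakes us up
```

If the operating system delays the program, the next call simply reports a larger elapsed time, so the computed volume stays correct even though the update itself arrives late.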