Everything posted by LogMAN

-

Destruct object from an Array after Cleanup

LogMAN replied to kull3rk3ks's topic in Object-Oriented Programming

The way your VI is implemented is the correct way to dispose of objects (or any non-reference type in LabVIEW). The wire has "loose" ends (after the last VI), so that particular copy of the object is removed from memory automatically. Only reference types must be closed explicitly (like the DVR). So your final VI just has to close the references held within that object to prevent reference leaks.

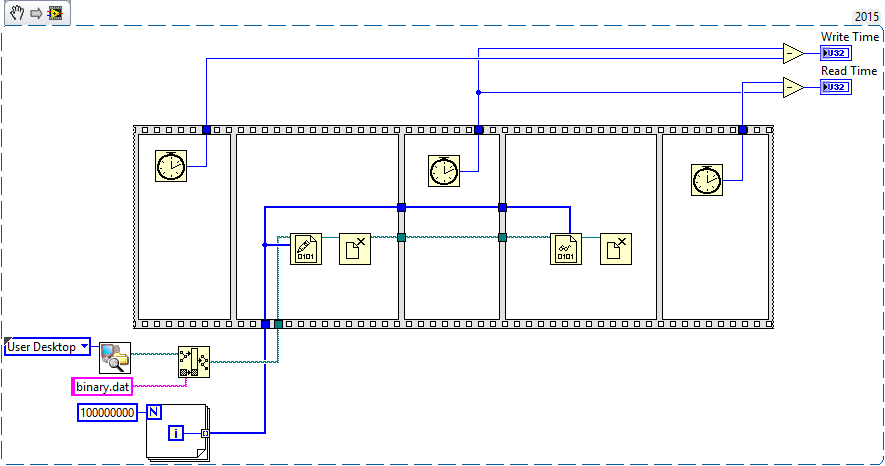

Okay, this is getting a bit off-topic, as the discussion is about a specific problem that is not necessarily SQLite-related. So I guess this should be moved to a separate thread.

drjdpowell already mentioned that SQLite is not the best solution if your data is not structured. TDMS, on the other hand, is meant for graph data, but it creates index files in the process and stores data in a format readable by other applications (like Excel). That is what slows down your write/read speed.

As far as I understand, you want to store an exact copy of what you have in memory to disk in order to retrieve it at a later time. The most efficient way to do that is with binary files. Binary files have no overhead. They don't index your data as TDMS files do, and they don't allow you to filter for specific items like an (SQLite) database. In fact, the write/read speed is only limited by your hard drive, a limit that cannot be overcome. It works with any datatype and is similar to a BLOB. The only thing to keep in mind is that binary files are useless if you don't know the exact datatype (same as BLOBs). But I guess for your project that is not an issue (you can always build a converter program if necessary).

So I created a little test VI to show the performance of binary files:

This VI creates a 400 MB file on the user's desktop. It takes about 2 seconds to write all data to disk and 250 ms to read it back into memory. If I reduce the file size to 4 MB, it takes 12 ms to write and 2 ms to read. Notice that the VI takes more time if the file already exists on disk (as it has to be deleted first). Also notice: I'm working with an SSD, so good old HDDs will obviously take more time.
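For readers without LabVIEW at hand, the same round trip can be sketched in Python. This is not the test VI from the post, only a rough equivalent under assumptions: the function name `benchmark_binary_file`, the file name and the use of 8-byte floats are made up for illustration, and the write timing does not force a flush to the physical disk, so the numbers will not match the ones quoted above.

```python
import os
import tempfile
import time
from array import array


def benchmark_binary_file(path, n_values):
    """Write n_values doubles as raw bytes, read them back, and time both steps."""
    data = array("d", range(n_values))  # 8 bytes per value, no header, no index

    start = time.perf_counter()
    with open(path, "wb") as f:
        data.tofile(f)  # raw memory dump, comparable to a BLOB
    write_s = time.perf_counter() - start

    restored = array("d")
    start = time.perf_counter()
    with open(path, "rb") as f:
        # The reader must already know the datatype and the element count.
        restored.fromfile(f, n_values)
    read_s = time.perf_counter() - start

    return data == restored, os.path.getsize(path), write_s, read_s


if __name__ == "__main__":
    target = os.path.join(tempfile.gettempdir(), "binary_benchmark.bin")
    same, size, write_s, read_s = benchmark_binary_file(target, 500_000)  # about 4 MB
    print(f"{size} bytes, identical={same}, write={write_s:.4f}s, read={read_s:.4f}s")
    os.remove(target)
```

The file holds nothing but the values themselves, which is why reading it back requires knowing the exact datatype, as noted in the post.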

-

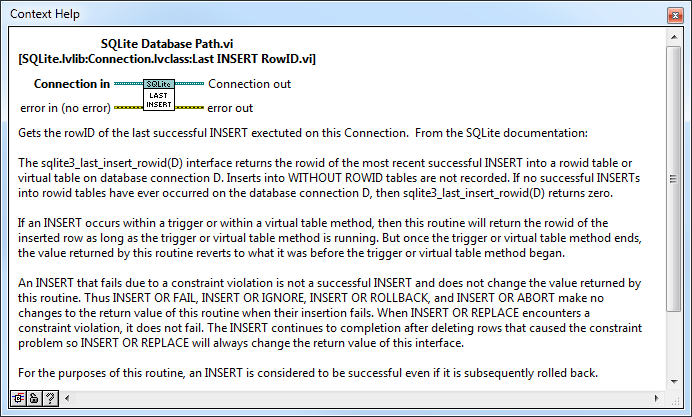

@drjdpowell: I installed the latest version of your library (1.6.2). There is a new VI called "SQLite Database Path" which is missing the output terminal for "Last INSERT RowID":

-

installation error LabVIEW 2014 64-bit on windows 10

LogMAN replied to kuroko's topic in LabVIEW General

I guess you mean that the other way around. Thank you for the great links, I didn't know there was such a detailed list of supported versions specifically for Windows 10. This will save a lot of time and effort for anyone planning to take that step. Also, one would think they would keep the shop up to date in order to sell the product...

installation error LabVIEW 2014 64-bit on windows 10

LogMAN replied to kuroko's topic in LabVIEW General

Welcome to LabVIEW and welcome to the forums, hope you enjoy your stay.

LabVIEW does not yet officially support Windows 10, see LabVIEW Operating System Support. That being said, I've successfully installed LV2011 and LV2015 (x64) on Windows 10 and had no problems with the installer (don't know about LV2014 though).

As far as I can tell from your screenshots, LabVIEW is already installed on your system (the installer does not require any more disk space). Are you sure that's not the case? Did you install LabVIEW before upgrading to Windows 10? => Check if there is a folder "C:\Program Files\National Instruments"

Let me already give you answers to two possible scenarios:

If you can find the "National Instruments" folder under "Program Files", you have most likely upgraded from a previous Windows version without resetting your computer. That might work for many applications, however in my experience this causes more trouble than resetting your computer and installing everything from scratch. Check the Recovery options in Windows 10 to learn how to do that (use the 'Remove everything' option) and make sure to create backups of your important files first. This will of course require you to install and configure all of your applications again (including all settings you've made in Windows)! Don't do that if you don't know how!

It might be that LV2014 specifically has issues on Windows 10. Your license key will work with different versions of LabVIEW, however (it should work with every version from 8.0 upwards). Try downloading the latest version from their site (search the web, or go to their FTP servers). Try installing LabVIEW 2015 (x64). Here is the direct link: ftp://ftp.ni.com/evaluation/labview/ekit/other/downloader/2015LV-64WinEng.exe

Hope this works for you.

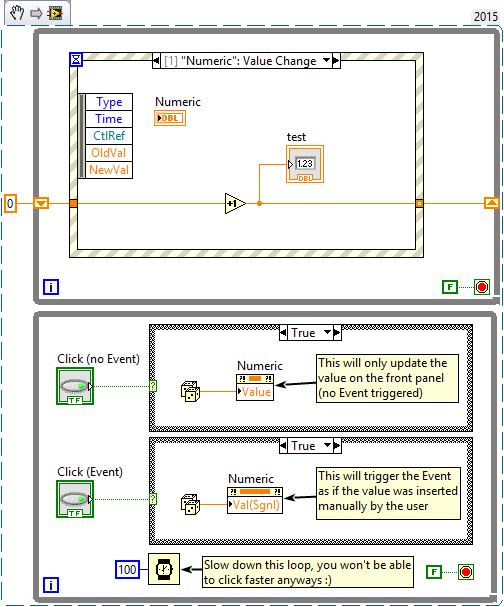

This is only true for the loop without the Event structure. The Event structure will actually wait until either an event or the Timeout occurs. By default the Timeout is -1, causing the Event structure to wait indefinitely and thus requiring zero CPU. You can change that by wiring a Timeout to the Event structure, which allows you to execute code in the Timeout case on a regular basis. The loop without the Event structure, on the other hand, will always loop, even though, as you correctly stated, the loop can be slowed down using the Wait (ms) or Wait Until Next ms Multiple functions.

Maybe the following VI snippet helps with understanding what ScottJordan already explained (this is based on your VI, however I added another Click button to trigger the Value (Sgnl) property):

Press the Click (no Event) button to update the value of Numeric (Test will not increase).

Press the Click (Event) button to trigger the Event (Test will increase).

Find attached the VI in LV2013 (snippet is for LV2015): Value vs. Value (Sgnl) LV2013.vi
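The difference between the two properties can also be mimicked in text form. The Python sketch below is only an analogy, not LabVIEW code: the `Numeric` class, its two setter methods and the `event_loop` function are hypothetical stand-ins for the control, the Value and Value (Sgnl) properties, and the loop containing the Event structure.

```python
import queue
import threading


class Numeric:
    """Hypothetical stand-in for a front-panel control."""

    def __init__(self):
        self.value = 0
        self.events = queue.Queue()

    def set_value(self, new_value):
        # Like the Value property: the data changes, no event is generated.
        self.value = new_value

    def set_value_signaling(self, new_value):
        # Like Value (Sgnl): the data changes and a Value Change event is queued.
        self.value = new_value
        self.events.put(("value change", new_value))


def event_loop(control, counter, timeout_s=None):
    """Sleeps inside get() until an event arrives; timeout_s=None waits forever."""
    while True:
        try:
            name, _ = control.events.get(timeout=timeout_s)
        except queue.Empty:
            counter["timeouts"] += 1  # plays the role of the Timeout case
            continue
        if name == "stop":
            return
        counter["test"] += 1  # plays the role of the Value Change case


def demo():
    control = Numeric()
    counter = {"test": 0, "timeouts": 0}
    worker = threading.Thread(target=event_loop, args=(control, counter))
    worker.start()
    control.set_value(1)            # Click (no Event): Test stays at 0
    control.set_value_signaling(2)  # Click (Event): Test becomes 1
    control.events.put(("stop", None))
    worker.join()
    return control.value, counter["test"]
```

Calling `demo()` changes the value twice but counts only one event, and the worker thread spends its idle time blocked in `get()` instead of spinning.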

-

I haven't seen such a tool around. However, you should give the LabVIEW Link Browser a try. It's a tool to visualize the dependencies between all SubVIs of a selected VI. I used it a couple of months ago to demonstrate the differences between those linking issues, so maybe it works for you too.

Take a look at this post (scroll down to the picture; don't forget to read the post too): https://lavag.org/topic/18654-should-i-abandon-lvlib-libraries/?p=112165

Here is an online example: http://resources.chrislarson.me/cla/#/

It's actually an open-source project on GitHub: https://github.com/wirebirdlabs/links, so just clone it and play around a bit. Maybe someone knows a way to make it visualize libraries only.

-

LabVIEW and side libraries (NI-XNET, NI-VISA, etc.)

LogMAN replied to eberaud's topic in LabVIEW General

We just moved from LV2011 to LV2015 and basically had the same issues, even though we don't have a separate build PC. We solved this by using a virtual machine for LV2011 and working with LV2015 on the host, so there is no need for a second machine. Maybe that works for you too.

There is to my knowledge no way to retain the palettes when changing the source folder. It's the way VIPM has been designed. However, there are ways to get what you want:

If you always place the VIPB file in the root folder of the sources, all files are linked with relative paths, so you only have to copy the VIPB file to the new root folder and change it where necessary. As the source path is relative, the palettes will persist, or rather you don't have to change the source folder in the first place.

Another way is to manually edit the VIPB file. It's basically an XML file and quite easy to read. Search for "Library_Source_Folder" and insert the new path. Or, if you just want to replicate the palettes, copy the entire "Palette_Sets" subtree.
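The manual edit could also be scripted. The following Python sketch rests on assumptions: it treats "Library_Source_Folder" as an XML element whose text is the path, and the sample document and the function name `set_source_folder` are made up for illustration and do not reflect the real VIPB layout. Check an actual VIPB file before relying on it, and keep a backup.

```python
import xml.etree.ElementTree as ET

# Made-up miniature document; a real VIPB file contains far more than this.
SAMPLE = (
    "<Package><Settings>"
    "<Library_Source_Folder>old/src</Library_Source_Folder>"
    "</Settings></Package>"
)


def set_source_folder(vipb_xml, new_path):
    """Return the XML text with every Library_Source_Folder element set to new_path."""
    root = ET.fromstring(vipb_xml)
    for node in root.iter("Library_Source_Folder"):
        node.text = new_path
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(set_source_folder(SAMPLE, "new/src"))
```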

-

System directory hidden from LabVIEW (Not really a LabVIEW problem)

LogMAN replied to Neil Pate's topic in LabVIEW General

Absolutely right, however you can disable that behavior for the calling thread. To do so, call Wow64DisableWow64FsRedirection first and Wow64RevertWow64FsRedirection when you are done. Make sure to call both functions in the same thread (I have used the UI thread in the past)! In between those calls you can access the System32 directory normally.

Very important: All calls to the System32 directory must be executed in the same thread as the DLL calls!

EDIT: You might be interested in reading this explanation of the File System Redirector: File System Redirector
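The call order can be sketched outside LabVIEW as well. The Python snippet below uses `ctypes` and is only an illustration: the context manager name `fs_redirection_disabled` is made up, and the two kernel32 functions only have an effect in a 32-bit process on 64-bit Windows. Elsewhere the disable call fails (or, off Windows, is skipped) and the block runs with redirection untouched.

```python
import ctypes
import sys
from contextlib import contextmanager


@contextmanager
def fs_redirection_disabled():
    """Disable WOW64 file system redirection for the calling thread only."""
    if sys.platform != "win32":
        yield False  # nothing to disable on other platforms
        return
    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)
    old_value = ctypes.c_void_p()
    disabled = bool(
        kernel32.Wow64DisableWow64FsRedirection(ctypes.byref(old_value))
    )
    try:
        yield disabled
    finally:
        if disabled:
            # Must run on the same thread that disabled the redirection.
            kernel32.Wow64RevertWow64FsRedirection(old_value)


if __name__ == "__main__":
    with fs_redirection_disabled() as active:
        # File access in here sees the real System32 folder when active is True.
        print("redirection disabled:", active)
```

Because both calls sit in one `with` block, they necessarily run on the same thread, which is the constraint stressed in the post.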

are Boolean expression stopped like they would be in C ?

LogMAN replied to ArjanWiskerke's topic in LabVIEW General

You are right! I've just tried it. Initializing an array of Boolean vs. an array of U8 allocates the exact same amount of memory. I did not know that! I honestly thought LabVIEW would auto-magically merge bits into bytes... Reality strikes again.

So I revoke my last statement and state the opposite: The primitive solution won't work on multiple bits simultaneously. We could do this ourselves by manually packing Booleans into bytes and using the primitives on bytes instead of Booleans. Not sure if there is anything gained from it (in terms of computational performance), and I think it's beyond the scope of this topic, so I'll leave it at that.
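What manually packing Booleans and operating on the packed words could look like is sketched below in Python. It is an illustration under assumptions, not LabVIEW code: the helper names are made up, the expression (A OR B) AND C is taken from the discussion in this topic, and Python integers are arbitrarily wide, so this shows the idea rather than any machine-level gain.

```python
def pack_bits(flags):
    """Pack a list of Booleans into one integer, element i becoming bit i."""
    word = 0
    for i, flag in enumerate(flags):
        if flag:
            word |= 1 << i
    return word


def unpack_bits(word, count):
    """Inverse of pack_bits for the lowest `count` bits."""
    return [bool((word >> i) & 1) for i in range(count)]


def or_then_and(a, b, c):
    """Compute (a OR b) AND c for all elements with two bitwise operations."""
    result = (pack_bits(a) | pack_bits(b)) & pack_bits(c)
    return unpack_bits(result, len(a))
```

The packing and unpacking loops cost time themselves, so, as the post says, it is unclear whether anything would be gained overall.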

are Boolean expression stopped like they would be in C ?

LogMAN replied to ArjanWiskerke's topic in LabVIEW General

Try to estimate the number of CPU cycles involved. Your code has two issues: 1) The bits must be put together to form an array (they originate from three different locations in memory). 2) You have an array of 3 bits, so that makes 1 byte (the least amount of memory that can be allocated). The numeric representation is a U32 (4 bytes), so it won't fit without additional work. The system actually has to allocate and initialize additional memory. After that there is a case structure with n cases. The CPU has to compare each case one by one until it finds a match.

Now the primitive solution: First the CPU gets a copy of one bit from each of the first and second arrays and performs the OR operation (1 cycle). The result is kept in the CPU cache and used for the following AND operation (another cycle). No additional memory is required. The primitive solution could actually work on 32 or even 64 bits simultaneously (depending on the bitness of your machine and the CPU instructions used), whereas the numeric solution must be done one by one.

Hope this makes things a bit clearer.

are Boolean expression stopped like they would be in C ?

LogMAN replied to ArjanWiskerke's topic in LabVIEW General

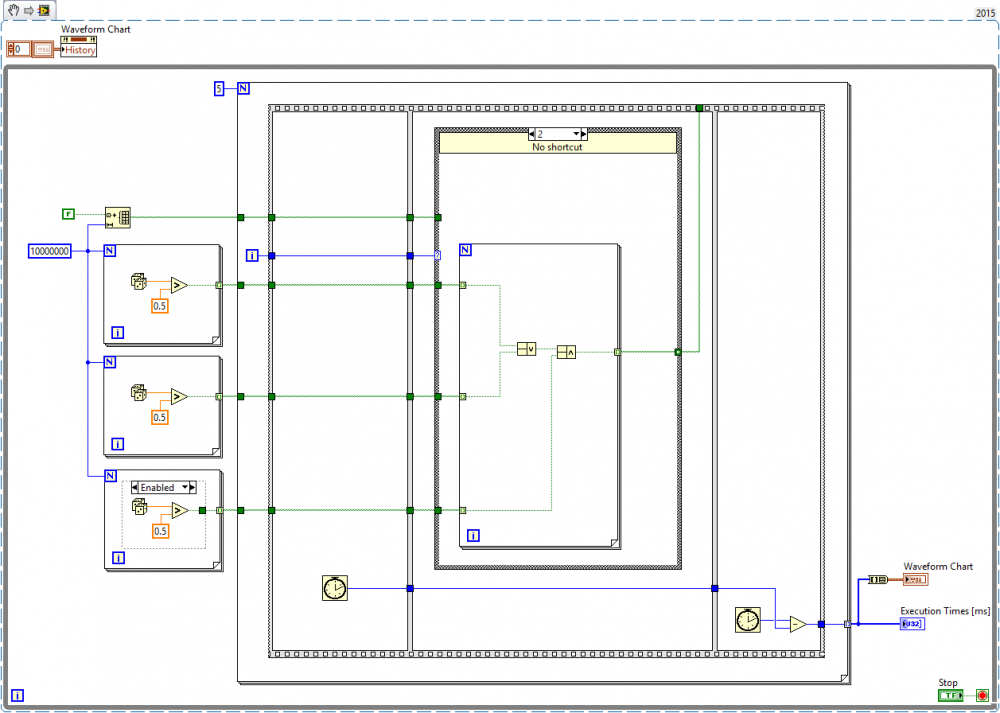

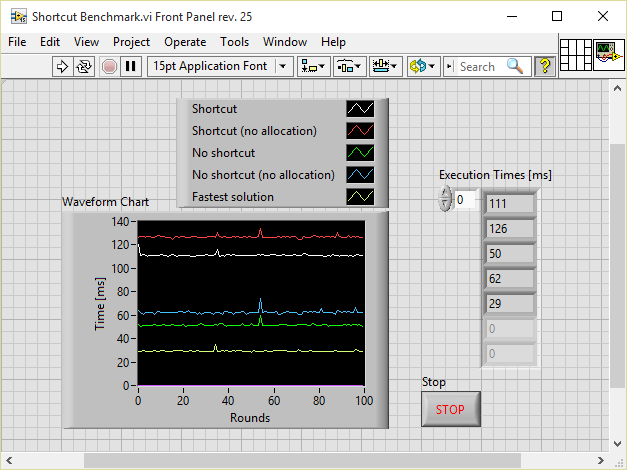

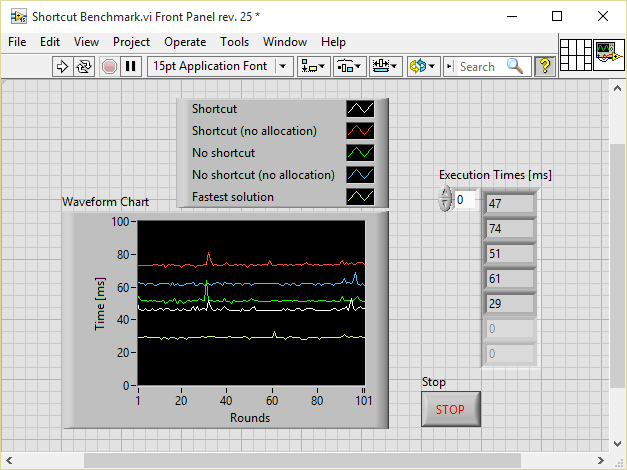

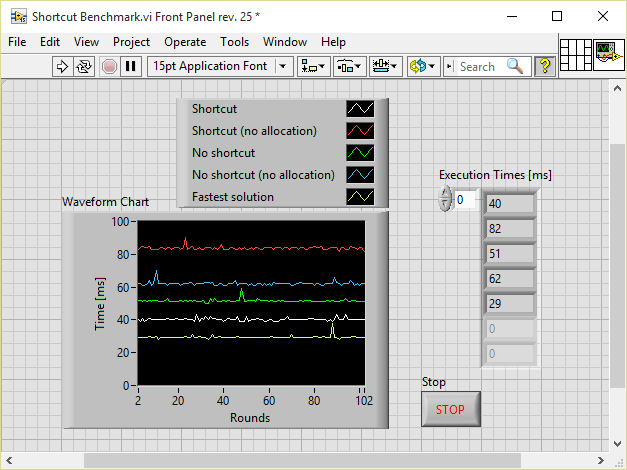

I get very similar results to the ones CraigC has shown. The numeric conversion really is not a good option here, neither for performance nor for memory.

In order to find an answer to the initial question, I have performed my own tests based on your earlier benchmarks. My benchmark includes several cases with different ways of solving the same issue. Each case uses the same values, and the VI runs in a loop to get some long-term timings. The AND condition can be constant TRUE, constant FALSE or random values. The last case performs the same operation as all the others, but this time without a for-loop, so basically this is totally LabVIEW-optimized. For readability, a chart shows the timings in comparison. Oh yeah, and since we use for-loops, there are also cases where the array is initialized beforehand and values are replaced in each iteration to prevent memory allocations (who knows, it might save time).

Here is the snippet (running in LV2015, attached VI is saved for LV2011 though):

Please tell me if you find anything wrong with how it is implemented. Benchmarking is no easy task, unfortunately.

Now here are some results based on that VI. First with the AND being connected to RANDOM values:

Next the AND is connected to TRUE values:

And finally the AND is connected to FALSE:

So what do we get from that?

Basically, initializing a Boolean array and replacing values in a for-loop did not work very well (all the '(no allocation)' times). My guess is that the compiler optimization is just better than me: since the number of iterations is pre-determined, LabVIEW can optimize the code much better.

All timings with the shortcut are worse than the ones without. So using a shortcut does not improve performance, in fact it's just the opposite. Results might change if the Boolean operations are far more complex, however in this case LabVIEW is doing its job much better than we do with our 'shortcut'.

The last case without a for-loop ('Fastest solution') gives a clear answer. LabVIEW will optimize the code to work on such an amount of data. My guess is that the operations also use multiple threads internally, so maybe we get different results with parallel for-loops enabled in the other cases. And most likely there is much more going on.

The most interesting point is the comparison of the 'Fastest solution' timings across the three runs. As you can see, there is no real difference between any of the three cases. This could either mean my benchmark is wrong, or LabVIEW has no shortcut optimizations like C.

What do you think?

Shortcut Benchmark LV2011.vi
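The shape of that comparison (eager evaluation against a hand-written shortcut, each timed over the same inputs) can be sketched in Python. This is not a port of the attached VI: the function names are made up, the expression (A OR B) AND C is assumed from the earlier posts in this topic, and timings from an interpreter say nothing about what LabVIEW's compiler does.

```python
import random
import time


def eager(a, b, c):
    """Always evaluate both operations, like wiring OR into AND."""
    return [(x | y) & z for x, y, z in zip(a, b, c)]


def shortcut(a, b, c):
    """Skip the OR whenever the AND input is already False."""
    return [z and (x or y) for x, y, z in zip(a, b, c)]


def time_it(func, *args, repeats=5):
    """Return the best wall-clock time of several runs, in seconds."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    rng = random.Random(0)
    n = 100_000
    a = [rng.random() < 0.5 for _ in range(n)]
    b = [rng.random() < 0.5 for _ in range(n)]
    for label, c in (("RANDOM", [rng.random() < 0.5 for _ in range(n)]),
                     ("TRUE", [True] * n),
                     ("FALSE", [False] * n)):
        print(label, time_it(eager, a, b, c), time_it(shortcut, a, b, c))
```

Both functions return identical results for Boolean inputs, so any difference in the printed numbers comes from the evaluation strategy alone.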

Have you considered VPN tunnels? If you set up the cRIO/PXI to only accept communications from the local address space (I don't know if that is possible), the only way to become part of the local address space is to connect via VPN (or if the hacker is a survival-trained helicopter pilot with faked papers / an employee working on the rig...). Of course the VPN tunnel is the critical part here, so it should be set up with great care and use secure dongle/token systems with randomly generated tokens. The connection itself, however, is secure and any communication is encrypted. EDIT: in theory (I'm no expert in this)

-

In most cases our customers have their own security measures in place, or the computer is not even connected to a network. All systems just have the standard Windows installation, or the computer is set up by the customer in advance. We worry more about the person in front of the computer than about the one outside a multi-layer firewall system.

We don't. If the data is corrupted, the application fails and goes into error mode. It then requires administrator privileges to continue working. This often means that one of my colleagues or I have to find a solution immediately. In the meantime the system will work in emergency mode without network communication. This of course only applies if the customer wishes so; in other cases the application will reset itself and start over.

None in particular from the outside world. When it comes to the operators, they attempt to manipulate a system in order to blame it for productivity loss.

Passwords. In the past we actually stored passwords unencrypted in INI files. You can guess what happened.

No. Never have and never will. I wouldn't even give them encrypted data. Data I don't own will never be uploaded anywhere deliberately; that's thankfully a company policy, which is why we have our own servers providing encrypted cloud support (to send or receive sensitive data through secure connections).

-

The most irritating part of people saying "You should learn a real programming language like <insert language here>" is that none of them (at least in my experience) ever worked with LabVIEW for more than a few hours. Some even say stuff like that after seeing code in LabVIEW for the very first time.

Now to your question: I guess it does not hurt to understand the basics of textual languages. With that knowledge you can even give advice to people spouting stuff like the above (I like how they hate it). A few years ago I watched the video series from Stanford on YouTube (Programming Paradigms and Programming Methodology), which are really great and gave good insight into how a computer actually works. I recommend looking into them.

I don't program in C though (by far too slow for me); instead I've learned C#, which is very useful when testing .NET components (or even writing one to use in LabVIEW). In the end my programming in LabVIEW did not change much. Just the opposite: LabVIEW changed the way I write programs in C#. Maybe all the textual programmers should consider learning 'G' instead.

-

I work very much the same way you do, but luckily have had no problems so far. In my case the keyboard is rather far away. My entire forearm is on the table such that the elbow is at the edge of the table, and the keyboard is turned to the right (about 20°) to make it easier for the left hand. For the pressure on your wrists, a flat keyboard might help (with a large wrist rest tilting down). My own is a terra keyboard 5500 (pretty old but extremely well made).

-

messenger library Instructional videos on YouTube

LogMAN replied to drjdpowell's topic in Application Design & Architecture

LabVIEW (or rather 'G') is entirely flow-based, so this makes sense.

This answers my question to some extent. I actually like to define boundaries between things as clearly as possible in order to make my sources accessible to the other developers in my team. So as an example: If my Actor acquires some data and provides it to the outside world, it might have its own visual representation and maybe you can control it (so basically MVC in one Actor). On the other hand, I require multiple visual representations to fit the data on different screen resolutions (pure VC units). There are boundaries I have to define. I can either have a 'default' resolution in my core Actor with additional VC units, or the core Actor is never shown and all resolutions come from separate Actors as VC units. I guess I'll have to play around and think about this for a bit.

Thanks for the hint, I'll look into them.

messenger library Instructional videos on YouTube

LogMAN replied to drjdpowell's topic in Application Design & Architecture

Is it best to ask questions in this thread? Hard to tell for me, however YouTube is just not the place for it. For now this is the best place, so here I come:

@drjdpowell: In your last video you referred to MVC (or CMV as you called it). As I understand it, an Actor can actually be any combination of Model, View and Controller. In text-based programming languages (like C# for instance) it is common to separate them entirely into separate classes (the best case would be zero coupling). In LabVIEW I think it is okay to separate the Model, but keep the View and Controller close together, as they are bound by design (FP/BD). This applies unless the View and/or Controller is some external hardware or something similar. What is your recommendation on this? How did you combine them in your actual projects?

@everyone: Please feel free to give your thoughts on this too.

LabVIEW RS232 Serial Read Write Application Com Port Freeze Up / Stuck

LogMAN replied to Tim888's topic in LabVIEW General

RS232 is a very old standard, so even the cheapest devices are quite reliable. For long-term acquisitions, however, USB is a bad choice. Rather go for on-board or PCI(e). If you really have to go with USB, try one of the NI interfaces. They are well made but quite expensive: http://sine.ni.com/nips/cds/view/p/lang/en/nid/12844

In your case, however, I doubt any of the USB devices will solve the issue. It sounds to me like the issue is related to the USB connection rather than the device itself. ensegre and hooovahh already mentioned some very likely causes. Such issues might also occur sometimes when connecting another USB device to the same computer.

Try to run the application on a computer with on-board RS232. The issue will very likely be solved (if you actually use the on-board RS232, that is).

LabVIEW RS232 Serial Read Write Application Com Port Freeze Up / Stuck

LogMAN replied to Tim888's topic in LabVIEW General

What exactly do you mean by getting stuck/freezing? Do you have any error message you could post? Also, check if there is any other application or service running that could access the serial port. The MS printing service, for example, screwed me over a couple of times. Behavior might also differ depending on your serial device (USB-to-serial converter, on-board controller or PCI(e) card).

It has been working since at least 8.6.1.

-

Hi Neil, please use this link: http://download.ni.com/evaluation/labview/ekit/other/downloader/ You'll find all downloaders/installers there. The softlib is no longer available. EDIT: Almost forgot, this also works with FTP: ftp://ftp.ni.com/evaluation/labview/ekit/other/downloader/

-

I still think it is justified to wait for SPs and do tests before switching, however the last two releases were focused on fixing bugs and improving stability (2014 + 2015 afaik), with fewer "big" features like the Actor Framework in 2013. Compared to 2011, 2015 seems to be much more stable, at least on my machine (working with classes is no longer a reason to commit suicide, even with properties). Being in the 2011 club too, we'll finally move to 2015 by the end of this year (and upgrade to SP1 once it is available). The performance improvements and, as Yair pointed out, the new edit-time features are worth it.