Everything posted by drjdpowell

-

[CR] LabVIEW Task Manager (LVTM)

drjdpowell replied to TimVargo's topic in Code Repository (Certified)

Note: because of BitBucket dropping support for Mercurial (and their intention to delete a Hg repos in May!?!), I have converted the Hg repo to a Git one, still on Bitbucket under the the LAVAg Team: https://bitbucket.org/lavag/labview-task-manager/commits/ -

Bitbucket sunsetting support for Mercurial

drjdpowell replied to Francois Normandin's topic in Source Code Control

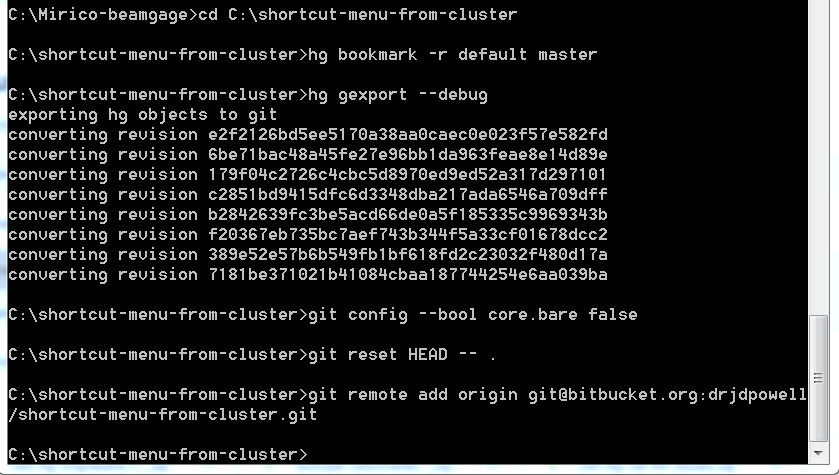

Since I have just painfully converted many years of Hg repos to Git, I thought I'd make a quick note here. I used the procedure described here: https://helgeklein.com/blog/2015/06/converting-mercurial-repositories-to-git-on-windows/ Here is a screenshot of the Windows cmd window: After this one needs to push to the new git repo on Bitbucket (though you could use another service). I could not do this from the command line, but I could push after opening the repo in SourceTree (which I had previously set up to use the right private key to talk to Bitbucket). Personally, I stayed with Bitbucket, partly because I could transfer my Issue Tracker history. It involved Exporting the Issues (under Settings), and then Importing to the new git repo. It was quite painless, except for the tedium of converting many many repos. -

LabVIEW NXG Feature Parity to LabVIEW "Classic"

drjdpowell replied to lvb's topic in LabVIEW General

Does NXG have shortcut menus on controls yet? Can't really tell from Google because NXG seems to follow a "let's rename everything" obscuration strategy. I see it seems to have subpanels now (renamed "panel containers" to prevent new users googling the past expert knowledge on how to best leverage subpanels). And are those menus modern ones with, say, icons and tip strips? That was something I was looking forward to with NXG: modern menus.

-

Multiple EXE instances crashing at the same time?

drjdpowell replied to drjdpowell's topic in LabVIEW General

Hi, thanks. It crashes when running only one instance, and on multiple computers. I only ran 5 at once to increase the chance of the crash happening, as it is random and may not happen for many days. I was surprised that they all failed together (the "Application Error" messages in the Windows Event Log were within seconds of each other). This more or less rules out something in the simulated data, like a NaN, triggering a bug, as the instances are all independent. Thanks for the idea of running instances compiled under different LabVIEW versions; I may try that. I will also ask the DLL maker about file access.

-

Hello, I have a complex application which calls a proprietary dll from another company. Recently, we have been having repeated crashes (silent, no error dialog except "Application Errors" in the Windows Event Log). As a test, I ran 5 copies of the application (using AllowMultipleInstances=True in the EXE's ini file). All four copies calling the dll crashed within a couple of seconds of each other (crashes normally happen only on a timescale of hours to days), while a fifth, not calling that dll, did not. I am confused as to how all four copies failed at the same time. The dll just does a calculation (complex, but it does not access anything external), and the copies were running independently on simulated random data. Anyone have a similar experience? All copies are presumably running in the same LabVIEW runtime engine; could there be any relation to the Runtime Engine?

-

Go get VIPM from JKI directly. I have not tested the library on Linux (other than Linux RT), but it should be able to work, if one has a copy of the sqlite3.so shared library installed in the right place. Does anyone else use this library on Linux?

-

First thing is that SQLite has no timestamp data type. You are responsible for deciding what to use as a time representation. SQLite includes some functions that help you convert to a few possibilities, including Julian day, but in this library I chose to use the standard LabVIEW dbl-timestamp. The "get column as timestamp" function will attempt to convert to a time based on the actual datatype: Text: ISO 8601; Integer: Unix time in seconds; Float: LabVIEW dbl-timestamp; Binary: LabVIEW full timestamp. The first two match possible forms supported by the time functions in SQLite, but the latter two are LabVIEW ones. You are free to pick any format, and can easily write your own subVIs to convert. You can even make a child class of "SQL Statement" that adds "Bind Timestamp (Julian Day)" and "Get Column (Julian Day)" if you like.
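For anyone reading the database outside LabVIEW, the type-based conversion described above can be sketched in Python roughly like this. It is only an illustration, not the library's code: the function name is made up, text without a UTC offset is assumed to be UTC, and the 16-byte big-endian layout for the binary case (signed 64-bit seconds plus unsigned 64-bit fraction, counted from the LabVIEW epoch of 1904-01-01 UTC) is an assumption about how the full timestamp is stored.

```python
import struct
from datetime import datetime, timedelta, timezone

# LabVIEW timestamps count seconds from 1904-01-01 00:00:00 UTC.
LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)


def column_to_datetime(value):
    """Convert a value read from SQLite to a UTC datetime, based on its type.

    Hypothetical helper: mirrors the Text/Integer/Float/Binary rule described
    in the post, but is not the library's implementation.
    """
    if isinstance(value, str):
        # Text: ISO 8601. Assumption: text without an offset is UTC.
        parsed = datetime.fromisoformat(value)
        return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)
    if isinstance(value, int):
        # Integer: Unix time in seconds.
        return datetime.fromtimestamp(value, tz=timezone.utc)
    if isinstance(value, float):
        # Float: LabVIEW dbl-timestamp (seconds since the 1904 epoch).
        return LABVIEW_EPOCH + timedelta(seconds=value)
    if isinstance(value, bytes) and len(value) == 16:
        # Binary: assumed big-endian int64 seconds + uint64 fraction of a second.
        seconds, fraction = struct.unpack(">qQ", value)
        return LABVIEW_EPOCH + timedelta(seconds=seconds + fraction / 2**64)
    raise TypeError(f"cannot interpret {value!r} as a timestamp")
```

Python's `sqlite3` module returns `str`, `int`, `float` or `bytes` according to the stored type, so the result of a `SELECT` can be passed straight to a function like this.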

-

The standard JSON specification says that objects are a collection of named elements with order unimportant, so any JSON library should ignore order and match on names. How to handle missing elements is a design choice, though, and I've gone with "use supplied default" rather than "throw error", for a number of reasons. But as LogMAN shows, there are ways to do checking yourself.
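To illustrate the two ideas in text form, here is a small Python sketch (not the LabVIEW library's code; both function names are invented for the example). It matches on names regardless of order, falls back to the supplied default for anything missing, and shows one way a caller could do its own check for missing elements.

```python
import json


def from_json_with_defaults(text, defaults):
    """Match JSON object elements by name; use the default when a name is absent."""
    parsed = json.loads(text)
    return {name: parsed.get(name, default) for name, default in defaults.items()}


def missing_names(text, defaults):
    """Caller-side check: list the expected names that the JSON does not supply."""
    parsed = json.loads(text)
    return [name for name in defaults if name not in parsed]


defaults = {"gain": 1.0, "channel": "A"}
settings = from_json_with_defaults('{"channel": "B"}', defaults)
```

Here `settings` keeps the default `gain` and takes `channel` from the JSON, and `missing_names` on the same text would report `gain` for a caller that prefers to treat that as an error.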

-

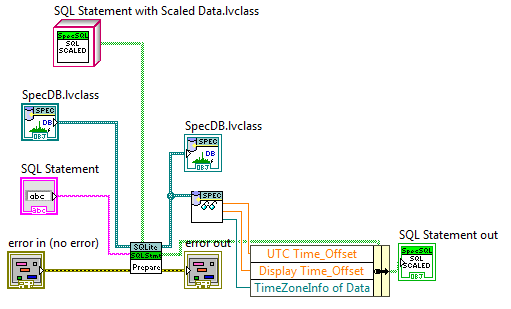

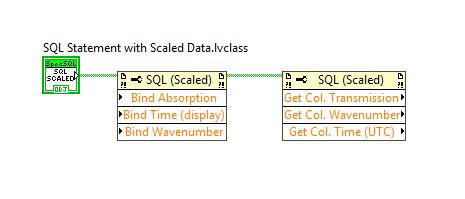

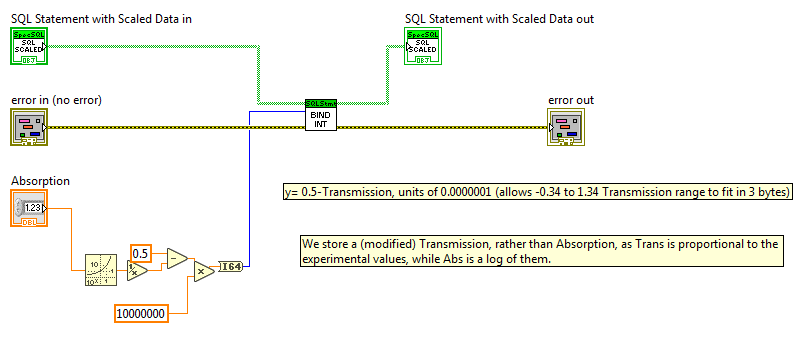

I have a project where Absorption Spectra are saved in an SQLite database. Data is stored in a custom format so as to take up a minimum of space. I have a child class of "Connection" that adds a lot of application-spec code, and a matching child of "SQL Statement. Here is the VI that does a Prepare: Here, multiple bits of information are passes into the child class, about the Timezone of the data and the offset time (times are stored as ms relative to an offset, to save space). The "SQL Statement with Scaled Data" child class has several additional Bind and Get Column vis, which have the scaling in then, allowing the calling code to easily work with different quantities (Absorption or Transmission, Local or UTC Timestamps): Here is the Bind Absorption method (the db actual contains a specially chosen 3-byte format that has to be converted to Absorption): So basically, this is extending the Connection and SQL Statement classes to be more capably for a specific application. Note that one does not have to do this, as one can use regular subVIs instead.
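For readers who do not use LabVIEW, the same idea can be sketched with Python's `sqlite3` module. This is only an analogy under stated assumptions: the class, table and method names are hypothetical, only the "times stored as ms relative to an offset" part is shown, and the 3-byte Absorption format is left out because its details are not given here.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


class ScaledConnection(sqlite3.Connection):
    """Hypothetical child class: stores times as integer ms relative to an offset."""

    # Assumed offset for the example; the caller may override it per connection.
    offset = datetime(2020, 1, 1, tzinfo=timezone.utc)

    def create_table(self):
        self.execute(
            "CREATE TABLE IF NOT EXISTS samples (t_ms INTEGER PRIMARY KEY, value REAL)"
        )

    def insert_sample(self, when, value):
        # Scale on the way in: datetime -> whole milliseconds since the offset.
        t_ms = round((when - self.offset) / timedelta(milliseconds=1))
        self.execute("INSERT INTO samples (t_ms, value) VALUES (?, ?)", (t_ms, value))

    def read_samples(self):
        # Scale on the way out: milliseconds since the offset -> datetime.
        rows = self.execute("SELECT t_ms, value FROM samples ORDER BY t_ms")
        return [(self.offset + timedelta(milliseconds=t_ms), value) for t_ms, value in rows]


connection = sqlite3.connect(":memory:", factory=ScaledConnection)
connection.create_table()
connection.insert_sample(datetime(2020, 1, 1, 0, 0, 1, 500000, tzinfo=timezone.utc), 0.25)
```

The calling code only ever sees datetimes, while the file holds small integers. As with the LabVIEW version, plain functions taking an ordinary connection would work just as well.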

-

That input is to allow one to (optionally) make a LVOOP child class of SQL Statement. Normally one doesn't use this, but you can use a child class to add functionality. For example, I have used it to define new Bind and Get Column methods that store data in a more compressed format. But you probably can ignore it.

-

It depends on your architecture. If all your indicators are updated in a single place then you can easily defer updates, but if indicators are updated from many different places then update-on-change is more natural.

-

I would tend towards A.

-

Open-source online icon library for classy UIs

drjdpowell replied to Darren's topic in User Interface

I also use materialdesignicons, with a standard of either 54% opaque black or 100% white, which are the values recommended by Google's Material Design. The semi-transparent black looks grey, but blends slightly with any background colour, which looks nice. Sometimes I use blue to highlight a key control.

-

I have always done the second method, and just accepted the extra overhead of one extra message pass. Beware of premature optimization. It is generally rare for me to want to just forward a message like this without the forwarding actor needing to change or react to the message in some way. An alternate design is to accept that you don't have an actor-subactor relationship, but that your subactor should really be a helper loop of the actor. A dedicated helper loop can share references with no problem. Your "actors sharing references" is a potentially suboptimal mix of "actors are highly independent but follow restrictive rules" and "helper loops have no restrictions but are completely coupled to their owner".

-

I have never noticed any memory issue, and I wouldn't expect memory as a potential issue with customized controls (the issue that can affect controls is an excessive redraw rate that shows up as a higher CPU load, not memory).

-

I don't think you can match the raw pull-from-file performance of something like TDMS (unfragmented, of course). SQLite's advantage is its high capability, and if you aren't using that capability then it will not compare well.

-

Can you post an example?

-

First question is what is your table's Primary Key? I would assume it would be your Timestamp, but if not, the lookup of a small time range will require a full table scan, rather than a much quicker search. Have you put a probe on your prepared statement? The included custom probe runs "explain query plan" on the statement and displays the analysis. What does this probe show?
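If you want to try the same check outside LabVIEW, here is a minimal Python sketch using the standard `sqlite3` module. The table and column names are made up for the example; it just runs "explain query plan" on a time-range query against a table with no key on the time column and one where the time column is the Primary Key.

```python
import sqlite3


def query_plan(connection, sql, params=()):
    """Return the 'detail' text of each EXPLAIN QUERY PLAN row for a statement."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]  # columns are: id, parent, notused, detail


connection = sqlite3.connect(":memory:")
# Hypothetical tables: same data layout, with and without a key on the time column.
connection.execute("CREATE TABLE no_key (t REAL, value REAL)")
connection.execute("CREATE TABLE keyed (t REAL PRIMARY KEY, value REAL)")

range_query = "SELECT t, value FROM {} WHERE t BETWEEN ? AND ?"
for table in ("no_key", "keyed"):
    print(table, query_plan(connection, range_query.format(table), (0.0, 1.0)))
```

The plan for `no_key` should start with SCAN (a full table scan) and the plan for `keyed` with SEARCH (an index lookup); the exact wording after that varies between SQLite versions.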

-

My app failed due to Queues created but not being closed under a specific condition. Memory use was trivial, and logged app memory did not increase, but at 55 days the app hit the hard limit of a million open Queues and stopped creating new Queues.

-

I have an app that uses a watchdog built into the motherboard. Failure to tickle the watchdog will trigger a full reboot, with the app automatically restarting and continuing without human intervention. In addition, failure to get data will also trigger a restart as a recovery strategy. It still failed at 55 days due to an issue that prevented a Modbus client from connecting and actually getting the data from the app. That issue would have been cleared up by an automatic reboot, but detection of it was not considered.
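The detection side of this can be sketched in a few lines of Python. This is a software stand-in, not the motherboard watchdog or my actual code, and all names and timeouts are hypothetical: each failure mode one thinks of gets its own timer that must be tickled, and any expired timer is a reason to restart. The third timer is the kind of check that was missing in the case above.

```python
import time


class Watchdog:
    """Software stand-in for a watchdog timer: expires unless tickled in time."""

    def __init__(self, timeout_s, clock=time.monotonic):
        self.timeout_s = timeout_s
        self._clock = clock  # injectable so the logic can be tested without waiting
        self._last_tickle = clock()

    def tickle(self):
        self._last_tickle = self._clock()

    def expired(self):
        return self._clock() - self._last_tickle > self.timeout_s


def expired_watchdogs(watchdogs):
    """Return the names of all expired watchdogs; any entry is a reason to restart."""
    return [name for name, dog in watchdogs.items() if dog.expired()]


# Hypothetical set of checks; the timeouts are illustrative only.
watchdogs = {
    "main_loop": Watchdog(timeout_s=5),       # tickled on every loop iteration
    "data_received": Watchdog(timeout_s=60),  # tickled when new data arrives
    "client_read": Watchdog(timeout_s=600),   # tickled when an external client reads data
}
```

The weakness is unchanged: a failure mode with no watchdog entry goes undetected.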

-

Even that way is hard, as you have to detect the problem to trigger a restart, and it is hard to come up with a foolproof detection method of all potential failure modes.

-

No, unfortunately, as it uses VIMs that are only available in 2017+.

-

Are you using matching bitness? You have to use the same bitness, 32 versus 64, for all DLLs and LabVIEW.
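If you are not sure what bitness a DLL is, one way to check is to read the machine field of its PE header. A minimal Python sketch, with made-up function names, that only distinguishes 32-bit x86 from 64-bit x64:

```python
import struct

# Machine field values from the PE/COFF header.
MACHINE_BITS = {0x014C: 32, 0x8664: 64}  # IMAGE_FILE_MACHINE_I386, IMAGE_FILE_MACHINE_AMD64


def pe_bitness(header: bytes) -> int:
    """Return 32 or 64 from the first bytes of a Windows DLL or EXE."""
    if header[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ signature)")
    # The 4-byte little-endian offset of the PE header is stored at 0x3C.
    (pe_offset,) = struct.unpack_from("<I", header, 0x3C)
    if header[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("not a PE file (missing PE signature)")
    # The 2-byte Machine field follows the PE signature.
    (machine,) = struct.unpack_from("<H", header, pe_offset + 4)
    if machine not in MACHINE_BITS:
        raise ValueError(f"unrecognised machine type 0x{machine:04X}")
    return MACHINE_BITS[machine]


def dll_bitness(path):
    """Read enough of the file to cover the headers (assumes they sit in the first 4 KiB)."""
    with open(path, "rb") as handle:
        return pe_bitness(handle.read(4096))
```

Run `dll_bitness` on each DLL and compare the results with the bitness of your LabVIEW installation; they all have to agree.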

-

I don't tend to use them unless they have meaning beyond the message itself (ie. they are a natural grouping of data, rather than something that just exists for the message). Most of my messages contain only one piece of data, so no typedef needed (unless that data is naturally a typedef). Also, it is possible to go more than just a typedef: have an API of subVIs to send and receive the messages in a common library. This can be a lot more powerful than just a typedef. An example would be having any message starting with "Config:..." being passed to a Config subVI, with multiple possible messages being handled by that subVI ("Config: Get as JSON","Config: Set from JSON", etc.). Another option is to send an Object that has multiple methods that the receiving actor can use. I view a typedef as a poor cost-benefit trade-off, since you have the coupling issue without the maximum possible benefits.
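As a rough text-language analogy of the "Config:..." idea (a sketch only; the class and function names are invented, and in LabVIEW this would be a subVI rather than a class), the receiver routes on the message prefix and lets one shared handler deal with every message in that family:

```python
import json


class Config:
    """Hypothetical shared handler for every 'Config: ...' message."""

    def __init__(self):
        self.values = {}

    def handle(self, command, payload=None):
        if command == "Get as JSON":
            return json.dumps(self.values, sort_keys=True)
        if command == "Set from JSON":
            self.values = json.loads(payload)
            return None
        raise ValueError(f"unknown Config command: {command!r}")


def dispatch(message, payload, handlers):
    """Route 'Prefix: command' messages to the handler registered for the prefix."""
    prefix, separator, command = message.partition(": ")
    if not separator or prefix not in handlers:
        raise KeyError(f"no handler for message: {message!r}")
    return handlers[prefix](command, payload)


config = Config()
handlers = {"Config": config.handle}
dispatch("Config: Set from JSON", '{"rate": 10}', handlers)
reply = dispatch("Config: Get as JSON", None, handlers)
```

Sender and receiver then share the handler's API rather than a typedef, so new "Config: ..." messages can be added in one place.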

-

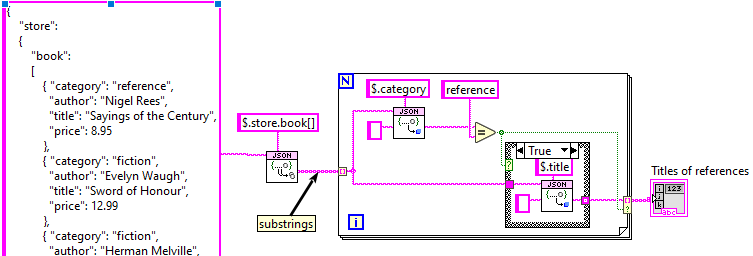

Plan? Yes, but not a priority. Note, though, that JSONtext returns substrings, meaning you can implement filtering in LabVIEW without making data copies, so the following code implements your example: More effort, of course, but it should be fast, and using LabVIEW is very flexible, and more debug-able.