Everything posted by JKSH

-

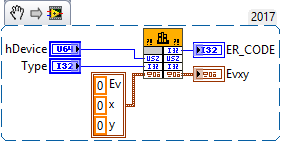

So here are more details about the other parts of the function: https://stackoverflow.com/questions/38632316/typeloadexception-using-dllimport

- ER_CODE: I32
- KMAPI: stdcall
- DEVICE_HANDLE: Pointer
- CL_COLORSPACE: Enum (size is not well-defined in C, but it's usually 32-bit)

Your CLFN must have Calling Convention = "stdcall (WINAPI)" and have 4 parameters:

| Name | Type | Data Type/Format |
|---|---|---|
| return type | Numeric | Signed 32-bit Integer |
| hDevice | Numeric | Signed/Unsigned Pointer-sized Integer (pass by Value) |
| Type | Numeric | Signed/Unsigned 32-bit Integer (pass by Value) |
| pColor | Adapt to Type | Handles by Value |

If you only want to read Evxy from the function, you can pretend that the parameter type is CL_EvxyDATA* instead of CL_MEASDATA*. This works because CL_MEASDATA is a union and CL_EvxyDATA is one of the structs it can store. Make a Type Definition: a Cluster that contains 3 SGL numbers, and use this cluster to tell the CLFN how to interpret the data.
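
To make the "pretend it's a CL_EvxyDATA*" trick concrete, here is a rough sketch in Python's ctypes (LabVIEW itself is graphical, so this is only an analogy). The field names `Ev`, `x`, `y`, the second union member and the function `fake_measure` are all made up for illustration; they are not taken from the real header. The point is just that a pointer to the 3-float struct and a pointer to the union refer to the same address, so the callee's writes land in the caller's struct.

```python
import ctypes


class CL_EvxyDATA(ctypes.Structure):
    # Three single-precision floats, like the 3-SGL cluster.
    # Field names are assumed for illustration.
    _fields_ = [("Ev", ctypes.c_float),
                ("x", ctypes.c_float),
                ("y", ctypes.c_float)]


class CL_OtherDATA(ctypes.Structure):
    # Hypothetical second member, only here so the union has more than one shape.
    _fields_ = [("values", ctypes.c_float * 3)]


class CL_MEASDATA(ctypes.Union):
    _fields_ = [("Evxy", CL_EvxyDATA),
                ("Other", CL_OtherDATA)]


def fake_measure(p_color):
    """Hypothetical stand-in for the DLL function; it takes a CL_MEASDATA*."""
    p_color.contents.Evxy.Ev = 123.5
    p_color.contents.Evxy.x = 0.25
    p_color.contents.Evxy.y = 0.5
    return 0  # assumed "no error" code


# The caller only knows about the 3-float struct...
evxy = CL_EvxyDATA()
# ...and hands its address over as if it were a pointer to the union.
as_union_ptr = ctypes.cast(ctypes.pointer(evxy), ctypes.POINTER(CL_MEASDATA))
status = fake_measure(as_union_ptr)
print(status, evxy.Ev, evxy.x, evxy.y)
```

This only stays safe as long as the function writes no more bytes than the struct you allocated, which is why the pretence is limited to the case where you request Evxy data.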

-

boolean or Boolean? and other wiki style questions...

JKSH replied to Aristos Queue's topic in Wiki Help

These are purely stylistic issues. I'd personally prefer Boolean and subVI, but I'll follow the community guidelines (when they are produced).

This one has ramifications for the ease of understanding of an article (especially for newcomers). I'm not sure what's best here. Perhaps we can let NI spearhead the effort to "normalize" the use of "G". We can stick to "LabVIEW" for now since it's more common, but switch over to "G" later when NI's efforts bear fruit.

I'm with Rolf; same-page is much more useful in the foreseeable future. -

boolean or Boolean? and other wiki style questions...

JKSH replied to Aristos Queue's topic in Wiki Help

This thread is about literature, not code. It's a question of finding a balance between practicality and professionalism. Side A can reasonably accuse Side B of being a Grammar Nazi while Side B can reasonably accuse Side A of being sloppy. My response would be "be consistent within a project". Feel free to have different conventions across different projects. So for the Wiki, we should at least be consistent within a single article or group of closely-related articles. Some might even argue that the whole Wiki is a single project. No, as long as you don't care when people write "LabView". -

If it was written successfully, then it can be read too. The key is to find out what name was stored in the TDMS file. If you call TDMS Get Properties.vi without wiring the "property name" and "data type" inputs, you're meant to get an array of all available properties in the "property names" output. I presume you meant TDMS Get Properties.vi? If so, then that's very weird. My next guess would be that you're opening different files in LabVIEW vs. DIAdem. Try calling TDMS Get Properties.vi without the name inputs immediately after you write the custom property.

-

Your cDAQ should have a few GB at least, right? Anyway, I prefer to build the VIs on my Windows PC, and then use Application Builder to convert the VIs into an application + installer. After that, I transfer the installer to the cDAQ. (I also uninstall the LabVIEW development environment from the cDAQ -- this will free up another 1.5 GB)

-

Hello, For years I've used Split String.vi and Join String.vi from the Hidden Gems palette. I see that LV 2019 has the new "Delimited String to 1D String Array.vi" and "1D String Array to Delimited String.vi". I haven't installed LV 2019 yet; does anyone know whether the new official VIs are just wrappers for the Hidden Gems VIs? Is it OK to keep using the Hidden Gems VIs going forward?

-

Altenbach has done numerous benchmarks on the NI forums and found that maps perform 2% - 3% better than variant attributes in one particular use-case: https://forums.ni.com/t5/LabVIEW/LV2019-Maps-vs-Variant-Attributes-Performance/m-p/3934796#M1118297 He'll publish his code in a few days.

-

In your code, Loop 1 and Loop 2 are parallel and independent as you expect. Loop 2 is not blocked by Loop 1. Loop 2 is blocked by the subVI inside Loop 2. Yes, a subVI is a function. But your function does not return, so it blocks the loop! Not correct. You can execute your subVI by putting it OUTSIDE of Loop 2.

-

So your subVI contains its own (perpetual) while loop -- is that right? You must understand: A subVI doesn't "finish running" until all of the loops inside the subVI stop. Therefore, Loop 2 in your Main VI cannot continue -- it is blocked because it is waiting for your subVI to "finish running". You don't need that subVI. Just put all the blinking logic in Loop 2. Anyway, it is best to post actual VIs, not screenshots. The screenshots might not show important connections.
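
If it helps to see the same thing in a text language, here is a small Python sketch of the situation (not your actual code; `blink_forever`, `loop2_blocked` and `loop2_inline` are names I made up). The first version of Loop 2 calls a function that has its own perpetual loop, so Loop 2 never completes an iteration. The second version has the blinking logic inline and iterates normally.

```python
import threading
import time


def blink_forever(stop):
    """Stand-in for a subVI with its own while loop: returns only when stopped."""
    while not stop.is_set():
        time.sleep(0.01)  # pretend to toggle an LED


def loop2_blocked(stop, counter):
    while not stop.is_set():
        blink_forever(stop)         # never returns while the program is running...
        counter["iterations"] += 1  # ...so this line is not reached until shutdown


def loop2_inline(stop, counter):
    while not stop.is_set():
        time.sleep(0.01)            # blinking logic placed directly in the loop
        counter["iterations"] += 1


def run_for(loop, duration=0.2):
    """Run a loop in its own thread and report how many iterations it managed."""
    stop = threading.Event()
    counter = {"iterations": 0}
    worker = threading.Thread(target=loop, args=(stop, counter), daemon=True)
    worker.start()
    time.sleep(duration)
    completed = counter["iterations"]  # snapshot taken before asking it to stop
    stop.set()
    worker.join()
    return completed


if __name__ == "__main__":
    print("blocked loop iterations:", run_for(loop2_blocked))
    print("inline loop iterations:", run_for(loop2_inline))
```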

-

Post your code. Without it, we can only rely on our crystal ball.

-

Make sure you have a Wait node in both loops: https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000P82BSAS&l=en-AU Without a Wait node, one loop will consume 100% of a CPU core. This could be why your 2nd loop is unable to run.
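
As a rough text-language analogy (Python, not LabVIEW), the sketch below runs the same polling loop with and without a wait and reports how many iterations and how much CPU time each one used. The function name `spin` and the 10 ms wait are my own choices for illustration.

```python
import time


def spin(duration, wait):
    """Run a polling loop for `duration` seconds, sleeping `wait` seconds per pass.

    Returns (iterations, cpu_seconds) so the two variants can be compared.
    """
    cpu_start = time.process_time()
    deadline = time.monotonic() + duration
    iterations = 0
    while time.monotonic() < deadline:
        iterations += 1
        if wait > 0:
            time.sleep(wait)  # the equivalent of a Wait node: yields the CPU
    return iterations, time.process_time() - cpu_start


if __name__ == "__main__":
    print("no wait:   ", spin(0.2, 0.0))
    print("10 ms wait:", spin(0.2, 0.01))
```

The loop without a wait spins as fast as the core allows, while the paced loop spends most of its time asleep and leaves the CPU free for other work.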

-

As far as Windows is concerned, all the "Application Instances" are still part of the same LabVIEW process. Thus, all application instances (projects) loaded by the same instance of the LabVIEW IDE share the same DLL memory space. You can have "separate copies" of your DLL by loading each project in a separate instance of the LabVIEW IDE (e.g. 32-bit vs. 64-bit, or 2017 vs. 2018). I'm not 100% sure if different executables built using the LabVIEW Application Builder run as different processes or not. I think they do, which means they would have "separate copies" of your DLL.

-

LLVM/Clang is truly a marvel! If you need to write wrapper VIs for DLLs, then I think a tool like this is the way to go. However, if the C API has anything non-trivial like passing a large struct by-value or strings/arrays, you'll end up having to write your own wrapper DLL, right? (side note: I do wish LabVIEW would let us pass Booleans straight into a CLFN by-value...)

This struct has the same size as a single int, so you can lie to the CLFN: Say that the parameter type is a plain I32.

What are the possible types in the union? If they're quite simple and small, you could tell the CLFN to treat it as a fixed-size cluster of U8s (where the number of cluster elements equals the number of bytes of the union), then Type Cast the value to the right type. (The type cast will treat your cluster as a byte array.) These would require project-specific scripts that don't fit in a generic automatic tool though.

The import wizard was useful for study purposes, to figure out how I should create my wrapper (using a simple sample DLL). That was about it.
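
Both tricks boil down to reinterpreting the same bytes as a different type. Here is a rough Python sketch of the idea using the `struct` module. The struct layout (two 16-bit fields), the 8-byte union size and the little-endian byte order are assumptions for illustration only; check the layout and byte order that apply to your own DLL and conversion.

```python
import struct


def small_struct_as_i32(flags, channel):
    """Reinterpret a hypothetical struct {uint16 flags; uint16 channel;} as one I32.

    Little-endian byte order is assumed here.
    """
    raw = struct.pack("<HH", flags, channel)
    return struct.unpack("<i", raw)[0]


def union_as_float(raw):
    """Interpret the first 4 bytes of the union's byte buffer as a float."""
    return struct.unpack("<f", raw[:4])[0]


def union_as_u32(raw):
    """Interpret the same 4 bytes as an unsigned 32-bit integer instead."""
    return struct.unpack("<I", raw[:4])[0]


if __name__ == "__main__":
    # A hypothetical 8-byte union returned as plain bytes (a "cluster of U8s"),
    # currently holding a float in its first 4 bytes.
    union_bytes = struct.pack("<f", 1.5) + bytes(4)
    print(small_struct_as_i32(1, 2))
    print(union_as_float(union_bytes), hex(union_as_u32(union_bytes)))
```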

-

Managing large files within GIT repo size limitations.

JKSH replied to Michael Aivaliotis's topic in Source Code Control

For released installers, GitHub provides a very sensible feature called Releases (https://help.github.com/articles/about-releases/). Each GitHub Release is linked against a tag in your repo, and you can have multiple files within each Release -- for example: One installer for your server PC, one installer for your client PCs, and one Linux RT disk image for your cRIO end node (created via the NI RAD Utility). Each file can be up to 2GB, but there is no hard limit on the total disk space occupied by all your Releases. You can also write release notes for each release which gives you a changelog for your whole project. BitBucket doesn't have something as comprehensive as GitHub Releases, but it does provide a Downloads feature where you can put your installers (https://bitbucket.org/blog/new-feature-downloads). It allows up to 2 GB per file. -

LabVIEW snippet PNGs are being sanitized

JKSH replied to Phillip Brooks's topic in Site Feedback & Support

It used to work, then it stopped: https://forums.ni.com/t5/LabVIEW/Notice-Attach-VI-Snippets-to-post-rather-than-upload/td-p/3660471 Attachments (as opposed to inline images) should be fine, apparently. -

In the video, you used the "Abort" button to stop the code. Does the delay still occur if you shut down everything gracefully instead of aborting? Does the delay still occur if you use File > Save All to save your changes before running again? Does the delay still occur if you disable "Separate compiled code" for all your VIs and classes?

-

LabVIEW NXG - when will we start using it

JKSH replied to 0_o's topic in Development Environment (IDE)

I think it makes sense to have separate APIs for text strings and byte array "strings". However, both are still incomplete at the moment. The feature I miss most from LabVIEW Classic is "hex mode" on a string indicator for inspecting the contents of a byte array. I find the Silver palette quite decent. Simply setting the background to white (as opposed to "LabVIEW Grey") also does wonders. A note on your last link: That "stark example of modernity" depicts an old widget technology, basically unchanged for over a decade -- it's still maintained today, but only in bugfix mode. Here's an actual example of modernity. -

LabVIEW NXG - when will we start using it

JKSH replied to 0_o's topic in Development Environment (IDE)

Only for LabVIEW Communications type applications (on PXI). FPGA through RIO (and RIO in general) is not yet supported.

Note that Python support is Windows-only. I was hoping to use the Python Node on my LinuxRT cRIO, but no dice.

Related: http://www.ni.com/pdf/products/us/labview-roadmap.pdf

> Is it using a VI at all?

Yes, but the file format is different.

> Does it support scripting

Not yet (see Roadmap link)

> and OO?

Mostly. But we can't have type definitions inside classes.

> Could I use llb/lvlib/lvlibp/...?

llb: Gone in NXG (which is a good thing; these are terrible for source control)
lvlib: Yes
lvlibp: Not sure

> Is the FP resizable?

No.

My company finds WebVIs pretty useful. We've started using it for some real projects (but only for the web client -- we still use LabVIEW Classic for other parts of the project, including the web server) -

You could save the data to file and then use the System Exec VI to run your choice of Linux chart generation tools like gnuplot (a command-line application) or Matplotlib (a Python library).
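
For the gnuplot route, the pieces could look roughly like the Python sketch below: write the samples to a CSV file, generate a small gnuplot script, and run gnuplot on it. In LabVIEW you would do the file writing with the file I/O VIs and the last step with System Exec; the file names, column layout and helper names here are made up for illustration, and the sketch assumes gnuplot is installed with PNG output support.

```python
import csv
import shutil
import subprocess
from pathlib import Path


def write_samples(csv_path, samples):
    """Save (time, value) pairs as two comma-separated columns."""
    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        for t, value in samples:
            writer.writerow([t, value])


def build_gnuplot_script(csv_path, png_path):
    """Return a gnuplot script that plots column 2 against column 1 into a PNG."""
    return "\n".join([
        "set terminal png size 800,600",
        f"set output '{png_path}'",
        "set datafile separator ','",
        f"plot '{csv_path}' using 1:2 with lines title 'signal'",
    ]) + "\n"


def render_chart(csv_path, png_path, script_path):
    """Write the script and run gnuplot on it; returns False if gnuplot is missing."""
    Path(script_path).write_text(build_gnuplot_script(csv_path, png_path))
    if shutil.which("gnuplot") is None:
        return False
    subprocess.run(["gnuplot", str(script_path)], check=True)
    return True


if __name__ == "__main__":
    write_samples("data.csv", [(0, 0.0), (1, 1.5), (2, 0.75)])
    print("chart rendered:", render_chart("data.csv", "chart.png", "chart.gp"))
```

The System Exec equivalent of the last step would simply be the command line `gnuplot chart.gp`.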

-

Just got "The page you requested may have moved, been deleted, or is inaccessible. Search below for other content, or contact NI for assistance." on all 3 Fall 2018 links

-

No problem. We are happy to help, but we'd appreciate it if you try to follow our instructions. Good luck with learning LabVIEW.

-

Open LabVIEW and click Help > Find Examples... then search for "XML". @crossrulz gave you this advice earlier.