Posts: 499

Days Won: 36

Everything posted by JKSH

-

Yes, apparently. According to https://opcfoundation.org/forum/opc-ua-standard/clarification-for-index-range-handling-of-readwrite/ the ability to read an array subset (including a single element) is required by the standard, while the ability to write one is optional.

I believe it's called "Index Ranges". My previous link shows an example. Here's another: http://forum.unified-automation.com/topic1386.html

I don't know of one, sorry.

This sounds like a recipe for hard-to-detect bugs... Is there any chance of getting NI R&D to change this behaviour (or, at the very least, to provide a way to enable stronger checks)?
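To make the idea concrete, here's a minimal Python sketch of applying a one-dimensional index range to an array. It assumes the NumericRange string format from OPC UA Part 4 as I understand it: either a single index ("2") or an inclusive "start:end" pair whose first bound is lower than the second. Multi-dimensional ranges (comma-separated) are not handled, and the function names are hypothetical:

```python
def parse_index_range(text: str) -> tuple[int, int]:
    """Parse a 1-D index range string: "N" or "start:end" (both bounds inclusive)."""
    text = text.strip()
    if ":" in text:
        start_text, end_text = text.split(":", 1)
        start, end = int(start_text), int(end_text)
        # Assumed rule (NumericRange, OPC UA Part 4): the first bound must be lower than the second.
        if not 0 <= start < end:
            raise ValueError(f"invalid index range: {text!r}")
        return start, end
    index = int(text)
    if index < 0:
        raise ValueError(f"invalid index range: {text!r}")
    return index, index


def read_index_range(array, text: str) -> list:
    """Return the elements of `array` selected by an index range such as "1:3" or "2"."""
    start, end = parse_index_range(text)
    if start >= len(array):
        # Assumed: a range lying entirely outside the array yields no data.
        raise IndexError(f"index range {text!r} is outside an array of length {len(array)}")
    # Slicing clips a range that only partially overlaps the array.
    return list(array[start:end + 1])


print(read_index_range([10, 20, 30, 40, 50], "1:3"))  # [20, 30, 40]
print(read_index_range([10, 20, 30, 40, 50], "2"))    # [30]
```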

-

May I ask why?

Off topic: One reason I still use DataSocket in LabVIEW is that it's the only way (that I know of) to programmatically read/write Modbus I/O server data without having to create bound variables: http://forums.ni.com/t5/LabVIEW/Can-I-write-to-Modbus-I-O-Server-addresses-without-creating/td-p/2848048 I'd love to know if there are any alternatives.

-

Hi, I wanted to clarify a few things: How do you get the string "x23y45z3"? Does the user type it into a text box? How do you give the values back to the user? Why?

-

Plot Selection Overlay for XYGraph - Do I want an XControl?

JKSH replied to Christian Butcher's topic in User Interface

The way I see it, XControls are for cases where you want to create a custom interactive "widget" and plan to embed multiple copies of it in other front panels. (Unfortunately, it doesn't always work well, but that's a different topic.)

So, do you want multiple copies of your custom box? If you don't, then you definitely don't need an XControl. If you do, then I'd consider it.

Also, does it have to be an overlaid box? Would you consider a separate pop-up dialog altogether?

-

Error "...is a member of a cycle" during simulation

JKSH replied to Shleeva's topic in Application Design & Architecture

This is just a guess, but I think the Control and Simulation (C&S) Loop treats your code as a continuous-time model, and does some conversion behind the scenes to produce a discrete-time model for simulation. A Feedback Node breaks time continuity, so it's quite possible that the Feedback Nodes would interfere with the C&S Loop's ability to solve your equations.

I don't have the toolkit installed so I can't play with it, but I'm not sure if the C&S Loop is capable of solving simultaneous differential equations as complex as yours. I would still do some calculus and algebra to make your equations more simulation-friendly first, before feeding them into LabVIEW. For example, it's pretty easy to get an expression for x. See:

Finding dx/dt: http://www.wolframalpha.com/input/?i=Integrate+A-B*(d^2y%2Fdt^2*cos(y)+-+(dy%2Fdt)^2*sin(y))+with+respect+to+t

Finding x: http://www.wolframalpha.com/input/?i=Integrate+A*t-B*(dy%2Fdt*cos(y))+%2B+C+with+respect+to+t

...where y = φ, A = F/(M+m), B = m*l/(M+m), and C = a constant of integration (related to the initial conditions).

If you still want to try to get the C&S Loop to process your equations unchanged, try asking at the official NI forums and see if people there know any tricks: http://forums.ni.com/
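Working the two integrals through by hand gives the following. This is a sketch that assumes A and B are constant (i.e. F does not vary with time) and writes φ for y; D is a second constant of integration introduced for the last step:

```latex
\begin{aligned}
\frac{d^2x}{dt^2} &= A - B\left(\ddot{\varphi}\cos\varphi - \dot{\varphi}^2\sin\varphi\right)
                   = A - B\,\frac{d}{dt}\!\left(\dot{\varphi}\cos\varphi\right) \\
\frac{dx}{dt}     &= A t - B\,\dot{\varphi}\cos\varphi + C \\
x                 &= \tfrac{1}{2} A t^2 - B\sin\varphi + C t + D
\end{aligned}
```

The key step is recognising that the bracket is the derivative of φ̇ cos φ, and that φ̇ cos φ is in turn the derivative of sin φ.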

-

That's a simple but valid approach, assuming that both sensors are very close together and face the same direction at all times.

The technical term for alignment is "image registration"; combining the aligned images is called "image fusion". The Advanced Signal Processing Toolkit contains an example of image fusion. Do you have it installed?

-

LabVIEW randomly goes into a stepthrough/execution highlighting mode

JKSH replied to parsec's topic in LabVIEW General

The video shows that the nodes that get highlighted are those that take references as inputs. So, I'm guessing that your reference(s) became invalid somehow. I'm not sure why no error messages pop up, though. Did you disable them? Wire the error outputs of those highlighted nodes into a Simple Error Handler subVI. What do you see?

It's hard to say more without seeing your code in detail.

Anyway, may I ask why you use the Abort button? That can destabilize LabVIEW. It's safer to add a proper "Stop" button to your code.

-

Error "...is a member of a cycle" during simulation

JKSH replied to Shleeva's topic in Application Design & Architecture

That's quite cool. Note that your system is non-linear (due to the sine and cosine functions). Do you know if those built-in ODE solvers cope well with non-linear systems?

It's best to get it working for φ alone first (meaning you need to make sure all your values are sensible) before you even consider solving for both x and φ at the same time.

One quick and dirty technique is to take your "solved" φ variable, pass it through differentiator blocks, use the results to calculate d²x/dt² (from your equation for x), and then pass that through integrator blocks to find x. This will avoid the "member of a cycle" problem, but might cause errors to accumulate. See http://zone.ni.com/reference/en-XX/help/371894H-01/lvsim/sim_configparams/
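A rough, offline Python sketch of that quick and dirty technique, assuming you already have φ sampled at times t and that d²x/dt² = A − B(φ'' cos φ − φ'² sin φ), with A = F/(M+m) and B = m*l/(M+m) as defined in this thread. The function name x_from_phi is hypothetical, and central differences plus trapezoidal integration stand in for the C&S differentiator and integrator blocks:

```python
import math


def x_from_phi(t, phi, A, B, x0=0.0, v0=0.0):
    """Estimate x(t) from sampled phi(t) (needs at least 2 samples).

    Differentiate phi twice, compute x'' from the equation of motion,
    then integrate twice. Numerical errors accumulate in both stages.
    """
    n = len(t)

    def derivative(y):
        # One-sided differences at the ends, central differences inside.
        d = [0.0] * n
        d[0] = (y[1] - y[0]) / (t[1] - t[0])
        d[-1] = (y[-1] - y[-2]) / (t[-1] - t[-2])
        for i in range(1, n - 1):
            d[i] = (y[i + 1] - y[i - 1]) / (t[i + 1] - t[i - 1])
        return d

    def integrate(y, y0):
        # Cumulative trapezoidal rule, starting from the initial value y0.
        out = [y0]
        for i in range(1, n):
            out.append(out[-1] + 0.5 * (y[i] + y[i - 1]) * (t[i] - t[i - 1]))
        return out

    dphi = derivative(phi)
    ddphi = derivative(dphi)
    accel = [A - B * (ddphi[i] * math.cos(phi[i]) - dphi[i] ** 2 * math.sin(phi[i]))
             for i in range(n)]
    velocity = integrate(accel, v0)
    return integrate(velocity, x0)
```

As a sanity check, a pendulum that stays at φ = 0 leaves only the constant A, so x should follow A·t²/2.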

-

Error "...is a member of a cycle" during simulation

JKSH replied to Shleeva's topic in Application Design & Architecture

That's fine in mathematics. Writing equations like this provides a concise yet complete way of describing a system. However, this format doesn't lend itself nicely to programmers' code.

The easiest answer for you is one that you already understand. So tell us: What techniques have you learnt in your engineering course for solving differential equations in LabVIEW (or in any other software environment, like MATLAB or Mathematica)?

I haven't used the Control & Simulation Loop before, so I don't know what features it has for solving differential equations. However, the first thing I'd try is to substitute the top equation into the bottom equation, and see if that allows you to solve for φ. If not, then my analysis is below.

--------

Those are Differential Equations, which describe "instantaneous", continuous-time relationships. You can't wire them up in LabVIEW as-is to solve for x and φ. I would first convert them into Difference Equations, which describe discrete-time relationships. Difference Equations make it very clear where to insert Feedback Nodes or Shift Registers (as mentioned by Tim_S), which are required to resolve your "member of a cycle" problem.

You'll also need to choose two things before you can simulate/solve for x and φ:

Your discrete time step, Δt. How much time should pass between each iteration/step of your simulation?

Your initial conditions. At the start of your simulation, where are your components, and how fast and in which directions are they moving?
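To show what converting to a Difference Equation looks like, here's a Python sketch on a stand-in system: a simple pendulum, φ'' = −(g/l)·sin φ, not your cart equations. Each variable carried from one iteration to the next (phi and omega here) is what a Shift Register or Feedback Node would hold in LabVIEW, and Δt plus the initial conditions are exactly the two choices listed above. The update rule is semi-implicit (symplectic) Euler, which is one choice among many; the function name is hypothetical:

```python
import math


def simulate_pendulum(phi0, omega0, dt, steps, g=9.81, length=1.0):
    """Step phi'' = -(g/length)*sin(phi) forward as a difference equation.

    phi0, omega0: initial angle (rad) and angular velocity (rad/s).
    dt: discrete time step. Returns the angle at every step.
    """
    phi, omega = phi0, omega0  # the "shift registers", seeded with initial conditions
    history = [phi]
    for _ in range(steps):
        # omega[k+1] = omega[k] + dt * phi''[k]
        omega = omega - (g / length) * math.sin(phi) * dt
        # phi[k+1] = phi[k] + dt * omega[k+1]  (semi-implicit: uses the new omega)
        phi = phi + omega * dt
        history.append(phi)
    return history


angles = simulate_pendulum(phi0=0.1, omega0=0.0, dt=0.001, steps=5000)
print(max(angles), min(angles))  # swings between roughly +0.1 and -0.1 rad
```

A smaller Δt tracks the continuous-time solution more closely at the cost of more iterations.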

-

This isn't related to EULAs or LabVIEW FPGA, but you might like this site: http://tosdr.org/

-

I've experienced this a few times in recent weeks. Afterwards, I'm unable to post on the original page, so I have to copy and paste my comments into a new tab. I'm running Google Chrome 55.0.2883.87 m, and I often take a long time to write a post, too.

-

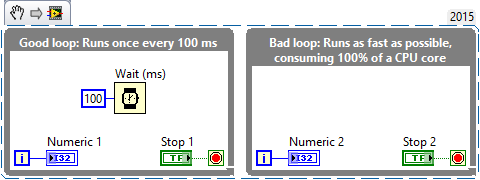

PC 3 has 2 cores, right? 50% CPU usage means that one of your cores is running at 100%. Jordan is probably correct: you have at least one while loop running wild. Run Resource Monitor on PC 2 too. I suspect you'll find your application using 25% CPU there (1 of 4 cores).

Look at your code. For each loop, tell us: How frequently does the code inside the loop run? (Is it once every second? Once every 10 ms? Once every time a queued item is received?)

Anyway, here's a small note about loop timing:

You haven't answered the questions asked by ShaunR and hooovahh: Are your Ethernet ports 100 Mbps or 1000 Mbps? Since you are using GigE cameras, you need to use Gigabit (1000 Mbps) Ethernet ports. This should be fine if you don't run anything else on the PC.
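The arithmetic behind both points, as a small Python sketch. The camera figures in the usage lines (1280×1024 pixels, 8 bits per pixel, 30 fps) are hypothetical, and the bandwidth estimate counts only raw image payload, ignoring GigE Vision protocol overhead:

```python
def single_core_share(total_cores: int) -> float:
    """Overall CPU % reported when exactly one core is pegged at 100%."""
    return 100.0 / total_cores


def camera_bandwidth_mbps(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Raw image payload rate in megabits per second (no protocol overhead)."""
    return width * height * bytes_per_pixel * 8 * fps / 1e6


print(single_core_share(2))                      # 50.0  (PC 3)
print(single_core_share(4))                      # 25.0  (PC 2)
print(camera_bandwidth_mbps(1280, 1024, 1, 30))  # 314.5728
```

With those hypothetical numbers, one camera needs roughly 315 Mbps of raw payload: more than a 100 Mbps port can carry, but within a Gigabit port's budget.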

-

Run your application on PC 2 and PC 3. Launch Resource Monitor. Are you hitting the limit of any resource(s)?

-

One thing's not clear to me: Does that statement mean "You should not inherit from a concrete class, ever", or does it mean "You should not make a concrete class inherit from a concrete class, but you can let an abstract class inherit from a concrete class"? Also, before we get too deep, could you explain, in your own words, the meaning of "concrete"?

Agreed. I'd call it a "rule of thumb" instead of saying "never". An example where multi-layered inheritance makes sense, which should be familiar to most LabVIEW programmers, is LabVIEW's GObject inheritance tree. Here's a small subset:

GObject Constant Control Boolean Numeric NamedNumeric Enum Ring NumericWithScale Dial Knob String ComboBox Wire

In the example above, green represents abstract classes, and orange represents concrete classes. Notice that concrete classes are inherited from by both abstract and concrete classes. Would you ("you" == "anyone reading this") organize this tree differently?

What's the rationale behind this restriction?
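A Python sketch of the same shape using abc: an abstract class (NamedNumeric) inheriting from a concrete one (Numeric), and a concrete class (ComboBox) inheriting from another concrete one (String). The class names borrow from the tree above, but which ones are abstract here is an assumption for illustration, not a statement about LabVIEW's actual VI Server classes:

```python
from abc import ABC, abstractmethod


class GObject(ABC):
    """Abstract root: cannot be instantiated."""

    @abstractmethod
    def describe(self) -> str: ...


class Numeric(GObject):
    """Concrete: a plain numeric control."""

    def __init__(self, value: float = 0.0):
        self.value = value

    def describe(self) -> str:
        return f"Numeric({self.value})"


class NamedNumeric(Numeric):
    """Abstract, even though its parent (Numeric) is concrete."""

    @abstractmethod
    def item_names(self) -> list[str]: ...


class Ring(NamedNumeric):
    """Concrete leaf that fills in the abstract method."""

    def __init__(self, names, value: int = 0):
        super().__init__(value)
        self.names = list(names)

    def item_names(self) -> list[str]:
        return self.names


class String(GObject):
    """Concrete: a plain string control."""

    def __init__(self, text: str = ""):
        self.text = text

    def describe(self) -> str:
        return f"String({self.text!r})"


class ComboBox(String):
    """Concrete class inheriting from another concrete class."""

    def __init__(self, text: str = "", items=()):
        super().__init__(text)
        self.items = list(items)
```

Trying NamedNumeric() raises TypeError, while Numeric(), Ring([...]) and ComboBox(...) all instantiate normally.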

-

This small part is straightforward enough, no? The process of making LabPython 64-bit friendly is orthogonal to the process of dealing with compatibility breaks in 3rd party components.

I myself have not used LabPython (or even Python), so I wasn't commenting (and won't comment) on how the project should/shouldn't be carried forward. Rolf suggested a quick and dirty fix for a specific issue (i.e. 64-bit support), which exposes a large gun that a user could shoot themselves in the foot with. I suggested an alternative quick and dirty fix that hides this gun, and asked what he (and anyone else reading this thread) thought of the idea.

Understood. OK, it's now recorded here for posterity: 2 different suggestions on how to achieve 64-bit support, for anyone who's happy to work on LabPython.

That sounds sensible.

Such blanket statements are a bit unfair...
https://www.python.org/dev/peps/pep-0384/
https://community.kde.org/Policies/Binary_Compatibility_Issues_With_C%2B%2B
https://en.wikipedia.org/wiki/Linux_Standard_Base
http://forums.ni.com/t5/Machine-Vision/IMAQdx-14-5-breaks-compatibility-with-IMAQdx-14-0/m-p/3168479

Granted, what's now in place in the Python and Linux worlds probably isn't enough to smooth things out for LabPython, but snarky remarks help nobody.

-

Would you consider encapsulating the 64-bit "refnum" in an LVClass that the user can pass around?
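A Python analogy of the idea, with all names hypothetical (this is not LabPython's API): the raw 64-bit pointer lives inside an opaque object, so callers pass the object around instead of a bare integer they could accidentally edit or forge. In LabVIEW, the equivalent would be an LVClass whose private data holds the U64:

```python
class PythonSession:
    """Opaque handle around a native 64-bit pointer (hypothetical).

    Callers pass this object around; the address itself is kept in a
    private slot that callers are not meant to read or modify.
    """

    __slots__ = ("_ptr",)

    def __init__(self, ptr: int):
        self._ptr = ptr

    def __repr__(self) -> str:
        return "<PythonSession>"  # don't leak the address


def open_session() -> PythonSession:
    # Stand-in for a call into the native library that returns a pointer.
    return PythonSession(0x7FFF_0000_1234)


def run_script(session: PythonSession, script: str) -> str:
    # Rejecting bare integers is the point: a wrong number can't be passed in by accident.
    if not isinstance(session, PythonSession):
        raise TypeError("expected a PythonSession, not a raw number")
    return f"ran {len(script)} chars"  # stand-in for the real native call
```

The design choice is simply that the only way to obtain a valid handle is through open_session(), so the type system does the checking.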

-

The reduced contrast is intentional on NI's part. There is an Idea Exchange post calling for its reversal: https://forums.ni.com/t5/LabVIEW-Idea-Exchange/Restore-High-Contrast-Icons/idi-p/3363355

-

And for those who like compact lists: https://www.tldrlegal.com/l/freebsd (2-clause, GPower's article) https://www.tldrlegal.com/l/bsd3 (3-clause, Shaun's highlight) https://tldrlegal.com/license/4-clause-bsd (4-clause, the original BSD license)

-

From earlier posts, it sounds to me like btowc() is simply a single-char version of mbsrtowcs(). Good to check, though. (Strictly speaking, btowc() only converts single-byte characters; the per-character counterpart of mbsrtowcs() is mbrtowc().) You'd then still need to convert UTF-8 -> UTF-16 somehow (using one of the other techniques mentioned?)

As @rolfk said, LabVIEW simply uses whatever the OS sees.

-

Yes, iconv() is designed for charset conversion. Possible bear trap (I haven't used it myself): A quick Google session turned up a thread which suggests that there are multiple implementations of iconv out there, and they don't all behave the same.

At the same time, I guess ICU would've been overkill for simple charset conversion. It's more of an internationalization library, which also takes care of timezones, formatting of dates (month first or day first?) and numbers (comma or period for separator?), and locale-aware string comparisons, among others.

Thinking about it some more, I believe @ShaunR does want charset conversion after all. This thread has identified 2 ways to do that on Linux:

System encoding -> UTF-32 (via mbsrtowcs()) -> UTF-16 (via manual bit shifting)

System encoding -> UTF-16 (via iconv())

Hence the rise of cross-platform libraries that behave the same on all supported platforms.

Do you have the NI Developer Suite? My company does, and we serendipitously found out that LabVIEW for OS X (or macOS, as it's called nowadays) is part of the bundle. We simply wrote to enquire about getting a license, and NI kindly mailed us the installer disc just like that.
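The "manual bit shifting" step of the first route, sketched in Python. It assumes the input is already a sequence of Unicode code points, which is what mbsrtowcs() produces on Linux with glibc (where wchar_t is 32 bits and holds Unicode code points). The function name is hypothetical:

```python
def utf32_to_utf16(code_points):
    """Convert Unicode code points to UTF-16 code units via bit shifting."""
    units = []
    for cp in code_points:
        if cp < 0 or cp > 0x10FFFF or 0xD800 <= cp <= 0xDFFF:
            raise ValueError(f"not a valid Unicode scalar value: {cp:#x}")
        if cp < 0x10000:
            units.append(cp)  # Basic Multilingual Plane: one unit, unchanged
        else:
            cp -= 0x10000                        # leaves a 20-bit value
            units.append(0xD800 | (cp >> 10))    # high surrogate: top 10 bits
            units.append(0xDC00 | (cp & 0x3FF))  # low surrogate: bottom 10 bits
    return units


print([hex(u) for u in utf32_to_utf16([0x48, 0x1F600])])  # ['0x48', '0xd83d', '0xde00']
```

Characters outside the Basic Multilingual Plane (such as emoji) are the only ones that need the surrogate-pair split.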

-

Ah, I misread your question, sorry. I thought you wanted codepage conversion, but you just wanted to widen ASCII characters. No extra library required, then.
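For the record, widening ASCII to UTF-16 needs no lookup table: every ASCII code (0x00 to 0x7F) is also the UTF-16 code unit for that character, so each byte is just zero-extended to 16 bits. A Python sketch, assuming little-endian output (the byte order Windows uses for UTF-16); the function name is hypothetical:

```python
def widen_ascii_to_utf16le(data: bytes) -> bytes:
    """Widen 7-bit ASCII bytes to UTF-16LE by zero-extending each byte."""
    out = bytearray()
    for b in data:
        if b > 0x7F:
            raise ValueError("non-ASCII byte: this needs real codepage conversion")
        out += bytes((b, 0x00))  # little-endian: low byte first, high byte is 0
    return bytes(out)


print(widen_ascii_to_utf16le(b"Hi!"))  # b'H\x00i\x00!\x00'
```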

-

ICU is a widely used library that runs on many different platforms, including Windows, Linux and macOS: http://site.icu-project.org/

Its biggest downside is that its data libraries can be quite chunky (20+ MB for the 32-bit version on Windows). However, I don't see this as a big issue for modern desktop computers.

-

Action Engines... are we still using these?

JKSH replied to Neil Pate's topic in Application Design & Architecture

I still use AEs for very simple event logging. My AE consists of 2 actions:

"Init", where I wire in the log directory and an optional refnum for a string indicator (which acts as the "session log" output console)

"Log" (the default action), where I wire in the message to log

Then, all I have to do is plop down the VI and wire in the log message from anywhere in my application. The AE takes care of timestamping, log file rotation, and scrolling the string indicator (if specified).

Having more than 2 actions or 3 inputs makes an AE much less pleasant to use: I find myself having to pause and remember which inputs/outputs are used with which actions. I've seen a scripting tool on LAVA that generates named VIs to wrap the AE, but that means it's no longer a simple AE.
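The same pattern sketched in Python for readers outside LabVIEW: one function, an action selector that defaults to "Log", and state that persists between calls. Module-level state stands in for the AE's uninitialized shift registers, a lock stands in for the non-reentrant VI, and a Python list stands in for the string indicator. The one-file-per-day rotation is a hypothetical scheme chosen for the sketch:

```python
import datetime
import os
import tempfile
import threading

# Module-level state plays the role of the AE's uninitialized shift registers;
# the lock plays the role of a non-reentrant VI serializing concurrent callers.
_lock = threading.Lock()
_state = {"log_dir": None, "console": None}


def logger(message: str = "", action: str = "Log", log_dir=None, console=None) -> None:
    """One entry point, two actions: "Init" stores settings, "Log" (default) writes a line."""
    with _lock:
        if action == "Init":
            _state["log_dir"] = log_dir
            _state["console"] = console  # optional list standing in for the string indicator
            return
        if action != "Log":
            raise ValueError(f"unknown action: {action!r}")
        if _state["log_dir"] is None:
            raise RuntimeError('call logger(action="Init", ...) first')
        now = datetime.datetime.now()
        line = f"{now:%Y-%m-%d %H:%M:%S} {message}"
        # Hypothetical rotation scheme: start a new file each day.
        path = os.path.join(_state["log_dir"], f"log_{now:%Y%m%d}.txt")
        with open(path, "a", encoding="utf-8") as log_file:
            log_file.write(line + "\n")
        if _state["console"] is not None:
            _state["console"].append(line)


if __name__ == "__main__":
    session_log = []
    logger(action="Init", log_dir=tempfile.mkdtemp(), console=session_log)
    logger("Acquisition started")  # "Log" is the default action
    print(session_log[-1])
```

Adding a third action or a fourth parameter here would show the same usability cost described above: every caller has to remember which arguments go with which action.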

-

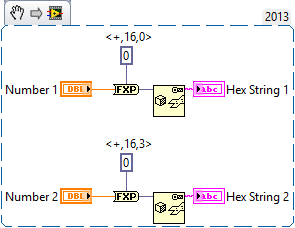

Correct. A few useful notes:

Your 16-bit fixed point number is stored in memory exactly like a 16-bit integer*.

Fixed point multiplication == Integer multiplication. The same algorithms apply to both.

The algorithms for integer multiplication don't depend on the value of the number. By extension, they don't depend on the representation of the number either. e.g. Multiplying by 2 is always equivalent to shifting the bits one place to the left (assuming no overflow).

(* LabVIEW actually pads fixed point numbers to 64 or 72 bits, but I digress)

Anyway, to illustrate:

| Bit Pattern (MSB first) | Representation | Value (decimal) |
|---|---|---|
| 0000 0010 | <+,8,8> (or equivalently, U8) | 2 |
| 0000 0010 | <+,8,7> | 1 |
| 0000 0010 | <+,8,6> | 0.5 |
| 0000 0100 | <+,8,8> (or equivalently, U8) | 4 |
| 0000 0100 | <+,8,7> | 2 |
| 0000 0100 | <+,8,6> | 1 |

That's borderline blasphemy... You can redeem yourself by getting LabVIEW involved:
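The same points in a few lines of Python, assuming the unsigned <+,word length,integer word length> notation used in the table: the stored integer is scaled by 2^(integer word length − word length), and a fixed-point multiply is a plain integer multiply whose fraction bits add up. The function names are hypothetical:

```python
def fx_value(raw: int, word_length: int, integer_length: int) -> float:
    """Value of an unsigned fixed-point number <+,word_length,integer_length>."""
    return raw * 2.0 ** (integer_length - word_length)


def fx_multiply(a_raw: int, a_frac_bits: int, b_raw: int, b_frac_bits: int):
    """Fixed-point multiply: integer multiply the raw bits; the fraction bits add."""
    return a_raw * b_raw, a_frac_bits + b_frac_bits


# Rows of the table: same bit pattern, different representation.
print(fx_value(0b0000_0010, 8, 8), fx_value(0b0000_0010, 8, 7), fx_value(0b0000_0010, 8, 6))
# 2.0 1.0 0.5

# 1.5 (raw 3, 1 fraction bit) * 0.5 (raw 2, 2 fraction bits) = raw 6 with 3 fraction bits
raw, frac_bits = fx_multiply(3, 1, 2, 2)
print(raw / 2 ** frac_bits)  # 0.75
```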

-

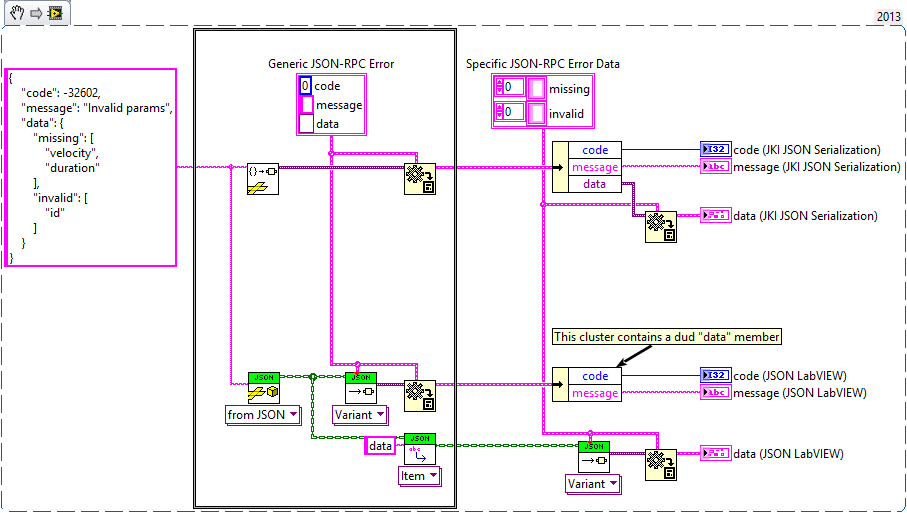

I'm using JSON LabVIEW with a 2013 project, but I don't need the latest and greatest library.

There is one feature I would love to see added, though: Support for non-explicit variant conversion. JKI's recently-open-sourced library handles this very nicely: The black frame represents a subVI boundary.

Several months ago I implemented a JSON-RPC comms library for my project, based on JSON LabVIEW. Because the "data" member can be of any (JSON-compatible) type, I had to expose the JSON Value.lvclass to the caller, who then also had to call Variant JSON.lvlib:Get as Variant.vi manually to extract the data. This creates an undesirable coupling between the caller and the JSON library.

It would be awesome if Variant JSON.lvlib:Get as Variant.vi could use the structure within the JSON data itself to build up arbitrary variants.
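To sketch what "using the structure within the JSON data itself" could mean, here's a Python function that walks a parsed JSON value and describes the LabVIEW-style type it could become. The mapping (integers to I64, other numbers to DBL, objects to clusters, mixed or empty arrays to arrays of Variant, null to Variant) is a hypothetical choice made for this sketch, not the behaviour of JSON LabVIEW or JKI's library:

```python
import json


def infer_type(value) -> str:
    """Describe the LabVIEW-like type a parsed JSON value could map to (hypothetical mapping)."""
    if value is None:
        return "Variant"          # null: nothing to infer from
    if isinstance(value, bool):   # check bool before int: bool is a subclass of int
        return "Boolean"
    if isinstance(value, int):
        return "I64"
    if isinstance(value, float):
        return "DBL"
    if isinstance(value, str):
        return "String"
    if isinstance(value, list):
        element_types = {infer_type(v) for v in value}
        inner = element_types.pop() if len(element_types) == 1 else "Variant"
        return f"1D Array of {inner}"
    if isinstance(value, dict):
        fields = ", ".join(f"{k}: {infer_type(v)}" for k, v in value.items())
        return f"Cluster{{{fields}}}"
    raise TypeError(f"not a parsed JSON value: {value!r}")


message = json.loads('{"jsonrpc": "2.0", "result": {"x": 1.5, "tags": ["a", "b"]}, "id": 1}')
print(infer_type(message["result"]))  # Cluster{x: DBL, tags: 1D Array of String}
```

A real implementation would build the variant itself rather than a type description, but the recursion over the JSON structure would look much the same.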