Search the Community

Showing results for tags 'database'.

-

There seem to be very few examples of how to get results out of the standard TestStand SQL database. There is a top-level table called UUT_RESULT, but it may not be obvious to a new user how to get the results of a test run from the ID (GUID) of its row in UUT_RESULT. To this end I give an example below in the hope it saves someone else the pain of surfing the internet trying to find one. The SQL script below, when run, will return all the results for a test. Obviously you will still need to read up on SQL, but it is a good starting point. There is a comment in the code on how to make sure only steps with results are returned. The SQL script is a lot more readable when coloured in a SQL editor.

    DECLARE @UGID [nvarchar](50)
    SET @UGID = 'C26760D9-3FE4-4500-99FA-6B4B26341563'

    SELECT
        RTRIM(UUT_RESULT.UUT_SERIAL_NUMBER) AS UUT_SERIAL_NUMBER,
        RTRIM(UUT_RESULT.START_DATE_TIME) AS START_DATE_TIME,
        RTRIM(UUT_RESULT.USER_LOGIN_NAME) AS USER_LOGIN_NAME,
        RTRIM(UUT_RESULT.STATION_ID) AS STATION_ID,
        RTRIM(UUT_RESULT.UUT_STATUS) AS UUT_STATUS,
        RTRIM(STEP_RESULT.ORDER_NUMBER) AS ORDER_NUMBER,
        RTRIM(STEP_RESULT.STEP_GROUP) AS STEP_GROUP,
        RTRIM(STEP_SEQCALL.SEQUENCE_NAME) AS SEQUENCE_NAME,
        RTRIM(STEP_RESULT.STEP_NAME) AS STEP_NAME,
        RTRIM(PROP_NUMERICLIMIT.COMP_OPERATOR) AS COMP_OPERATOR,
        RTRIM(STEP_RESULT.STEP_TYPE) AS STEP_TYPE,
        RTRIM(STEP_RESULT.STATUS) AS STATUS,
        RTRIM(PROP_RESULT.DATA) AS RESULT,
        RTRIM(PROP_NUMERICLIMIT.LOW_LIMIT) AS LOW_LIMIT,
        RTRIM(PROP_NUMERICLIMIT.HIGH_LIMIT) AS HIGH_LIMIT,
        RTRIM(STEP_RESULT.MODULE_TIME) AS MODULE_TIME,
        RTRIM(STEP_SEQCALL.SEQUENCE_FILE_PATH) AS SEQUENCE_FILE_PATH,
        RTRIM(PROP_NUMERICLIMIT.UNITS) AS UNITS,
        RTRIM(UUT_RESULT.EXECUTION_TIME) AS EXECUTION_TIME,
        RTRIM(STEP_RESULT.ERROR_CODE) AS ERROR_CODE,
        RTRIM(STEP_RESULT.ERROR_MESSAGE) AS ERROR_MESSAGE,
        RTRIM(UUT_RESULT.UUT_ERROR_CODE) AS UUT_ERROR_CODE,
        RTRIM(UUT_RESULT.UUT_ERROR_MESSAGE) AS UUT_ERROR_MESSAGE
    FROM UUT_RESULT
        INNER JOIN STEP_RESULT
            INNER JOIN STEP_SEQCALL
            ON STEP_RESULT.STEP_PARENT = STEP_SEQCALL.STEP_RESULT
        ON UUT_RESULT.ID = STEP_RESULT.UUT_RESULT
        LEFT OUTER /*INNER */ JOIN -- SWAP LEFT OUTER FOR INNER TO miss out steps without results.
            PROP_NUMERICLIMIT
            INNER JOIN PROP_RESULT
            ON PROP_NUMERICLIMIT.PROP_RESULT = PROP_RESULT.ID
        ON STEP_RESULT.ID = PROP_RESULT.STEP_RESULT
    WHERE (STEP_RESULT.UUT_RESULT = @UGID)
    ORDER BY STEP_RESULT.ORDER_NUMBER
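
The effect of swapping LEFT OUTER for INNER can be tried on a toy schema. The sketch below uses Python's built-in sqlite3 module with two heavily simplified, hypothetical stand-ins for STEP_RESULT and PROP_RESULT; it is not the real TestStand schema, and the rows and the 'uut-1' ID are made up for illustration.

```python
import sqlite3

# Toy stand-ins for STEP_RESULT and PROP_RESULT (assumed, simplified columns)
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE STEP_RESULT (ID INTEGER PRIMARY KEY, UUT_RESULT TEXT,
                              STEP_NAME TEXT, ORDER_NUMBER INTEGER);
    CREATE TABLE PROP_RESULT (ID INTEGER PRIMARY KEY, STEP_RESULT INTEGER,
                              DATA REAL);
    INSERT INTO STEP_RESULT VALUES (1, 'uut-1', 'Setup', 0),
                                   (2, 'uut-1', 'Measure Voltage', 1);
    INSERT INTO PROP_RESULT VALUES (10, 2, 4.98);
""")

QUERY = """
    SELECT STEP_RESULT.STEP_NAME, PROP_RESULT.DATA
    FROM STEP_RESULT
    {join} JOIN PROP_RESULT ON STEP_RESULT.ID = PROP_RESULT.STEP_RESULT
    WHERE STEP_RESULT.UUT_RESULT = ?
    ORDER BY STEP_RESULT.ORDER_NUMBER
"""


def steps_for(uut_id, join):
    """join is 'LEFT OUTER' or 'INNER'; the UUT ID is bound as a parameter."""
    return conn.execute(QUERY.format(join=join), (uut_id,)).fetchall()


all_steps = steps_for("uut-1", "LEFT OUTER")  # keeps steps that have no result row
measured = steps_for("uut-1", "INNER")        # drops steps that have no result row
```

With the left outer join, the 'Setup' step comes back with an empty result column; with the inner join it disappears from the output altogether.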

-

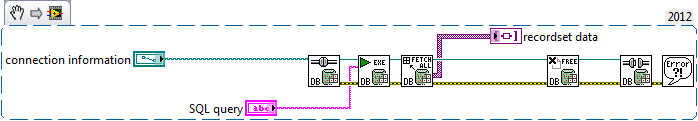

Hi, I am connecting to an SQL server running 10.5. Using the LabVIEW Database Connectivity Toolkit and/or TestStand's Database Viewer, I can successfully open a connection and run a simple query which returns the proper records. With a more advanced query, no records are returned and the properties show that the state is closed. Copying and pasting this query into Microsoft SQL Server Management Studio successfully fetches the correct records. Running the same query in Python using pyodbc also returns the correct record. The VI is straightforward; I attached the snippet. My first thought is that the string contains some byte codes that cause the query to be interpreted wrongly by NI's tools. Does anybody have experience as to why a query works in other environments but not in NI's tools? Again, a simple query did work, but a more complex one did not. Thanks!
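
One way to test the "stray bytes" guess is to scan the query text for characters that look like ordinary spaces or are invisible. The helper below is only a sketch; the function name and the sample query are made up for illustration, and finding nothing would not rule out other causes.

```python
import unicodedata


def suspicious_chars(query):
    """Return (index, repr, Unicode name) for control or non-ASCII characters."""
    found = []
    for i, ch in enumerate(query):
        if ch in " \t\r\n":
            continue  # ordinary whitespace is expected in SQL text
        if ord(ch) < 32 or ord(ch) > 126:
            found.append((i, repr(ch), unicodedata.name(ch, "UNKNOWN")))
    return found


# Hypothetical query with a non-breaking space pasted in after SELECT
query = "SELECT\u00a0* FROM results WHERE id = 1"
report = suspicious_chars(query)
clean_report = suspicious_chars("SELECT * FROM results WHERE id = 1")
```

Running the same check on the string exactly as it leaves the LabVIEW string constant (for example, saved to a file first) would show whether the two environments really receive the same text.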

-

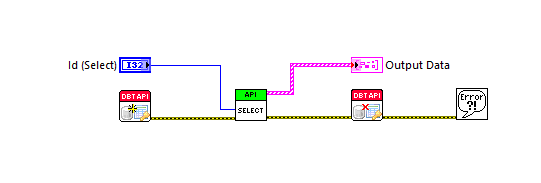

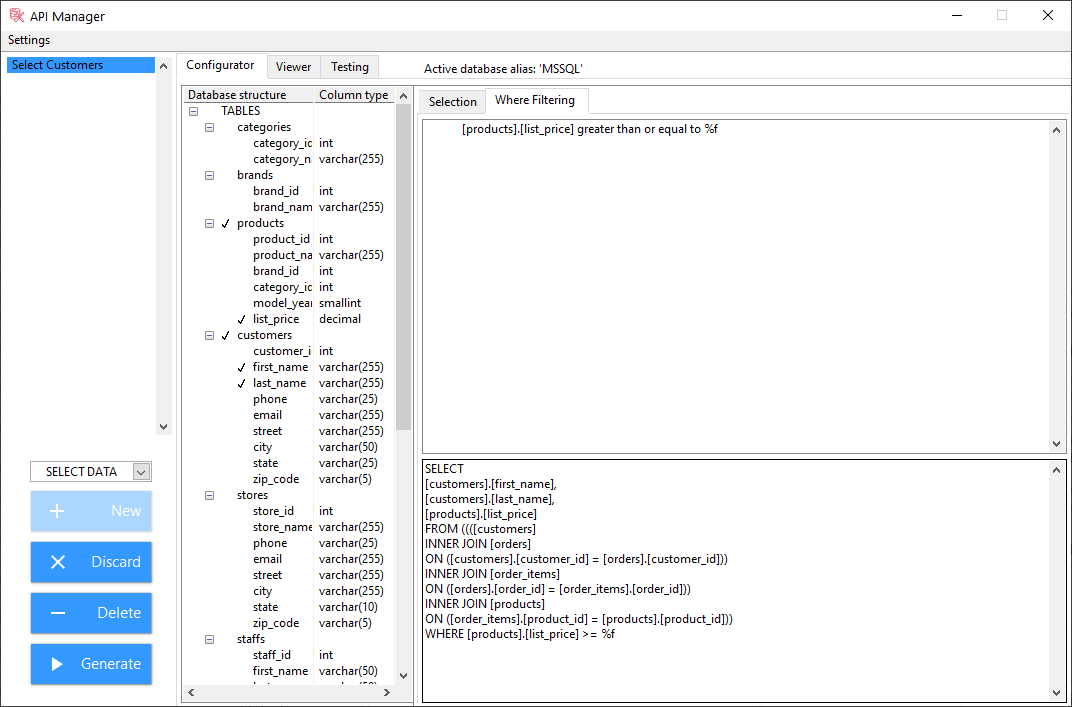

Dear Community, let me present our new ANV Database Toolkit, which has recently been released at vipm.io. A short introduction to the toolkit is posted at this link; it also describes the steps needed in order to use the toolkit.

ANV Database Toolkit helps developers design a LabVIEW API for querying various databases (MS SQL, MySQL, SQLite, Access). It lets you create VIs that can be used as an API with the help of a graphical user interface. When these VIs are used, the toolkit handles the connection to the database, relieving developers of this burden in their applications.

It has the following features:

- Simplifies handling of databases in LabVIEW projects
- Allows API VIs for databases to be created graphically
- Supports Read, Write, Update and Delete queries
- Supports various database types (MS SQL, MySQL, SQLite, Access)

The overall idea is that a developer can create a set of high-level API VIs for queries using the graphical user interface, without actually writing SQL queries. Those API VIs are used in the application and handle database communication in the background. Moreover, a query can be applied to any of the supported database types; it is a matter of selecting the database type. Changing the target database does not require changes in the API VI which executes the query.

After installation of the toolkit, a sample project is available which shows what the toolkit can do when executing different types of queries. Note that in order to install the toolkit, VI Package Manager must be launched with Administrator privileges.

This toolkit is paid, and the price is available on quotation. There is a 30-day trial period during which you can try out the toolkit and decide whether it is helpful for your needs (we hope it will be). In case of any feedback, ideas or issues, please do not hesitate to contact me directly here, at vipm.io, or by e-mail at info@anv-tech.com.

-

TestStand Version(s) Used: 2010 thru 2016
Windows (7 & 10)
Database: MS SQL Server (v?)

Note: The database connection I'm referring to is what's configured in "Configure > Result Processing" (or the equivalent location in older versions).

Based on some issues we've been having with nearly all of our TestStand-based production testers, I'm assuming that TestStand opens the configured database connection when the sequence is run, and maintains that same connection for all subsequent UUTs tested until the sequence is stopped/terminated/aborted. However, I'm not sure of this and would like someone to either confirm or correct this assumption.

The problem we're having is that:

- Nearly all of our TestStand-based production testers have intermittently been losing their database connections, returning an error (usually after the PASS/FAIL banner). I'm not sure if it's a TestStand issue or an issue with the database itself.
- The operator, seeing and only caring about whether the unit passed or failed, often ignores the error message and soldiers on, mostly ignoring every error message that pops up.
- Testers at the next higher assembly that look for a passed record of the sub-assembly's serial number in the database will now fail their test because they can't find one.
- We've tried asking the operators to either let us know when the error occurs, re-test the UUT, or restart TestStand (usually the option that works), but it's often forgotten or ignored.

The operators do not stop the test sequence when they go home for the evening/weekend/etc., so TestStand is normally left waiting for them to enter the next serial number of the device to test. I'm assuming that the connection to the database is still open during this time. If so, it's almost as though MS SQL has been configured to terminate idle connections, or something happens with the SQL Server and the connection isn't properly closed or re-established, etc.

Our LabVIEW-based testers don't appear to have this problem unless there really is an issue with the database server. The majority of these testers, I believe, open > write > close their database connections at the completion of a unit test.

I'm currently looking into writing my own routine, to be called in the "Log to Database" callback, which will open > write > close the database connection. But I wanted to check if anyone more knowledgeable had any insight before I spend time on something that may have an easy fix. Thanks all!

-

Hello all! I've recently been looking for a better way to store time series data. The traditional solutions I've seen for this are either "SQL DB with BLOBs" or "SQL DB with file paths and TDMS or other binary file type on disk somewhere". I did discover a few interesting time series (as opposed to hierarchical) databases in my googling. The main ones I came up with are:

- daq.io - Cloud based, developed explicitly for LabVIEW
- InfluxDB - Open source, runs locally on Linux. Basic LV interface on GitHub
- openTSDB
- graphite

I haven't delved too much into any of them, especially openTSDB and graphite, but was wondering if anyone had any insights or experience with them. Thanks! Drew

-

Background: I've been using LabVIEW for a few years for automation testing tasks and until recently have been saving my data to "[DescriptorA]\[DescriptorB]\[test_info].csv" files. A few months ago, a friend turned me on to the concept of relational databases. I've been really impressed by their response times and am reworking my code, following the examples with the Database Connectivity Toolkit (DCT), to use "[test_info].mdb" with the Microsoft Jet OLE DB provider. However, I'm beginning to see the limitations of the DCT, namely:

- No support for auto-incrementing primary keys
- No support for foreign keys
- Difficult to program stored procedures

and I'm sure a few more that I don't know yet. Now I've switched over to architecting my database in MySQL Workbench. Suffice to say I'm a bit out of my depth and have a few questions that I haven't seen covered in tutorials.

Questions (General):

- Using Microsoft Jet OLE DB I made a connection string "Data Source= C:\[Database]\[databasename.mdb]" in a .UDL file. However, the examples I've seen for connecting to MySQL databases use IP addresses and ports. Is a MySQL database still a file? If not, how do I put it on my networked server \\[servername\Database\[file]? If so, what file extensions exist for databases and what is the implication of each extension? I know of .mdb, but are there others I could/should be using (as with .csv vs .txt)?
- My peers, who have more work experience than me but no experience with databases, espouse a 2 GB limit on all files (I believe from the era of FAT16 disks). My current Jet database is about 200 MB in size, so 2 GB will likely never happen, but I'm curious: do file size limits still apply to database files? If so, how does one have the giant databases that support major websites?

Questions (LabVIEW Specific):

- I can install my [MainTestingVi.exe], which accesses the Jet database, on a Windows 10 computer that is fresh out of the box. When I switch over to a MySQL database, are there any additional tools that I'll need to install as well?

-

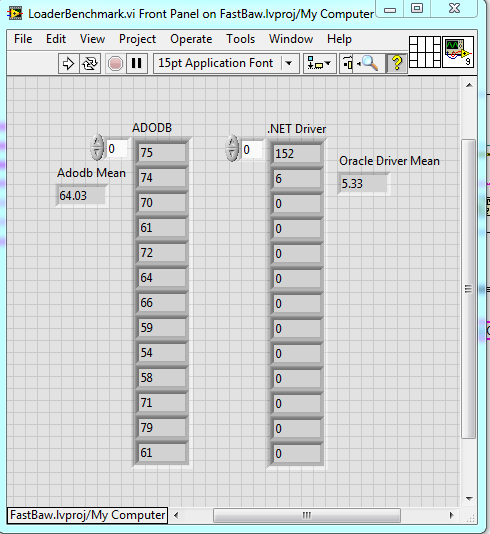

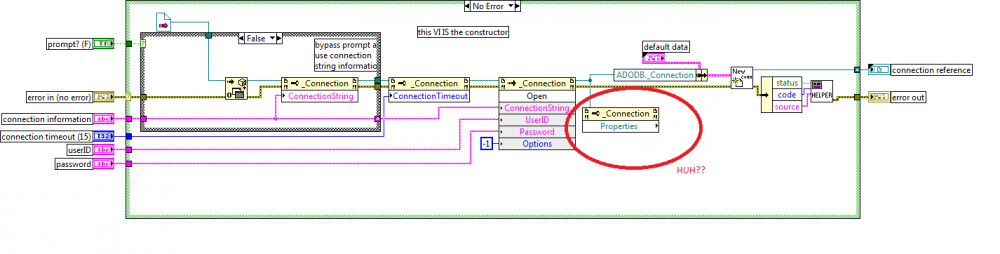

I think I have found a fundamental issue with the DB Toolkit Open connection. It seems not to use connection pooling correctly. The reason I believe it's an issue with LabVIEW and ADODB ActiveX specifically is that the problem does not manifest itself using the ADODB driver in C#. This is better shown with examples. All I am doing in these examples is opening and closing connections and benchmarking the connection open time.

ADODB and Oracle driver in LabVIEW.

ADODB in C#:

    using System;
    using System.Diagnostics;

    namespace TestAdodbOpenTime
    {
        class Program
        {
            static void Main(string[] args)
            {
                for (int i = 0; i < 30; i++)
                {
                    ADODB.Connection cn = new ADODB.Connection();
                    // Tick count (ms) just before opening the connection
                    int count = Environment.TickCount;
                    cn.Open("Provider=OraOLEDB.Oracle;Data Source=FASTBAW;Extended Properties=PLSQLRSet=1;Pooling=true;", "USERID", "PASSWORD", -1);
                    cn.Close();
                    // Elapsed time covers both Open and Close
                    int elapsedTime = Environment.TickCount - count;
                    Debug.WriteLine("RunTime " + elapsedTime);
                }
            }
        }
    }

Output:

    RunTime 203
    RunTime 0
    RunTime 0
    RunTime 0
    RunTime 0
    RunTime 0
    RunTime 0
    RunTime 0
    RunTime 0

Notice that the times align nicely between the LabVIEW code leveraging the .NET driver and the C# code using ADODB. The first connection takes a while to open, then connection pooling takes over and the connect time is 0. Now cue the LabVIEW ActiveX implementation: every connection open time is pretty crummy and very sporadic.

One thing I found out by accident when troubleshooting: if I add a property node on the block diagram where I open a connection, and I don't close the reference, my subsequent connect times are WAY faster (between 1 and 3 ms). That is what leads me to believe this may be a bug in whatever LabVIEW does to interface with ActiveX. Has anyone seen issues like this before, or have any idea of where I can look to help me avoid wrapping up the driver myself?
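
For comparison, the same kind of benchmark can be sketched with a high-resolution timer that stops before the connection is closed. Note that Environment.TickCount in the C# loop typically advances in steps of roughly 10 to 16 ms, so a reading of 0 means "faster than one tick" rather than literally zero. The Python below uses SQLite as a stand-in driver; the `time_opens` helper is hypothetical and says nothing about Oracle or ADODB timings.

```python
import sqlite3
import time


def time_opens(connect, count=30):
    """Return the open time of each connection, in milliseconds."""
    timings_ms = []
    for _ in range(count):
        start = time.perf_counter()
        conn = connect()
        elapsed = time.perf_counter() - start  # stop the clock before closing
        conn.close()
        timings_ms.append(elapsed * 1000.0)
    return timings_ms


# Stand-in connection factory; a real test would open the actual data source
timings = time_opens(lambda: sqlite3.connect(":memory:"), count=5)
```

Passing in a different connection factory keeps the measurement loop identical across drivers, which makes the numbers easier to compare.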

-

I am running calls to various stored procedures in parallel, each with its own connection refnum. A few of these calls can take a while to execute from time to time. In critical parts of my application I would like Cmd Execute.vi to be reentrant. Generally I handle this by making a copy of the NI library and namespacing my own version. I can then make a reentrant copy of the VI I need, save it in my own library, and commit it to version control so everyone working on the project has it. But the library is password protected, so even a copy of it stays locked. I can't do a "save as" on the VIs that I need and make a reentrant copy, nor can I add any new VIs to the library. Does anyone have any suggestions? I have resorted to taking NI's library, including it inside my own library, then basically rewriting the VIs I need by copying the contents of the block diagram of the VI I want to "save as" and pasting them into another VI.

-

I have been getting some hard crashes in my built application and I have a strange feeling (although it's just a guess) that it is related to using the database toolkit in a pool of workers that are launched by the Start Asynchronous Call node. Their job is to sit there and monitor a directory, then parse data files and throw their contents at the database through a stored procedure call. All my hardware comms (2 instruments) use SCPI commands through VISA over GPIB and TCP, so I think the risk that those are causing crashes is relatively low. ActiveX inside a bunch of parallel threads seems far more risky, even though as far as I can tell ADODB should be thread safe, and each thread manages its own, unshared connection. These crashes are happening about once a day on multiple test stations. I have sent the crash dumps to NI but am waiting to hear back. Right now I am grasping at straws, because when I look at the dump file it points me to function calls in the lvrt DLL, which does nothing for me. I am mostly just looking for any debugging suggestions or direction to get this resolved more efficiently than by disabling code one loop at a time. I'm also curious if anyone has seen something similar. For the time being, I have reduced my number of workers to 1 in case it is a thread safety issue. I have also considered getting rid of the DB toolkit and leveraging .NET, even though I think that is just a wrapper around the same calls. Looking inside the database toolkit VIs alone scares me. FWIW I am using LabVIEW 2013 in this application. Thanks!

-

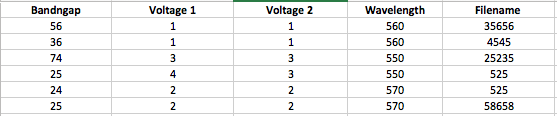

Hello LabVIEW Users, I have thousands of CSV data files that I work with. The only way I can tell them apart is by putting their characteristics in the file name, which makes the names too long, and Windows doesn't like long file names. So I want to build a database for all my files. I am in the preliminary stage of building it (I have attached the file and some of you may have seen it before). What I want to do is have all my files in the database with random names and list them based on their characteristics. I want to do that in my application in place of the 'file' box, so that I can double-click on a file in the application to make it the active file. Based on the parameters listed in the database, I want to filter the files to find any specific one. How the interface of the database should look is shown below (image). It doesn't have to be a real database, just a directory application. I am trying to make it without the database toolkit. If anyone can help me out or guide me in the right direction, that would be great. Thanks. Multicolumn list box v1.5.vi

-

What toolkits do people use for accessing databases? I’ve been using SQLite a lot, but now need to talk to a proper database server (MySQL or Postgres). I’ve used the NI Database Connectivity Toolkit in the (far) past, but I know there are other options, such as using ADO.NET directly. What do people use for database connectivity? What would you recommend? — James

-

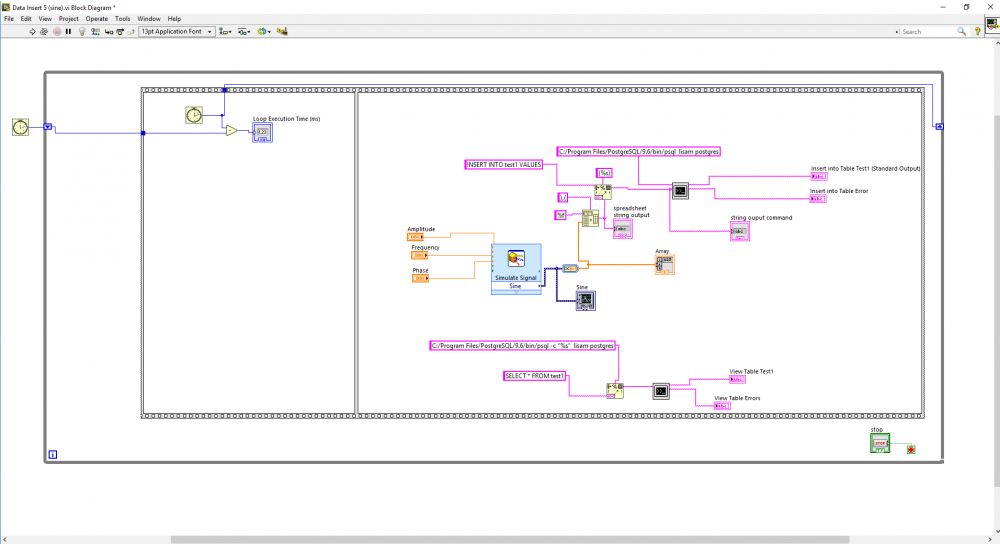

Hi all. I recently got the Database Connectivity Toolkit (DBCT) on LabVIEW 2016 and I'm trying to insert multiple rows into a database. Using a sine signal input, I attempted to insert 50 rows in one iteration, but every method I tried returned 50 values in one row. Using the end-of-line constant and Array To Spreadsheet String returned what looked like 50 rows, but when I queried "SELECT COUNT(*) FROM.." the number of rows was equal to the number of iterations. On another forum post, someone suggested it is not possible to insert multiple rows using the DBCT. Can anyone confirm this? I have written some code which allows me to insert 3 rows in one iteration, under a single connection. However, this method is very tedious and wouldn't work for large amounts of data. Can anyone think of a better way to do this? Thanks in advance, Lisa
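
Outside the DBCT, the usual pattern is one parameterized INSERT executed once per row inside a single transaction. The sketch below shows that pattern with Python's sqlite3 module; the table and column names are made up, and it makes no claim about what the DBCT's Insert Data VI does internally.

```python
import math
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (idx INTEGER, value REAL)")

# 50 points of one sine period, standing in for the acquired signal
rows = [(i, math.sin(2 * math.pi * i / 50)) for i in range(50)]

# One parameterized statement, run once per row, committed as one transaction
with conn:
    conn.executemany("INSERT INTO samples (idx, value) VALUES (?, ?)", rows)

row_count = conn.execute("SELECT COUNT(*) FROM samples").fetchone()[0]
```

Many servers (PostgreSQL and MySQL among them) also accept a single INSERT statement with several parenthesised row tuples after VALUES, which can be built as one SQL string; whether a given toolkit passes such a string through unchanged would need to be checked.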

-

I am looking to determine the time it takes to insert each batch (500 rows) of data into a database, i.e. the loop execution time. See the block diagram below. I've placed a timer outside the while loop and subtracted it from the timer inside the loop, using shift registers to carry forward the start time. I placed the timer in a sequence structure to make sure it starts before the code runs. When I used this method on a simple example (a while loop with a wait function), the loop execution time returned the wait time as expected. But in the database application, the loop execution time value continues to increase. Where am I going wrong? Thanks in advance, Lisa
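
Without seeing the block diagram this is only a guess, but an ever-growing value is what you get if the start time carried in the shift register is never replaced with the current time, so each reading is measured from the very first iteration. The Python sketch below shows both readings side by side; `insert_batch` is a hypothetical placeholder for the database write.

```python
import time


def timed_batches(batches, insert_batch):
    """Return (per_batch_seconds, cumulative_seconds) lists, one entry per batch."""
    per_batch, cumulative = [], []
    loop_start = time.perf_counter()  # timer read once, outside the loop
    previous = loop_start             # plays the role of the shift register
    for batch in batches:
        insert_batch(batch)
        now = time.perf_counter()
        cumulative.append(now - loop_start)  # keeps growing every iteration
        per_batch.append(now - previous)     # time spent in this iteration only
        previous = now                       # feed the new time into the register
    return per_batch, cumulative


# A short sleep stands in for inserting one batch of 500 rows
per_batch, cumulative = timed_batches(range(5), lambda batch: time.sleep(0.01))
```

If the per-iteration figure itself still climbs after this change, the inserts really are getting slower, which would point at the database side rather than the timing code.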

-

I just started developing a LabVIEW 2013 driver for MongoDB. Currently working with JSON only. https://github.com/3esmit/LabVIEW-MongoDB

Name: LabVIEW MongoDB
Category: Database
LabVIEW Version: 2013
License Type: BSD

MongoDB is a NoSQL database. It stores documents in collections instead of rows in tables. Two documents in a collection don't need to have the same structure, and a document can store another document or an array of documents. Each document is persisted with an "_id" containing a special type called ObjectID.

This lvclass works with the .NET driver DLLs, version 1.8.3.9. Later versions may not be usable (I couldn't make them work in any way), since LabVIEW 2013 ignores important functions from later drivers, perhaps because it does not support some .NET feature they use. The 1.8.3.9 driver has a bug in listing collections that is worked around in the lvclass method.

Features:

- Daemon Start/Shutdown
- Insert Document
- Find by ObjectID
- Aggregate
- List databases & collections
- Create Collection
- Remove by ObjectID
- Find by Regex
- Find By Equal
- Get Document Part
- Update Set
- Insert Variant

Planned features:

- Find Variant
- Aggregate to Variant
- LVOOP ObjectID
- LVOOP BSON
- LVOOP Query
- LVOOP Aggregate

-

Hi Everybody, I'm Zsolt Szabo. I have been reading the discussions for a very long time and they have been very useful to me. Thanks everybody for the info. BUT: I now have a problem that I can't solve. I have to upload files (xls, pdf) into an MSSQL database and query them back. I have to store these as BLOBs. How can I do it? Unfortunately I didn't find anything. Please help me! Many thanks in advance!
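
The general shape of a BLOB round trip is: read the file as raw bytes, bind those bytes as a query parameter, and write the bytes back out on retrieval. The sketch below shows this with Python's sqlite3 module and a made-up `documents` table; on SQL Server the column would typically be VARBINARY(MAX), and the driver calls would differ.

```python
import os
import sqlite3
import tempfile

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE documents (name TEXT PRIMARY KEY, content BLOB)")


def store_file(path):
    """Read the file as raw bytes and bind them as a parameter."""
    with open(path, "rb") as f:
        data = f.read()
    with conn:
        conn.execute(
            "INSERT INTO documents (name, content) VALUES (?, ?)",
            (os.path.basename(path), data),
        )


def fetch_file(name):
    """Return the stored bytes, or None if the name is unknown."""
    row = conn.execute(
        "SELECT content FROM documents WHERE name = ?", (name,)
    ).fetchone()
    return None if row is None else bytes(row[0])


# Hypothetical file with arbitrary binary content standing in for a PDF
source = os.path.join(tempfile.mkdtemp(), "report.pdf")
payload = b"%PDF-1.4\n" + bytes(range(256))
with open(source, "wb") as f:
    f.write(payload)

store_file(source)
restored = fetch_file("report.pdf")
```

Binding the bytes as a parameter, rather than pasting them into the SQL text, avoids any escaping or encoding of the binary content.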

-

Version 1.0.1.19

1,717 downloads

This is a package containing LabVIEW bindings to the client library of the PostgreSQL database server (libpq). The DLL version 9.3.2 and its dependencies are included in the package. These DLLs are taken from a binary distribution on the Postgres website and are thread-safe (i.e. the call to PQisthreadsafe() returns 1). At the moment the DLLs are 32-bit only. The VIs are saved in LabVIEW 2009, so this package works out of the box if you have 32-bit LabVIEW 2009 or higher on any supported Windows operating system. Because this is obviously a derived work of PostgreSQL, it is licensed under the PostgreSQL license.

A few words regarding the documentation: this package is meant for developers who know how to use libpq. You have to read and understand the excellent documentation for the library. Nonetheless, all VIs contain extracts of that documentation as their help text.

What's coming next?

- adding support for 64-bit
- adding support for Linux (anybody out there to volunteer for testing?)
- adding support for Mac (anybody out there to volunteer for testing?)

-

Name: libpq
Submitter: SDietrich
Submitted: 01 Mar 2014
Category: Database & File IO
LabVIEW Version: 2009
License Type: Other (included with download)

-

Hello, my name is Fedly Chan. I am currently facing a problem when I try to read Interbase data on Windows 7 (acting as the server). I created a program to access Interbase data on the server with LabVIEW Ver. 4. The program can display the accessed data and can also manipulate it (create, delete, update). The server is running Windows XP, and the program also accesses the Interbase data from a computer that runs Windows XP. I plan to change the server to Windows 7. I tried to access the Interbase data from the server (Windows 7), but an error message appears: "100 CINLoad dll setup error." It seems that I need to install a version of the SQL Toolkit on the server that works under Windows 7. I tried to install the SQL Toolkit (the old version that I have) on Windows 7, but it did not work; it said this was because of the different environment. Frankly, when I checked the price of the SQL Toolkit, it was quite expensive. Can somebody help me with this problem? Many thanks, Fedly Chan. (jsum0702@gmail.com)

-

Hi all, I have an error that doesn't affect the functionality of my program, so for the moment I'm just ignoring it, but I would still like to know what causes it and how I can solve it, or whether it's safe to ignore. I have a program that runs some tests and stores the data in some clusters, and when the job is done it writes everything to a database. Then it asks if you want to start another job or end the program. Up to there everything works perfectly. But if you choose to start another job without closing the program, after you complete it there's an error at the end of the database writing. The error is this:

Error 505 occurred at NI_Database_API.lvlib:DB Tools Close Connection.vi->Escritura DB.vi->Interfaz Principal.vi
Possible Reason: Open Command Object.
ADO Error: 0x000001F9

As you can see in the attached image, in my database writing subVI I only open the connection, use two "Insert Data" blocks and then close the connection. I don't have any command references to pass to the Free Object block, so I don't know what's wrong. Thanks in advance for any help.

-

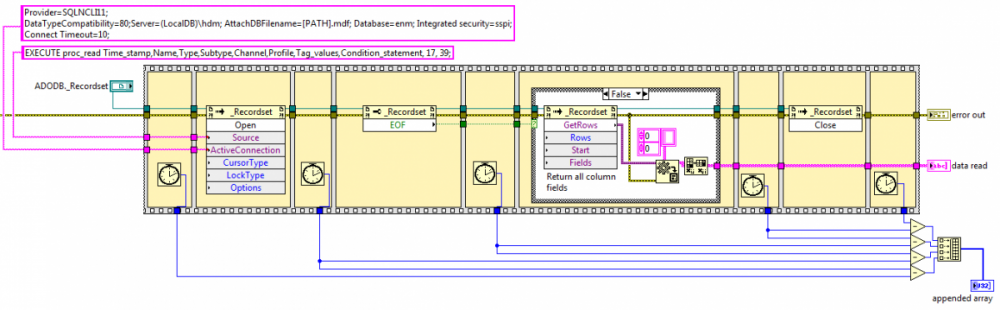

Dear LabVIEW enthusiasts, Our application uses .NET methods to access a SQL Server Express LocalDB database. It works, since we do manage to get the records we want. But on a regular basis, the Open method of the Recordset freezes for several seconds instead of executing within a few milliseconds like it usually does. I was able to determine that it was the Open method (and not the GetRows or the Close methods) by measuring the elapsed time as shown on the attached screenshot. Do you know if there is a known issue? I do have the Database Connectivity Toolkit but I have never used it, is there any chance this would fix my issue? Thank you

-

Attached is the browser program I am working on. I am trying to cycle through the database elements that are displayed in the cluster by means of a boolean button. Any help would be hugely appreciated Browser.vi

-

I have a VI that reads an image directory, and the user can cycle through the images with next and previous buttons. The image directory is stored in the SQL database. How would I cycle through the images and, as the user does so, display the information stored in the database for each image in sequence? Cycle Through Images.vi
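
One common approach is to read all rows once, keep an index into that list, and move the index with wrap-around on each button press. The Python sketch below illustrates the idea with a made-up `images` table; in LabVIEW the index would live in a shift register and the rows in an array of clusters.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE images (path TEXT, description TEXT)")
conn.executemany(
    "INSERT INTO images VALUES (?, ?)",
    [("img/a.png", "Front view"), ("img/b.png", "Side view"), ("img/c.png", "Top view")],
)


class ImageBrowser:
    """Holds the query result and an index that wraps at both ends."""

    def __init__(self, connection):
        self.rows = connection.execute(
            "SELECT path, description FROM images ORDER BY path"
        ).fetchall()
        self.index = 0

    def current(self):
        return self.rows[self.index]

    def next(self):
        self.index = (self.index + 1) % len(self.rows)
        return self.current()

    def previous(self):
        self.index = (self.index - 1) % len(self.rows)
        return self.current()


browser = ImageBrowser(conn)
# Simulate pressing Previous once, then Next twice
visited = [browser.current(), browser.previous(), browser.next(), browser.next()]
```

The modulo keeps the index inside the list, so pressing Previous on the first image lands on the last one instead of producing an out-of-range index.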

-

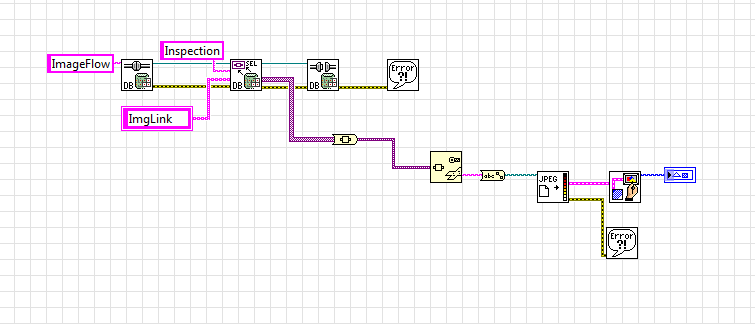

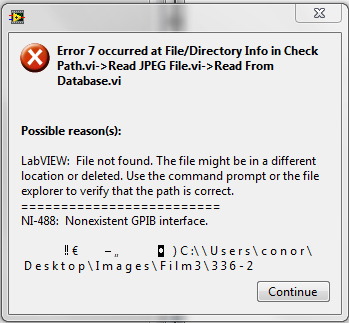

LabVIEW 2013
SQL Server 2012

I would like to read an image path from a SQL database and display the image on the front panel in LabVIEW. The database side works OK, but when I run the VI I get an error saying "Error 7: file not found". I have checked the directory and the path is correct. I have attached a screenshot of the VI and the error.
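
One cause worth ruling out, purely as a guess since the path column's type is not stated: fixed-width CHAR columns pad their values with trailing spaces, so a path that looks correct on screen may not match the file on disk. The sketch below, with a hypothetical helper name and a temporary file, shows how to make such padding visible and test a trimmed copy.

```python
import os
import tempfile


def check_image_path(raw_path):
    """Report what the database returned and whether a trimmed copy exists."""
    cleaned = raw_path.strip()
    return {
        "raw": repr(raw_path),            # repr makes padding visible
        "cleaned": cleaned,
        "was_padded": cleaned != raw_path,
        "exists": os.path.isfile(cleaned),
    }


# Hypothetical value as it might come back from a padded CHAR(n) column
image_file = os.path.join(tempfile.mkdtemp(), "part.png")
open(image_file, "wb").close()
report = check_image_path(image_file + "     ")
```

In LabVIEW the equivalent check would be to trim whitespace from the string before converting it to a path, or to apply RTRIM in the query itself.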