Everything posted by ensegre

-

Uhm, maybe _IOC_TYPECHECK(v4l2_format) involves the length of some pointer buried within the v4l2_format structures, or maybe it comes from elsewhere (the /usr/include/asm-generic/ioctl.h #defines can be overridden). What I meant is that it's involved enough just to see where it starts; for the rest I rely on the proficiency of the v4l people.

-

Thanks for reinforcing, Rolf. In this specific case I know that it is a constant because these numbers keep being the same on different platforms, on different instances of the application, in compiled C snippets as well as in LV CLN calls. There is no indication of pointers depending on relocation. Tracking the macro definition through the involved .h's, it boils down to a constant, most likely for the reason Rolf says.

Pedantically: _IOWR() is defined in /usr/include/asm-generic/ioctl.h as

    #define _IOWR(type,nr,size) _IOC(_IOC_READ|_IOC_WRITE,(type),(nr),(_IOC_TYPECHECK(size)))

_IOC() is

    #define _IOC(dir,type,nr,size) \
        (((dir) << _IOC_DIRSHIFT) | \
        ((type) << _IOC_TYPESHIFT) | \
        ((nr) << _IOC_NRSHIFT) | \
        ((size) << _IOC_SIZESHIFT))

_IOC_TYPECHECK is

    #define _IOC_TYPECHECK(t) (sizeof(t))

and the various IOC_ constants are

    #define _IOC_NRSHIFT 0
    #define _IOC_TYPESHIFT (_IOC_NRSHIFT+_IOC_NRBITS)
    #define _IOC_SIZESHIFT (_IOC_TYPESHIFT+_IOC_TYPEBITS)
    #define _IOC_DIRSHIFT (_IOC_SIZESHIFT+_IOC_SIZEBITS)
    #define _IOC_NRBITS 8
    #define _IOC_TYPEBITS 8

and so on. (Wasn't there the story that someone writes a lot of C so that I don't have to?)

[Show of hands: all this was for updating my https://lavag.org/files/file/232-lvvideo4linux/ which works, proof of the pudding. I resolved to tackle the typedef constants issue with a script along the lines of what I quoted above.]

-

I succeeded in adapting the sources to work both on 32bit and 64bit. Package available at the repository linked above.

-

I'd say it's a constant, whose value is computed by a macro, which is in turn defined in another .h, and so on telescopically. That results in different values, depending on bitness and architecture. But whatever, my point was about using the ring instead of an enum with such odd values. Ah, I'm not blaming anyone for wandering off... the discussion is instructive to me.

-

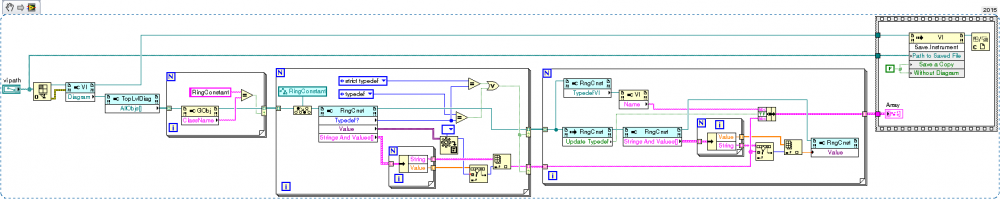

Indeed, and this is what I'm tentatively coming up with:

My use case is that of passing to a certain CLN the value VIDIOC_S_FMT, which evaluates to 3234616837 with the 32bit library and to 3234878981 with the 64bit one (at least in the 1.0.1-1 version of the library), as #defined in some obscure .h file, which I parse in order to generate the appropriate typedef. When I rebuild the typedef for the new platform, I need to make sure that the BD constant which represented my VIDIOC_S_FMT is updated to the correct item with the same label in the new representation (as would happen if it were a changed enum). That is a different use case than the one you suggest above for combos.

I would say fortunately not, because they are controls. Unlike ring constants, controls are true typedefs. OK, I could have non-typedefd BD cluster constants which contain this type, and I should traverse all clusters, and, and, but let's not exaggerate.
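To see where the two numbers differ, here is a minimal Python sketch that packs and unpacks ioctl request codes following the _IOC() layout quoted elsewhere in this thread. It is only an illustration: _IOC_NRBITS and _IOC_TYPEBITS are the values quoted from asm-generic/ioctl.h, while the 14 size bits, the 2 direction bits and the read/write flag values are assumed to be the generic defaults (some architectures override them). The function names are made up for this example.

```python
# Field widths: NRBITS and TYPEBITS as quoted in the thread; SIZEBITS and
# DIRBITS are assumed to be the asm-generic defaults.
IOC_NRBITS, IOC_TYPEBITS, IOC_SIZEBITS, IOC_DIRBITS = 8, 8, 14, 2

IOC_NRSHIFT = 0
IOC_TYPESHIFT = IOC_NRSHIFT + IOC_NRBITS
IOC_SIZESHIFT = IOC_TYPESHIFT + IOC_TYPEBITS
IOC_DIRSHIFT = IOC_SIZESHIFT + IOC_SIZEBITS

# Direction flags (assumed asm-generic values).
IOC_WRITE, IOC_READ = 1, 2


def ioc(direction, type_char, nr, size):
    """Pack the four fields into one request code, like the _IOC() macro."""
    return ((direction << IOC_DIRSHIFT)
            | (ord(type_char) << IOC_TYPESHIFT)
            | (nr << IOC_NRSHIFT)
            | (size << IOC_SIZESHIFT))


def iowr(type_char, nr, size):
    """Like _IOWR(), but taking the already computed sizeof() as a number."""
    return ioc(IOC_READ | IOC_WRITE, type_char, nr, size)


def decode(code):
    """Split a request code back into its four fields."""
    def field(shift, bits):
        return (code >> shift) & ((1 << bits) - 1)
    return {
        "dir": field(IOC_DIRSHIFT, IOC_DIRBITS),
        "size": field(IOC_SIZESHIFT, IOC_SIZEBITS),
        "type": chr(field(IOC_TYPESHIFT, IOC_TYPEBITS)),
        "nr": field(IOC_NRSHIFT, IOC_NRBITS),
    }


if __name__ == "__main__":
    # The two VIDIOC_S_FMT values quoted above (32bit and 64bit library).
    for value in (3234616837, 3234878981):
        print(value, decode(value))
```

Under these assumptions the two values decode to the same direction, type and number, and differ only in the size field (204 versus 208), which would be consistent with a sizeof() that depends on bitness.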

-

Hm, this is turning into a nice discussion about the relative merits and limitations of enums vs. rings, on which I agree all the way, but which is not my original problem. That was how to update "typedef" BD constants when their parent typedef is programmatically changed. Doing it in the IDE, prior to loading the involved VI set, is acceptable at this point. Combo strings suffer from the same problem btw, as stressed by the KB linked above.

Now, unless there is some magical XNode contraption, I think I would explore some VI scripting way: scan every VI of the hierarchy for typedefd constants, unlink them and relink them to their parents, resave the VI. It looks like it will be much easier than a bloated translation layer, which implies extra code for every appearance of any constant.

-

Kudoed that idea, and the duplicate one mentioned in the discussion, for what it helps. So yes, it turns out that presenting the typedefd ring constant on the BD as a typedef with a black ear is just a graphical mockery; on the BD, that object is treated as non-typedefd, "Because type definitions identify only the data type". Only enums carry their legal range and labels as part of their data type.

My use case is to interface with a C library, whose (many and sparse in value) #defines may change across versions, and certainly do across bitnesses. I already had in place a dynamic way to create all the typedef .ctls I needed; only, I realized that all was fine when the typedefs were used for subVI controls and indicators, but not for BD constants, which were static.

Now I'm scratching my head about whether I want to turn my generated typedefs into enums and then write a translation layer to obtain the nonsequential values, or what. Changing all my BD constants into dummy controls with a defined default value doesn't look very elegant to me...
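For what it's worth, the "enum plus translation layer" option could look like the following Python sketch: sequential enum items carry the labels, and a per-bitness lookup table supplies the sparse values. This is only an illustration of the idea, not the LabVIEW implementation; the names Request, NATIVE_VALUES, to_native and from_native are invented here, the VIDIOC_S_FMT numbers are the ones quoted elsewhere in this thread, and HYPOTHETICAL_REQUEST with its values is a made-up placeholder.

```python
from enum import IntEnum


class Request(IntEnum):
    """Sequential items, as a LabVIEW enum would store them."""
    VIDIOC_S_FMT = 0
    HYPOTHETICAL_REQUEST = 1  # placeholder label, not a real V4L2 request


# One table per library bitness. The VIDIOC_S_FMT values are those quoted in
# the thread; the placeholder values are invented for illustration only.
NATIVE_VALUES = {
    32: {Request.VIDIOC_S_FMT: 3234616837, Request.HYPOTHETICAL_REQUEST: 1001},
    64: {Request.VIDIOC_S_FMT: 3234878981, Request.HYPOTHETICAL_REQUEST: 2002},
}


def to_native(request, bitness):
    """Translate an enum item into the sparse value the C library expects."""
    return NATIVE_VALUES[bitness][request]


def from_native(value, bitness):
    """Translate a sparse value back into its enum item."""
    for request, native in NATIVE_VALUES[bitness].items():
        if native == value:
            return request
    raise ValueError(f"unknown request value {value} for {bitness}bit")
```

The label stays stable across platforms, and only the table has to be regenerated; the cost is the extra lookup at every place where a constant is used.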

-

Doh, by design:

http://forums.ni.com/t5/LabVIEW/Possible-Bug-Ring-Constant-Typedef-not-updating/m-p/2400754

http://digital.ni.com/public.nsf/allkb/46CC27C828DB4205862570920062C125

However enums allow only sequential values... turns out that I'll have to revise what I was doing, which was happily using a lot of ring typedefs.

-

I think I've run into a nasty little bug, or perhaps I fail to grasp the higher reason for this. Seen on LV 2014 and 15 on Linux. Can someone confirm, so that I can escalate to NI support?

Let's say I have a Text Ring (or Menu Ring) typedef, and use it as a constant somewhere on a BD, like in the attached. At some point I modify the typedef in any way, like changing the text or editing the numeric values, and Apply Changes. The change is reflected in the control and in the indicator bound to that typedef, but not in the constant. Why? The constant does eventually update, but only if it is turned into a control and back.

ConstantControlIndicator.vi TextRingTypedef.ctl

-

And the "slight trick" is just passing "clone.vi:590003" to Open VI Reference, with options 0, instead of a path. I'm puzzled about what's going on, too...

-

Retrieve Start Path from File Dialog before opening

ensegre replied to alleBarbieri's topic in User Interface

What I would usually do is to retrieve (or strip) the selected path on exit, and feed it to a FGV, or to the Browse Options/Start Path property of the control connected to the input, or something along these lines. This way I can handle multiple initial paths for different controls in my application. Yes, you could convert the Express VI to a standard VI and modify it, but I wouldn't bother to tamper with it.

-

You mean a typedefd one, I guess. I'm seeing that in LV15 all plain cluster BD constants turn into small icons. One could argue that, if it were, it would be a non-self-explanatory custom BD glyph. At that point, would a subVI with a custom small icon, providing the constant cluster as its output, be an acceptable workaround?

-

As perhaps others (i.e. Rolf) will be able to explain better than me, LuaVIEW IS conceived for both ways round.

-

"Format Into String" with collection of parameters

ensegre replied to Stobber's topic in LabVIEW General

Is some restriction of the format specifier syntax acceptable? Namely, do you need to support $ order? Do you need to output some argument multiple times? If the correspondence parameter -> formatted form is 1:1, a loop over an array of variants containing the arguments, plus an array of format strings, could perhaps do? Or an array of clusters (index of parameter, format string) to account for reshuffling and repetition, and then concatenate as suggested above?
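The array-of-clusters idea can be sketched in Python as follows, just to show the mechanics outside of LabVIEW: each (index of parameter, format string) pair formats one argument, and the pieces are concatenated in order. The function name format_collection and the sample data are made up for this example, and printf-style % formatting stands in for Format Into String.

```python
def format_collection(spec, args):
    """Format args according to a list of (parameter index, format string).

    Every pair formats exactly one argument; reshuffling and repetition are
    obtained simply by the order and the indices used in spec.
    """
    return "".join(fmt % (args[index],) for index, fmt in spec)


if __name__ == "__main__":
    args = [3.14159, "pump", 42]
    spec = [
        (1, "%s: "),         # parameter 1 first (reshuffled)
        (0, "%.2f bar, "),   # then parameter 0
        (2, "run %d, "),     # parameter 2 ...
        (2, "id %05d"),      # ... and parameter 2 again, formatted differently
    ]
    print(format_collection(spec, args))
```

With the sample data above this prints "pump: 3.14 bar, run 42, id 00042". The restriction is the one asked about: one argument per format string, no $ ordering inside a single specifier.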

-

As for text formatting utilities, GNU has fmt. Additionally, there is par. Perhaps you may consider wrapping them. AFAIR, they simply don't cope with multibyte characters (at best they treat them as independent bytes), but alas, neither does LV, really. But right, they count characters and have no notion of font metrics, which is what could be asked for in a UI.

-

(3 implies 1b or?) I think the answer is in part subjective (how much extra time would you spend on a diligent implementation?), and in part dictated by constraints you don't specify. Like: Does setting an unnecessary parameter cause an additional delay? Does it use up some communication bandwidth? How are the capabilities of the sensors queried, or otherwise known? Does that take time or bandwidth? Is it safer to always query, because a sensor may have been changed without the caller knowing it? Are there risks connected with trying to set an unsupported parameter? In 1b, is the error propagated elsewhere as an error, or is it just the sensor answering "sorry, I can't"? Does squelching the 1b errors incur the risk of neglecting other, real errors?

-

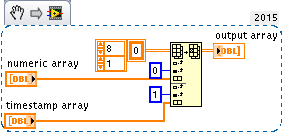

replace two 1D array into first and second 2D array

ensegre replied to Benyamin.KH20's topic in LabVIEW General

Replace Array Subset is your friend, probably. However, note that you cannot format one column of the output as numeric and the other as timestamp. Properties of array elements must be uniform. You may want to consider a 1D array of clusters instead.

-

I understand even less what you want to do. Maybe you'd do better to post your code in the thread, so that someone else may chime in and help review it. Or use a bottom-up approach: first submit a minimal example which clearly addresses the behavior you're missing, and only that, for the group to help with; add the rest later on in your private application. Also, please don't send VIs with the FP or BD saved as maximized, it can be annoying to some.

-

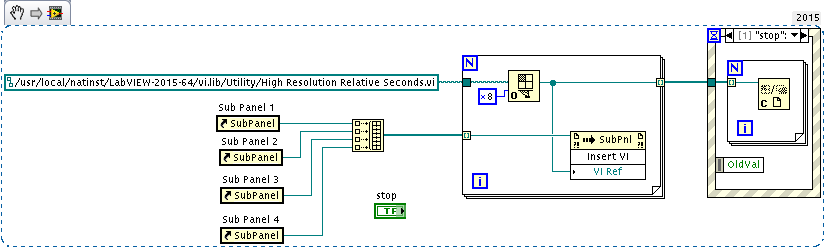

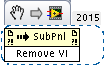

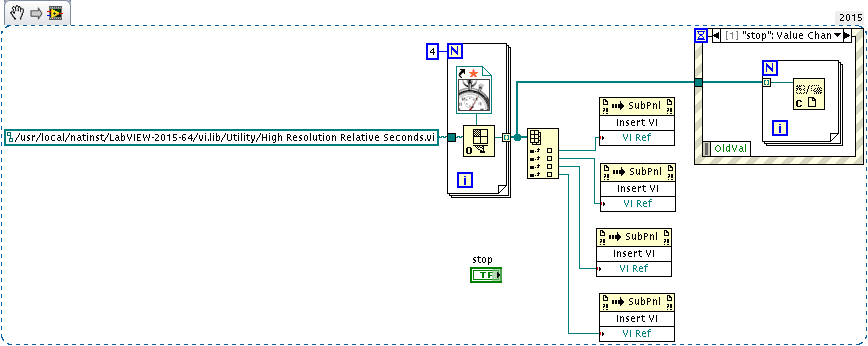

My snippet above dynamically loads four clones of the same [reentrant] VI (the high resolution timer, just as an example), opens them in each of the four subpanels, and closes them when you press the stop button, like I understood you asked originally. Here is a slightly more elegant variation of it, btw:

What do you want to do instead? A complete event-driven application with buttons for closing or reopening the clones in each of the subpanels? Left as an exercise, but the mechanics would be an event loop with Insert VI and Remove VI invoke nodes for each button pressed, I guess.

-

For reference, an example of this kind is in "Grab and Attributes Setup.vi" among the IMAQdx examples of the Example Finder. Specifically, this VI used to have some glitches in older releases of LV; I don't know whether they were solved in later versions, but anyway it should give you the idea of what Yair means. If you happen to have IMAQ and a webcam you can see it in action.

-

I have no experience with python classes, but see here: https://sourceforge.net/p/labpython/mailman/message/27990305/ (comment 1). This seems to be a problem others have complained about too, as you will find if you search in the fora and on the labpython list. My ugly workaround: globalize everything which is reported as undefined.
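A minimal sketch of what "globalize" means here, in plain Python. It assumes that the embedding runs the script with separate global and local namespaces, which is one common cause of this kind of "name is not defined" error; whether LabPython does exactly this is an assumption on my part, and run_embedded and fails_with_name_error are helper names invented for the demonstration.

```python
import math

# Script as one would naively write it: 'math' ends up in the local
# namespace, where the function body cannot see it.
BROKEN = """
import math
def area(r):
    return math.pi * r * r
result = area(1.0)
"""

# Same script with the offending name declared global first.
FIXED = """
global math
import math
def area(r):
    return math.pi * r * r
result = area(1.0)
"""


def run_embedded(script):
    """Execute script with separate globals and locals (assumed embedding)."""
    global_ns, local_ns = {}, {}
    exec(script, global_ns, local_ns)
    return local_ns["result"]


def fails_with_name_error(script):
    """Return True if running the script raises NameError."""
    try:
        run_embedded(script)
    except NameError:
        return True
    return False


if __name__ == "__main__":
    print("broken script fails:", fails_with_name_error(BROKEN))
    print("fixed script result:", run_embedded(FIXED))
```

Under this assumption, every name that a function or method needs from the top level of the script has to be declared global before it is bound, which is why the workaround gets ugly quickly.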

-

Yes, this way seems to be reasonably simple and to have potential. It looks like the symbols of an open project can even be changed on the fly, thus the symbol-setting VI can be part of the same project; the only nuisance is that the VIs and the project will appear as changed and unsaved. Here is my first attempt at it (LV15); I still have to figure out what could be the leanest way for the end user. TestDependencyChecker.zip