Posts: 590

Days Won: 26
Everything posted by ensegre

-

What I'm led to understand is that the OP wants to emulate this: http://www.plexon.com/products/omniplex-software. I presume that various spike templates are drawn by hand with a node tool (more than just one threshold), and that the computational engine does statistical classification of everything identified as a spike event in the vast stream of incoming electrophysiological data. Classification could label, say, each spike according to whichever template comes closest in the rms sense, but PCA may be more sound. Now we are at the level of "how to plot many colored curves". At some point we'll be at "how to draw a polyline with draggable nodes", then "how to compute PCA of a 2D array"... Or? Don't ask, I have my adventures with neurobiologists too...
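To make the "closest template in the rms sense" idea concrete, here is a minimal sketch in Python rather than LabVIEW. The function names and the two templates are made up for illustration; this is only a guess at the simplest form of such a classifier, not what the Plexon software actually does.

```python
import math

def rms_distance(a, b):
    """Root-mean-square difference between two equal-length waveforms."""
    if len(a) != len(b):
        raise ValueError("waveforms must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def classify_spike(spike, templates):
    """Return (label, distance) of the template closest to `spike` in the rms sense."""
    return min(
        ((label, rms_distance(spike, t)) for label, t in templates.items()),
        key=lambda pair: pair[1],
    )

# Hypothetical hand-drawn templates, four samples each.
templates = {
    "unit_a": [0.0, 1.0, -0.5, 0.0],
    "unit_b": [0.0, 0.4, -1.0, 0.2],
}
label, dist = classify_spike([0.1, 0.9, -0.4, 0.0], templates)
```

Here the spike is labelled `unit_a`. A PCA-based classifier would replace `rms_distance` with a distance in the space of the first few principal components.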

-

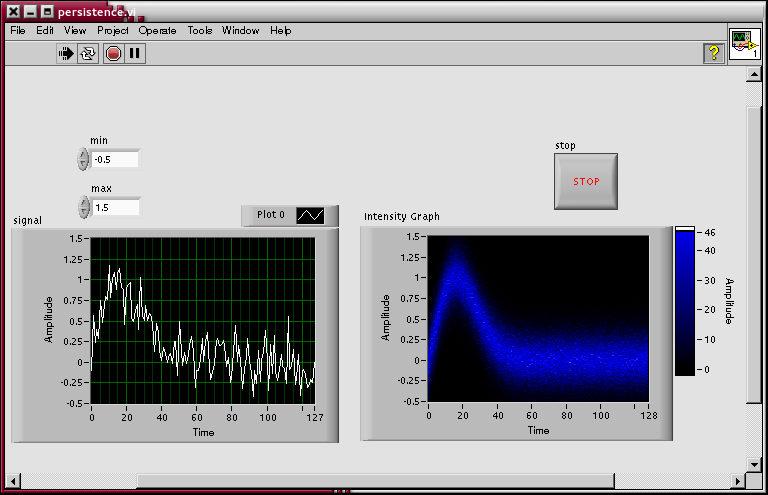

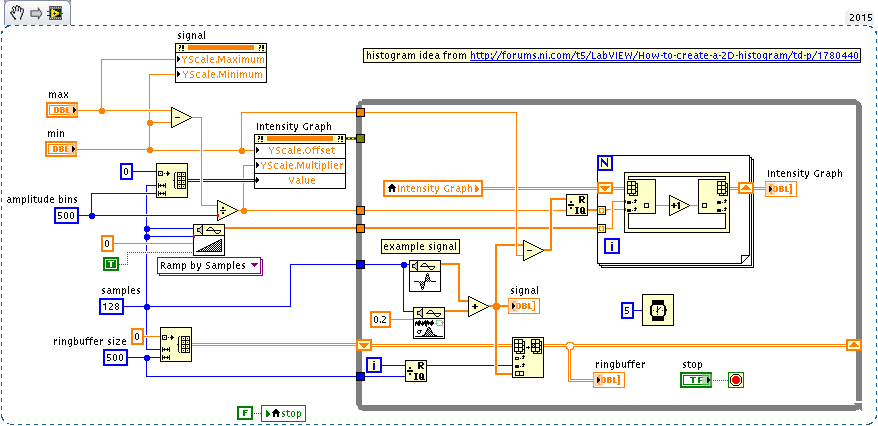

If it helps persistence5.vi

-

Is this one relevant (not tried, discovered now)

-

Hm. Still we started telling the OP - don't look for tricks, don't try to plot all data blindly. I fear that with this or that optimization the idea can hold with acceptable performance up to some ringbuffer limit, taxing cpu and buffer allocations, but at some point, design should kick in or else. I didn't really time it...

-

-

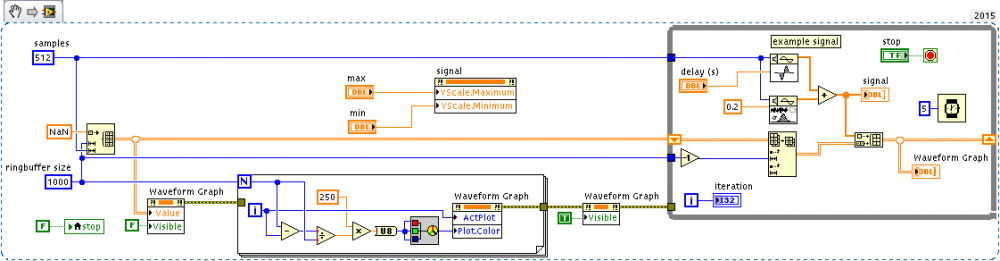

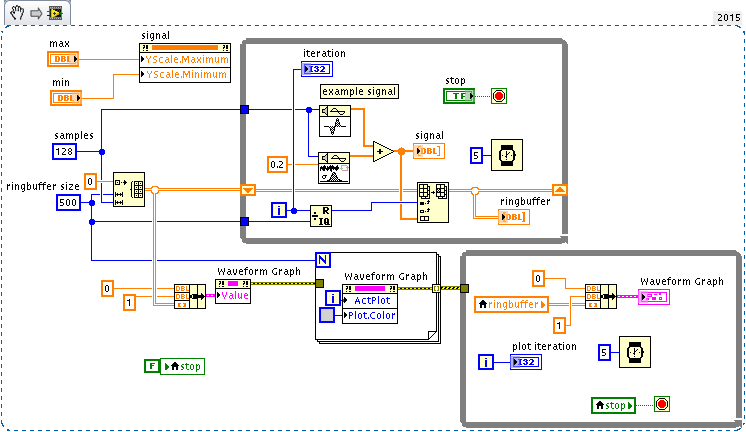

FWIW, I realized that in my previous snippet the waveform graph needed several seconds of initialization time just because of the loop setting the plot colors, a delay which vanishes if I initialize the ringbuffer with NaNs instead of zeros. (Maybe hiding the graph while setting the colors could do, too; I haven't tried.) Also, my snippet with independent loops is clearly just an example and not the real thing: one should plot only if there is really new data, and at a lower rate, so some mechanism of notification must be in place. As said, application design, not a magic bullet. Agree. If changing colors of a group of curves is expensive (and moreover these have to be brought to the foreground), something must happen behind the scenes.
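The "plot only if there is new data, and at a lower rate" logic could look roughly like the following, written in Python for lack of a textual LabVIEW. `RedrawGate` and its methods are hypothetical names. The sketch is not thread-safe as written: with truly independent loops the flag would need a lock, or in LabVIEW terms a notifier or queue.

```python
import time

class RedrawGate:
    """Decide when the display loop should redraw (hypothetical helper)."""

    def __init__(self, min_interval_s, clock=time.monotonic):
        self.min_interval_s = min_interval_s
        self.clock = clock      # injectable, so the logic can be tested
        self.dirty = False      # set by the acquisition side
        self.last_draw = None   # time of the last redraw, None if never drawn

    def notify_new_data(self):
        """Called by the acquisition loop whenever new data has been stored."""
        self.dirty = True

    def should_redraw(self):
        """Called by the display loop; True at most once per min_interval_s."""
        if not self.dirty:
            return False
        now = self.clock()
        if self.last_draw is not None and now - self.last_draw < self.min_interval_s:
            return False
        self.dirty = False
        self.last_draw = now
        return True
```

The display loop would poll `should_redraw()` and replot only when it returns true, while the acquisition loop keeps storing data at full rate.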

-

Just tried this out of curiosity, and no, obviously replotting the whole ringbuffer periodically is not a good idea; at least not like this. On my system, it takes about 5 secs each time. But OTOH, to my great surprise, a plain waveform graph with 500 plots is faster: YMMV...

-

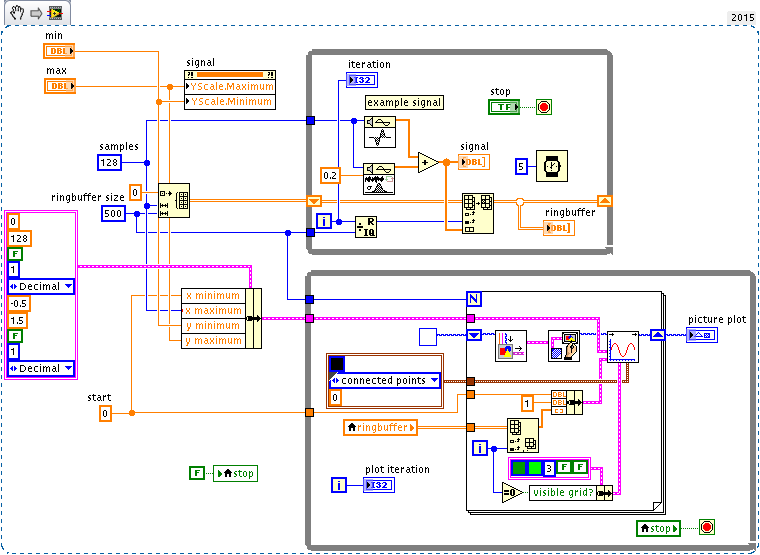

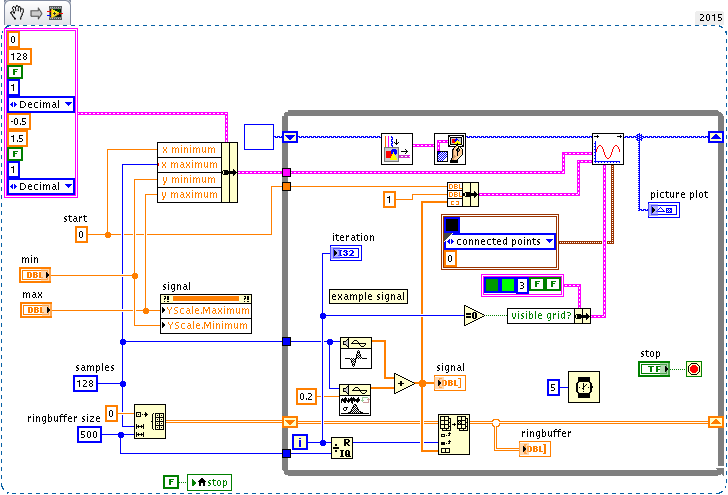

This may depend on many factors, such as the acceptable cpu load on your system for that part of the application, the pixel size of your plot, your graphics card, and just about everything else. You have to find it out in your working conditions. From the ringbuffer, you have the data of the oldest curve, which you're going to discard, and of the newest, which is going to replace it. If it is a waveform graph, you can access its data, replace the relevant wave, and replot everything (that is going to be somewhat slow). You could begin with a waveform graph initialized with N NaN-filled waves, and replace them with data as it comes in, to avoid growing, but I doubt that it will make a difference at regime. Maybe the histogram way is still the easiest, because the histogram flattens the data in its own way, yet still makes it easy to add +1 for the new points and -1 to remove the old ones. For a change you could just binarize the histogram for plotting, and plot all bins with a count>0 in the same color. The limitation of the histogram in the form I sketched is that bins are filled according to the datapoints falling in them and not according to the segments traversing them, but perhaps that can be worked out too. I mean, complex requirement, complex solution to be sought.
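A sketch of the +1/-1 bookkeeping, in Python rather than LabVIEW. `PersistenceHistogram` and its parameters are hypothetical names, and it bins datapoints only (not the segments between them), so it has the limitation mentioned above.

```python
from collections import deque

class PersistenceHistogram:
    """2D count histogram over the last `depth` traces (hypothetical helper)."""

    def __init__(self, n_samples, n_amp_bins, amp_min, amp_max, depth):
        self.counts = [[0] * n_amp_bins for _ in range(n_samples)]
        self.n_amp_bins = n_amp_bins
        self.amp_min = amp_min
        self.amp_max = amp_max
        self.depth = depth
        self.ring = deque()  # bin indices of the traces currently counted

    def _bins(self, trace):
        """Amplitude bin index for each sample; None if out of range or NaN."""
        span = self.amp_max - self.amp_min
        out = []
        for value in trace:
            if self.amp_min <= value < self.amp_max:
                b = int((value - self.amp_min) / span * self.n_amp_bins)
                out.append(min(b, self.n_amp_bins - 1))
            else:
                out.append(None)
        return out

    def _apply(self, bins, weight):
        for column, b in zip(self.counts, bins):
            if b is not None:
                column[b] += weight

    def push(self, trace):
        """Count the newest trace (+1); uncount the oldest (-1) once the ring is full."""
        if len(self.ring) == self.depth:
            self._apply(self.ring.popleft(), -1)
        bins = self._bins(trace)
        self.ring.append(bins)
        self._apply(bins, +1)

    def binarized(self):
        """1 wherever at least one stored trace hits the bin, else 0."""
        return [[1 if c > 0 else 0 for c in column] for column in self.counts]

ph = PersistenceHistogram(n_samples=2, n_amp_bins=4, amp_min=-1.0, amp_max=1.0, depth=2)
for trace in ([0.0, 0.6], [0.0, -0.9], [0.0, 0.6]):
    ph.push(trace)
```

The cost of each `push` depends only on the trace length, not on how many traces are held. `ph.counts` would feed an intensity plot and `ph.binarized()` a single-color one.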

-

Plotting over a flattened pixmap (rather than incrementally adding new plot data to the picture, that's the trick) can be fast, and has the same cost no matter the previous image content. Like this: I guess that periodically replotting the whole lot of curves would become too expensive even this way. In a case like this perhaps one should manipulate the pixmap directly, like erasing the pixels of the oldest curve first with an xor operation, and then overplotting the newest. Unfortunately the internals of the picture functions, for attempting something like that, are not well documented, IIRC. I think that, a long time ago, I ran into some contribution which cleverly worked them out. Maybe some other member is able to fill in?

-

How to Get the input value within a Filter Event?

ensegre replied to patufet_99's topic in LabVIEW General

The trick is to get the Edit Position first, set Key Focus to false, get the (now updated) value and process it, set Key Focus to true again and then restore the previous Edit Position. For an application of mine I had to process arrows with and without modifiers, tabs and whatnot, sometimes jumping columns besides correcting the entered values, and allow only the input of [0-9] in some cells and text in others - you can go quite far in tweaking the standard table navigation behaviour if you invest time in implementing all cases. -

I somehow have the impression that you're assuming that there should be some magic option of the LV plot widget as is, which saves you from application design. What you show about hand drawing a template curve, and classifying traces, hints that there is more computation going on behind the scenes. The display in the plot window must be a somewhat sophisticated GUI, only giving the user a feeling of what data is being accumulated and how to interact with it, quite likely not making the mistake of replotting the whole dataset for every new curve acquired (that would be expensive). The right question might be whether you can use the LV graph widget for what it gives, or rather the picture widget as Shaun suggested above (that would overplot directly to the pixmap, I guess, and be significantly faster), as a starting point for building such a GUI. The LV graph widget, optimized as it may be, is slow because it is complex: it handles autoscaling, an arbitrary number of curves, arbitrary plotting styles, filling and whatnot, and this comes at a price. Someone may correct me, but I don't even think it can handle adding new curves incrementally, without replotting the whole bunch. If I had to build something like that, I would store the data in memory appropriately and without connection to the graph; I would consider updating the display only periodically, fast enough to give the user a feeling of interactivity, and track user clicks on the graph area in order to replot the data with the right highlights, to give the user the impression s/he is playing with it.

-

-

I think you should part with the idea of regenerating a plot at every new event; storing vast data for later analysis is one thing, display for GUI feedback another. Instead of trying to plot 5000 curves at every iteration of the loop, you could try maintaining a different loop plotting, at lower rate, the most recent N, with N suitably large. Another approach I thought of, is using an intensity plot to display a 2D histogram. Grid the time-amplitude space in bins, and increment each bin every time a curve passes in it (you have to write the code for it, I don't know of a ready source); thus you get a plot similar to that of a scope with infinite persistence, brighter where traces overlap. Different loop and lower rate, ditto.
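The binning for such a 2D histogram could be sketched as follows, in Python rather than LabVIEW. All names are hypothetical, and each sample index is taken as one time bin, which assumes traces aligned on the trigger.

```python
def make_histogram(n_time_bins, n_amp_bins):
    """Zero-filled counts, indexed as hist[time_bin][amplitude_bin]."""
    return [[0] * n_amp_bins for _ in range(n_time_bins)]

def amplitude_bin(value, amp_min, amp_max, n_amp_bins):
    """Map an amplitude to a bin index, or None if out of range (or NaN)."""
    if not (amp_min <= value < amp_max):
        return None
    b = int((value - amp_min) / (amp_max - amp_min) * n_amp_bins)
    return min(b, n_amp_bins - 1)  # guard against float rounding at the top edge

def accumulate(hist, trace, amp_min, amp_max):
    """Increment the bin hit by each sample of `trace`, one time bin per sample."""
    n_amp_bins = len(hist[0])
    for column, value in zip(hist, trace):
        b = amplitude_bin(value, amp_min, amp_max, n_amp_bins)
        if b is not None:
            column[b] += 1

hist = make_histogram(n_time_bins=3, n_amp_bins=4)
for trace in ([0.0, 0.6, -0.9], [0.1, 0.6, 0.9]):
    accumulate(hist, trace, amp_min=-1.0, amp_max=1.0)
# hist is now [[0, 0, 2, 0], [0, 0, 0, 2], [1, 0, 0, 1]]
```

Bins where the two traces overlap hold a count of 2, which is what would show up brighter on the intensity plot.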

-

Not to mention The MB icons in the screenshot upward in this thread make me think that the OP was referring to the old (not good old) ni-modbus-1.2.1, still available here: http://www.ni.com/example/29756/en/ I have used the latter here and there for simple communication, and while I could make it work, I was not impressed by quality, and vaguely remember bugs and quirks.

-

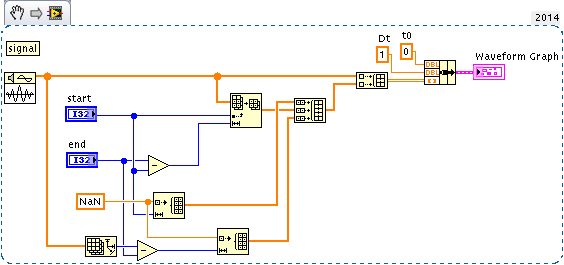

emphasize (envelope) portion of waveform chart

ensegre replied to rharmon@sandia.gov's topic in LabVIEW General

The q&d NaN replacement in a duplicate signal is there because a Waveform Graph was what was brought up in the first place. For large datasets, and multiple graphs, the solution may be less performant; I would then switch to other solutions, like an XY Graph, where each plot can have its own x dataset. -
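In textual form (Python, not LabVIEW) the NaN trick amounts to something like this. `emphasized_copy` is a made-up name, and the sketch relies on the graph leaving gaps where the data is NaN.

```python
import math

def emphasized_copy(signal, start, stop):
    """Copy of `signal` with NaN everywhere except indices start..stop-1."""
    return [y if start <= i < stop else math.nan for i, y in enumerate(signal)]

signal = [1.0, 2.0, 3.0, 4.0]
highlight = emphasized_copy(signal, 1, 3)  # [nan, 2.0, 3.0, nan]
```

Plotting `signal` and `highlight` as two plots on the same graph, the second with a thicker line or another color, emphasizes only the selected portion.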

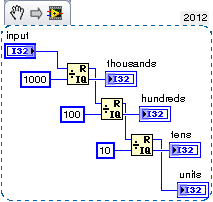

How to splitting number in control to indicator ?

ensegre replied to bukandenny's topic in LabVIEW General

-

emphasize (envelope) portion of waveform chart

ensegre replied to rharmon@sandia.gov's topic in LabVIEW General

-

emphasize (envelope) portion of waveform chart

ensegre replied to rharmon@sandia.gov's topic in LabVIEW General

This is what I'd generally do, and end up doing for things like confidence intervals. What exactly do you mean by "that's not working"? Can we see code? You must be familiar btw with ain’t you? -

shameless self: if linux is an option...

-

How to submit code to the Code Repository

ensegre replied to Michael Aivaliotis's topic in Code Repository (Certified)

Oh, and "Sorry, there is a problem. The page you requested does not exist. Error code: 2S100/6" -

How to submit code to the Code Repository

ensegre replied to Michael Aivaliotis's topic in Code Repository (Certified)

Is the video still available? Shouldn't the blurb be corrected? -

Style quite awful (stacked sequences, local variables, no modularization, and what - you open and close various files for rereading unchanged (?) data between each A/D and D/A operation?), so it's difficult, for me at least, to understand what you want to do. Anyhow, two suggestions which are a bit of a shot in the dark: (1) Open, define and keep open the Daq tasks properly. Don't rely on the auto behavior of DAQmx Read and Write if performance is of any concern. (2) Provided that opening delays are not of concern there, check for poor USB cables/flaky connectors/EM interference. I remember an occurrence I had where simply using shorter cables, wound a couple of turns over a ferrite core, solved random "resource unavailable" issues.

-

I have some minor comments on usability, from quick testing. I don't know if you're already interested in such detail. (1) TopLevelDialog/SelList[] loops erroring, if the VI examined is closed and thus the ref becomes invalid, and ShowEventsFromCtl becomes topmost (i.e. even if there are other opened VIs, but they are not topmost). That should be trapped. (2) TopLevelDialog/SelList[] loops erroring, if the VI examined is password protected. Either that should be trapped and reported or the standard unlock dialog should be brought up. (3) Show Event blinks the right place in the BD, but that may not be visible if scrolled away. An improvement would be to center the window on it, like Stop on error does.

-

Unregistered users can't download (or, apparently, register))

ensegre replied to Yair's topic in Site Feedback & Support

I second Yair, and think that, looking from the user's point of view only, no registration for downloads promotes the LAVA spirit. The comparison coming to my mind is sourceforge. My perception as a user is "hey, I can get a lot of interesting stuff from here for free. And I can also give technical feedback, contribute, and even start my projects, but for that it's legitimate that they know who I am". They certainly have an orders of magnitude larger technical staff to deal with server issues, though. -

Looking at http://www.modbus.org/docs/Modbus_Application_Protocol_V1_1b3.pdf (page 40), "because they say so"... If "why" means the rationale, maybe historical? UARTs with maximal buffers of 16K, and low baud rates (not thinking of ethernet), so 16K was almost a synonym for infinite time? Only speculating.