Everything posted by ensegre

-

As an attachment, at times I can. Not sure about the repeatability. Sometimes it's a timeout (when I've been typing a message for more than x minutes, I can neither attach nor submit, and have to copy my text, refresh the page, paste, and submit); sometimes I can from one computer but not from another.

-

It doesn't seem safe to me either, but if I remove it outright I get no crash, and when I run the example with 450 formulas continuously I don't observe any growth in process memory.
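Why dropping the dispose call can be the right fix is easier to see with a toy model outside LabVIEW. This is a minimal Python/ctypes sketch. It assumes, as the crash and the flat memory profile suggest but without checking the muParser sources, that the buffer returned by mupEvalMulti is owned by the parser. Under that assumption the caller copies the values out into memory it allocated itself, as MoveBlock does. It then leaves the source pointer alone, since only memory obtained from DSNewPtr should go back to DSDisposePtr. FakeParser and copy_results are hypothetical names.

```python
import ctypes


class FakeParser:
    """Hypothetical stand-in for a C library that returns a pointer into a
    result buffer it owns itself (assumed behaviour, see above)."""

    def __init__(self, capacity=8):
        self._buf = (ctypes.c_double * capacity)()  # owned by the "library"

    def eval_multi(self, values):
        # Fill the internal buffer and hand back a borrowed pointer plus a count.
        for i, v in enumerate(values):
            self._buf[i] = v
        ptr = ctypes.cast(self._buf, ctypes.POINTER(ctypes.c_double))
        return ptr, len(values)


def copy_results(ptr, n):
    """Copy n doubles out of a borrowed pointer (the MoveBlock step).
    The destination is allocated here; `ptr` itself is never freed."""
    out = (ctypes.c_double * n)()
    ctypes.memmove(out, ptr, n * ctypes.sizeof(ctypes.c_double))
    return list(out)


parser = FakeParser()
ptr, n = parser.eval_multi([1.5, 2.5, 3.0])
results = copy_results(ptr, n)
print(results)  # [1.5, 2.5, 3.0]
# No dispose on `ptr`: the buffer belongs to `parser`, which reuses it on the
# next evaluation and releases it when the parser itself goes away.
```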

- 172 replies

-

My mistake. The ones I posted were compiled on Ubuntu 16, and I then found that they don't work on Ubuntu 14. Probably the same conclusion on interoperability.

-

So far only a single Windows 10 Enterprise 2016 64-bit machine, with LV 2017 32- and 64-bit. Uhm, how am I supposed to find out which .NET version I have?

-

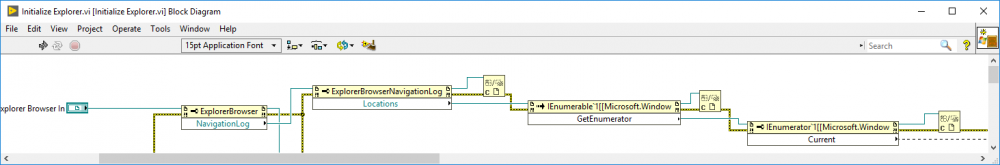

I have an issue with Initialize Explorer.vi not being executable, probably because of incorrectly linked .NET stuff. However, I am able to use .NET to embed a Windows Explorer. Do you have an idea why?

-

Thanks for the pointers. Indeed, inductiveautomation looks like too much. First, I don't think I can justify the cost (we have a campus license of LV anyway, at no extra cost); second, it would involve another learning curve. Maybe for a more powerful and flexible solution, but... Third, "Use powerful 2D vector drawing tools to create any shape...": if I'm the one who has to draw, what do I have Inkscape for, then? draw.io does have a few glyphs I could lift for flat buttons, but probably with not much more added value than the libraries of other free diagram-drawing tools I have seen, starting from the good old xfig. Another nice one I discovered recently (mentioned in some tutorial slides I found on the dark side) is yEd. OTOH, what holds me back from paving my way to crap-art is that so far I have only had unsatisfactory experiences trying to customize controls: an unwieldy tool, only partial handling of vector graphics and transparency, and strictly rectangular shapes. Btw, does anyone know how the polygonal multi-shaped controls of DSC are built and made responsive (is it exposed by NI at all, and is it worth messing with)? I think I might have run into an explanation somewhere, but I'm by no means sure [was it in the context of saving a VI as XML, perhaps? Pure hack, then]. ETA: looking at and

-

I'll be building a SCADA for a high-vacuum system. I have not yet settled on the graphic style of the HMI (skeuomorphic, flat, diagrammatic, whatever), but I'd like to do something slick. I have looked at the DSC controls: the 3D pipes more or less do the job, but all the rest is crude. I looked into the DSC image navigator: the images are sooo 2002, and again not very specific to a lab. I have looked at https://www.openautomationsoftware.com/downloads/free-hmi-symbols/: the images are PNGs, and there isn't much choice either. I searched the net a bit and found nothing. I miss specific elements like vertical turbopumps, guillotine gates and vacuum chambers, ideally scalable. I'm tempted to Inkscape my way down the path of personal crap-art, but I know it will end in another bad trip. Can anyone suggest a nice source of suitable elements?

-

I'm keeping an eye on you. mupEvalMulti.vi dies on DSDisposePtr. Shouldn't there be a DSNewPtr somewhere, as in other VIs using LabVIEW:MoveBlock? Besides, your .so doesn't load on my system and I have to use the one I compiled locally, but that is expected, as said above. It only confirms that it is vain to target all possible Linux distros at once.

-

Indeed. Well, enough hacking around unfeatures. One learns nothing doing that and only wastes time. Thanks for the hints anyway!

-

Oh, it's starting to remind me of another bad trip of mine with customizing controls; maybe I'd better do some real work instead...

-

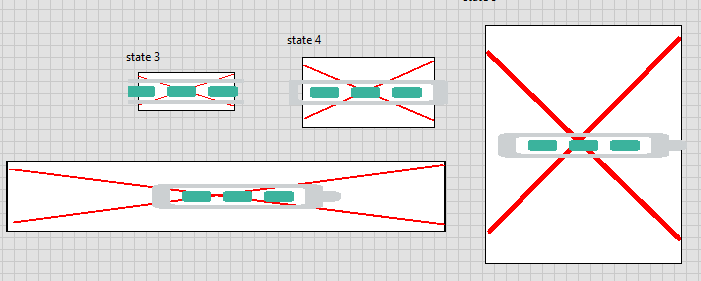

Thanks Brian, but I'm asking specifically about a Pict ring (or any other solution that gets close to enum functionality without having to concoct an XControl, unless there is no other way). For a moment I thought EMF instead of WMF might do the trick, but as you can see it doesn't. The red X is the background, and it resizes; your battery is the element of the ring.

-

Just to make sure: I'm playing around with a graphic state indicator, and I would like to make it resizable. I thought of a Pict ring, created some proof-of-concept SVG images with Inkscape, saved them as WMF, and customized the ring by importing one image per state at the same size (apparently this works only under Windows). I got what's attached, but the images don't scale when I resize the indicator. At best I can import a WMF file for the background (currently white), and that does resize, but it is common to all states. Am I missing some obvious trick, or is this the best one can aim for? GateState.ctl

-

deconvolution = inverse problem = sensitivity to small denominators; difference of large, almost equal numbers = large truncation errors.

Without seeing the code (maybe even when seeing it), it's difficult to say which result is more right. Given the kind of problem, anyway, I wouldn't be surprised if simply rescaling one set of datapoints (you say Hz or MHz: that alone shifts 6 orders of magnitude) gives a better or worse result. If you ever had experience with it, you may run into the problem even with linear regression: fitting (y-<y>)/<y> to (x-<x>)/<y> may do wonders. In the code, I'd look first for places where you add and subtract numbers of very different magnitudes, and see whether a proper rescaling reduces the spread in magnitudes while keeping the computation algebraically equivalent. "Numerical analysis", proper.
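The "difference of large, almost equal numbers" point can be shown on a textbook case. This is not the deconvolution code, which we haven't seen. The Python sketch below compares two algebraically equivalent variance formulas on frequencies expressed in Hz around 1 GHz. Centering the data on its mean before squaring is the same kind of rescaling as fitting (y-<y>) instead of y. The function names are made up for illustration.

```python
def naive_variance(xs):
    """One-pass <x^2> - <x>^2: subtracts two large, almost equal numbers."""
    n = len(xs)
    total = 0.0
    total_sq = 0.0
    for x in xs:
        total += x
        total_sq += x * x
    mean = total / n
    return total_sq / n - mean * mean


def centered_variance(xs):
    """Same quantity, but the data are shifted by their mean first,
    so the subtraction happens on small numbers."""
    n = len(xs)
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / n


# Frequencies in Hz around 1 GHz; the true (population) variance is 2/3 Hz^2.
hz = [1e9 + 1, 1e9 + 2, 1e9 + 3]
print(naive_variance(hz))     # 0.0: the squares near 1e18 lose the small terms
print(centered_variance(hz))  # 0.6666666666666666
```

Expressed in GHz with the offset removed, both formulas would behave. The point is that the magnitudes entering each subtraction decide the error, not the formula on paper.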

-

Didn't think of it. [NXG claims to support Unicode; I didn't try. The issue might exist for extended ASCII chars, like Latin-1 accented chars, as they are encoded as 1 byte in LV-Windows and as UTF-8 in LV-Linux.] But why pass anything other than basic 7-bit ASCII to libmuparser at all? I quickly tried /usr/lib/x86_64-linux-gnu/libmuparser.so.2 with muParser demo.vi: accented chars in variable names seem to be illegal.
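The byte-count mismatch and the "7-bit ASCII only" guard can be sketched in a few lines of Python. This assumes that LV-Windows uses the cp1252 (Western) code page and that LV-Linux runs with a UTF-8 locale. is_plain_ascii is a hypothetical helper, not part of LV-muParser.

```python
def is_plain_ascii(expr):
    """True if expr is 7-bit ASCII only, so it is encoded identically
    (one byte per character) in cp1252, Latin-1 and UTF-8."""
    return expr.isascii()


name = "é"  # an accented character in a variable name
print(len(name.encode("cp1252")))  # 1 (single-byte Windows code page)
print(len(name.encode("utf-8")))   # 2 (UTF-8)
print(is_plain_ascii("a*sin(x)+b"), is_plain_ascii("é*2"))  # True False
```

Rejecting non-ASCII expressions before they reach the library sidesteps the platform difference entirely.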

-

You make it sound more impossible than it reasonably is in this case. libmuparser is at least not unknown to Ubuntu: I apparently brought it in on 14 and 16 as a dependency of meshlib or of some FEM package. Now, Porter's toolbox is apparently using a patched version of it to add some syntactical feature, but the toolbox would also work with the off-the-shelf version. Even with an earlier version, if the ABI didn't change. If, I concede. But LV-muParser could say: requires x.x.x < libmuparser < y.y.y. If a locally patched version of the library is so important, then to avoid conflicts just give it an insanely improbable name, like libmuparser-with-added-esclamation.so. Dump the responsibility onto the author of the original library: ./configure; make; make install. If it can be built for the target distro (or if someone bothered to distribute it), ok; if it can't, just don't claim it is supported. I haven't looked into it thoroughly, and I'm just a script kiddie at it anyway, but FWIW nm -C libmuparserX64.so.2.2.4 | grep @ tells me only of GLIBC_2.0, GLIBC_2.3.4, GCC_3.0, CXXABI_1.3 and GLIBCXX_3.4: standard stuff, hopefully not changing dramatically across distros. Anyway, I agree that the effort is best invested in developing the toolbox. I never suggested that the toolbox should also provide compiled .so files, and I see the reasons why. I was perhaps carried along by the academic discussion, which in my view was in general about the best LV strategy to support different shared libraries for different platforms.
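One way an install script could use the nm check: extract the highest symbol-version tag per family from the nm output and compare it with what the target system provides. This is a Python sketch. The SAMPLE text is made up in nm's format using the tags quoted above, not real output for libmuparserX64. The `target` dictionary of available versions for some distro is hypothetical as well.

```python
import re

# A symbol-version suffix as printed by nm, e.g. "@GLIBC_2.3.4" or "@@GLIBCXX_3.4".
VERSION_TAG = re.compile(r"@@?([A-Z]+)_(\d+(?:\.\d+)*)")


def as_tuple(ver):
    """'2.3.4' -> (2, 3, 4), so versions compare numerically."""
    return tuple(int(p) for p in ver.split("."))


def max_required_versions(nm_output):
    """Highest version referenced per family (GLIBC, GLIBCXX, GCC, ...)."""
    best = {}
    for family, ver in VERSION_TAG.findall(nm_output):
        if family not in best or as_tuple(ver) > as_tuple(best[family]):
            best[family] = ver
    return best


def compatible(required, provided):
    """True if the target provides at least every required version."""
    return all(fam in provided and as_tuple(provided[fam]) >= as_tuple(ver)
               for fam, ver in required.items())


# Made-up lines in nm's format, using the tags quoted above (not real output).
SAMPLE = """\
                 U func_a@GLIBC_2.0
                 U func_b@GLIBC_2.3.4
                 U func_c@GCC_3.0
                 U func_d@CXXABI_1.3
                 U func_e@GLIBCXX_3.4
"""
required = max_required_versions(SAMPLE)
print(required)  # {'GLIBC': '2.3.4', 'GCC': '3.0', 'CXXABI': '1.3', 'GLIBCXX': '3.4'}

# Hypothetical versions available on some target distro.
target = {"GLIBC": "2.23", "GCC": "7.0", "CXXABI": "1.3.11", "GLIBCXX": "3.4.25"}
print(compatible(required, target))  # True
```

Comparing tuples rather than strings matters here: as strings, "2.23" would sort below "2.3.4".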

-

more PCs, more money, more performance...

-

6 PCs if you have high bandwidth and highly demanding image processing; one PC if you're tight on space or need a single control station. Or 7 PCs: 6 for processing and one for a centralized display. Personally, I'd first evaluate the resources you need for a single task and how many tasks could compete for resources if they ran on a single PC, then assess how many PCs you need to scale up.
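The sizing step can be put in numbers. This is a Python sketch under simplifying assumptions: identical PCs, identical tasks, and a single bottleneck resource measured as a percentage of one PC. The loads below are made up, and real image processing rarely scales this linearly. pcs_needed is a hypothetical helper.

```python
import math


def pcs_needed(n_tasks, load_pct, headroom_pct=70):
    """Identical PCs needed when each task takes `load_pct` percent of one
    PC's bottleneck resource and no PC is loaded beyond `headroom_pct`."""
    tasks_per_pc = headroom_pct // load_pct  # whole tasks fitting in one PC
    if tasks_per_pc < 1:
        raise ValueError("a single task does not fit in one PC")
    return math.ceil(n_tasks / tasks_per_pc)


# Six image-processing tasks with made-up per-task loads:
print(pcs_needed(6, 10))  # 1  (7 tasks fit in 70%)
print(pcs_needed(6, 30))  # 3  (2 tasks per PC)
print(pcs_needed(6, 60))  # 6  (1 task per PC)
```

A separate display station, if wanted, simply adds one to whatever this returns.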

-

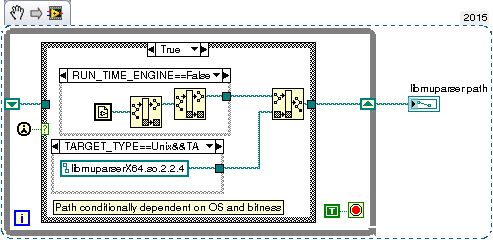

I get the point: a dynamically referenced library will not be visible to the builder as a dependency. If I may be forgiven for being so stubborn, as a workaround one could still put just one conditional disable structure, with the different statically linked CLNs, inside a never-executed False frame of a single mandatory ancillary VI that is called only once, and leave all the rest with mupLibPath.

-

For the record, I obstinately tried this anyway, following smithd. Because of "Current VI's Path", this cannot be inlined; at most it can be given subroutine priority. With the previous benchmarks, the result is ~600 ns overhead (on ~12 µs, 5%) on 32-bit and ~200 ns (on 10.4 µs, 2%) on 64-bit. Results obviously degrade for priorities below subroutine. mupLibPath.vi

-

So I unzip that on my desktop. This means "/home/owner/Desktop/LabVIEW 2015/Projects/LV-muParser" and "/home/owner/Desktop/LabVIEW 2015/resource/". Go figure: "/<resource>/libmuparserX32.so.2.2.5" not found...

-

Pre-build VI? Possibly... Instinctively I'd say the less code the better, but I have not benchmarked that.

-

Well, let's be pedantic: CLNbenchmark.vi, mupLibPath2.vi (change the library names according to bitness and OS).

There is huge jitter in this benchmarking: to get ~1% variance I need to iterate 1M times, so the results are not entirely conclusive. Testing in parallel like I did earlier was probably not a good idea, in case libmuparser is single-threaded; indeed, running 2 or 3 loops in parallel changes the picture. Testing each loop individually, the static library name wins, by perhaps 1% (100 ns on 10 µs). Testing in parallel, each timing is larger (~60%) and the jitter is even larger; no clear winner in a few attempts. I wouldn't be surprised if YMMV on other machines, OSes, bitnesses and LV versions. I tested on Linux with LV2015 64-bit, LV2016 64-bit, and LV2017 32- and 64-bit; results were similar and similarly scattered. On LV2017 32-bit I got timings ~25% higher. Not to mention boundary conditions like FP activity.

Moral: even considering that some time is spent in the libmuparser routines themselves and some is LV calling overhead, and that the ratio may change from function to function, this overhead is minute, and IMHO it pays much more to go for the most maintainable solution [hint: an inlined subVI with an indicator whose default value is set by an install script]. YMMV...
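The measurement discipline (many iterations, then look at the spread across repeats before declaring a winner) is language-independent. This Python sketch shows it; it is not CLNbenchmark.vi. The two lambdas are arbitrary stand-ins for the "constant name" and "wired name" CLN variants, and per_call_ns is a made-up helper.

```python
import math
import statistics
import time


def per_call_ns(fn, calls=50_000, repeats=10):
    """Run fn `calls` times, `repeats` times over; return the mean per-call
    time in ns and the relative spread (stdev/mean) across the repeats."""
    samples = []
    for _ in range(repeats):
        t0 = time.perf_counter_ns()
        for _ in range(calls):
            fn()
        samples.append((time.perf_counter_ns() - t0) / calls)
    mean = statistics.fmean(samples)
    return mean, statistics.stdev(samples) / mean


# Arbitrary stand-ins for two call variants that differ by a small overhead.
direct = per_call_ns(lambda: math.sqrt(2.0))
looked_up = per_call_ns(lambda: getattr(math, "sqrt")(2.0))

diff = looked_up[0] - direct[0]
noise = max(direct[1] * direct[0], looked_up[1] * looked_up[0])
print(f"direct {direct[0]:.1f} ns, looked-up {looked_up[0]:.1f} ns")
print("difference larger than the spread" if abs(diff) > noise
      else "no clear winner at this sample size")
```

Running the two measurements one after the other, rather than in parallel loops, avoids the contention effect described above.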

-

This small experiment, if not flawed, would suggest that a CLN with a constant library name is actually a few tens of ns faster than a CLN with the name wired in. CLNbenchmark.vi

-

A supreme one, but proper scripting might be able to alleviate it: scripts checking that each CLN is properly wrapped in a conditional disable structure, and that each conditional disable structure has the relevant library in its cases. I understand that performant parsing was one of the main motivations of this exercise; however, maybe let's first benchmark precisely the impact of the different solutions.
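The two checks could be prototyped on an abstract model before writing the actual VI scripting. This is a Python sketch. The platform keys, library names and VI names are all hypothetical placeholders, and reading the real block diagrams through LabVIEW VI scripting is left out.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical platform keys and library names. In LabVIEW the keys would be
# conditional disable symbol combinations (OS, bitness) and the values the
# library path configured in each case's CLN.
EXPECTED = {
    "Linux64": "libmuparserX64.so",
    "Linux32": "libmuparserX32.so",
    "Win64": "muparser64.dll",
    "Win32": "muparser32.dll",
}


@dataclass
class CondDisable:
    name: str
    cases: dict  # platform key -> library used by the CLN in that case


@dataclass
class CLN:
    vi: str
    wrapper: Optional[CondDisable]  # enclosing conditional disable, or None


def check(clns):
    """Return a list of problems; an empty list means every CLN is wrapped
    and every wrapper has the expected library in each platform case."""
    problems = []
    for cln in clns:
        if cln.wrapper is None:
            problems.append(f"{cln.vi}: CLN not inside a conditional disable")
            continue
        for platform, lib in EXPECTED.items():
            got = cln.wrapper.cases.get(platform)
            if got != lib:
                problems.append(f"{cln.vi}/{cln.wrapper.name}: {platform} case "
                                f"has {got!r}, expected {lib!r}")
    return problems


good = CondDisable("cd1", dict(EXPECTED))
partial = CondDisable("cd2", {"Linux64": "libmuparserX64.so"})
clns = [CLN("mupEval.vi", good), CLN("mupSetExpr.vi", partial), CLN("mupInit.vi", None)]
for p in check(clns):
    print(p)
```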
