
Everything posted by JKSH

-

I wonder if it's a race condition, since you are accessing the same variable in two places in your diagram. I'm also wondering if something got corrupted.
- What happens if you delete the shared variable (SV) node from your block diagram?
- What happens if you try to write to the variable without opening a connection?
- Right-click the SV node and select "Replace with Programmatic Access". Does this one work?
- What happens if you undeploy everything from your cRIO and then deploy the variables again?
- What happens if you undeploy everything from your cRIO and then create a new project with new variables?
- (Drastic measure) What happens if you reformat your cRIO, install your software from scratch, and try again?


-

ensegre has given you a good starting point. Would you like to try modifying his code to match what you want?

-

Sounds good. Happy coding!

-

Hi, when would the LStrHandles ever be valid? If you allocate a new CodecInfo array, then all the LStrHandles inside it are uninitialized (that means they are never valid). So the solution is this: for every LStrHandle inside your new array of clusters, always call DSNewHandle() and never call DSCheckHandle(). As I mentioned before, your new LStrHandles are uninitialized pointers. You must never call a function (including DSCheckHandle()) that acts on an uninitialized pointer. If you do, you will get undefined behaviour (usually a crash). This applies to all C/C++ code, not just LabVIEW interface code.
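To make the "always allocate, never check" rule concrete, here is a minimal self-contained sketch. It does not include LabVIEW's extcode.h; instead it defines a stand-in `LStr` layout (32-bit length followed by the characters) and hypothetical `mock_new_lstr_handle()` / `mock_dispose_lstr_handle()` helpers in place of the real DSNewHandle() / DSDisposeHandle(). The `CodecInfo` cluster layout is also hypothetical, since the thread doesn't show it.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Stand-in for LabVIEW's LStr/LStrHandle (extcode.h) so this compiles alone. */
typedef struct { int32_t cnt; unsigned char str[1]; } LStr, *LStrPtr, **LStrHandle;

/* Hypothetical stand-in for DSNewHandle(): a master pointer plus a data block. */
static LStrHandle mock_new_lstr_handle(size_t size) {
    LStrHandle h = malloc(sizeof *h);
    if (h == NULL) return NULL;
    *h = malloc(size);
    if (*h == NULL) { free(h); return NULL; }
    return h;
}

/* Hypothetical stand-in for DSDisposeHandle(). */
static void mock_dispose_lstr_handle(LStrHandle h) {
    if (h != NULL) { free(*h); free(h); }
}

/* Hypothetical cluster element; the real CodecInfo layout is not shown. */
typedef struct { LStrHandle name; int32_t id; } CodecInfo;

/* Fills a freshly allocated array whose handle fields contain garbage.
   Each handle is created with a new allocation; the old (uninitialized)
   value is overwritten and never read or "checked". */
static int fill_codecs(CodecInfo *codecs, const char *const *names, int count) {
    for (int i = 0; i < count; i++) {
        size_t len = strlen(names[i]);
        LStrHandle h = mock_new_lstr_handle(offsetof(LStr, str) + len);
        if (h == NULL) {
            /* Out of memory: release the handles created so far. */
            while (i-- > 0) mock_dispose_lstr_handle(codecs[i].name);
            return -1;
        }
        (*h)->cnt = (int32_t)len;
        memcpy((*h)->str, names[i], len);   /* LabVIEW strings are not NUL-terminated */
        codecs[i].name = h;
        codecs[i].id = i;
    }
    return 0;
}
```

The array itself can come from malloc() (uninitialized) precisely because fill_codecs() never looks at what was in it beforehand.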

-

why so little love for statecharts

JKSH replied to MarkCG's topic in Application Design & Architecture

I think it's a chicken-and-egg problem: NI doesn't polish it because there are few users; few users use it because it's not polished. I don't think it's the learning curve, because the concept of statecharts is quite intuitive and should be familiar to many people who haven't used LabVIEW before. I used statecharts once, for one large project, to code complex logic in a CompactRIO. The main issues I had were:
- Deployment took forever.
- Bugginess, like you said.
- Modifying triggers is tedious (and you can't share triggers between different statecharts).
- Managing static reactions is tedious (you can't reorder them or insert a new one in the middle; you gotta delete them and re-add them in the correct order).

-

Generate occurrence in FOR loop returns same reference

JKSH replied to eberaud's topic in LabVIEW General

I only looked at occurrences for a very brief while. At the time, I thought that their design was inconsistent with other mechanisms in LabVIEW, so I decided not to use them. See this article: Why Am I Encouraged to Use Notifiers Instead of Occurrences?

-

Good call. No, I'm not volunteering to create LabVIEW wrappers for native window management APIs. That would be rather silly, as you said. I'm taking a different approach: investigating how a 3rd-party GUI toolkit could be integrated into a LabVIEW application. That's why I asked my original question: to see what use cases need "unconventional" features in LabVIEW, and to see if a 3rd-party toolkit can satisfy those use cases.

-

Thanks, all! So in a nutshell, HWND manipulation can give us advanced, dynamic GUI behaviours that Front Panels, Subpanels, and XControls can't do natively. A cross-platform framework (like LabVIEW, or a LabVIEW toolkit) would typically present a unified, platform-agnostic API to users, while calling these platform-specific APIs under the hood. (Conditional disable symbols, probably?) Anyway, one could create a wrapper C library for Objective-C and C++ libraries (I know that C++ has extern "C" {}, not sure about Obj-C). Then, LabVIEW could call this C wrapper.
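A rough sketch of what such a C wrapper could look like: one platform-agnostic function, with the platform-specific implementation chosen at compile time. The `#ifdef __cplusplus` / `extern "C"` guard lets the same header be compiled as C or C++, and since Objective-C is a superset of C, a `.m` file could define the same plain C function. The names `ww_set_topmost` and the "window wrapper" library are hypothetical; only the Windows branch calls a real API (`SetWindowPos`), and the other backends are left unimplemented.

```c
#include <stddef.h>

/* ---- hypothetical window_wrapper.h ---- */
#ifdef __cplusplus
extern "C" {   /* export unmangled C symbols if compiled as C++ */
#endif

/* Platform-agnostic API: make a native window always-on-top (or not).
   Returns 0 on success, -1 on failure or if unsupported. */
int ww_set_topmost(void *native_window, int enable);

#ifdef __cplusplus
}
#endif

/* ---- hypothetical window_wrapper.c: backend chosen at compile time ---- */
#if defined(_WIN32)
#include <windows.h>
int ww_set_topmost(void *native_window, int enable) {
    if (native_window == NULL) return -1;
    /* Change only the Z-order; SWP_NOMOVE | SWP_NOSIZE keep position and size. */
    return SetWindowPos((HWND)native_window,
                        enable ? HWND_TOPMOST : HWND_NOTOPMOST,
                        0, 0, 0, 0, SWP_NOMOVE | SWP_NOSIZE) ? 0 : -1;
}
#else
/* macOS: could forward to a C function defined in an Objective-C (.m) file.
   Linux: could use X11. Neither backend is implemented in this sketch. */
int ww_set_topmost(void *native_window, int enable) {
    (void)native_window;
    (void)enable;
    return -1;
}
#endif
```

LabVIEW would only ever see `ww_set_topmost()` through a Call Library Function Node, regardless of which platform the DLL/shared library was built for.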

-

OK, the terminal was renamed from "Phase increment" to "Frequency (periods/tick)" in LabVIEW 2014. I didn't realize this before, but they mean the same thing. Look carefully at Fig 2 in the example again: the example VI takes an unsigned 32-bit integer in the "Phase increment" input. This datatype cannot represent fractions, so you multiply by 2^32 to scale your input for maximum resolution. However, your version (LabVIEW 2014) uses a fixed-point number instead of an integer. This datatype can represent fractions, so you no longer need to multiply by 2^32.

As I said before, your VI is not the same as what's shown in the example. The sine wave generator uses the top-level clock by default, which is 40 MHz. If you want, you can use a derived clock by unchecking "Use top-level clock" and entering a custom clock frequency.

The "Frequency"/"Phase increment" input is the ratio of your sine wave frequency to the clock frequency used by this VI. You don't divide by 1.25 MHz. Your top-level FPGA clock is 40 MHz, so:
- If you want a 400 kHz sine wave, Phase Increment = 400k/40M = 0.01 periods/tick
- If you want a 4 MHz sine wave, Phase Increment = 4M/40M = 0.1 periods/tick
- If you want a 40 MHz sine wave, Phase Increment = 40M/40M = 1 period/tick (this is not possible, though)
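The arithmetic above, sketched in C for both versions of the input: the fractional "periods/tick" value (LabVIEW 2014's fixed-point terminal) and the same ratio scaled by 2^32 for the older U32 "Phase increment" terminal. The function names are hypothetical; the sketch rounds to the nearest integer and rejects ratios that would need 2^32 or more, which is why a ratio of 1 fails.

```c
#include <stdint.h>

/* Ratio of output frequency to the clock driving the generator:
   the fixed-point "Frequency (periods/tick)" value. */
static double periods_per_tick(double f_out_hz, double f_clk_hz) {
    return f_out_hz / f_clk_hz;
}

/* Same ratio scaled for the older U32 "Phase increment" input, where
   2^32 would be one full period per tick. Returns -1 if the ratio is
   negative or rounds to 2^32 or more (not representable in a U32). */
static int u32_phase_increment(double f_out_hz, double f_clk_hz, uint32_t *inc) {
    double ratio = periods_per_tick(f_out_hz, f_clk_hz);
    double scaled = ratio * 4294967296.0 + 0.5;   /* 4294967296 = 2^32; +0.5 rounds */
    if (ratio < 0.0 || scaled >= 4294967296.0) return -1;
    *inc = (uint32_t)scaled;                      /* truncating after +0.5 rounds to nearest */
    return 0;
}
```

With a 40 MHz clock, a 400 kHz output gives 0.01 periods/tick, or 42949673 when scaled for the U32 terminal.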

-

OK, those are pretty low frequencies. There should be no problems there. Strange... OK, the Tektronix is a good quality scope. There should be no problems there either. You're right, but it's much easier to get things working in Scan Mode. I suggested it as a preliminary test, to ensure that your hardware is working properly. (If you can't get it to work using RT code, then you won't get it to work using FPGA code.) I haven't used these VIs myself, but the VI in your diagram looks different from the one in the NI example. Yours is http://zone.ni.com/reference/en-XX/help/371599K-01/lvfpga/sine_generator/ which doesn't seem to have a phase increment terminal. Anyway, the formula in the NI example does not give you a frequency. It is a percentage, scaled to fit an unsigned 32-bit integer (2^32 = 100%, 0 = 0%).
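Going the other way helps show why the U32 value is not itself a frequency: it is a fraction of a period (out of 2^32), and you only get Hz by multiplying that fraction by the clock rate. A minimal sketch with hypothetical function names:

```c
#include <stdint.h>

/* Fraction of a full period advanced per tick; 2^32 corresponds to 100%. */
static double fraction_of_period(uint32_t phase_increment) {
    return (double)phase_increment / 4294967296.0;
}

/* Output frequency = fraction per tick * ticks per second. */
static double output_frequency_hz(uint32_t phase_increment, double f_clk_hz) {
    return fraction_of_period(phase_increment) * f_clk_hz;
}
```

For example, a phase increment of 2^31 is 50% of a period per tick, i.e. 20 MHz with a 40 MHz clock.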

-

This is just another case of the Law of Leaky Abstractions: http://www.joelonsoftware.com/articles/LeakyAbstractions.html LabVIEW abstracts away our need to manage every thread manually. However, every so often, we find ourselves in a position where we need to understand how threads work and how LabVIEW hides these details from us.

-

Hi, what frequency is your output sine wave? Do you get better results if you use lower frequencies? How are you measuring the output? Do you get better results if you use RT code and the Scan Engine (instead of the FPGA) to output the waveform? (RT code can handle floating-point numbers, so you're not restricted to fixed-point.)

-

I'm interested in the topic of manipulating HWNDs directly. Could you guys kindly list some example use cases?

-

Please vote for this idea: http://forums.ni.com/t5/LabVIEW-Idea-Exchange/Font-size-standardization/idi-p/1405022 Someone made a tool to compensate for this issue (I haven't used it myself though): https://decibel.ni.com/content/groups/ui/blog/2013/06/07/font-sizes-in-labview--conversion-utility-vi

-

There are 2 major problems:
- You did not allocate any memory for PixArray, so your C code is writing into memory that belongs to someone else. That's why your memory got corrupted.
- You treated the IMAQ Image LabVIEW wire as a raw array of integers in C. That's the wrong data structure.

Anyway, why not just use IMAQ ImageToArray.vi?

I wouldn't say that. Always try to understand why you are having memory problems. If you ignore them, you might one day find that your important data got destroyed.
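For the first problem, the usual fix is for the caller to own the buffer and tell the C function how big it is, so the function can refuse to write past the end. Below is a minimal sketch; `fill_gradient` is a hypothetical function that writes into a plain 2D array of U32 pixels (not an IMAQ Image). On the LabVIEW side, one common approach is to pre-size the array on the diagram (for example with Initialize Array) before passing it to the Call Library Function Node.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical DLL function: writes into a buffer that the CALLER allocated.
   It never allocates, and it refuses to write past buf_len elements. */
static int fill_gradient(uint32_t *pix_array, size_t buf_len, int width, int height) {
    if (pix_array == NULL || width <= 0 || height <= 0) return -1;
    if ((size_t)width * (size_t)height > buf_len) return -1;   /* buffer too small */
    for (int y = 0; y < height; y++)
        for (int x = 0; x < width; x++)
            pix_array[(size_t)y * width + x] = (uint32_t)(x + y);
    return 0;
}
```

Passing the length alongside the pointer turns "silently corrupt someone else's memory" into an error code the caller can check.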

-

Hi, pay attention to the error messages that you get. They will provide clues for you to find out what's wrong. Show us your LabVIEW code. First of all, you must know that 32-bit CPUs always read in multiples of 4 bytes. See this article: http://www.ibm.com/developerworks/library/pa-dalign/ So, even if the 4th byte is unused, it is most efficient to let each pixel occupy 4 bytes. If each pixel occupies only 3 bytes, then you will get poor performance for read/write operations. But anyway, the 4th byte is commonly used to store the alpha channel (transparency).
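A small sketch of a 4-byte pixel: storing each pixel as one 32-bit word keeps every pixel 4-byte aligned (with 3-byte pixels, pixel 1 would start at byte 3), and the spare byte holds alpha. The 0xAARRGGBB layout here is an assumption for illustration; check which byte order your image library actually uses before relying on it.

```c
#include <stdint.h>

/* One 32-bit word per pixel. Assumed layout: 0xAARRGGBB. */
typedef uint32_t Pixel;

_Static_assert(sizeof(Pixel) == 4, "each pixel should occupy exactly 4 bytes");

static Pixel pack_argb(uint8_t a, uint8_t r, uint8_t g, uint8_t b) {
    return ((Pixel)a << 24) | ((Pixel)r << 16) | ((Pixel)g << 8) | (Pixel)b;
}

static uint8_t alpha_of(Pixel p) { return (uint8_t)(p >> 24); }
static uint8_t red_of(Pixel p)   { return (uint8_t)(p >> 16); }
static uint8_t green_of(Pixel p) { return (uint8_t)(p >> 8); }
static uint8_t blue_of(Pixel p)  { return (uint8_t)p; }
```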

-

Thanks, everyone, for the insightful discussion. I agree that it's most sensible to perform cleanups even if an error occurred, and the Network Stream example sounds like a design flaw. Aside from cleanups, though, I can't think of any other good cases for code that should run regardless of error. Do you guys do this in non-cleanup code? As for accessors, I agree that read VIs for a simple number shouldn't have error I/O terminals. I still put them in write VIs, though: I avoid modifying my object's state if an error occurred beforehand. Does anyone feel strongly against this?

-

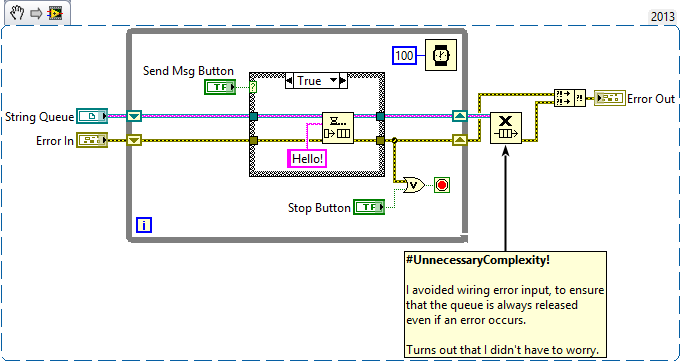

Hello, for the past 2+ years, I've always thought that official LabVIEW nodes/VIs do nothing (and only output default values) if they receive an error in their "Error In" terminal. As a result, I wrote code like this: (I thought that a memory leak could occur if an error input stopped Release Queue from doing its usual job) Today, I discovered that nodes like Destroy User Event and Release Queue actually still work even if they receive an error. I guess this behaviour makes sense. However, I'm now wondering if there's a definitive guide on how errors should affect code execution: Should I expect all nodes/VIs to do nothing if they receive an error, except for "cleanup" nodes/VIs? The reason I investigated this is because I'm writing a library that uses an initialize-work-deinitialize pattern. To be consistent with official LabVIEW nodes/VIs, it looks like my "Initialize" and "Work" VIs should do nothing if they receive an error, but my "Deinitialize" VI should still attempt to free resources even if it receives an error. Would you agree?
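The convention proposed above, expressed as a C analogue of error-in/error-out: "Initialize" and "Work" skip if an error came in, while "Deinitialize" always frees resources and passes the incoming error through untouched. The `Error` struct stands in for a LabVIEW error cluster, and all names and error codes are hypothetical.

```c
#include <stdlib.h>

enum { CAPACITY = 16 };

/* Hypothetical stand-in for a LabVIEW error cluster. */
typedef struct { int status; int code; } Error;

typedef struct { int *buffer; int count; } Resource;

/* Initialize: does nothing if an upstream error exists. */
static Resource *lib_initialize(Error *err) {
    if (err->status) return NULL;
    Resource *r = calloc(1, sizeof *r);
    if (r != NULL) r->buffer = calloc(CAPACITY, sizeof *r->buffer);
    if (r == NULL || r->buffer == NULL) {
        free(r);
        err->status = 1;
        err->code = -1;                 /* hypothetical "out of memory" code */
        return NULL;
    }
    return r;
}

/* Work: also skipped on an incoming error, so state is never modified after a failure. */
static void lib_work(Resource *r, int value, Error *err) {
    if (err->status) return;
    if (r == NULL || r->count >= CAPACITY) {
        err->status = 1;
        err->code = -2;                 /* hypothetical "invalid state" code */
        return;
    }
    r->buffer[r->count++] = value;
}

/* Deinitialize: runs even if an error came in, so resources are always freed.
   The incoming error is passed through unchanged. */
static void lib_deinitialize(Resource *r, Error *err) {
    (void)err;
    if (r == NULL) return;
    free(r->buffer);
    free(r);
}
```

This mirrors the behaviour described for Release Queue and Destroy User Event: only the cleanup step ignores the incoming error.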

-

Parallel process significantly slower in runtime than in Development

JKSH replied to Wim's topic in LabVIEW General

Prepare to lose productivity: http://thedailywtf.com/

-


-

Wow. How much time have you been allocated? Are there any other LabVIEW developers in the company you can talk to? Here are some official tutorials: http://www.ni.com/academic/students/learn-labview/ (You probably don't need #7: Signal Processing) Good luck!

-

Hi, I think your best bet is to create a wrapper DLL that converts that string to a LabVIEW-friendly string. You can't do the conversion in LabVIEW, as there is no way to tell LabVIEW how many bytes it should read. To be precise, the string is encoded in UTF-16 (or maybe UCS-2). There are other Unicode encodings. If the string was encoded in UTF-8 (another Unicode encoding), then you would not have faced this issue, because UTF-8 is a strict superset of ASCII. That means when you convert an "ASCII string" to UTF-8, the output bytes look exactly the same as the input bytes. Thus, LabVIEW would be able to treat it as a plain C string. If it crashes when you use your Pascal string approach, that's probably because LabVIEW read the first character, interpreted it as a (very long) length, and then tried to read beyond the end of the string (into memory that it's not allowed to read).
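A sketch of the core of such a wrapper: convert a NUL-terminated UTF-16 string (in the host's byte order) into a NUL-terminated UTF-8 buffer that LabVIEW can read as a plain C string. The function name and the "caller supplies the output buffer" design are assumptions; on Windows, `WideCharToMultiByte()` with `CP_UTF8` would do the same job. This version handles surrogate pairs and rejects unpaired surrogates.

```c
#include <stddef.h>
#include <stdint.h>

/* Converts NUL-terminated UTF-16 (host byte order) to NUL-terminated UTF-8.
   Returns the number of bytes written (excluding the NUL), or -1 if the
   output buffer is too small or the input has an unpaired surrogate. */
static long utf16_to_utf8(const uint16_t *in, char *out, size_t out_size) {
    size_t n = 0;
    while (*in) {
        uint32_t cp = *in++;
        if (cp >= 0xD800 && cp <= 0xDBFF) {            /* high surrogate */
            if (*in < 0xDC00 || *in > 0xDFFF) return -1;
            cp = 0x10000 + ((cp - 0xD800) << 10) + (uint32_t)(*in++ - 0xDC00);
        } else if (cp >= 0xDC00 && cp <= 0xDFFF) {     /* stray low surrogate */
            return -1;
        }
        unsigned char buf[4];
        size_t len;
        if (cp < 0x80) {                               /* ASCII: 1 byte, unchanged */
            buf[0] = (unsigned char)cp; len = 1;
        } else if (cp < 0x800) {
            buf[0] = (unsigned char)(0xC0 | (cp >> 6));
            buf[1] = (unsigned char)(0x80 | (cp & 0x3F)); len = 2;
        } else if (cp < 0x10000) {
            buf[0] = (unsigned char)(0xE0 | (cp >> 12));
            buf[1] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
            buf[2] = (unsigned char)(0x80 | (cp & 0x3F)); len = 3;
        } else {
            buf[0] = (unsigned char)(0xF0 | (cp >> 18));
            buf[1] = (unsigned char)(0x80 | ((cp >> 12) & 0x3F));
            buf[2] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
            buf[3] = (unsigned char)(0x80 | (cp & 0x3F)); len = 4;
        }
        if (n + len + 1 > out_size) return -1;         /* keep room for the NUL */
        for (size_t i = 0; i < len; i++) out[n++] = (char)buf[i];
    }
    if (n + 1 > out_size) return -1;
    out[n] = '\0';
    return (long)n;
}
```

Note that ASCII characters come out as the same single bytes, which is exactly why LabVIEW can then treat the result as an ordinary C string.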

-

That's quite an obscure problem. Thanks for sharing your solution!

-

How can I share labview vi with android devices

JKSH replied to haalem's topic in Remote Control, Monitoring and the Internet

I... can't decide if I'm impressed or not! I have a Microsoft Surface Pro 2 and I find it a good fit for LabVIEW programming. I've done it many times on the bus/train on the way to work. I can install LabVIEW (to open files locally), I have a keyboard (for typing, keyboard shortcuts, and Quick Drop), and I have a stylus (for drag+drop, wiring, opening menus, etc.). The stylus is as fast as a mouse: Pen-tap = Left-click, Pen-button+Pen-tap = Right-click. It has an active digitizer, so hovering the pen across the screen moves the cursor (like moving the mouse). In contrast, dragging the pen tip across the screen is like dragging the mouse. The one thing it doesn't fully replace is the mouse wheel, but I have Ctrl+Shift+Drag for scrolling the FP/BD. I've always found the mouse wheel too slow for scrolling FP arrays, so I don't use it anyway.

-

How can I share labview vi with android devices

JKSH replied to haalem's topic in Remote Control, Monitoring and the Internet

Have a look at Data Dashboard: http://www.ni.com/tutorial/13757/en/ Your picture shows a LabVIEW editor on the mobile phone; that's not possible right now.