-

Posts

720 -

Joined

-

Last visited

-

Days Won

81

Everything posted by LogMAN

-

edk.dll missing eventhough in the same directory

LogMAN replied to David Yee's topic in LabVIEW General

Maybe I'm missing something, but I don't see any edk.dll in your screenshots, just the edk.lib... Anyway, here are a couple of things you could try (assuming we are talking about a C DLL):
-> Check that the Call Library Function node points to the DLL file (the path should be absolute, imo)
-> Look at the dependency tree to see whether the file is listed there (it does not seem to be part of your project)
-> Include the DLL file in your project
-> Put the DLL next to LabVIEW.exe (in Program Files\National Instruments\LabVIEW <version>) and try to start your application again (if it works, you have most likely specified the DLL file name in the Call Library Function instead of the path)

-

Erroneous output with the String to 1D Array

LogMAN replied to JKSH's topic in OpenG General Discussions

I've never done that, but I know there must be some option when you open a new ticket (it might depend on the project). Some projects I follow provide a category called 'patches' for such things. It seems there has been movement on your bug reports a couple of days ago, so that's a good sign, I would say.

-

Erroneous output with the String to 1D Array

LogMAN replied to JKSH's topic in OpenG General Discussions

SourceForge is the best starting point in addition to this forum. However, the project has not developed much over the last couple of years (there are quite a lot of bugs filed with no development for almost a year). You could fork the project for your own purposes and upload patches as you file bug reports, or maybe become part of the team yourself (if one of the members allows that). The project might be dead if none of the developers is interested in continuing it. In that case you could still fork the project, but there is little chance that anybody would benefit from your patches (unless there is a way to fork a project and upload new versions to the VI Package Network...)

-

Your link explains a way to merge multiple sheets into a single workbook. You'll end up with a single workbook containing all data, same as before. The fact that you copy pieces differently does not change the fact that all data will be loaded into memory each time Excel loads the workbook (This is just how Excel works). Since the amount of data is the same, the problem will remain. You'll always experience the same limitations no matter how you build your workbook.

-

Wow, that's a new one for me... Could not believe it at first, but it does work. Also just tested with a cluster containing strings and it works too (only if the cluster contains string and numeric types, but hey)! I've never used it for anything other than a string or an array of strings up till now. Thanks for sharing, Neil!

-

REx - Remote Export Framework and Remote Events

LogMAN replied to Norm Kirchner's topic in Application Design & Architecture

Thanks for the port! I've just tested on my machine with LV2011 and it works just fine. I was able to run the DEMO Command Sender.vi & DEMO Command Listener.vi in the development environment, as well as the sender in the IDE and the listener as a separate executable. No relinking issues or any kind of problem with file versions so far.

EDIT: Well ok, the Rex_Sprinboard library seems to be missing some files for generating user icons and such. I guess that's just not available in LV2011. It's missing files from ..\vi.lib\LabVIEW Icon API\* Is my installation missing something?

- 34 replies

-

My bad, I already forgot it had to be 64 columns on each page... I've tested again and hit the same limitation in the 32-bit environment (with Excel using up to ~1.7GB of memory according to the Task Manager).

Your system is more than sufficient for that operation (unless, as rolfk correctly pointed out, you use the 32-bit environment).

This is absolutely right. There is, however, one thing: Excel runs in a separate process and owns a separate virtual address space of 2GB in 32-bit mode. So you can only create a workbook of that size if there is no memory leak in the LabVIEW application. In my case LabVIEW takes up to 500MB of memory, while Excel takes 1.7GB at the same time. If the references are not closed properly, you'll hit the limit much earlier, because LabVIEW itself runs out of memory. In my case the ActiveX node throws an exception without anything running out of memory (well, Excel is out of memory, but the application would not fail if I handled the error). I have never tried to connect to Excel over .NET... Worth a shot?

jatinpatel1489: as already said a couple of posts ago: use a database to keep that amount of data. If the data represents a large amount of measurement data, TDMS files are one way to go (I've tested with a single group + channel and a file size of ~7.5GB containing 1 billion values -> a milliard for the European friends). You can import portions of the data into Excel (or use a system like NI DIAdem... I've never used it, though). If it must be Excel, there are other ways, like linking Excel to the database (using a database connection). Last but not least, you could switch to 64-bit LabVIEW + 64-bit Excel in order to unleash the full power of your system.
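To illustrate the database suggestion, here is a minimal sketch in Python rather than LabVIEW. It assumes an SQLite database and a hypothetical table called `samples`; neither comes from the thread. The point is only that values are stored in batches and that a small portion can be read back later, for example to hand over to Excel.

```python
import sqlite3
from itertools import islice


def store_in_batches(conn, values, batch_size=10_000):
    """Insert values batch by batch so the full data set never sits in one list."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS samples (idx INTEGER PRIMARY KEY, value REAL)"
    )
    it = iter(values)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            break
        conn.executemany(
            "INSERT INTO samples (value) VALUES (?)", ((v,) for v in batch)
        )
    conn.commit()


def read_portion(conn, first_idx, count):
    """Return `count` values starting at row `first_idx` (row numbers start at 1)."""
    rows = conn.execute(
        "SELECT value FROM samples WHERE idx >= ? ORDER BY idx LIMIT ?",
        (first_idx, count),
    )
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")  # assumption: replace with a file path for real data
store_in_batches(conn, (i * 0.5 for i in range(100_000)))
portion = read_portion(conn, 1, 5)
total_rows = conn.execute("SELECT COUNT(*) FROM samples").fetchone()[0]
```

Only `portion` ever needs to be held in memory by the consumer, no matter how many rows the table contains.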

-

OK, here are the latest results: This time I let the application create 6 sheets with 20 columns and 600,000 rows each (writing 2 rows at a time). Notice that the values are of type DBL (same as before). Memory for Excel built up to 1.3GB, but everything still works perfectly for me.

My advice for you is to check whether your RAM is sufficient, and to review the implementation of the Excel ActiveX interface. Be sure to close any open references! Without more information about your actual system (RAM) and at least a screenshot of your implementation, it is very difficult to give any more advice. If you could share a sample project that fails on your system, I could test it on mine and check for problems in the Excel interface.

Here is a screenshot of what I did (sorry, but I can't share the implementation of the Excel interface)

-

LavaG is kidding me right now...

Again: I would recommend a database. However, one of my colleagues implemented something similar in the past (exporting huge datasets from a database). You should carefully check the RAM utilization of your system (do you keep all data in Excel + LabVIEW?).

I tested on my machine (LV2011SP1) with 4GB RAM and Excel 2013 using the ActiveX interface, writing 64 columns with 600,000 random DBL numbers to a single sheet, one row at a time. It works like a charm, but Excel now uses 650MB of RAM.

EDIT: Just checked what would happen if I build all data in LabVIEW first and then export to Excel: LabVIEW now takes ~1.6GB of memory and throws an error: LabVIEW Memory is full

The issue is due to the 32-bit environment, where a single process can only handle up to 2GB of virtual address space, see: http://msdn.microsoft.com/en-us/library/windows/desktop/aa366912(v=vs.85).aspx

The issue is also caused by my way of managing memory (reallocating memory each iteration!)

Just checked again: I tried to copy all data at once, which means copying a contiguous block of 64x600,000 array elements (which might not be possible if there is no free block large enough to hold this amount of data)... Anyway, the issue is clearly not Excel, but the implementation in LabVIEW (in my case).
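For a feeling of the sizes involved, here is the arithmetic as a small Python sketch. The only assumptions are that a DBL takes 8 bytes and that the usable address space is the 2GB mentioned above; array headers and any other overhead are ignored.

```python
BYTES_PER_DBL = 8                    # a DBL is a 64-bit floating-point value
ADDRESS_SPACE_32BIT = 2 * 1024**3    # 2GB of virtual address space per process


def array_bytes(rows, columns, bytes_per_value=BYTES_PER_DBL):
    """Size of the raw data of a 2D array, ignoring any header or padding."""
    return rows * columns * bytes_per_value


block = array_bytes(600_000, 64)          # one copy of the full data set
block_mib = block / 1024**2               # the same size in MiB
share = block / ADDRESS_SPACE_32BIT       # fraction of the 2GB address space
print(f"{block} bytes = {block_mib:.1f} MiB = {share:.1%} of 2GB")
```

A single copy is about 293 MiB, roughly 14% of the address space, and it has to fit into one contiguous free region. Every additional copy of the data needs another region of that size, which is why a fragmented 32-bit process can fail long before 2GB are actually in use.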

-

Strange delays in loading LabVIEW Vi that call .NET

LogMAN replied to dannyt's topic in Calling External Code

This issue is quite common. You might be interested in this article: http://digital.ni.com/public.nsf/allkb/7DC537BCD6E679C3862577D9007B799E It describes two workarounds, where the first one matches your solution. I prefer the second workaround, because it is easier to implement in the application itself (create if not exists).

-

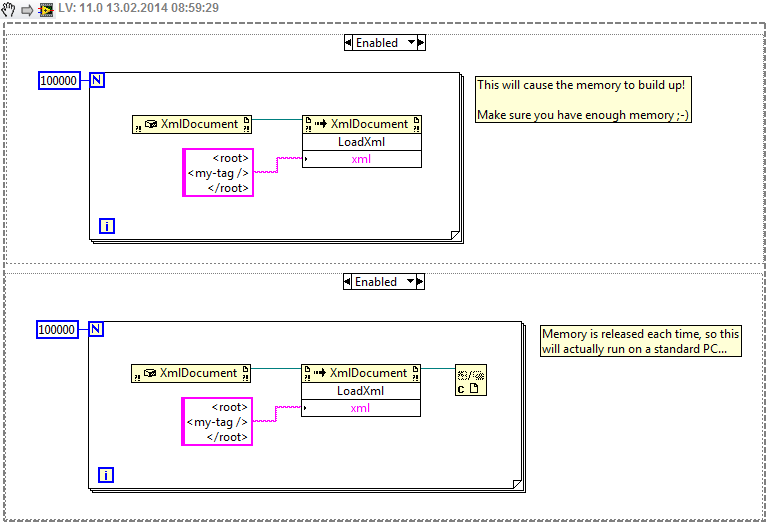

.net Events in LabVIEW causing memory leak ?

LogMAN replied to Runjhun's topic in Application Design & Architecture

Could you provide an example project? There have certainly been memory leaks with some .NET libraries (Ping, for example), but it is hard to tell without seeing the actual implementation. However, the usual issue is related to references that are not released (using the 'Close Reference' function from the .NET palette). I've attached a code example with two implementations of the same function (System.Xml.XmlDocument): one releases the reference, the other does not. Disable either of them and watch the memory usage.

- 11 replies

-

I know these figures very well. I would like to see the code, but my current system does not have a LV2013 installation. Do you run your code on a standard OS/hardware (e.g. Windows 7 on a PC)? For now I assume you do: general-purpose operating systems and hardware do not guarantee real-time capabilities, so the kernel will pause any thread whenever it sees fit. In my past tests the time sometimes spiked up to 400ms instead of the required 10ms, resulting in such graphs (even if the priority is set to 'critical'). You could let external hardware do the acquisition and stream directly to disk. There might be other solutions, though.
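The effect is easy to reproduce outside of LabVIEW. The following is a minimal Python sketch, not the code from this thread: it requests a 10ms period a number of times and records how long each iteration really took. The function name and the iteration count are made up for illustration.

```python
import time


def measure_periods(period_s=0.010, iterations=50):
    """Sleep for `period_s` repeatedly and record the actual duration of each iteration."""
    durations = []
    last = time.perf_counter()
    for _ in range(iterations):
        time.sleep(period_s)          # the OS may resume this thread later than requested
        now = time.perf_counter()
        durations.append(now - last)
        last = now
    return durations


durations = measure_periods()
worst_ms = max(durations) * 1000
print(f"requested 10.0 ms, worst observed {worst_ms:.1f} ms")
```

On a desktop OS the worst case varies from run to run and grows when the machine is under load, which is the kind of spike described above.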

-

My understanding of this is far too basic, but maybe this thread is of interest to you: http://lavag.org/topic/17123-lvoop-with-dvrs-to-reduce-memory-copies-sanity-check

-

You should be able to view the code of the toolkit. All VIs are basically wrappers around an ADODB Connection. The function you use to receive parameters does that on purpose (using ADODB functions!), but only if the database supports it (pros & cons are listed in that VI too). There is a whitepaper from NI that describes the DCT: http://www.ni.com/white-paper/3563/en/ One statement says: Now if there is a native way to receive a list of stored procedures using an 'ADO-compliant OLE DB provider or ODBC driver', you could post an idea in the Idea Exchange.

-

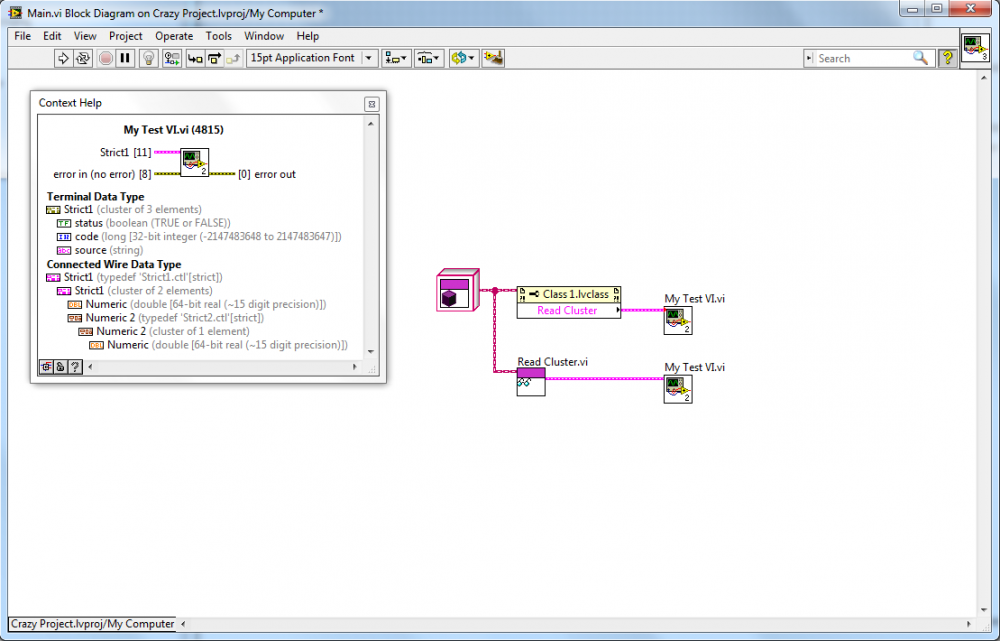

Do you use strict typedefs for your properties? Did you try to replace the VIs by their properties after saving for previous version?

Some months ago I ran into various problems related to property nodes. In LV2011 the IDE got very slow and almost unresponsive... No problem without properties... Anyway, I reached a point where the entire IDE would just crash at load time for class private data! So I upgraded that particular project to LV2013, where I noticed an odd behavior (that had been there even in LV2011, but did not catch my eye until then): If you wire a strict typedef from a property node to a VI with the same typedef, a coercion dot will appear once you change a font in that typedef, or anything else that is only available for strict typedefs. In LV2013 you get an explanation of the connected data types in the context help. The source is presented as type 'error in' or some other unrelated type... Once you place the VI instead of the property node, everything is fine.

According to NI, this has something to do with the way LabVIEW updates the typedef. I did not get many details, but basically the property node remains with an 'old' or rather 'partial-new' version of the typedef, since a property has no FP, while the VI is updated with the entire 'new' typedef, thus leaving the IDE with "two" typedef revisions at the same time...

See the picture and notice that there is a coercion dot and the Main-VI is not broken, even though the context help makes me ... ... ... ! (this is LV2013) Also notice that the same implementation without a property node has no coercion dot! Not to mention that the terminal data type according to the context help is of type 'error in', but correctly named 'Strict1', and the connected wire data type represents the correct type <there is no emote to display how I feel about this, maybe if LavaG had something... exploding... no?>

This is (according to NI and my experience so far) just an IDE issue and has no known effect on the built exe (the code is not even broken). NI is informed of course, but there will not be an update for this until LV2014 or later. Anyway, I wonder if what you experience might be related (LV2010 could have even more issues with this than LV2011). From what you've written, it kind of fits.

-

Library & Class Naming Convention Advice

LogMAN replied to MartinMcD's topic in Application Design & Architecture

The easiest solution would be to rename the private method to "PrivateReadDevice.vi", as the private interface can still be changed anytime without side effects on implementations (like linking issues). However, your example should be two separate classes in my opinion: one for serialized access (i.e. the implementation of a driver) and a second class for access in terms of a messaging system to an async. state machine/process which implements the driver.

-

I did not have a chance to test it yet, but maybe that's worth a look? http://lavag.org/topic/17024-rex-remote-export-framework-and-remote-events/

-

Library & Class Naming Convention Advice

LogMAN replied to MartinMcD's topic in Application Design & Architecture

shoneill is right, having a convention is a very important thing. (I used to change my 'convention' for various situational and sometimes stupid reasons, so code got messy or time ran out.) There are some things to consider; this is what I could think of (speaking in general, not specifically for libraries or classes):

Prefixes -> Use libraries/classes instead (you'll get a namespace 'Library.lvlib::Member.vi'). How would that look with prefixes: 'prefix_Library.lvlib::prefix_Member.vi'... You have to organize the files on disk to prevent name conflicts, though. If there is a need to prefix everything, just change the library/class name (linking issues are conveniently easy to solve this way in my experience).

Spaces -> One of the most obvious things that make LabVIEW different from languages like C. Good for starters and easy to read. However, if you build DLL files (or enable ActiveX, afaik), spaces are a problem.

CamelCase -> Sometimes hard to read and maintain, but in combination with libraries the most flexible one in my opinion. Makes it easier to reason with people from the other side.

CamelCase in combination with libraries/classes is what I actually use, even though it is sometimes painful to stick to (ever batch-created accessor VIs for class members? I'm always having fun renaming all of them). As you're asking about libraries & classes specifically: I don't use prefixes (they tend to change on my end...) and I have trouble writing well-named VIs that include spaces (would you write 'Write to Disk' or 'Write To Disk'?). Therefore CamelCase is a good solution for me ('WriteToDisk'). I also no longer create subfolders on disk to organize class files, but virtual folders in the project only (how I hated moving files because they are in a different category for some stupid reason...). Also, library (*.lvlib) and class (*.lvclass) files are within their particular subfolder on disk. If I now move a library/class to a different section, I just have to drag the entire folder. This works quite well with SVN too. It would be interesting to see how everybody else handles such things...

EDIT: Error in database, but still posted? Sorry for this triple post.

-
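To make the naming comparison from the naming convention post above concrete, here is a tiny Python sketch. Both helper functions are made up for illustration; they only show that 'Write to Disk' and 'Write To Disk' collapse to the same CamelCase name and how the library namespace is composed.

```python
def to_camel_case(name):
    """Join the words of a spaced VI name, capitalizing the first letter of each word."""
    return "".join(word[:1].upper() + word[1:] for word in name.split())


def qualified_name(library, member):
    """Compose the namespaced form 'Library.lvlib::Member.vi'."""
    return f"{library}.lvlib::{member}.vi"


first = to_camel_case("Write to Disk")
second = to_camel_case("Write To Disk")
full = qualified_name("Library", first)
```

Both spellings end up as `WriteToDisk`, so the question of how to capitalize 'to' disappears, and the library name takes over the job of a prefix.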

I have never implemented this function with a remote target, so I can only reason with the little information I have: Judging from the help, your solution should work fine. However, it says: vi path accepts a string containing the name of the VI... and furthermore: Note If you specify a remote application instance with application reference, the path is interpreted on the remote machine in the context of the remote file system... It explicitly says path and not string or path, so I'm not sure if your problem is the expected behavior or a bug (I could argue for either of them). Anyway, in my opinion the application instance perfectly identifies the target and there should be no problem with either a string or a path.

-

Dynamic Dispatch: Bug or Expected Behavior

LogMAN replied to GregFreeman's topic in Object-Oriented Programming

The last time I had such an issue, it was related to the 'Separate Compiled Code' option in combination with revision control: I reverted one of my components in SVN and for some reason it was not recompiled automatically (broken-unbroken issue)... After hours of searching, an NI engineer suggested clearing the compiled object cache, which solved the problem entirely. Not sure if that's related.

-

I may have got confused. So this is basically a different implementation of the actor model? The only one I've ever used up till now is the "Actor Framework" that is now also shipped with LabVIEW. http://lavag.org/files/file/220-messenging/

Never mind. Memo to myself: Actor Framework != Only possible way & RTFM.

It's fine, I too often consider more advanced implementations over the ones which are "recommended". It is just harder for a beginner (like me for this one) to learn multiple specialized modules than a single one. The NI version does everything using standard tools that are shipped with LabVIEW (even though it is far from being "easy to understand for beginners"). I favor your implementation of asynchronous calls for scalability. The NI version is good, but 3 or 4 parallel loops in a single VI are too much for me to stay calm (always trying to find a "better" solution).

I was trying to say the exact same thing, however my initial statement has become irrelevant for this matter. You are not bypassing actor messages with your message system, it's just a different implementation of the actor model. I was comparing apples with oranges and got confused about something that was missing... Thinking about it from the new point of view, your implementation might be less complex than the "Actor Framework" I was talking about earlier.

The name sounds familiar and I think he is the one (big guy with a full beard). It's too long ago for me to be sure, though. I just remembered a warning about bypassing the actor messaging using any kind of secondary system, since that would defeat the idea behind the actor framework model. But that was entirely related to the "Actor Framework" and some crazy complex project (and yes, it was way too complicated).

Now I'm getting excited to try the Messaging package... So many things I want to know, but no time at all

-

I tested it and it works fine. One dependency was missing, but this is easy to solve: http://lavag.org/files/file/235-cyclic-table-probes/

Like neil, my time is limited right now and this is also not my specialty. I'll give my opinion anyway (notice, I had like 10min for this): I think your code is much easier to extend (while maintaining readability, that is) than the NI version, but the complexity is on a much higher level (Actor Framework, JKI State Machine, Messaging Library).

Anyway, there is one thing bothering me regarding the coupling of your actors. An actor is defined by its messages. You could bypass that by sharing references or reference-like objects that allow direct access to actor-specific data or functions, thus eliminating the need to use any actor message at all. If I understand your code correctly, the actor messaging system is bypassed by the messaging library. Now, would it make any difference if you called an asynchronous VI instead of the actors?

There has been a webcast about a big project that was solved using the actor framework, but I can't recall the name or find it... It involved a flexible UI where some actors are bypassed, as well as sharing actor objects with real-time targets and network sharing. The presenter did a very good job of reasoning about the downsides of some of the things they did. Does somebody know which webcast I mean? (NI Week?)

I hope my explanation is understandable. Maybe some actor framework guru could give their opinion. Feel free to correct me.
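To show what "an actor is defined by its messages" means, here is a minimal sketch in Python. It is not the Actor Framework or the Messaging library; the class `CounterActor` and its message names are invented for this example. The actor's state is only changed by its own loop, and the outside world can only send messages.

```python
import queue
import threading


class CounterActor:
    """Hypothetical actor: its state is only touched by its own message loop."""

    def __init__(self):
        self._inbox = queue.Queue()
        self._count = 0  # private state; reading or writing it from outside bypasses the actor
        self._thread = threading.Thread(target=self._run)
        self._thread.start()

    def send(self, name, payload=None):
        """The only supported way to interact with the actor."""
        self._inbox.put((name, payload))

    def _run(self):
        while True:
            name, payload = self._inbox.get()
            if name == "add":
                self._count += payload
            elif name == "get":
                payload.put(self._count)  # reply through a queue supplied by the sender
            elif name == "stop":
                break

    def join(self):
        self._thread.join()


actor = CounterActor()
actor.send("add", 2)
actor.send("add", 3)
reply = queue.Queue()
actor.send("get", reply)
total = reply.get(timeout=5)
actor.send("stop")
actor.join()
```

Handing out a reference to `_count` (or to anything else that lets other code change it directly) would work technically, but then the messages no longer describe everything the actor does, which is the coupling concern raised above.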