Everything posted by ensegre

-

Yesterday I played around a little with constructing an absolute path inside muLibPath.vi, much as you have now done in the Windows case, and the benchmark started crawling. Keeping the libraries in the same dir as muLibPath.vi may simplify matters in the IDE but, right, you have to do something for the exe. So yes, either devising the path in Construct.vi and passing it along with the class data, or having an installation script fix the return value of an inlined subVI and save it, look to me like ways to go.

- 172 replies

-

Maybe that is an output-only argument to mupGetExprVar? It would make little sense for the call sequence to have a completely transparent argument. In any case, have you noted the shift register for that pointer? This should keep the case of N=0 exprs from crashing. Apropos of which, mupGetExprVars still crashes if called with Parser in=0; that should perhaps be trapped in a production version.

Yes. Probably sloppiness of the author, who didn't push the version number everywhere (I added the X32 and X64 in the names on my own, though).

About the choice of the library, I'm still thinking about the best strategy for muLibPath.vi. If one puts more complex path logic into it, performance suffers badly, as the VI is called for every single CLN. Probably the best is to make an inlined VI out of it, containing only a single path indicator, whose default value is assigned once and for all by an installation script. I hope that the compiler is then able to optimize it as a hardwired constant.
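The "resolve the path once, then reuse it" idea is not specific to LabVIEW. As a rough text-language analogy (Python, since G cannot be shown as text), the sketch below picks a library file name by OS and bitness and caches the result, so that the path logic runs only on the first call. The function names, the file names and the directory layout are illustrative assumptions, not those of the actual lvlib.

```python
import functools
import os
import platform
import struct

# Hypothetical file names, keyed by (OS name, pointer size in bits).
_LIB_NAMES = {
    ("Windows", 32): "muparserX32.dll",
    ("Windows", 64): "muparserX64.dll",
    ("Linux", 32): "libmuparserX32.so",
    ("Linux", 64): "libmuparserX64.so",
}


def pick_lib_name(system, bits):
    """Return the library file name for a given OS and bitness."""
    try:
        return _LIB_NAMES[(system, bits)]
    except KeyError:
        raise OSError(f"no muparser build listed for {system} {bits}-bit")


@functools.lru_cache(maxsize=None)
def mu_lib_path(base_dir):
    """Build the absolute library path once; later calls hit the cache."""
    bits = struct.calcsize("P") * 8  # 32 or 64, for the running interpreter
    name = pick_lib_name(platform.system(), bits)
    return os.path.abspath(os.path.join(base_dir, name))
```

Here the cache plays the role of the hardwired constant: every call after the first returns the stored string without redoing any path arithmetic.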

- 172 replies

-

Debugging off: I realized that it is for inlining. Compiled code: I had downloaded your first commit on LAVA rather than the latest github. 2015, please: mupLib.zip

If ever useful: libmuparser.zip, compiled .so for X32 and X64 on ubuntu16. As they are dynamic, I suspect they may themselves depend on some other system lib, and hence be particular to that distro.

I'd also think of a benchmark which iterates on repeated open/evaluate/close. I see it as a frequent use case, and suspect that muparser might do better than the formula parser there.

- 172 replies

-

6 PC. We mean, what do you take images for? What is the bandwidth? Are the cameras synchronized? Do the images need to be analyzed together, so that some program decides something according to what is in them? Can the computers exchange messages? What latencies are tolerated? Do the images need to be streamed to disk? All these are considerations you have to take into account for a design.

-

Cracked it finally. You were passing the wrong pointer to DSDisposePtr. Here mupLib-path.zip is a corrected version of the whole lvlib. Now all examples and benchmarks run for me in LV2017 32 & 64, 64bit being marginally faster with the default eq in the benchmark.

- 172 replies

-

Ok, reporting progress:

compiled a 32bit .so of your modified library

    sudo apt-get install g++-multilib
    cd muparser-2.2.5
    ./configure --build=x86_64-pc-linux-gnu --host=i686-pc-linux CC="gcc -m32" CXX="g++ -m32" LDFLAGS="-L/lib32 -L/usr/lib32 -Lpwd/lib32 -Wl,-rpath,/lib32 -Wl,-rpath,/usr/lib32"
    make clean
    make

patched muParser.lvlib, to include a target and bitness dependent libmuparser path. Attached, with compiled code removed (orderly: I should submit a pull request on github). mupLib-path.zip

Testing on LV2017-32bit:

mupLib example.vi WORKS

all other examples crash when mupGetExprVars gets to DSDisposePtr, with a trace like

    *** Error in `labview': free(): invalid pointer: 0xf48c3a40 ***
    Aborted (core dumped)

Testing on LV2017-64bit, with system libmuparser 2.2.3:

Ditto. Only difference, a longer pointer in

    *** Error in `labview64': free(): invalid pointer: 0x00007f03aa6b78e0 ***

- 172 replies

-

Ok, I traced it down to this: for me, muParser Demo.vi crashes on its first call of DSDisposePtr in mupGetExprVars.vi. Just saying.

- 172 replies

-

I'm opening some random subvi to check which one segfaults. All those I opened were saved with allow debugging off and separate compiled code off (despite your commit message on github). Any reason for that?

- 172 replies

-

Just mentioning, if not OT. Something else not supported are booleans (ok you could use 0 and 1 and + *). In a project of mine I ended up using this, which is fine but simplistic. I don't remember about performance; considering my application it may well be that simple expressions evaluated in less than a ms.

-

It appears that it might be straightforward to make this work on linux too. In fact, I found out that I already had libmuparser2 2.2.3-3 on my system, for I dunno which other dependency. Would you consider making provisions for cross platform? Usually I wire the CLN library path to a VI providing the OS relevant string through a conditional disable; LV should have its own way, like writing just the library name without extension so that it resolves as .dll or .so in standard locations, but there may be variants.

I just gave it a quick try: replacing all dll paths with my /usr/lib/x86_64-linux-gnu/libmuparser.so.2 (LV2017 64b), I get white arrows and a majestic LV crash as I press them. Subtleties. I could help debugging later, though.

Also, to make your wrapper work with whatever version of muparser is installed systemwide, how badly does your wrapper need 2.2.5.modified? How about a version check on opening?
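A version check on opening could be as simple as comparing dotted version strings. The Python sketch below only illustrates that comparison; how the version string is obtained from the library is left out, and the minimum version shown is a made-up default, not a statement about what the wrapper actually requires.

```python
import re


def parse_version(text):
    """Extract the leading dotted number from a version string.

    '2.2.5' -> (2, 2, 5); a packaging suffix as in '2.2.3-3' is ignored.
    """
    match = re.match(r"\s*(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def version_ok(found, minimum="2.2.3"):
    """True if the found version is at least the (hypothetical) minimum."""
    return parse_version(found) >= parse_version(minimum)
```

Comparing tuples of integers avoids the classic trap of comparing strings, where "2.10.0" would sort before "2.9.0".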

- 172 replies

-

Perhaps you stacked the decoration frontmost, so that it prevents clicking on the underlying controls? Try selecting the beveled rectangle and Ctrl-Shift-J (last pulldown menu on the toolbar)

-

-

Provided that the communication parameters are correct, you should probably initialize once, read not too often, and close only when you're really done. Trying to do that 10000 times per second usually impedes communication.

-

If it is this one, you could test communications first with their software, as per page 46 of the manual, to exclude that you have wired the interface incorrectly, or that the usb dongle is defective.

-

It looks wrong from the start that you're repeatedly initializing and closing the port in a full throttle loop. Anyway, first things first: not knowing what your device is, and whether the communication parameters and the register address are the right ones, there is little we can say beyond "ah, it doesn't work". It might even be that you didn't wire the device correctly. Is that 2 wire RS485 or 4? Are you positive about polarities? Do the VIs give some error?

-

It occurs to me that the 5114 is an 8bit digitizer, so the OP could just get away with 10MB/s saving raw data. Well less actually, if I get what the OP means, it is 1000 samples acquired at 10Msps, triggered every ms, so only 1MB/s.
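Spelled out, the two figures above are just products of record length, sample size and repetition rate. A minimal Python check, assuming 1 byte per 8bit sample and one trigger per millisecond:

```python
def bytes_per_second(samples_per_record, bytes_per_sample, records_per_second):
    """Raw data rate for fixed-length records acquired at a fixed rate."""
    return samples_per_record * bytes_per_sample * records_per_second


# Continuous acquisition at 10 Msps, 1 byte per sample.
continuous = bytes_per_second(10_000_000, 1, 1)

# 1000 samples per trigger, one trigger every ms (1000 triggers per second).
triggered = bytes_per_second(1000, 1, 1000)
```

With these inputs `continuous` is 10,000,000 bytes/s and `triggered` is 1,000,000 bytes/s.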

-

His TDMS attempt tries indeed to write a single file, but timestamps the data when it is dequeued by the consumer loop. His first VI, however, uses a Write Delimited Spreadsheet.vi within a while loop, with a delay of 1ms (misconception, timing is anyway dictated by the elements in queue) and a new filename at each iteration.

-

Quite likely this is a bad requirement, and the combination of your OS/disks is not up to it, and won't be unless you make special provisions for it, like controlling the write cache and using fast disk systems. The way to go imho is to stream all this data into big files, with a format which enables indexed access to the specific record. If your data is of fixed size, e.g. 1000 doubles + one double as timestamp, even just dumping everything to a binary file and retrieving it by seek & read is easy (proviso: disk writes are way more efficient if unbuffered and writing an integer number of sectors at a time). TDMS, etc. adds flexibility, but at some price (which you can probably afford to pay at only 80MB/sec and with a reasonably fast disk); text is the way to completely spoil speed and compactness with formatting and parsing, with human readability as the only advantage.

You say timing is critical to your postprocessing; but alas, do you postprocess your data by rereading it from the filesystem, and expect to do it with low latency? Do you need to postprocess your data online in real time, or offline? I hope you care about timestamping your data the moment it is transferred from the digitizer into memory (which already lags behind the actual acquisition, obviously), not at the moment of writing to disk?
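To make the fixed-size record idea concrete (one timestamp plus 1000 doubles, retrieved by seek & read), here is a minimal Python sketch. The record layout, the little-endian byte order and the function names are assumptions made for illustration; it does not deal with unbuffered or sector-aligned writes.

```python
import struct

SAMPLES = 1000
# One record = 1 double timestamp + SAMPLES doubles, little-endian (8008 bytes).
RECORD = struct.Struct(f"<d{SAMPLES}d")


def append_record(f, timestamp, samples):
    """Write one fixed-size record at the current file position."""
    if len(samples) != SAMPLES:
        raise ValueError(f"expected {SAMPLES} samples, got {len(samples)}")
    f.write(RECORD.pack(timestamp, *samples))


def read_record(f, index):
    """Seek straight to record number `index` and return (timestamp, samples)."""
    f.seek(index * RECORD.size)
    raw = f.read(RECORD.size)
    if len(raw) != RECORD.size:
        raise IndexError(f"record {index} is past the end of the file")
    values = RECORD.unpack(raw)
    return values[0], list(values[1:])
```

Because every record has the same size, record `i` always starts at byte `i * RECORD.size`, so no index table or parsing is needed to reach it. The file object is expected to be opened in binary mode.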

-

Out of memory error when using Picture Control

ensegre replied to Neil Pate's topic in User Interface

If relevant, I got the impression that the picture indicator queues its updates. I don't know what is really going on under the hood, but presume that whatever it is, it should be happening in the UI thread. In circumstances also not clear to me, I observed that the picture content may lag many seconds behind its updates, with a correspondingly growing memory occupancy, and, weirdly enough, kept streaming in the IDE even seconds after the containing VI stopped. I suspect that a thread within the UI thread is handling the content queue, and that this might be impeded when intensive UI activity is taking place. Is this your case?

I actually observed this most while developing an XControl built around a picture indicator. My observation was that, invariably, after some editing the indicator became incapable of keeping up with the incoming stream, for a given update rate, zoom, UI activity, etc. However, closing and reopening the project restored the pristine digesting speed.

I would say: the fields which are most beneficial are those which are useful for the problem you have to deal with. You do signals, functional analysis is good. You do computational geometry, geometry is good. You do image processing... you name it. Labview is only a programming tool. It's not that you become more proficient in Labview because you know a special branch of maths, as in: you know graph theory, so you are good at grasping diagrams. [In fact LV diagrams are just a representation of dataflow, more akin to an electronics diagram than to formal graph theory.] Rather, in general terms, I would say numerical analysis and sound principles of algorithm design really help: how to make an efficient algorithm for doing X, how truncation errors propagate, how to optimize resource use, etc. But this is true of any programming language used for practical problem solving. Formal language theory, compilers: not really, LV conceals these details from you. Unless your task is to implement a compiler in G...

-

As an aside: I realize that the computation of the current pixel coordinates could be avoided using, like you did, however it seems that these coordinates are not always polled at the right time; for instance I get {-1,-1} during mouse scroll. That might be part of the problem...

-

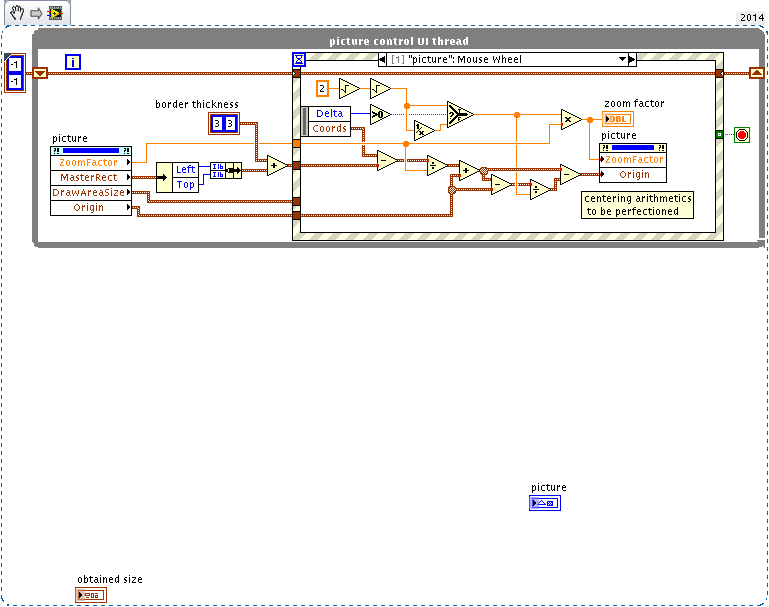

This is an imperfect solution from a project of mine. A scroll of the mouse wheel zooms in or out by a factor sqrt(sqrt(2)), centering the zoom on the pixel upon which the cursor lies. The arithmetic of that is easy: it just involves {ox,oy} -> {px,py} - {px-ox,py-oy}*z1/z2, where {ox,oy} is the origin, {px,py} are the image coordinates of the pixel pointed at, and z1 and z2 are the zoom factors before and after the scroll. That is, the new origin is just moved proportionally along the line connecting the old origin and the current pixel, all in image coordinates. Unlike you, I haven't implemented limits on the zoom factor based on the image size and position; perhaps one should.
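The origin update can be written out in a few lines. The Python sketch below only illustrates the formula in the post; the function names are made up, and the screen mapping (screen = (pixel - origin) * zoom) is an assumption about how the origin is defined, chosen so that the pixel under the cursor stays put.

```python
ZOOM_STEP = 2 ** 0.25  # sqrt(sqrt(2)) per wheel notch


def zoom_origin(origin, pixel, z_old, z_new):
    """New origin so that `pixel` keeps its screen position after zooming.

    Implements {ox,oy} -> {px,py} - {px-ox,py-oy} * z_old / z_new,
    all in image coordinates.
    """
    ox, oy = origin
    px, py = pixel
    ratio = z_old / z_new
    return (px - (px - ox) * ratio, py - (py - oy) * ratio)


def to_screen(origin, pixel, zoom):
    """Assumed mapping from image coordinates to screen coordinates."""
    return ((pixel[0] - origin[0]) * zoom, (pixel[1] - origin[1]) * zoom)
```

For instance, with the origin at (0, 0), the cursor on pixel (100, 50) and the zoom going from 1 to 2, the new origin is (50, 25), and the pixel still maps to screen position (100, 50).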

-

[collided in air with infinitenothing] Single port GigE is ~120MBytes/sec. You're not talking of bits/sec? Or of a dual GigE (I ran into one once)? GigE (at least Genicam compliant) is supported by IMAQdx. Normally I just use high level IMAQdx blocks (e.g. GetImage2) and get good throughput, whatever is under the hood. But a camera driver might get in the way, making the transfer less efficient than ideal.

-

Git, because my IT, begged for a centralized SCC of some sort, settled for an intranet installation of gitlab, which I'm perfectly fine with. But I'm essentially a sole developer, so SCC is to me more for version tracking than for collaboration. Tools: git-cola and command line. Reasonably happy with Gitkraken, using git-gui on windows as a fallback.

-

This way perhaps? (or maybe this one since now we're all obsoleting out)

- 9 replies
