Everything posted by ensegre

-

How to delete phantom controls from a TypeDef

ensegre replied to Roger Moss's topic in User Interface

If you attach your .ctl, maybe someone could look into it. Anyway, have you tried autosizing the cluster to fit? My guess is that the extra controls are in there, just far outside your current border. Reorder Controls In Cluster might also give you a hint. Otherwise, just open your typedef, create a new cluster (this will temporarily break the typedef), and drag into it the elements you want to keep. When you are done, delete the original cluster and apply changes. That saves you from replacing every single instance of it in your codebase.

-

Update, just for the record: after a number of phone calls to the representatives ("so explain to me again what error you get" / "Did you read my email?? When do I get an updated firmware?") I got this new firmware, whose release notes read

Fixed Problems
--------------
- some improvements in Ethernet communication

and the problem disappeared...

-

Is anyone aware of a limitation on the smallest possible FP size? On LV 2017, I am under the impression that it is 116x41 on one windows machine, and 1x1 on a linux one. Known issue, WM, or deserves a CAR? The reason I'm asking: I'm fiddling with the cosmetics of an XControl. I would have nothing against a larger transparent border, but when I webpublish the big FP using it, the large transparent border turns grey on the snap png.

ETA: ah, https://forums.ni.com/t5/LabVIEW/How-to-set-front-panel-size-to-be-same-as-one-led/td-p/1565524

ETA2: ok, tried the snippet of that thread and get either no reduction or an eloquent "Error 1 occurred at Property Node (arg 1) - Command requires GPIB Controller to be Controller-In-Charge" for tighter sizes.

-

crosspost: https://forums.ni.com/t5/LabVIEW/Read-Holding-registers-of-Radix-x-72/td-p/3835439

-

A UI glitch, I realize. On the toolbar, the light bulb icons are inverted. The left one turns highlighting on, the right one off. The tooltips are correct.

-

Maybe 8.5 not supported on a newer OS? I can only say I have LV17 running ok on an i7 7567U, Win 10 enterprise 2016 LTSB

-

I tried Mouse up, and discovered that it works only if you choose the last element and move the pointer out of the popup window but still at the edge of the bevel. Curious to know too.

-

ACS motioncontroller command “ExtendedSegmentedMotion”

ensegre replied to Mavs's topic in LabVIEW General

I don't use this device, but what do you mean: you got a DLL exposing those functions, and you have a problem when calling this Ch.extendedSegmentedMotion() from LabVIEW? If so, are you able to call any other function successfully? If that is the case, it may be a simple mistake in interfacing with the CLFN: calling convention, wrong typing of the arguments, or the like. What did you try and what are the results?

-

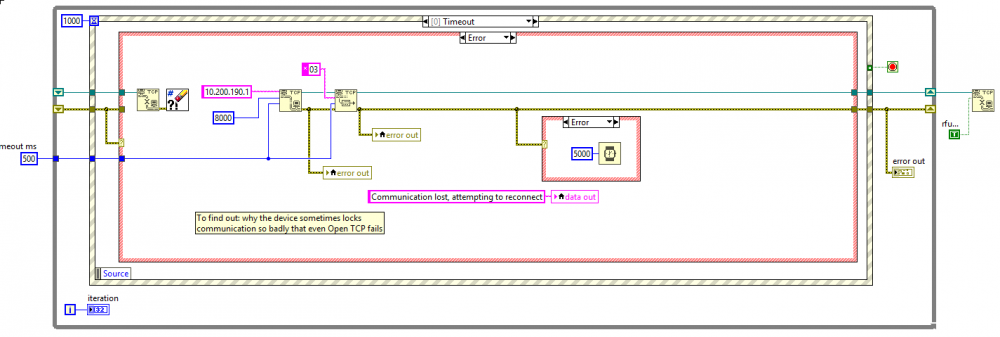

Note taken. For the moment though I took the path of calling Pfeiffer service. It would make more sense to me that their firmware is buggy, failing to release a disconnected port. After all this device acts as a telnet server; when it refuses incoming connections I would blame it rather than the client. Oh, and no, today I had occurrences when neither windows nor linux could connect, as well as ones where disconnecting and reconnecting the cable a few times didn't lose the connection. Either I'm missing something in these tests, or something is fishy.

-

[After some more inconclusive tests with another linux laptop, back-to-back and over the intranet, and some dumb wiresharking.] Maybe. I mean, you're not suggesting to disable Nagle (that would affect all other traffic from the host NIC, probably not good), just to get the handle from that VI. I'll have to educate myself, e.g. https://stackoverflow.com/questions/3757289/tcp-option-so-linger-zero-when-its-required. I wish I could defer it to a rainy day, unless hangups with that device become frequent. Oh, is this in the way?

-

Still shamelessly hijacking. So I'm polishing this VI, using basic TCP/IP, and observe the following. I have occasional network disconnections, and work around them by attempting to reconnect if TCP Read gave an error. I'm testing it on various PCs, with the device connected back-to-back or through some amount of intranet. Disconnections are easily simulated by pulling the ethernet cable and reattaching it. Now my consistent observation is: on one linux machine, reconnection seems to work robustly. On three windows machines, quite often reopening TCP fails with Error 56. Not even restarting LabVIEW helps, only power cycling the instrument does. [I haven't yet had the chance of trying when both win and linux are on the same intranet.] Any idea why this is happening? Is windows basic TCP different? Can I force some kind of port release harder than with TCP Close?
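
For reference, the "reconnect on error" loop and a "harder" close can be sketched outside LabVIEW. This is only a minimal Python sketch under stated assumptions: `reconnect` and `abortive_close` are hypothetical helper names, and the retry count, delay and timeout are made-up values. Closing with SO_LINGER enabled and a zero timeout makes the local stack send RST instead of the normal FIN handshake. Whether that helps with a device that itself refuses to drop a stale connection is untested here.

```python
import socket
import struct
import sys
import time


def reconnect(host, port, attempts=5, delay_s=1.0, timeout_s=2.0):
    """Try to open a TCP connection a few times before giving up."""
    last_error = None
    for _ in range(attempts):
        try:
            return socket.create_connection((host, port), timeout=timeout_s)
        except OSError as exc:  # refused, timed out, unreachable, ...
            last_error = exc
            time.sleep(delay_s)
    raise ConnectionError(f"could not connect to {host}:{port}") from last_error


def abortive_close(sock):
    """Close with SO_LINGER on and linger time 0, so the peer gets RST, not FIN."""
    # struct linger is two ints on POSIX and two unsigned shorts on Windows.
    fmt = "HH" if sys.platform == "win32" else "ii"
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_LINGER, struct.pack(fmt, 1, 0))
    sock.close()
```

A caller would wrap each read in a try/except, call `abortive_close` on failure, and then `reconnect`.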

-

yes, wireshark with plain telnet should be easy. I got the impression that I ended in a case of "device refuses to admit the connection broke, and refuses a new connection because outbuffer is full", whatever. If so, avoidable with programming by not asking too much at once. For the rest, I'm probably there, by now. Pfeiffer Maxigauge TPG 366, btw. Thx both for the feedback!

-

Thanks for confirming, Rolf. In addition, I suspect that ::socket is a windows-only concept, or perhaps I don't have a sane VISA on my linux dev. I'm actually progressing with plain TCP. I'm only stumbling on my device sometimes hanging and refusing connections; this is what confused me at the beginning. It is a matter of programming so as to avoid the condition which causes the lock, rather than abruptly power cycling the device. Anyway, the problem now seems related to the particular device and not to the communication layer.

-

You mean plain TCP read/write VIs? I am wondering. Searching just a bit more, I found that I can use VISA read/write with resource id TCPIP::<ip>::<port>::SOCKET; what I'm fighting with now is how my instrument behaves in terms of holding a failed connection and refusing a new one. I need to disentangle a bit the message protocol itself and the odd behaviour around it.
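
To make the resource id format concrete, here is a small Python sketch that only assembles the string quoted above. The helper name `visa_socket_resource` and the example address are hypothetical, and the function does not talk to VISA at all; it just validates the pieces and fills in the template.

```python
import ipaddress


def visa_socket_resource(ip: str, port: int) -> str:
    """Build a raw-socket VISA resource id of the form TCPIP::<ip>::<port>::SOCKET."""
    ipaddress.ip_address(ip)  # raises ValueError if ip is not a valid address
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return f"TCPIP::{ip}::{port}::SOCKET"


# Example with a made-up address and port:
print(visa_socket_resource("192.168.1.10", 8000))
```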

-

This is a DSC module question: has anybody here experience with building standalone executables which include shared variables bound to DSC modbus i/o servers? I have an issue with deployment, possibly related to licensing. I posted on the dark side, but haven't got feedback yet. https://forums.ni.com/t5/LabVIEW/shared-variable-bound-to-Modbus-i-o-not-working-in-deployed/td-p/3809801 TIA, Enrico

-

My speculation would be that NI implemented in IMAQdx only the support for the most common events, and that this is static. The fact that one particular camera has additional capabilities, and perhaps even advertises them when connected (like it does with its attributes), may simply not be taken into account. But I'm curious to know too.

-

Well, my idea for multiplatform is in fact trivial: replace the three wsapi VIs with independent ones, fiddle a little to get rid of the broken library dependencies, and go. For the two % escape VIs it is just a matter of copying the code, which is open, modulo some correction. Talking about the third VI is a bit hairy because the original is password protected, but, I found out a posteriori, you have been there too. As you said there, the code appears to have been conceived for working on the three platforms.

So I did that and played a bit with it, with inconsistent results. I tested three machines, one windows and two linuces, and concentrated just on getting the two examples read as file:// in chrome and firefox. The one windows was most of the time updating correctly (but sometimes not, not on a second run of the same VI, sometimes only after refreshing the browser, etc.). The first linux updated itself erratically when it should have, mostly updating correctly only after refreshing the page, but still transmitted correctly to the VI the controls operated in the browser. The other linux produced a static and messed up page in the browsers. Alas, that one happens to be a system with comma decimal separator, so perhaps this is in the way.

All together, nothing yet stable enough to post here. I'd need to grasp the mechanics of what goes on, and look for races or bugs; not sure if I'll manage soon. For one, I have a vague suspicion that the final anonymous call to Cleanup.vi cripples the synchronization if the Example VI is run a second time.
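
On the comma decimal separator suspicion, a minimal Python sketch of how it could bite. It rests on two unverified assumptions: that the values travel to the browser as JSON text, and that the numbers are formatted with the system decimal separator. `format_number` and `is_valid_json` are hypothetical helpers written for this illustration only.

```python
import json


def format_number(value: float, decimal_separator: str = ".") -> str:
    """Mimic a number-to-string conversion that honours a given decimal separator."""
    return f"{value:.2f}".replace(".", decimal_separator)


def is_valid_json(text: str) -> bool:
    """Return True if text parses as JSON, False otherwise."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


point_message = '{"value": ' + format_number(3.14) + "}"
comma_message = '{"value": ' + format_number(3.14, ",") + "}"

print(point_message, is_valid_json(point_message))
print(comma_message, is_valid_json(comma_message))
```

If something like this is what happens, forcing a point as decimal separator in the number formatting would be the thing to check first.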

- 137 replies

-

Ok, 1 depends apparently on a double NIC. I should have read this before, perhaps. In fact "Here we can use the external IP address..." in Example.vi is wired in Brian's latest version, and not in Thomas' one on github. Sorry for the noise. For 2 I'm testing a solution which could make it work on linux and possibly even on mac.

-

Onboard. Observations:

1. Brian's last version must have a bug: the resulting html is almost static (I can type inside numerics and strings, I can pull down Averaging, but no data is sent from or to the VI). I wouldn't be surprised if it is a trivial mistake in JSON escaping. See my image, the double backslashes in the path are suspicious. [EDIT - no]
2. The thing still depends on wsapi (dragging in the whole library for good), thus working only on windows. This is because of Escape HTTP URL.vi, Unescape HTTP URL.vi and LV Image to PNG Data.vi.
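
As a side note on the two kinds of escaping mentioned here, a short Python sketch. It shows that a correct JSON encoder does double the backslashes of a Windows path (consistent with the "[EDIT - no]"), and what % escaping of a URL component looks like. The path and file name are made up, and the Python standard library functions only stand in for what the escape VIs presumably do; they are not the wsapi implementation.

```python
import json
from urllib.parse import quote, unquote

# Hypothetical Windows path, as it might appear in a message to the browser.
path = r"C:\temp\Example.vi"

encoded = json.dumps({"path": path})
print(encoded)  # each backslash of the path appears doubled in the JSON text
decoded = json.loads(encoded)["path"]  # decoding restores the single backslashes

# Percent escaping of a URL component and the reverse operation.
name = "my example&file.vi"
escaped = quote(name, safe="")
restored = unquote(escaped)
print(escaped, restored)
```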

-

I see, thanks Rolf and smithd for the pointers. Setting aside the stability and future support of private stuff, about which I agree with Rolf's first comment. Let's suppose that I "guessed" the calling sequence of one core function, one which is for example (linux) inside /usr/local/natinst/LabVIEW-2017/cintools/liblv.so along with those of the old list, but not one of them. If I release code based upon this guess, would I place myself on the bad side?

-

Off the top of my head, I think that it may be common knowledge on this site that some functions are realized via CLNs which call certain procedures, with "LabVIEW" as library. I don't have a particular link to prove this, but somehow it is my off-the-cuff, long-time lurker impression. Certainly "LabVIEW" is not a smart search term here. The intriguing fact about the "LabVIEW" dynamic library is that the application resolves it opaquely, perhaps (from minimal explorations I did on linux and windows versions) even pointing at differently located dynamic libraries on different platforms. Now, is there some semiofficial resource about this? The reason I'm asking is actually the following: I think I could offer a nice addition to a nice project here on the site, using native LV calls, by providing a couple of VIs which implement such calls. The concern is: would I be breaching the LV licence if I did? [Apart from "it's private stuff, don't cry if".] The alternative would be to locate a really suitable external library, interface it, and maintain it; a much larger hassle.

-

I was thinking, a useful addition to the cool Modbus Comm Tester would be a section for Read Device Identification. Probably fitting it nicely into a 7th rectangle on the FP is the difficult part; the extension of the code, which is elegantly written, seems trivial. What do you think?
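
To give an idea of how small the protocol side is, here is a Python sketch of a Read Device Identification exchange over Modbus TCP (function code 0x2B with MEI type 0x0E). The function names are hypothetical, this is not the Comm Tester's code, and the parser ignores the "more follows" continuation and any exception response.

```python
import struct


def build_read_device_id_request(transaction_id, unit_id, read_code=0x01, object_id=0x00):
    """Build a Modbus TCP frame for Read Device Identification (0x2B / 0x0E)."""
    pdu = struct.pack(">BBBB", 0x2B, 0x0E, read_code, object_id)
    # MBAP header: transaction id, protocol id (0), length of unit id + PDU, unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_device_id_response(pdu):
    """Return {object id: raw value} from a response PDU (MBAP header already stripped)."""
    if len(pdu) < 7 or pdu[0] != 0x2B or pdu[1] != 0x0E:
        raise ValueError("not a Read Device Identification response")
    # pdu[2..5]: read code, conformity level, more follows, next object id.
    count = pdu[6]
    objects = {}
    offset = 7
    for _ in range(count):
        obj_id, length = pdu[offset], pdu[offset + 1]
        objects[obj_id] = bytes(pdu[offset + 2 : offset + 2 + length])
        offset += 2 + length
    return objects
```

With read code 0x01 (basic identification) the objects would be vendor name, product code and revision, ids 0x00 to 0x02.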

-

No experience with the Moxa beyond reading the product page, which reminds me much of the Vlinx and Advantech serial servers I'm using. These do have TCP and UDP modes, and are thus cross platform. You have to configure them first using either the windows configurator or their webby. Here is an example for TCP. Note the .vim which provide alternate code for either TCP or VISA. VLINXqueryTCP.vi VISA_TCP_query.vim CloseConnection.vim

-

So it is.