Everything posted by dadreamer

-

Not sure about the toolkits, but you can easily find the LabVIEW 2017 SP1 download links with a bit of proper googling:

- http://download.ni.com/support/softlib/labview/labview_development_system/2017 SP1/2017sp1LV-WinEng.exe
- http://download.ni.com/support/softlib/labview/labview_development_system/2017 SP1/2017sp1LV-64WinEng.exe

-

I'm pretty sure you can find the LabVIEW 4 demo version on the NI FTP server. I've also seen 3.x, 4.x and 5.x versions on macintoshgarden. As for the others, I'm not sure they could be shared here, as it still seems to be illegal, even though these versions are super dated and (almost) nobody uses them in production now. Well, I do have some, but I'd like to hear the admins' position on this first. P.S.: I like this kind of fun too, and I'm looking for some versions myself. I still couldn't find 1.x to try it in an early Mac emulator. I'm interested in BridgeVIEW distros as well, and maybe in some old toolkits like the Picture Control Toolkit.

-

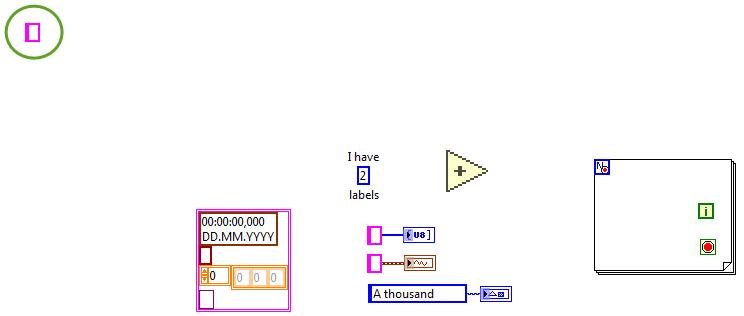

I meant this second window. It does allow you to place code there, but after saving and reopening, all the code is gone.

-

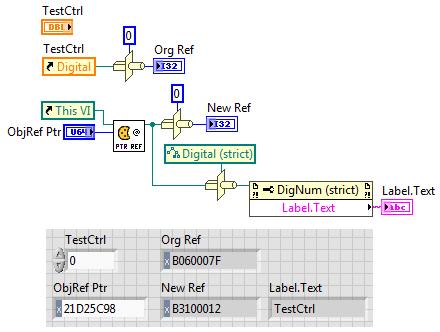

Well, this was discussed a lot here and on the NI forums. The link there is invalid; the new one is Closing References in LabVIEW. There's a paragraph about implicit references (current app, current VI, controls/indicators on the panel), which don't need to be closed. All the other references should be closed with the Close Reference node as a matter of good practice. Of course, you may leave everything open, and LabVIEW will dispose of/close it when the VI/project is unloaded or the app exits. But that's going to eat up some extra system resources and slightly slow down your program. Or you may always close everything and not care about the ref types. 🙂 Just a note for other folks out there who might use that VI.

-

Also, some notes on your VI:

1. It looks like UID to GObject Reference.vi returns a duplicate reference instead of the original one. Hence it should be closed explicitly with Close Reference once you're done working with it.
2. UID to GObject Reference.vi doesn't work in the RTE. Its Context Help has a related remark, and even though the underlying UidToObjRef function is present in lvrt.dll, it does nothing. So for the RTE another way has to be found.
3. The icon of Pointer to Refnum.vi doesn't reflect that a reference, not a pointer, is output. BCJ is not used here either. Maybe something like " @ # " would fit more or less OK.

-

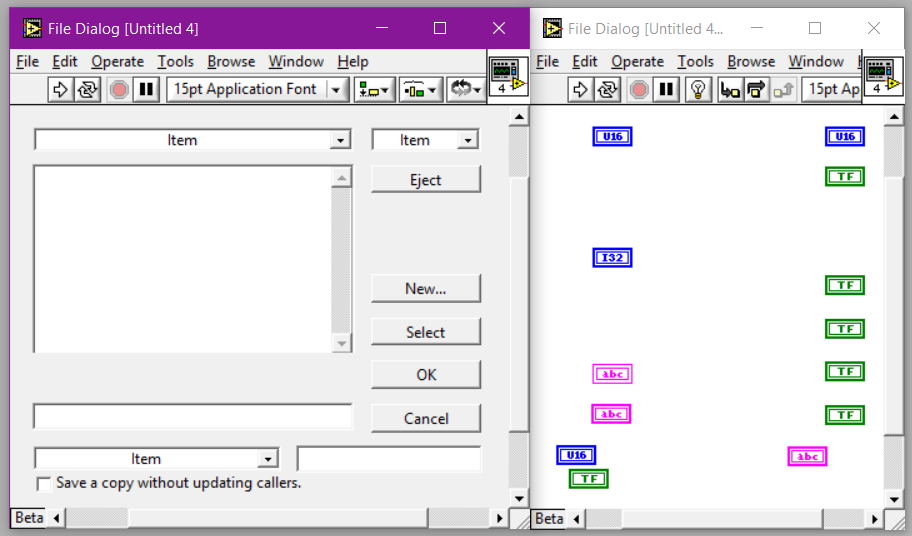

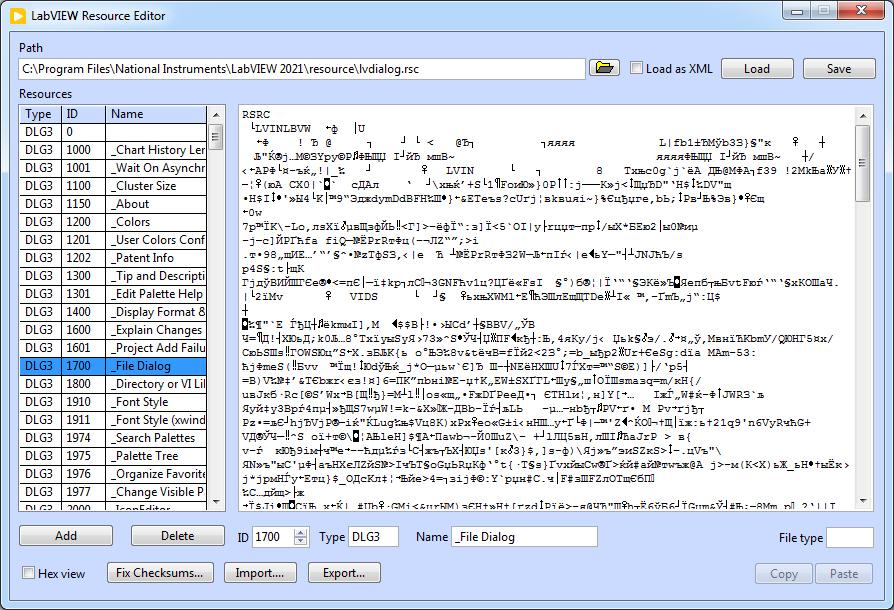

None that I know of. It needs further investigation, but I'm afraid there's no easy way around that. upd: At least you could try to adjust the File Dialog appearance by replacing it with your own. It doesn't have a block diagram and is in a locked state. I suppose the diagram is created on the fly when the Dialog Editor opens a .rsc, as it's always (?) empty anyway. The code for the buttons is assigned by the Dialog Manager API (defined in DIALOG.H; check the LV 2.5 sources). Sadly, the dialogEditor token doesn't work in modern LV versions, so you have to extract, alter and pack that VI "manually". Maybe it's better to experiment with LV 5-7, where the dialog menu entries are available.

-

An inverted Boolean constant, for all your pranking needs

dadreamer replied to Sparkette's topic in LAVA Lounge

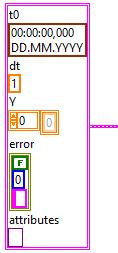

Thanks. I like that toggle switch control on the diagram. I assume you changed the images representing the True/False states? By the way, I believe this is exactly how the analog waveform looks. Does anybody know what the error cluster was used for?

It might be worth trying to put the useNativeFileDialog token into your config file (/home/<username>/natinst/.config/LabVIEW-x/labview.conf). Check both the True and False values. upd: It doesn't work for me. Then maybe try one of the third-party dialog libraries out there, e.g. osdialog, Portable File Dialogs, or Native File Dialog / Native File Dialog Extended; there are thousands.

-

An inverted Boolean constant, for all your pranking needs

dadreamer replied to Sparkette's topic in LAVA Lounge

None of the new snippets from here work for me. I tried three browsers. I don't know whether anyone else experiences the same. I guess that thread relates to this issue. Old snippets still work fine, though, for example this one.

Back to experimenting with Basic Object Flags? I think you forgot to cast a spell on that string constant. 😀

-

Pink vs Brown (variable sized vs fixed sized cluster)

dadreamer replied to Taylorh140's topic in LabVIEW General

funkyErrClustWire works even in LabVIEW 6.0 (6i): https://labviewwiki.org/wiki/LabVIEW_configuration_file/Block_Diagram It doesn't work in LV 5.0. But! This token is still read by modern LV versions. For example, this is from LV 2020. So, if you don't like that brown(ish) color, you can switch back to the classic pink.

LabVIEW CLFN - pass bool pointer to function and change it value

dadreamer replied to Łukasz's topic in Calling External Code

Well, if you implement everything in LabVIEW, you could create such a global with DSNewPtr, which gives you a pointer that you can easily pass to UA_Server_run. Then, while the server is running, you can stop it by writing a zero to that pointer with MoveBlock. That way you won't need an additional wrapper DLL. Of course, the flag must be set to one (true) before you call UA_Server_run. Also don't forget to dispose of that pointer (DSDisposePtr) when you're finished with it.
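To illustrate the stop-flag pattern outside LabVIEW, here is a minimal C sketch under stated assumptions. open62541 declares `UA_StatusCode UA_Server_run(UA_Server *server, const volatile UA_Boolean *running)` and keeps iterating while `*running` is true. In the sketch, `fake_server_run`, `stop_after_delay` and `run_until_stopped` are hypothetical names, `malloc`/`free` stand in for DSNewPtr/DSDisposePtr, `atomic_store` stands in for the MoveBlock write, and an `atomic_bool` replaces the plain `volatile UA_Boolean` so the C code itself is free of data races.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdlib.h>
#include <time.h>

/* Hypothetical stand-in for UA_Server_run(): iterates while *running is true. */
static long fake_server_run(atomic_bool *running) {
    long iterations = 0;
    const struct timespec tick = {0, 1000000L};     /* 1 ms per "server iteration" */
    while (atomic_load(running)) {
        iterations++;
        nanosleep(&tick, NULL);
    }
    return iterations;
}

/* Plays the role of the LabVIEW code that writes a zero into the flag (MoveBlock). */
static void *stop_after_delay(void *arg) {
    atomic_bool *running = arg;
    const struct timespec delay = {0, 50000000L};   /* let the loop run for ~50 ms */
    nanosleep(&delay, NULL);
    atomic_store(running, false);
    return NULL;
}

/* Returns how many iterations the loop ran, or -1 on setup failure. */
long run_until_stopped(void) {
    atomic_bool *running = malloc(sizeof *running); /* DSNewPtr analog */
    if (running == NULL) return -1;
    atomic_init(running, true);                     /* flag must be true before the server starts */

    pthread_t stopper;
    if (pthread_create(&stopper, NULL, stop_after_delay, running) != 0) {
        free(running);
        return -1;
    }
    long n = fake_server_run(running);              /* blocks until the flag is cleared */
    pthread_join(stopper, NULL);
    free(running);                                  /* DSDisposePtr analog */
    return n;
}
```

The important ordering is the same as in the LabVIEW version: allocate, set to true, start the server, clear from elsewhere, and only free the memory after the server call has returned.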

LabVIEW CLFN - pass bool pointer to function and change it value

dadreamer replied to Łukasz's topic in Calling External Code

If you export the running global variable from your DLL, then you could get its memory address with GetProcAddress and pass it to your UA_Server_run function as the second argument. That should work, if I understood your approach correctly.

event structure localization issue on OpenSuse

dadreamer replied to Antoine Chalons's topic in Linux

Regarding your second issue: in which LabVIEW version did you see that it doesn't require a case to handle the Timeout event if the timeout input is wired? I have checked various LabVIEW versions for Windows, and even in LV 7.1 it breaks the VI and wants me to provide a Timeout case.

event structure localization issue on OpenSuse

dadreamer replied to Antoine Chalons's topic in Linux

Your issue with Event Structure names reminds me of this thread: LabVIEW Event names are completely off. That was experienced on Windows, though, and the OP solved the issue by removing LabVIEW and cleaning up the system with msiBlast. Could it be that something didn't get installed properly in your case?..

Getting a list of sound devices in the system

dadreamer replied to MartinMcD's topic in LabVIEW General

Maybe it's time to try the WaveIO library written by Christian Zeitnitz, then?

It's just that NI designed the JPEGToLVImageDataPreflight and JPEGToLVImageData functions to run in the UI thread. I don't know whether it's safe to set those CLFNs to "Run in any thread" and use them, because we don't have the sources. You could test it on your own and see. But it's known that most WinAPI functions are reentrant, so you can freely use WIC or GDI+ from multiple threads simultaneously. So, to reinvent the JPEG reading VI with WIC, this thread could be a starting point (along with the Decode Image Stream VI diagram as an example). For GDI+, this thread becomes useful, but that approach requires writing a small DLL to use the GDI+ classes. I don't recommend using .NET nodes in LabVIEW here, since execution speed is that important for you.

-

You could probably take a look at this: https://forums.ni.com/t5/Machine-Vision/Convert-JPEG-image-in-memory-to-Imaq-Image/m-p/3786705#M51129 For PNGs, there are already the native PNG Data to LV Image VI and LV Image to PNG Data VI.

-

Another option would be for NI to get its hands on IntervalZero's RTX64 product, which is able to turn any Windows-driven computer into a real-time target. That would definitely require writing kernel drivers for NI hardware and some utilities/wrappers for LabVIEW to interact with the drivers through user-space libraries. Of course, the latter is possible now with CLFNs, but it's not that user-friendly, because that product focuses mainly on C/C++ programming. Not to mention that only a limited subset of hardware is supported.

-

Also take into account the bitness of the ActiveX libraries you're going to use. If you want to use 32-bit libraries, invoke "%systemroot%\SysWoW64\regsvr32.exe" in your command shell. For 64-bit libraries, invoke "%systemroot%\System32\regsvr32.exe" to register them. That is true on 64-bit Windows. It's better to do this manually and, of course, with administrator privileges (otherwise the library may fail to register or may report a "fake" success).
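If you're not sure which bitness a given ActiveX DLL has, it can be read from its PE header: the DOS header starts with "MZ", the 32-bit little-endian value at offset 0x3C points to the "PE\0\0" signature, and the 16-bit Machine field right after it is 0x014C for 32-bit x86 or 0x8664 for x64. The following is a minimal C sketch of that check, assuming the file has already been read into memory; `pe_machine` and `regsvr32_for` are hypothetical helper names, and the returned paths are the ones from the post above (to be expanded by the command shell).

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MACHINE_I386  0x014Cu  /* IMAGE_FILE_MACHINE_I386: 32-bit DLL */
#define MACHINE_AMD64 0x8664u  /* IMAGE_FILE_MACHINE_AMD64: 64-bit DLL */

static uint32_t read_le32(const unsigned char *p) {
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

static uint16_t read_le16(const unsigned char *p) {
    return (uint16_t)(p[0] | (p[1] << 8));
}

/* Returns the Machine field of a PE image held in memory, or 0 if it isn't a valid PE. */
uint16_t pe_machine(const unsigned char *buf, size_t len) {
    if (len < 0x40 || buf[0] != 'M' || buf[1] != 'Z') return 0;
    uint32_t pe_off = read_le32(buf + 0x3C);        /* e_lfanew */
    if (pe_off > len || len - pe_off < 6) return 0; /* need signature + Machine */
    if (memcmp(buf + pe_off, "PE\0\0", 4) != 0) return 0;
    return read_le16(buf + pe_off + 4);             /* IMAGE_FILE_HEADER.Machine */
}

/* Picks the regsvr32 matching the DLL bitness on 64-bit Windows (NULL if unknown). */
const char *regsvr32_for(uint16_t machine) {
    switch (machine) {
    case MACHINE_I386:  return "%systemroot%\\SysWoW64\\regsvr32.exe";
    case MACHINE_AMD64: return "%systemroot%\\System32\\regsvr32.exe";
    default:            return NULL;
    }
}
```

The mapping looks counterintuitive but matches the post: on 64-bit Windows, SysWoW64 holds the 32-bit tools and System32 holds the 64-bit ones.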

-

You can also create the buttons at run time by means of .NET; the basic example is here (of course, you need to attach an event callback (handler) to your button(s) to be able to catch the button events).

-

Can Queues be accessed through CIN?

dadreamer replied to Taylorh140's topic in Calling External Code

If you meant me, then no, I didn't even use your conversations with Jim Kring on the OpenG subject. Seriously, what's the joy of just rewriting the prototypes?.. I have studied those on my own, even though I've had the LV 2.5 distro for a while and do know that some Occurrence functions are exposed there (in MANAGER.H, to be more precise). Moreover, those headers don't contain the entire interface. This is all that is present:

```c
/* Occurrence routines */
typedef Private *OccurHdlr;
#define kNonOccurrence 0L
#define kMaxInterval 0x7FFFFFFFL
extern uInt32 gNextTimedOccurInterval;
typedef void (*OHdlrProcPtr)(int32);
Occurrence AllocOccur(void);
int32 DeallocOccur(Occurrence o);
OccurHdlr AllocOccurHdlr(Occurrence o, OHdlrProcPtr p, int32 param);
int32 DeallocOccurHdlr(OccurHdlr oh);
int32 Occur(Occurrence o);
void OccurAtTime(Occurrence o, uInt32 t);
int32 OnOccurrence(OccurHdlr oh, boolean noPrevious);
int32 CancelOnOccur(OccurHdlr oh);
boolean ChkOccurrences(void);
boolean ChkTimerOccurrences(void);
```

The headers lack OnOccurrenceWithTimeout, FireOccurHdlr and some others (they likely didn't exist in those early versions). Having said that, I admit that the Occurrence API is not that complicated and is easily reversible for more or less experienced LV and asm programmers.

Can Queues be accessed through CIN?

dadreamer replied to Taylorh140's topic in Calling External Code

Queues, Notifiers, DVRs and similar stuff, even when they seem to be exposed from labview.exe in some form, are totally undocumented. Of course, you could try to reverse-engineer those functions and, if you're lucky enough, you might be able to use a few of them. But it will take significant effort on your part and won't be worth it at all. To synchronize your library with LabVIEW, you'd better use the OS-native API (like Events, Mutexes, Semaphores or WaitableTimers on Windows) or some documented things like PostLVUserEvent or Occur from the Occurrence API. To be honest, there are more Occurrence functions exposed, but they're undocumented as well, so use them at your own risk. As for CINs, I do recall that the former Queues/Notifiers implementations were built entirely on CINs. I never had a chance to study their code, and not that I really wanted to. I suppose they're no longer functional in modern LV versions, as they got replaced with better internal analogues.
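As a sketch of the "use OS-native synchronization instead of the undocumented Queue internals" idea, here is a minimal C example of the pattern. It swaps in a POSIX unnamed semaphore for the Windows Event/Semaphore objects named above, purely so it runs on Linux (macOS doesn't support `sem_init`). A worker thread inside the library signals completion and the caller waits with a timeout. `struct job`, `worker` and `run_job_with_timeout` are hypothetical names; in a real DLL, the waiting function would be what a CLFN calls.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <pthread.h>
#include <semaphore.h>
#include <time.h>

struct job {
    sem_t done;  /* posted by the worker when the result is ready */
    int result;
};

static void *worker(void *arg) {
    struct job *j = arg;
    j->result = 42;       /* stand-in for the real work inside the library */
    sem_post(&j->done);   /* wake up the waiting caller */
    return NULL;
}

/* Returns the worker's result, or -1 on timeout or setup error. */
int run_job_with_timeout(long timeout_ms) {
    struct job j;
    if (sem_init(&j.done, 0, 0) != 0) return -1;
    pthread_t t;
    if (pthread_create(&t, NULL, worker, &j) != 0) {
        sem_destroy(&j.done);
        return -1;
    }

    /* sem_timedwait expects an absolute CLOCK_REALTIME deadline */
    struct timespec deadline;
    clock_gettime(CLOCK_REALTIME, &deadline);
    deadline.tv_sec += timeout_ms / 1000;
    deadline.tv_nsec += (timeout_ms % 1000) * 1000000L;
    if (deadline.tv_nsec >= 1000000000L) {
        deadline.tv_sec++;
        deadline.tv_nsec -= 1000000000L;
    }

    int rc;
    while ((rc = sem_timedwait(&j.done, &deadline)) == -1 && errno == EINTR)
        ;                 /* retry if interrupted by a signal */
    pthread_join(t, NULL);
    sem_destroy(&j.done);
    return rc == 0 ? j.result : -1;
}
```

The semaphore both wakes the caller and orders the memory accesses, so reading `j.result` after a successful wait is safe without extra locking.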