Posts: 717

Days Won: 81

Everything posted by LogMAN

-

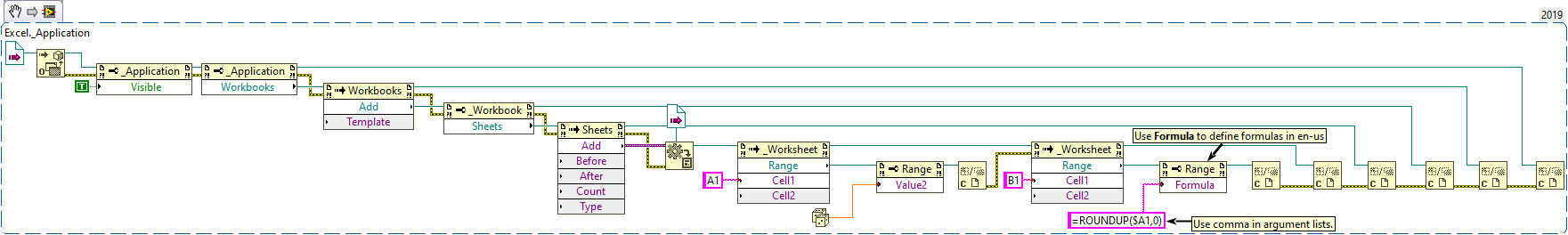

I'm not quite sure if this is what you are looking for but here is an example that works for me: Excel Formula.vi

-

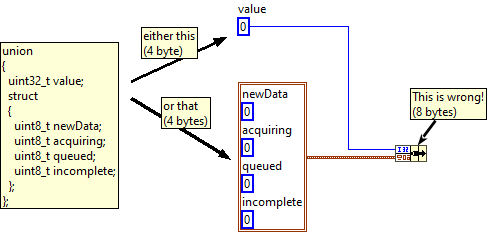

A union is always sized to its largest member, not the sum of its members. In your case, 4 bytes. You currently provide 8 bytes of memory. Try reducing the size of the union to 4 bytes.
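As a rough illustration of that sizing rule, here is a minimal sketch using Python's `ctypes`. The union name and its two members are made up for the example (the original declaration isn't shown here); both members are 4 bytes wide and share the same memory.

```python
import ctypes


class Value(ctypes.Union):
    # Hypothetical members: both are 4 bytes and overlap in memory.
    _fields_ = [
        ("as_int", ctypes.c_uint32),
        ("as_bytes", ctypes.c_uint8 * 4),
    ]


# Size of the union: that of its largest member.
union_size = ctypes.sizeof(Value)

# Sum of the member sizes, which is NOT the size of the union.
sum_of_members = ctypes.sizeof(ctypes.c_uint32) + ctypes.sizeof(ctypes.c_uint8 * 4)

# Writing one member changes what the other member reads.
v = Value()
v.as_int = 0x01020304
shared = bytes(v.as_bytes)

print(union_size, sum_of_members)
```

So a buffer for this union needs 4 bytes, not 8.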

-

+1 for Unbundle. It is simple, requires less code and you understand immediately that these elements belong to the current class. They are also easier to maintain in case you ever feel the need to change the name or type of an element and work well with In-Place Element Structures in Unbundle-Bundle scenarios.

-

A few months later, this is what Bing Image Creator produces for the same input:

Can confirm, wires everywhere...

-

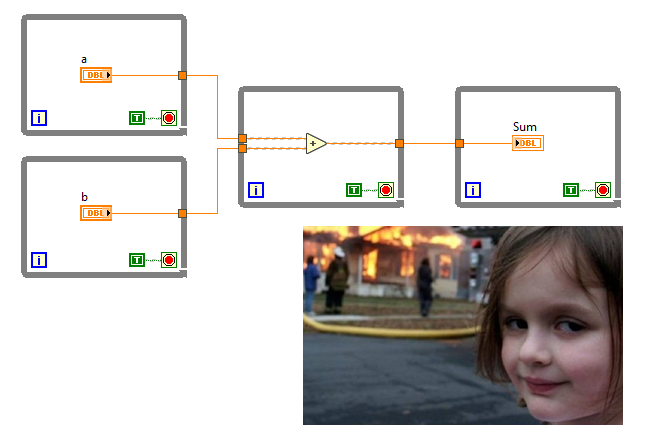

Not like this.

Because that is the goal: break down your complex and complicated data types into simple and uncomplicated ones. For configuration data you could maintain the path to the storage location and load the data as needed.

-

This is explained in the SQLite help pages: https://www.sqlite.org/lang_savepoint.html#savepoints

-

Sounds like an uncommitted transaction. Make sure you have committed all transactions before closing the file. Uncommitted transactions are lost.
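To show the effect outside of LabVIEW, here is a small sketch using Python's built-in `sqlite3` module. The table name `samples` and the temporary file are made up for the example. The first insert is never committed and is gone after reopening the file; the second one is committed and survives.

```python
import os
import sqlite3
import tempfile


def count_rows(path):
    """Open the file fresh and count the rows that were actually stored."""
    con = sqlite3.connect(path)
    try:
        return con.execute("SELECT COUNT(*) FROM samples").fetchone()[0]
    finally:
        con.close()


path = os.path.join(tempfile.mkdtemp(), "demo.db")

# isolation_level=None: Python does not manage transactions for us,
# so BEGIN and COMMIT are issued explicitly.
con = sqlite3.connect(path, isolation_level=None)
con.execute("CREATE TABLE samples (value INTEGER)")

con.execute("BEGIN")
con.execute("INSERT INTO samples VALUES (1)")
con.close()  # closed without COMMIT: the insert is rolled back
rows_after_uncommitted = count_rows(path)

con = sqlite3.connect(path, isolation_level=None)
con.execute("BEGIN")
con.execute("INSERT INTO samples VALUES (2)")
con.execute("COMMIT")
con.close()
rows_after_committed = count_rows(path)

print(rows_after_uncommitted, rows_after_committed)
```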

-

Upgrade LV2017 => LV2021 : Windows 10 memory increase

LogMAN replied to Francois Aujard's topic in LabVIEW General

This appears to be a known issue in LabVIEW 2021 SP1: https://www.ni.com/de-de/support/documentation/bugs/22/labview-2021-sp1-known-issues.html

-

For future reference: https://download.ni.com/#support/daq/pc/ni-daq/daqmx/ It appears they moved their old ftp server to that site.

-

Here is the kind of response it produces for LabVIEW:

The responses are impressive but it doesn't look like we are getting replaced any time soon...

-

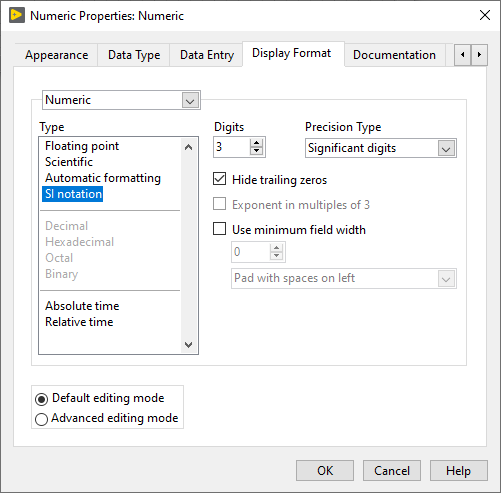

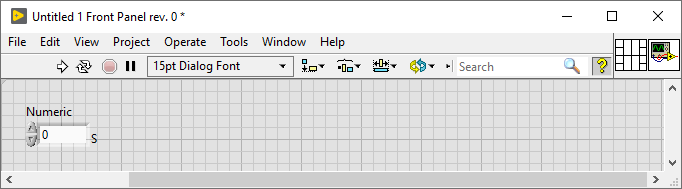

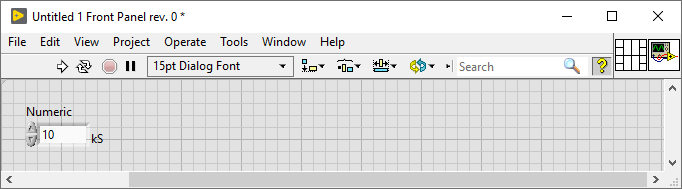

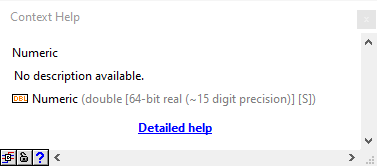

The reason you can't change the unit label at runtime is that unit labels change the data type of the wire (notice the "S" in brackets at the end). That said, what you want can be achieved with the display format:

1. Enable the unit label and specify the unit "S".
2. Change the display format to SI notation and the number of digits to 3.

Now it will automatically add the prefix according to your value. For example, 10000 S will turn into 10 kS.
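For anyone who wants to see the arithmetic behind SI notation, here is a rough sketch in Python. It is not how LabVIEW implements the display format. The function name `format_si`, the prefix table, and treating "digits" as significant digits are all assumptions made for this example.

```python
import math

# Assumed prefix table for the sketch (powers of 1000 only).
PREFIXES = {-9: "n", -6: "µ", -3: "m", 0: "", 3: "k", 6: "M", 9: "G"}


def format_si(value, unit, digits=3):
    """Scale value to the nearest lower power of 1000 and add the prefix."""
    if value == 0:
        return f"0 {unit}"
    # Exponent rounded down to a multiple of 3, clamped to the table above.
    exponent = int(math.floor(math.log10(abs(value)) / 3) * 3)
    exponent = max(min(exponent, 9), -9)
    scaled = value / 10 ** exponent
    # 'g' formatting keeps `digits` significant digits and drops trailing zeros.
    return f"{scaled:.{digits}g} {PREFIXES[exponent]}{unit}"


print(format_si(10000, "S"))    # 10 kS
print(format_si(2500000, "S"))  # 2.5 MS
```

The unit itself never changes, only the prefix in front of it, which is why this works with a fixed unit label.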

-

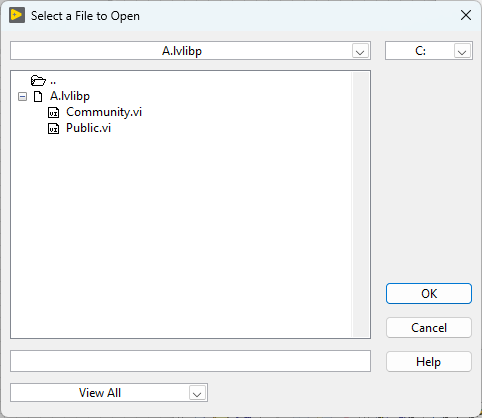

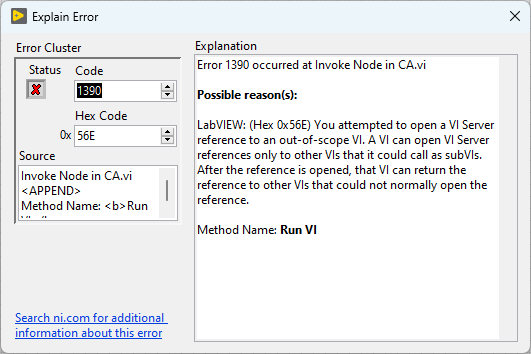

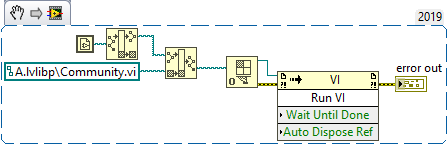

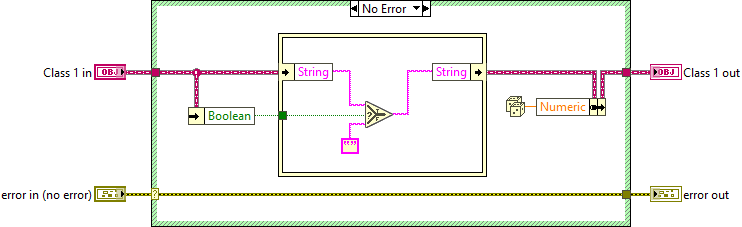

While community-scoped VIs are only accessible from VIs in the same library and friends, they are still exported. To see the complete list of exported members, use Get Exported File List.vi or open the library from the Getting Started Window. Attempting to execute community-scoped VIs results in a runtime error. Here is an example using Open VI Reference. The same error should appear in TestStand (otherwise it's a bug). LabVIEW simply hides community-scoped members in Project Explorer for convenience. Looks like TestStand does not do that.

-

LabVIEW clusters can actually be passed by value, given that the values are structs. For classes, you need to construct the class before you pass it to the method.

-

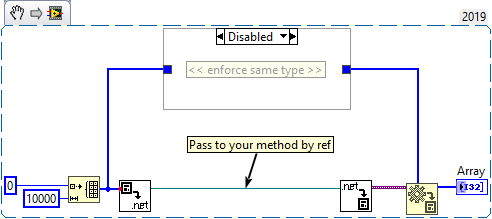

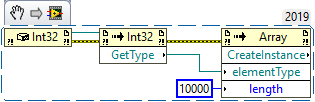

It sounds as if you want to pass a .NET Array type by ref to your method. This should be possible by constructing an array in LabVIEW, for example, by using the To .NET Object function, and passing the instance by reference to your method (assuming that your method signature is by ref). If you want to avoid generics, you can also initialize your own array as illustrated below.

-

Yes, this makes sense for class members. They should always access the private data cluster directly. Property nodes are only good for callers (and maybe when accessing parent class data). In the past I also avoided property nodes, mostly because of stability and performance issues (~2011-2015). Nowadays they appear to be stable and are just easier to read (also, I'm lazy and property nodes don't need icons 😏).

This is probably the best way to do it. Read-only and write-only access, however, should still be done with standalone bundle/unbundle. It makes it easier to understand what is going on, avoids unnecessary wires, and has the same memory footprint.

By the way, Darren Nattinger recently held a presentation at GDevConNA 2022 that might be interesting to you. He provides some insights into features of LabVIEW that aren't as stable as one would hope...

-

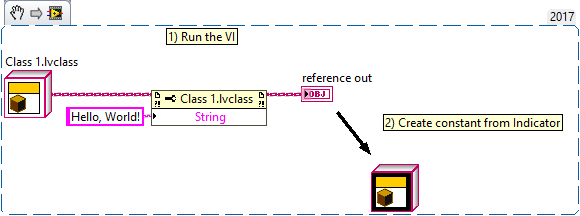

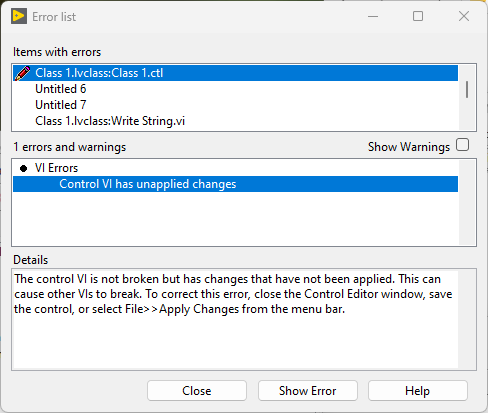

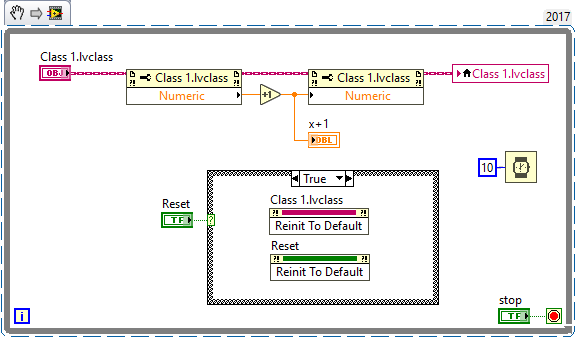

Class constants and controls have a black background when they contain non-default values:

Class constants (and controls) always have the default value of their private data control unless you explicitly create a non-standard constant like in the example above. It is updated every time the private data control is changed. This is why VIs containing the class are broken until the changes to the private data control are applied.

That is correct.

It does not. The default control does not actually contain a copy of the private data control, but a value to indicate that it returns the class default value. Even if you make this value its default value, it is still just a value that indicates that it returns the class default value. You only have to worry when the background turns black.

By any chance, do you write values to class controls? This can result in undesired situations when combined with 'Make Current Values Default':

-

How to check if a class is an interface or a concrete?

LogMAN replied to bjustice's topic in LabVIEW General

That is very unlikely. It would turn classes into interfaces, which is a major breaking change.

-

How to check if a class is an interface or a concrete?

LogMAN replied to bjustice's topic in LabVIEW General

Not sure about speed, but these VIs use features that aren't available in earlier versions. However, if backwards compatibility isn't an issue, this is probably the most native way to go about it. As for speed, perhaps caching is an option?