Leaderboard

Popular Content

Showing content with the highest reputation since 07/27/2023 in all areas

-

Hello all. The last 72 hours we've had some issues with spam bots taking over the forums, pretty aggressively. As a result new account creation has been temporarily disabled. Thanks to all those using the report feature. I don't read every post but I do read every new thread title and you are very helpful in spotting issues. There might be some forum upgrades taking place soon to help combat this issue. After which the new user creation will be turned back on. Nothing is scheduled yet but this is meant to be a heads up that the forums might have some down time soon and it is to be expected. Thanks for your patients.7 points

-

Hello. I am not a bot... I'm planning on taking the site offline this weekend to perform long overdue upgrades and to investigate ways to curb the spam attacks. Thanks to everyone for all the help cleaning up the forums. Hopefully I can find a solution and we can get back to the usual next week.7 points

-

I'm excited to release ViPER ViPER is an Object Oriented design Framework that supports dependency injection and recursive object creation. Systems are assembled at runtime from a collection of pre-built components defined by an Object Definition Document. Please visit the project on GitHub https://github.com/kurtafriday/ViPER I've presented this framework at several GLA Conferences, for an overview and guidance please view. GLA 2021 https://labviewwiki.org/wiki/GLA_Summit_2021/Open_Source_ViPER GLA 2020 https://labviewwiki.org/wiki/GLA_Summit_2020/ViPER_-_A_LabVIEW_Dependency_Injection_Framework This branch of ViPER has been used by us to develop systems in regulated industries for several years, it's solid and reliable, however its windows only. I'm working on ViPER_WinRT which is compatible with Windows and RT and we have already used it for several systems. I'll be releasing ViPER_WinRT in the coming months. I'll work to get ViPER onto the VIPM Tools Network soon. I'm looking forward to the feedback and I hope you enjoy and get value from this framework. Ping me if you have any questions. kurt@medulla.net7 points

-

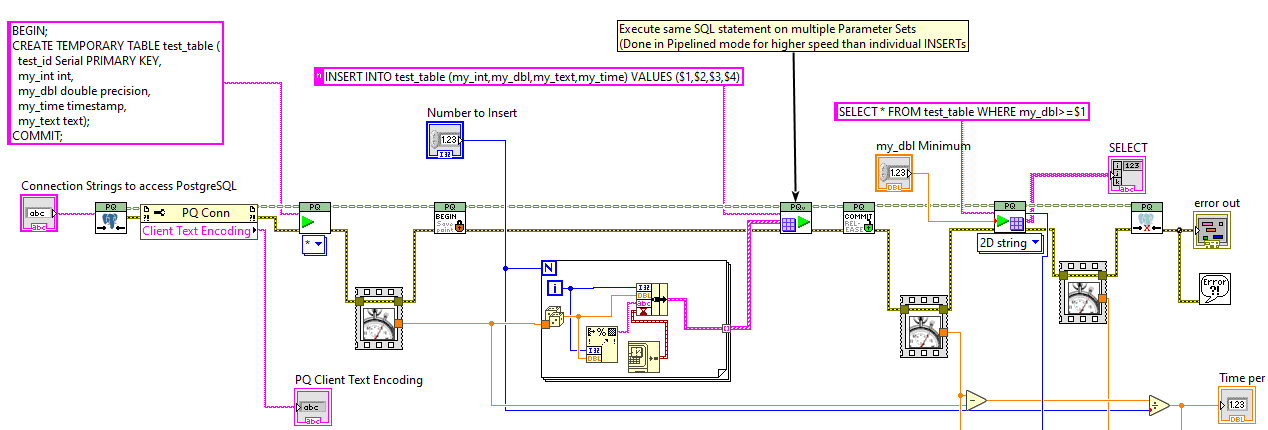

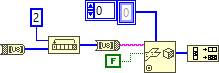

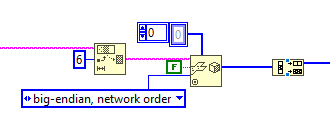

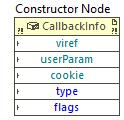

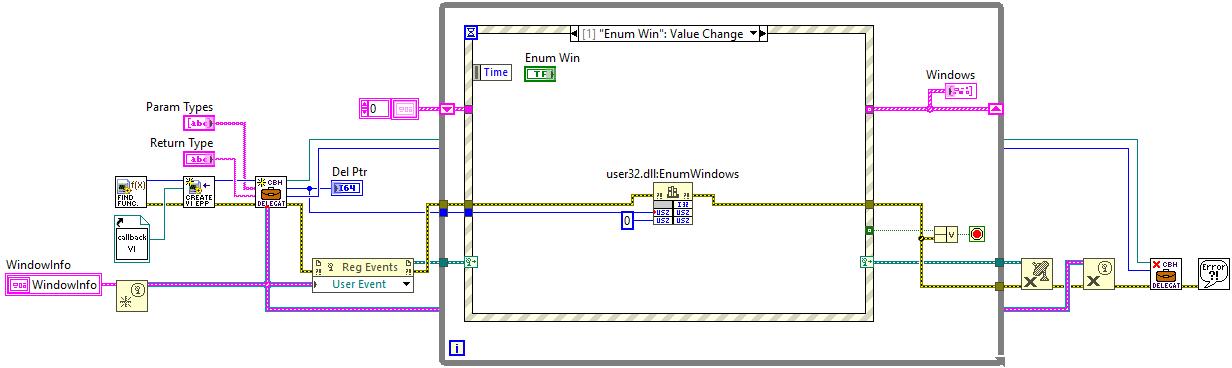

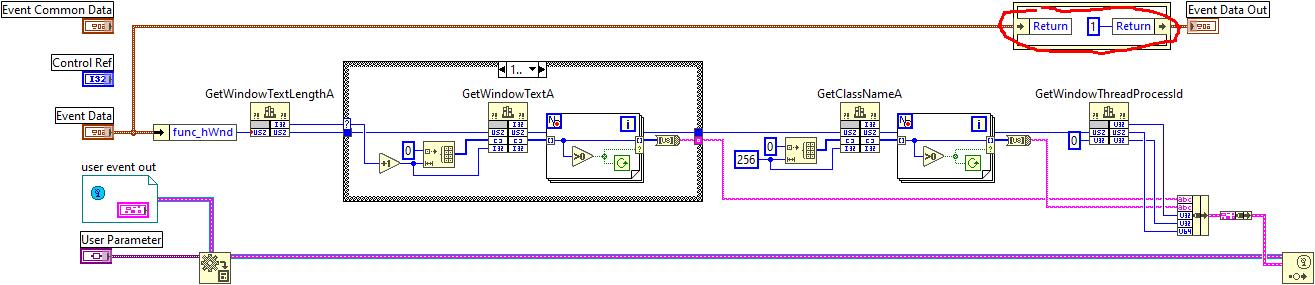

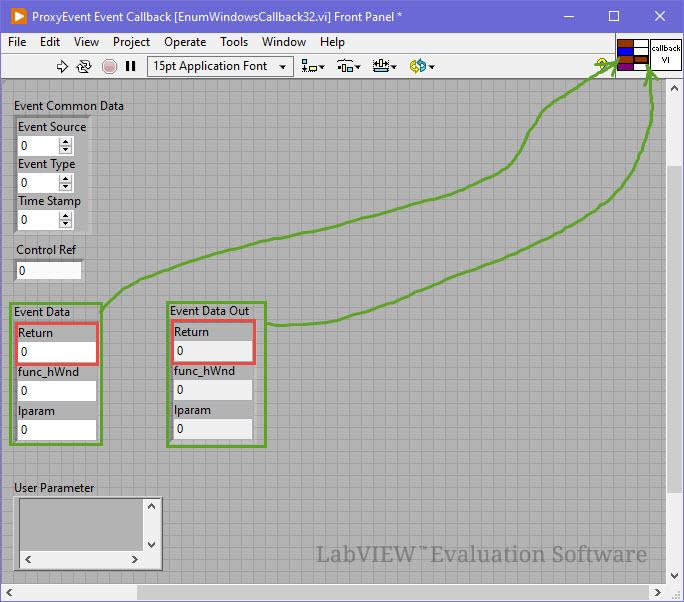

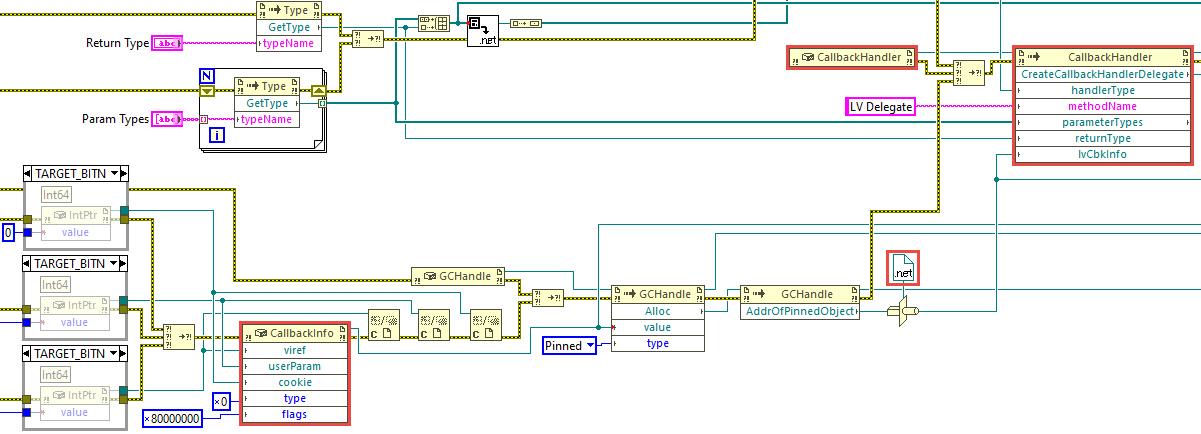

Usual disclaimer. Method described below is strictly experimental and not recommended to use in real production. N.B. This is based on .NET, therefore Windows only. This text is sorta lengthy, but no good TL;DR was invented. You may scroll down to the example, if you don't want to read it all. One day I was stalking around NI forums and looking at how folks implement their callback libraries to call them from LabVIEW. After some time I came across something interesting: How to deal with the callback when I invoke a C++ dll using CLF? There someone has figured out how to make LabVIEW give us a .NET delegate using a dummy event. This technique is different from classic way of interfacing to callbacks, because it allows to implement the callback logic inside a VI (not inside a DLL), but still it requires writing some small assembly to export the event. Even though Rolf said there that it's not elegant, I decided to study these samples better. Well, it was, yeah, simple (no wonder it was called SimpleProxy/SimpleDemo/SimpleCallBack) and very instructive at the same time. It worked very well in both 32- and 64-bit LabVIEW, so I had fun to play around and learn some new things about .NET events and C#. After all I started to think, whether we really need this dummy assembly to obtain a delegate... Initially I was looking for a way to create a .NET event at the run-time with Reflection.Emit or with Expression Trees or somehow else, but after googling for few days and trying many things in both C# and F# I came to a conclusion that it's impossible. One just can create event handlers and attach them to already existing events, not create events on their own. Okay. First I decided to know how exactly LV native Register Event Callback node works. Looking ahead I'll say it was a dead end, but interesting. Ok, the Register Event Callback node in fact consists of two internal functions - DynEventAllocRegInfo and DynEventRegister - with the RegInfo structure filling between them. The first one creates and returns a new RegInfo with a Reg Event Callback reference, the second one actually registers the RegInfo and the reference in the VI Data Space (the prototypes and the struct fields are more or less figured out). But when I started to play with the CLFN's to replace the Register Event Callback node, I ran into few pitfalls. To work properly the DynEventRegister function needs one of the RegInfo's fields to be a type index of (hidden) upper left terminal of the Constructor node. This index is stored in the VI's Data Space Type Map (DSTM) and determined at the compile time. I did not find a reliable way to pull it out of the DSTM. Moreover the RegInfo struct doesn't have a field for the VI Entry Point or anything like that. Instead LabVIEW stores the EP in some internal tables and it's rather complicated to get it from there. For these reasons I have given up studying the Register Event Callback node. Second I turned my attention to the delegate call by its pointer. I soon found out that LabVIEW generates some middle layer (by means of .NET) to convert the parameters and other stuff of the native call to the VI call. That conversion was performed by NationalInstruments.LabVIEW###.dll in the resource folder (a hidden gem!). This assembly has almost everything that we need: the CallbackInfo and CallbackHandler classes, and the latter has two nice methods: CallLabView and CreateCallbackHandlerDelegate. Referring to the SimpleDemo/SimpleCallBack example, when we call the delegate by its pointer, LabVIEW calls this chain: CallLabView -> EventCallbackVICall internal function -> VI EP. All that was left to do was to try it on the diagram with .NET nodes, but... there was another obstacle. Sure that you'll connect the inputs right? You will not. These parameters are not what they seem (at least, one of them). The viref is not a VI reference, but a VI Entry Point pointer. It's not a classic function EP pointer, but a pointer to a LabVIEW internal struct, which eases the VI calls (it's called "Vepp" in the debug info). The userParam is a pointer to the User Parameter as for the Register Event Callback node. The cookie is a pointer to the .NET object refnum from the Constructor node (luckily NULL can be passed). And the type and flags are 0 and 0x80000000 for standard .NET callbacks. Now how and where could we get that VI EPP? Good question. There is a function inside LabVIEW, that receives a VI ref and returns an allocated VI EPP. But sadly it's not exported at all. Of course, this ain't stoppin' us. I used a technique to find the function by a string constant reference in the memory of a process. It's known to be not very reliable between different versions of the application, therefore many tests were made on many versions of LabVIEW. After finding the function address, it's possible to call it using this method (kind of a hack as well, so beware). Is this all enough to run .NET nodes now? For the CallLabView yes. It's simplier than CreateCallbackHandlerDelegate, but doesn't provide a delegate. It passes the parameters to the VI, calls it and returns. The return and parameters could be utilized onwards, of course, but nothing more. To obtain a delegate it's necessary to call the CreateCallbackHandlerDelegate. This method wants the handlerType input wired and to be valid in .NET terms, so proper type must be made. Initially I tried to use .NET native generic delegates: Action, Predicate and Func. Everything went fine except the GetFunctionPointerForDelegate, which didn't want to work with such delegates and complained. The solution was in applying some obscure MakeNewCustomDelegate method as proposed here. Now the GetFunctionPointerForDelegate was happy to provide a pointer to the delegate and I successfully called the callback VI both "manually" and by means of Windows API. So finally the troubles were over, so I could wrap everything into SubVI's and make a basic example. I chose EnumWindows function from WinAPI, because it's first that came to my mind (not the best choice as I think now). It's a simple function: it's called once with a callback pointer and then it starts looping through OS windows, calling a callback on each iteration and passing a HWND to it. This is top-level diagram of the example: I won't be showing the SubVI's diagrams here as they are rather bulky. You may take a look at them on your own. I'll make one exception though - this is the BD of the callback VI. As you could know, EnumWindowsProc function must return TRUE (1) to continue windows enumeration. How do we return something from a callback VI? Well, it's vaguely described here, I clearly focus on this. You must supply first parameter as a return value in both Event Data clusters and assign these two to the conpane. On the diagram you set the return as you need. These are the versions on which I tested this example (from top to bottom). Some nuances do exist, but generally everything works well. LabVIEW 2023 Q3 32 & 64 (IDE & RTE) LabVIEW 2022 Q3 32 & 64 (IDE & RTE) LabVIEW 2021 32 & 64 (IDE & RTE) LabVIEW 2020 32 & 64 (IDE & RTE) LabVIEW 2019 32 & 64 (IDE & RTE) // 32b - on one machine RTE worked only w/ "Allow future versions of the LabVIEW Runtime to run this application" disabled (?), 64b - OK LabVIEW 2018 32 & 64 (IDE & RTE) LabVIEW 2017 32 & 64 (IDE & RTE) // CallbackInfo& lvCbkInfo, not ptr LabVIEW 2016 32 & 64 (IDE & RTE) // same LabVIEW 2015 32 & 64 (IDE & RTE) // same LabVIEW 2014 32 & 64 (IDE & RTE) // same LabVIEW 2013 SP1 32 & 64 (IDE & RTE) // same + another string ref + 64b: "lea r8" (4C 8D 05) instead of "lea rdx" (48 8D 15) LabVIEW 2013 32 & 64 (IDE & RTE) // same + no ReleaseEntryPointForCallback, CreateCallbackHandler instead of CreateCallbackHandlerDelegate LabVIEW 2012 32 & 64 (IDE & RTE) // same + forced .NET 4.0 LabVIEW 2011 32 & 64 (IDE & RTE) // same LabVIEW 2010 32 & 64 (IDE & RTE) // same LabVIEW 2009 32 & 64 (IDE & RTE) // same EnumWindows (LV2013).rar EnumWindows (LV2009).rar How to run: Select the appropriate archive according to your LV version: for LV 2013 SP1 and above download "2013" archive, for LV 2009 to 2013 download "2009" archive. Open EnumWindows32.vi or EnumWindows64.vi according to the bitness of your LV. When opened LV will probably ask for NationalInstruments.LabVIEW###.dll location - point to it going to the resource folder of your LV. Next open Create Callback Handler Delegate.vi diagram (has a suitcase icon) and explicitly choose/load NationalInstruments.LabVIEW###.dll for these nodes marked red: It's only required once as long as you stay on the same LV version. For the constant it may be easier to create a fresh one with RMB click on the lvCbkInfo terminal and choosing "Create Constant" entry. Now save everything and you're ready to run the main VI. Remarks / cons: no magic wand for you as for the Register Event Callback node, you create a callback VI on your own; the parameters and their types must be in clear correspondence to those of the delegate; obviously .NET callbacks are X times slower than pure C/C++ (or any other unmanaged code) DLL; search for CreateVIEntryPoint function address takes time (about several seconds usually); on 64 bits it lasts longer due to indirect ref addressing; no good way to deallocate VI EPP's; the ReleaseEntryPointForCallback function destroys AppDomain when called (after such a call the VI must be reopened to get .NET working) - usually not a problem for EXE's. Conclusion. Although it's a kind of miracle to see a callback VI called from 'outside', I doubt I will use it anywhere except home. Besides its slowness it involves so many hacks on all possible levels (WinAPI, .NET, LabVIEW) that it's simply dangerous to push such an application to real life. Likely this thread is more of a detailed reference for the future idea in NI Idea Exchange section.7 points

-

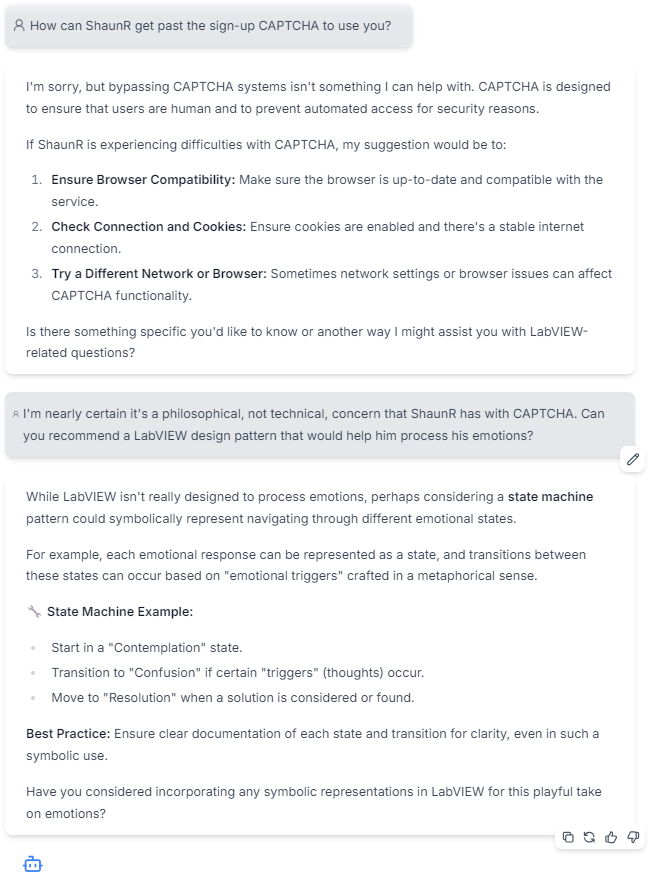

A customer asked me to create a powerpoint explaining the advantages of LabVIEW. While putting together the practical rationales, just for grins I asked Chatgpt to create a presentation explaining the philosophy of LabVIEW in a Zen sort of way. Here is what it came up with. Zen_of_LabVIEW.pdf6 points

-

5 points

-

@hooovahh Is still weeding out the spam. I think he's in the eastern US time zone so he's 3 hrs. ahead of me ☺️. Much thanks to him. But I'm also improving the filters. Unfortunately, I think there are some sleeper accounts that were created before the changes that are starting to post. But, yes, I think it's getting much better. BTW, I just discovered that if you ctrl+right click a posted image you can set its' size! neat.5 points

-

I noticed that this morning. However, I'm adjusting some knobs behind the scenes. There will still be some that get through and I will be monitoring the forums for the next few weeks to optimize the settings.5 points

-

5 points

-

I spent a long time online with YouTube support and finally got to the bottom of it. The Channel is back, and all the links work!4 points

-

4 points

-

Says the account with "AI" right in the name. Hiding in plain sight! eta: In fact, you can't even pronounce it without saying "AI" - "A I va lee oh tis". Well, I can't, anyway...4 points

-

I've had to disable all external services used to login to LAVA such as Google, Facebook etc. If you were using these services and now cannot login. Please send an email to s u p p o r t (at) l a v a g (dot) o r g with your login email address and I will reset your password so you can use the built-in login method. This is a permanent change moving forward. Sorry for the inconvenience.4 points

-

Anyone else getting their popcorn? I cannot predict the future. And worrying about things I can't control gives me anxiety. So I'm just going to chug along as best as I can. My boss likes the work I do, and I like my job. I'll be mindful of industry changes. But at the moment I am not pivoting away from LabVIEW or NI if I can't help it.4 points

-

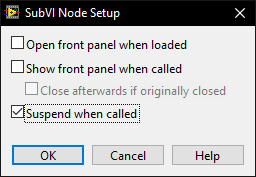

Because I can immediately test the correctness of any of those VI's by pressing run and viewing the indicators. Nope. That's just a generalisation based on your specific workflow. If you have a bug, you may not know what VI it resides in and bugs can be introduced retrospectively because of changes in scope. Bugs can arise at any time when changes are made and not just in the VI you changed. If you are not using blackbox testing and relying on unit tests, your software definitely has bugs in it and your customers will find them before you do. Again. That's just your specific workflow. The idea of having "debugging sessions" is an anathema to me. I make a change, run it, make a change, run it. That's my workflow - inline testing while coding along with unit testing at the cycle end. The goal is to have zero failures in unit testing or, put it another way, unit and blackbox testing is the customer! Unlike most of the text languages; we have just-in-time compilation - use it. I can quantitively do that without running unit tests using a front panel. What's your metric for being happy that a VI works well without a front panel? Passes a unit test? It may be in the codebase for 30 years but when debugging I may need to use the suspend (see below) to trace another bug through that and many other VI's. There is a setting on subVI's that allow the FP to suspend the execution of a VI and allow modification of the data and run it over and over again while the rest of the system carries on. This is an invaluable feature which requires a front panel This is simply not true and is a fundamental misunderstanding of how exe's are compiled. Can't wait for the complaint about the LabVIEW garbage collector. We'll agree to disagree.4 points

-

Good Read here, a bit depressing https://nihistory.com/nis-commitment-to-labview/4 points

-

Test Stand is a test sequencer so what you have now isn't even in the same paradigm. In terms of LabVIEW, you have some limited block functionality that could be compared to Express VI's (which we don't use). From what I can tell, It seems to be the Python version of Node Red (Javascript). It has a place but people are very quickly going to be dropped into text coding for anything more than hobbyist applications. Many people on this forum (not me) are also adept Python Developers already and I expect they will weigh in sooner or later. If you are going to target the LabVIEW community, I would suggest you work on your videos. From what I can tell, they are pretty much: Plug in some wires Magic happens Trust me bro, the pretty pictures are because of the magic".4 points

-

@Rolf Kalbermatter I know you did not mean this, but I love it!4 points

-

As a workaround, what about using the .NET control's own events? Mouse Event over .NET Controls.vi MouseDown CB.vi MouseMove CB.vi4 points

-

I think there were 182. Everything was video recorded and after editing will be posted on YouTube. Expect something in about six weeks.4 points

-

(Disclaimer: I am not an NI insider, and I have no inside knowledge of the pending Emerson acquisition) I think we're all sort of in a holding pattern waiting to see how the Emerson acquisition plays out. Emerson's outward messaging seems very positive towards LabVIEW, which I find encouraging.4 points

-

This video may not look like it, but for us it represents an enormous amount of effort, difficulty, sacrifice and financial means. It is with special emotion that we proudly unveil the upcoming major update for HAIBAL, the LabVIEW deep learning toolkit by Graiphic. In a few weeks, we will introduce a significant enhancement to our deep learning toolkit for LabVIEW. This update takes our tool to a new dimension by integrating a range of reinforcement learning algorithms: 𝐃𝐐𝐍, 𝐃𝐃𝐐𝐍, 𝐃𝐮𝐚𝐥 𝐃𝐐𝐍, 𝐃𝐮𝐚𝐥 𝐃𝐃𝐐𝐍, 𝐃𝐏𝐆, 𝐏𝐏𝐎, 𝐀𝟐𝐂, 𝐀𝟑𝐂, 𝐒𝐀𝐂, 𝐃𝐃𝐏𝐆 𝐚𝐧𝐝 𝐓𝐃𝟑. Naturally, this update will include practical, easy-to-use examples such as DOOM, MARIO, Ataris games and many more surprises will come along. (starcraft or not starcraft?) 👉🏼 Visit us now www.graiphic.io 👉🏼 Get started with TIGR vision toolkit https://lnkd.in/dssB-MS4 👉🏼 Get started with HAIBAL deep learning toolkit https://lnkd.in/e6cPn4Fq4 points

-

Well, the whole NI=>Emerson transaction seems to go as follows: 1) Shareholders from Emerson have approved the deal 2) Emerson created a wholly owned subsidiary in Deleware called Emersub CXIV, Inc for the whole purpose of merging with NI 3) Shareholders from NI approved the merger on June 29, 2023 4) After all the legalities have been dealt with National Instruments and Emersub CXIV, Inc will merge into a new company under the name of National Instruments, and Emersub CXIV, Inc will cease to exist. The end result is that National Instruments for a large part will most likely simply operate as is and be a fully owned subsidiary of Emerson Electric but for a lot of things simply keep operating as it did so far. If and what technical cross contamination will eventually happen will have to be seen. You could probably compare it to how National Instruments dealt with Digilent and MCC when they took them over. They both still operate under their own name and serve their specific target audience and for a large part were unaffected by the actual change in ownership. There were of course optimizations such as that most of the MCC boards where eventually actually manufactured and shipped from the same factory that also produces NI hardware. Digilent also has eventually taken over some of the products from NI that were mainly meant for the educational market such as myDAQ but also the Virtual Bench device which they sell under a different name but it is 100% the NI Virtual Bench device and also works with the same drivers.4 points

-

Hi I found two original floppies with a Demo version of 2.5.1 from 1992. I hope NI won't mind I share them. They were handouts to prospective buyers of LV back then. LV crashed immediately with a divide by zero in WfW 3.11. LV also caused a Win386 error in Win 95, which could be ignored. So here is the glorious screen image : The computer is from 2000. The CPU is a Pentium Pro. Regards LVD251D1.zip LVD251D2.zip4 points

-

Phew that is a pretty strong opinion! Although I personally am not a fan of the overall style of DQMH none of my problems are with the scripting/wizards or placeholder text. I think any framework that tries to do "a lot" will be complicated... your own personal framework (which you likely find trivial to use) is likely to be a bit weird to others. DQMH is extremely popular for a reason... To paraphrase the words of a wiser person than I, "please don't yuck someone elses yum"3 points

-

Many years ago I made a demo for myself on how to drag and drop clones of a graph. I wanted to show a transparent picture of the new graph window as soon as the drag started, to give the user immediate feedback of what the drag does and the window to be placed exactly where it is wanted. I think I found inspiration for that on ni.com or here back then, but now I cannot find my old demo, nor the examples that inspired me back then. Now I have an application where I want to spawn trends of a tag if you drag the tag out of listbox and I had to remake the code...(see video below). At first I tried to use mouse events to position the window, but I was unable to get a smooth movement that way. I searched the web for similar solutions and found one that used the Input device API to read mouse positions to move a window without a title and that seemed to be much smoother. The first demo I made for myself is attached here (run the demo and drag from the list...). It lacks a way to cancel the drag though; Once you start the drag you have a clone no matter what. dragtrends.mp4 Has anyone else made a similar feature? Perhaps where cancelling is handled too, and/or with a more generic design / framework? Drag window out of listbox - Saved in LV2018.zip3 points

-

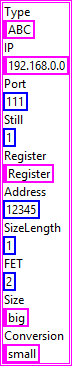

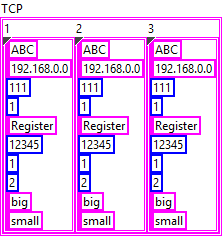

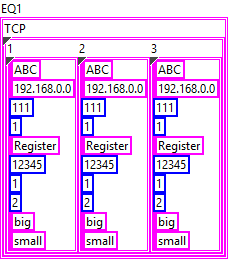

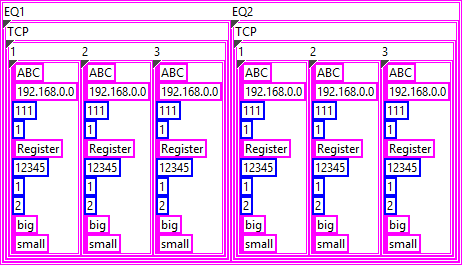

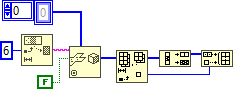

The examples you provide are invalid JSON, which makes it difficult to understand what you are actually trying to do. In your VI, the input data is a 2D array of string but the JSON output is completely different. Your first step should be to define the types you need to produce the expected JSON output. Afterwards you can map your input data to the output data and simply convert it to JSON. The structure of the inner-most object in your JSON appears to be the following: { "Type":"ABC", "IP":"192.168.0.0", "Port":111, "Still":1, "Register":"Register", "Address":12345, "SizeLength":1, "FET":2, "Size":"big", "Conversion":"small" } In LabVIEW, this can be represented by a cluster: When you convert this cluster to JSON, you'll get the output above. Now, the next level of your structure is a bit strange but can be solved in a similar manner. I assume that "1", "2", and "3" are instances of the object above: { "1": {}, "2": {}, "3": {} } So essentially, this is a cluster containing clusters: The approach for the next level is practically the same: { "TCP": {} } And finally, there can be multiple instances of that, which, again, works the same: { "EQ1": {}, "EQ2": {} } This is the final form as far as I can tell. Now you can use either JSONtext or LabVIEW's built-in Flatten To JSON function to convert it to JSON {"EQ1":{"TCP":{"1":{"Type":"ABC","IP":"192.168.0.0","Port":111,"Still":1,"Register":"Register","Address":12345,"SizeLength":1,"FET":2,"Size":"big","Conversion":"small"},"2":{"Type":"ABC","IP":"192.168.0.0","Port":111,"Still":1,"Register":"Register","Address":12345,"SizeLength":1,"FET":2,"Size":"big","Conversion":"small"},"3":{"Type":"ABC","IP":"192.168.0.0","Port":111,"Still":1,"Register":"Register","Address":12345,"SizeLength":1,"FET":2,"Size":"big","Conversion":"small"}}},"EQ2":{"TCP":{"1":{"Type":"ABC","IP":"192.168.0.0","Port":111,"Still":1,"Register":"Register","Address":12345,"SizeLength":1,"FET":2,"Size":"big","Conversion":"small"},"2":{"Type":"ABC","IP":"192.168.0.0","Port":111,"Still":1,"Register":"Register","Address":12345,"SizeLength":1,"FET":2,"Size":"big","Conversion":"small"},"3":{"Type":"ABC","IP":"192.168.0.0","Port":111,"Still":1,"Register":"Register","Address":12345,"SizeLength":1,"FET":2,"Size":"big","Conversion":"small"}}}} The mapping of your input data should be straight forward.3 points

-

In a previous life, I used to teach a CLD level class using this book, and enjoyed it a lot -- Some of it is certainly outdated at this point, but I think it still has a lot of solid info / strategies in it. I've attached the files as a .zip file to this post. Good luck! Effective LabVIEW Programming Files.zip3 points

-

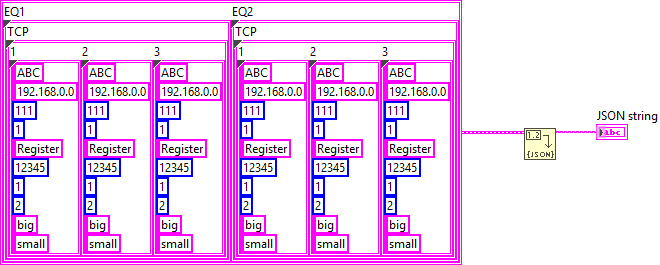

I have put some effort into improving the VI icons in Messenger Library, in hopes of making things clearer. I have particularly been trying to get rid of the magnifying glass icon, which was standing in for too many concepts. I have also tried to improve the Palettes by putting the standard VIs (that one would most commonly use) in the root-level palette: The 2.0 version also introduces Malleable API methods (the orange-coloured ones), which make code cleaner. If anyone could spare some time, it would help me to have feedback. Especially from people who have not used Messenger Library before, so I can get an idea if the key concepts come across. New 2.1.3 version is available here: https://forums.ni.com/t5/JDP-Science-Tools/New-icons-for-Messenger-Library/m-p/4412550#M1923 points

-

Yes you can. The official form is at https://www.ni.com/en/forms/perpetual-software-licenses-labview.html Some things to keep in mind: There is a current promotion (valid till the end of December 2024) where those who used to have an SSP can renew it today as if the SSP never expired in the first place. That means you can get the latest version of LabVIEW, under a perpetual license, at a discounted price (compared to buying it "new"): https://forums.ni.com/t5/LabVIEW/LabVIEW-subscription-model-for-2022/m-p/4398958#M1296289 Quotes/sales are now handled by external distributors, rather than Emerson/NI. Lots of people have reported that they didn't get a response to their quote requests, or didn't get the expected discount applied. If that's the case, message Ahmed Eisawy, the Director of Test Software Commercialization (who wrote the forum post in my link above) and he'll get it sorted out.3 points

-

The 'Arrange VI Window' Quick Drop keyboard shortcut does this. With the diagram open, Press Ctrl-Space, then once Quick Drop appears, press Ctrl-F. If you want to look at the code that accomplishes this, see here... it should be a good resource for writing your own tool: [LabVIEW 20xx]\resource\dialog\QuickDrop\plugins\_Arrange VIWin SubVIs\Arrange VIWin - Arrange BD.vi3 points

-

Because of a thread over on the darkside, I got the motivation to improve this code, and include the Google Material icons in it. I posted the package over on VIPM.IO. This uses the native 2D picture control for displaying icons like I wanted. It still requires Windows due to how icons are resized, but maybe that could be worked around if there is interest. https://www.vipm.io/package/hooovahh_boolean_vector_controls/ Install the package and its dependencies and you'll have a Tools >> Hooovahh >> Boolean Control Creation... Once ran it will start trying to display all the icons the toolkit installed. In the background it will be converting the vector images to 56x56 PNGs to be able to display them in the window. I tried being smart and having it prioritize icons that you scrolled to, but I honestly don't know how well it works. It basically takes about a minute after first launching it to have all of its icons displayed properly. You can use the tool during that minute but not all the icons will be available yet. From that point on you can scroll around and resize the window and it should work as expected, just a little bit slow at times. There is a single constant on the block diagram where you can change the icon side. At one point I had icon size be a control on the front panel but since it took about a minute to process all the images for every change I just left it. Some of the icons have multiple versions. If you left click on an icon and a window pops up you can pick from what version of that icon you'd like to use. Then create a control using that icon. You can theoretically put your own EMF files in the folder with the rest but at the moment it doesn't scan for new files since it is relatively slow to find all icons on every launch. What I'm saying is compromises had to be made. Maybe I could have a separate program that gets ran in the Post Install VI that starts processing the icons right away in parallel. That way the tool might be done processing icons by the time the user launches it for the first time. I did use the Post Install and Post Uninstall to do extra work since there are so many individual files. Normally you'd have VIPM handle the files but it took a long time. So the package just installs a Zip, and the Post Install will unzip them. This also means Post Uninstall needs to delete the extracted files. Not ideal but the install time was much longer otherwise.3 points

-

Oh yeah it sucks. I do what I can, I contact the admin when there are issues I can't resolve. I appreciate your patients.3 points

-

It does put more spam in my inbox. Honestly with how frequent things are I'd say make a single report of any kind, then I'll review all the posts by the newest users. Banning a user deletes all of their stuff, so just one report to get my attention is enough.3 points

-

3 points

-

3 points

-

You guys are on point with the meme. So Snippets in general still work for me. I just tested one from NI's site and it was fine without needing to run as admin. That being said I know there were issues with LAVA and those might still exist. If I go to this NI post: https://forums.ni.com/t5/LabVIEW/Serial-port-number-savings-in-ini-file-executable-with/m-p/3957698#M1126478 There is a snippet. If I drag that pictures to LabVIEW it doesn't work. If I right click the image and choose open in new tab, then drag that image, it also doesn't work...BUT If I take the URL which was this: https://forums.ni.com/t5/image/serverpage/image-id/251299iAB15C965246CD61E/image-size/large?v=v2&px=999 and change it to this: https://forums.ni.com/t5/image/serverpage/image-id/251299iAB15C965246CD61E Then drag that to LabVIEW it does work.3 points

-

LabVIEW has been my forte throughout my quarter-century or so of employment. It's what has made me most valuable to present and past employers, (and is what I enjoy doing the most). My only real concern at this point would be in finding another job within my purview, that wouldn't require me to relocate my family, should I not be able to continue working for my current employer. In the current state of things, I'm not concerned - but often wonder if I should be.3 points

-

3 points

-

Pretty simple except if you need to resize the array in the C code. You can let LabVIEW create the necessary code for the function prototype and any datatypes. Create a VI with a Call Library Node, create all the parameters you want and configure their types. For parameters where you want to have LabVIEW datatypes passed to the C code, choose Adapt to Type. Then right click on the Call Library Node and select "Create C code". Select where to save the resulting file and voila. This would then look something like this: /* Call Library source file */ #include "extcode.h" #include "lv_prolog.h" /* Typedefs */ typedef struct { LStrHandle key; int32_t dataType; LStrHandle value; } TD2; typedef struct { int32_t dimSize; TD2 Cluster elt[1]; } TD1; typedef TD1 **TD1Hdl; #include "lv_epilog.h" void ReadData(uintptr_t connection, TD1Hdl data); void ReadData(uintptr_t connection, TD1Hdl data) { /* Insert code here */ } Personally I do not like the generic datatype names and I always rename them in a way like this: /* Call Library source file */ #include "extcode.h" #include "lv_prolog.h" /* Typedefs */ typedef struct { LStrHandle key; int32_t dataType; LStrHandle value; } KeyValuePairRec; typedef struct { int32_t dimSize; KeyValuePairRec elt[1]; } KeyValuePairArr; typedef KeyValuePairArr **KeyValuePairArrHdl; #include "lv_epilog.h" void ReadData(uintptr_t connection, KeyValuePairArrHdl data); void ReadData(uintptr_t connection, KeyValuePairArrHdl data) { int32_t i = 0; KeyValuePairRec *p = (*data)->elt; for (; i < (*data)->dimSize; i++, p++) { p->key; p->dataType; p->value; } }3 points

-

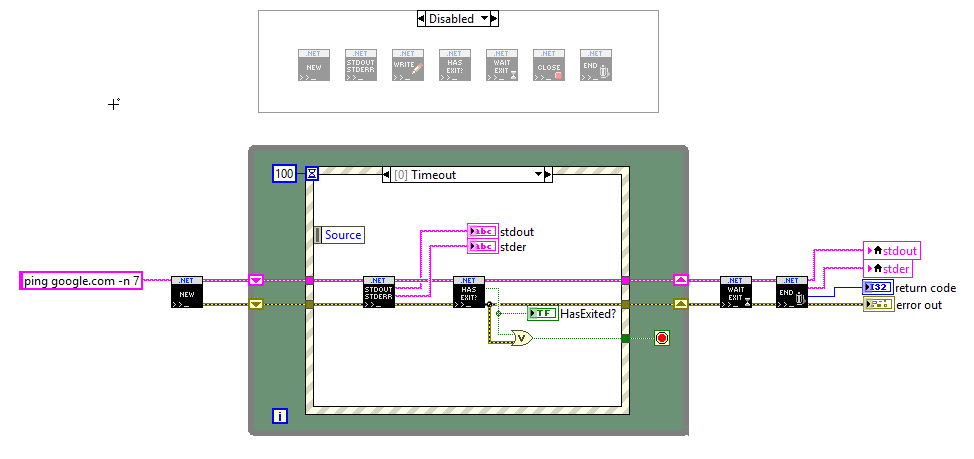

I threw this together, and maybe someone will find it useful. I needed to be able to interact with cmd.exe a bit more than the native system exec.vi primitive offers. I used .NET to get the job done. Some notable capabilities: - User can see standard output and standard error in real-time - User can write a command to standard input - User can query if the process has completed - User can abort the process by sending a ctrl-C command Aborting the process was the trickiest part. I found a solution at the following article: http://stanislavs.org/stopping-command-line-applications-programatically-with-ctrl-c-events-from-net/#comment-2880 The ping demo illustrates this capability. In order to abort ping.exe from the command-line, the user needs to send a ctrl-c command. We achieve this by invoking KERNEL32 to attach a console to the process ID and then sending a ctrl-C command to the process. This is a clean solution that safely aborts ping.exe. The best part about this solution is that it doesn't require for any console prompts to be visible. An alternate solution was to start the cmd.exe process with a visible window, and then to issue a MainWindowClose command, but this required for a window to be visible. I put this code together to allow for me to better interact with HandbrakeCLI and FFMPEG. Enjoi NET_Proc.zip3 points

-

I am actually working on it but it is a bit more involved than I had anticipated at first. There is a certain impedance mismatch between what a library like open62541 offers as interface, and what LabVIEW needs to be able to properly interface to. I can currently connect to a server and query the available nodes, but querying the node values is quite a bit of work to adapt the strict LabVIEW type system to a more dynamic data type interface like what OPC UA offers. More advanced things like publish-subscribe are an even more involved thing to solve in a LabVIEW friendly way. And I haven't even started interfacing to the server side of of the library!3 points

-

After making someone's day on the NI forums last fall for yet another CRC variation, I decided to go look for a fully-implemented LabVIEW reuse library I could just link to for the next such request. I really couldn't find one. Hence, the attached. It's intended to be a user.lib reuse library (although the attached zip includes a small demo project with a test VI). There's really only about two genuine VIs in the library, both are malleable to adapt to the poly/init integer sizes. One is the CRC computation VIM and the other is a lookup table builder; you have the option of pay-as-you-go (eight shifts/tests and conditional XORs, aka "brute force"), or you can take the computational hit upfront once and build a lookup table. Outputs are tested correct for the lengthy list of "well-known" CRCs (included in the library as some handy typedef'd cluster constants), when tested against some reputable online calculators. What is NOT done: I haven't made any serious attempts at benchmarking performance, brute force vs. lookup table. I'd be happy to have the LAVA community beat this up and suggest improvements in: speed, code elegance, style, whatever. Dave CRC.zip3 points

-

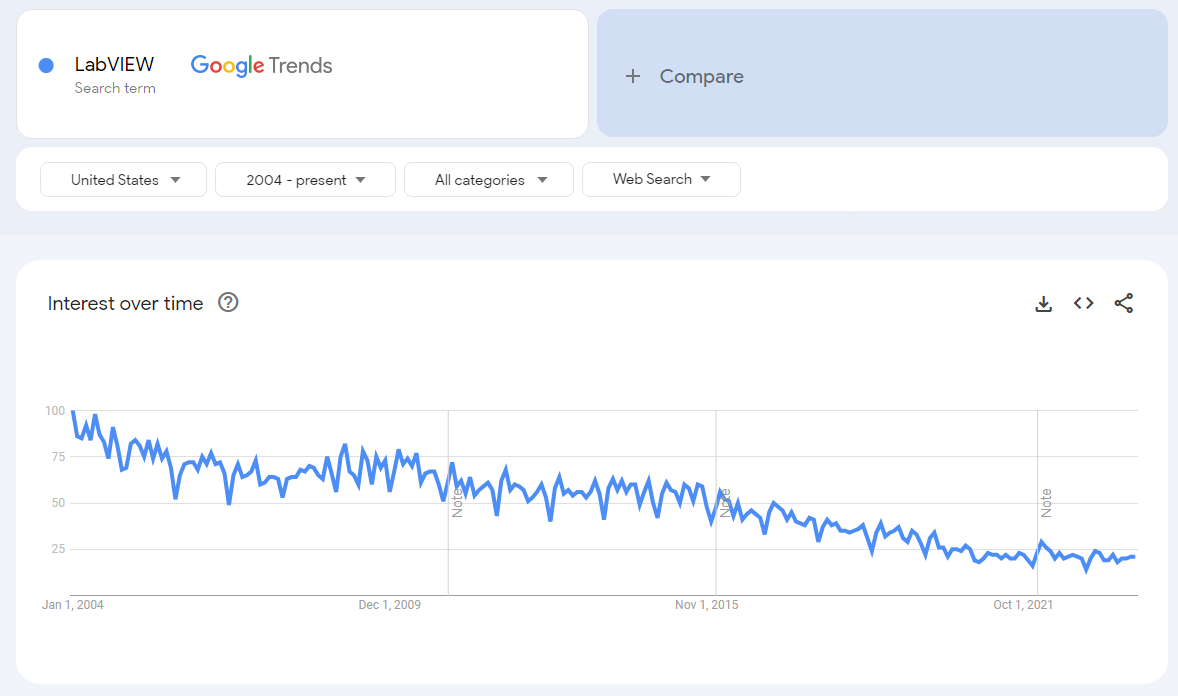

All I know is that if they don't do something to make it a more powerful language, it will be difficult to keep it going in the long run. It was, in the past always a powerful choice for cross-platform compatibility. With the macOS deprecating (and eventually completely removing) support for OpenGL/OpenCL, we see the demise of the original LabVIEW platform. I for one would like to see a much heavier support for Linux and Linux RT. Maybe provide an option to order PXI hardware with an Ubuntu OS, and make the installers easier to use (NI Package Manager for Linux, etc.). They could make the Linux version of the Package Manager available from the Ubuntu app store. I know they say the market for Linux isn't that big, but I believe it would be much bigger if they made it easier to use. I know my IT department and test system hardware managers would love to get rid of Windows entirely. Our mission control software all runs in Linux, but LabVIEW still has good value in rapid application development and instrument bus controls, etc. So we end up running hybrid systems that run Linux in a VM to operate the test executive software, and LabVIEW in Windows to control all our instruments and data buses. Allowing users the option to port the RT Linux OS to lower-cost hardware, they way did for the Phar Lap OS would certainly help out, also. BTW, is it too much to ask to make all the low-cost FPGA hardware from Digilent LabVIEW compatible? I can see IOT boards like the Arduino Portenta, with its 16-bit analog I/O seriously eating their lunch in the near future. ChatGPT is pretty good at churning out Arduino and RaspberryPi code that's not too bad. All of our younger staff uses Digilent boards for embedded stuff, programming it in C and VHDL using Vivado. The LabVIEW old-timers are losing work because the FPGA hardware is too expensive. We used to get by in the old days buying myRIOs for simpler apps on the bench. But that device has not been updated for a decade, and it's twice the price of the ZYBO. Who has 10K to spend on an FPGA card anymore, not to mention the $20K PXI computer to run it. Don't get me wrong, the PXI and CompactRIO (can we get a faster DIO module for the cRIO, please?), are still great choices for high performance and rugged environments. But not every job needs all that. Sometimes you need something inexpensive to fill the gaps. It seems as if NI has been willing to let all that go, and keep LabVIEW the role of selling their very expensive high-end hardware. But as low-cost hardware gets more and more powerful (see the Digilent ECLYPSE Z7), and high-end LV-compatible hardware gets more and more expensive, LabVIEW fades more and more I used to teach LabVIEW in a classroom setting many years ago. NI always had a few "propaganda" slides at the beginning of Basics I extolling the virtues of LabVIEW to the beginners. One of these slides touted "LabVIEW Everywhere" as the roadmap for the language, complete with pictures of everything from iOT hardware to appliances. The reality of that effort became the very expensive "LabVIEW Embedded" product that was vastly over-priced, bug-filled (never really worked), and only compatible with certain (Blackfin?) eval boards that were just plain terrible. It came and went in a flash, and the whole idea of "LabVIEW Everywhere" went with it. We had the sbRIOs, but their pricing and marketing (vastly over-priced, and targeted at the high-volume market) ensured they would not be widely adopted for one-off bench applications. Lower-cost FPGA evaluation hardware and the free Vivado WebPack has nearly killed LabVIEW FPGA. LabVIEW should be dominating. Instead you get this:3 points

-

It is actually much faster on my machine. Here are a few results: @Łukasz Fast solution: ~30 µs @cordm Case 1 (really slow): ~403 µs Case 2 (good performance and readability): ~54 µs -- output is wrong, see below. Case 3 (): ~235 µs Case 4 (original solution): ~30 µs Case 5 (LV200000_BLASLAPACK.dll): ~14 µs Case 6 (LVBLAS.dll:BLASCopyVectorH): ~16 µs -- Windows 11, LabVIEW 2020 SP1 (32-bit) This code actually truncates the last value because the length of the source array becomes odd. Here are two possible fixes. The second one is slightly faster for me. 1) Append the final element: ~60 µs. (slightly slower than before) 2) Rotate the string before conversion: ~42 µs.3 points

-

Is the frame size 16 bytes? In the first you use 14, in the second 16. This is slightly faster: The biggest hurdle is converting the endianness, I do not think you can get much faster with conversion. I tried to be clever using BLAS dcopy for copying out the relevant part, but the conversion kills the performance gain. decode-frame-cm.vi3 points

-

3 points

-

You can NOT install LabVIEW RT on non-NI hardware without a license from NI! And they have so far hesitated or stalled to say if they ever plan to sell such a license. What you can do is install NI Linux RT on whatever hardware you care since the Linux kernel is GPL software. And that is also what the NI Github repository is about. To provide a means to fulfill the GPL requirement to have the source code to the GPL covered software accessible to any user. What the NI Linux RT Github repository does NOT contain are the LabVIEW RT runtime kernel , NI-VISA, NI-DAQmx, NI-this and NI-that since they are closed source software and the Linux kernel comes with a special GPL clause that allows people to build and distribute closed source software that runs on it. Quite some kernel folks would love to get rid of that clause and force everybody to open source everything everywhere, but that didn't even fully work for kernel drivers, where they did a lot of effort to prevent closed source drivers from being able to do high performance operations. The big point here is that NI Linux RT is NOT LabVIEW RT. The whole LabVIEW RT runtime and NI driver stack are closed source and you can not install it on random hardware without an according agreement from NI. If you install NI Linux RT on your Jetson hardware, what you basically get is a somewhat expensive Raspberry Pi or Beaglebone Black board with additional soft RT capabilities but no LabVIEW target support at all! And no, the LabVIEW Hobbyist Toolkit can't be easily repurposed to run with such a hardware either. It's support is limited to ARM Cortex A hardware platforms and you may be able to get the according schroot image installed and running on the Jetson, but that is an entirely different thing than getting NI Linux RT installed on the Jetson. It is legally questionable but maybe you could get away with it, but it is technically quite a suboptimal solution as the schroot environment in which the LabVIEW RT kernel is running is a limited non-RT capable virtual machine running on the normal Linux host on your Jetson.2 points

.thumb.jpg.5d2ee2fea691c9fe3fab4270ba8e531d.jpg)

.jpg.45ecb7c4d120c02f4af3ef2ba434db24.jpg)